As hyperscale data centers multiply to support AI workloads, communities are pushing back — raising concerns about rising electricity costs, water use and who ultimately bears the burden of digital infrastructure growth. That tension is now forcing major cloud providers to rethink how and where they build.

Microsoft recently unveiled what it calls a “community first” approach to data center development, pledging to cover its full power costs, avoid local property tax incentives, replenish more water than it consumes and invest in workforce training and AI education programs. The shift follows growing local opposition to large-scale data center projects, including the company’s decision to cancel a planned facility in Caledonia, Wisconsin, after residents raised concerns about energy demand and water resources.

Microsoft says it will instead concentrate expansion at its existing data center campus in nearby Mount Pleasant, while emphasizing greater transparency and community engagement as AI infrastructure continues to scale.

Beyond improving community outreach, data center developers are exploring alternatives, including orbital data centers and facilities built underground or underwater.

Table of Contents

- Data Centers Go Underground

- Data Centers Dive Into the Ocean

- Can Data Centers Really Work in Orbit?

- The Limits of Urban Data Center Growth

Data Centers Go Underground

In Stockholm, Bahnhof operates its Pionen data center inside a former nuclear bunker carved into granite beneath the city, using the natural rock mass for physical security, thermal stability and resilience against extreme weather or external disruption.

In the US, Iron Mountain runs large-scale underground data centers in repurposed limestone mines, including facilities outside Kansas City, where hundreds of feet of rock provide passive cooling benefits, protection from storms and a controlled environment for mission-critical IT workloads.

In both cases, going underground reduces cooling demand, improves security and highlights how data center design is adapting as power, land use and resilience become harder constraints.

Data Centers Dive Into the Ocean

Underwater data centers are considered a practical answer to many traditional data center difficulties because natural ocean cooling can cut cooling costs and reduce energy usage. And with more than half of the world's population living within 120 miles of the coast, placing data centers underwater near coastal cities would shorten the distance data has to travel, leading to faster and smoother web surfing, streaming and more.

Microsoft's Project Natick is one such initiative. In Phase 1 of the project, in 2015, the tech giant tested a prototype vessel off the Pacific coast of the United States. In June 2018, as part of Phase 2, it deployed a full-scale, shipping container-sized data center module to the floor of the North Sea, 117 feet deep.

According to Microsoft, which says it's still reviewing data from the project, the servers in Natick Northern Isles showed a failure rate one-eighth that of a land-based control group.

In Hainan, China, the Beijing Highlander Digital Technology Company has developed an advanced underwater data center. The facility sits 35 meters underwater and is designed to contain 198 server racks capable of holding 396 to 792 AI-capable servers.

The Hainan facility reportedly uses 30% less electricity than on-land data centers, thanks to natural cooling via seawater. In addition, a nearby offshore wind farm is set to supply 97% of the data center's energy.

Now, another, more advanced underwater data center is underway off the coast of Shanghai.

The Tradeoffs: Efficiency Gains vs. Operational Risk

Underground facilities almost entirely eliminate the visual footprint and provide natural cooling, said Emma Reese, research analyst at DC Byte, but staff safety and ventilation are prominent concerns, as is the architectural expertise needed to design the facility around the support structures.

“Undersea data centers require fewer operating costs due to their increased physical resilience and stability but are vulnerable to attack by playing a single note at a high enough frequency from 200 feet away,” she explained.

Mixed-use integration, she added, is a slightly more feasible option, but the vertical scale currently required for a hyperscale-level deployment may be more than some cities are prepared for.

“However, both of those solve some major issues in the space: transforming the community image of a data center and promoting energy efficiency according to spatial and environmental advantages.”

Can Data Centers Really Work in Orbit?

Orbital data centers are designed to be resilient in both structure and data delivery, and they have direct access to solar power. But any operating savings can be eaten up by the need for an entire space mission to make more serious repairs.

While no commercial-scale facilities exist today, small “server room-class” payloads hosted on satellites are already being planned, with initial tests expected to begin as early as 2027, said Autumn Stanish, director analyst with Gartner.

The idea is not to replace terrestrial data centers anytime soon, but to explore whether space-based compute could one day support specialized use cases, such as low-latency satellite processing, space research or resilient backup infrastructure.

“We’re really more talking about a server room on a satellite,” Stanish said, emphasizing that current efforts are limited in scope and largely experimental.

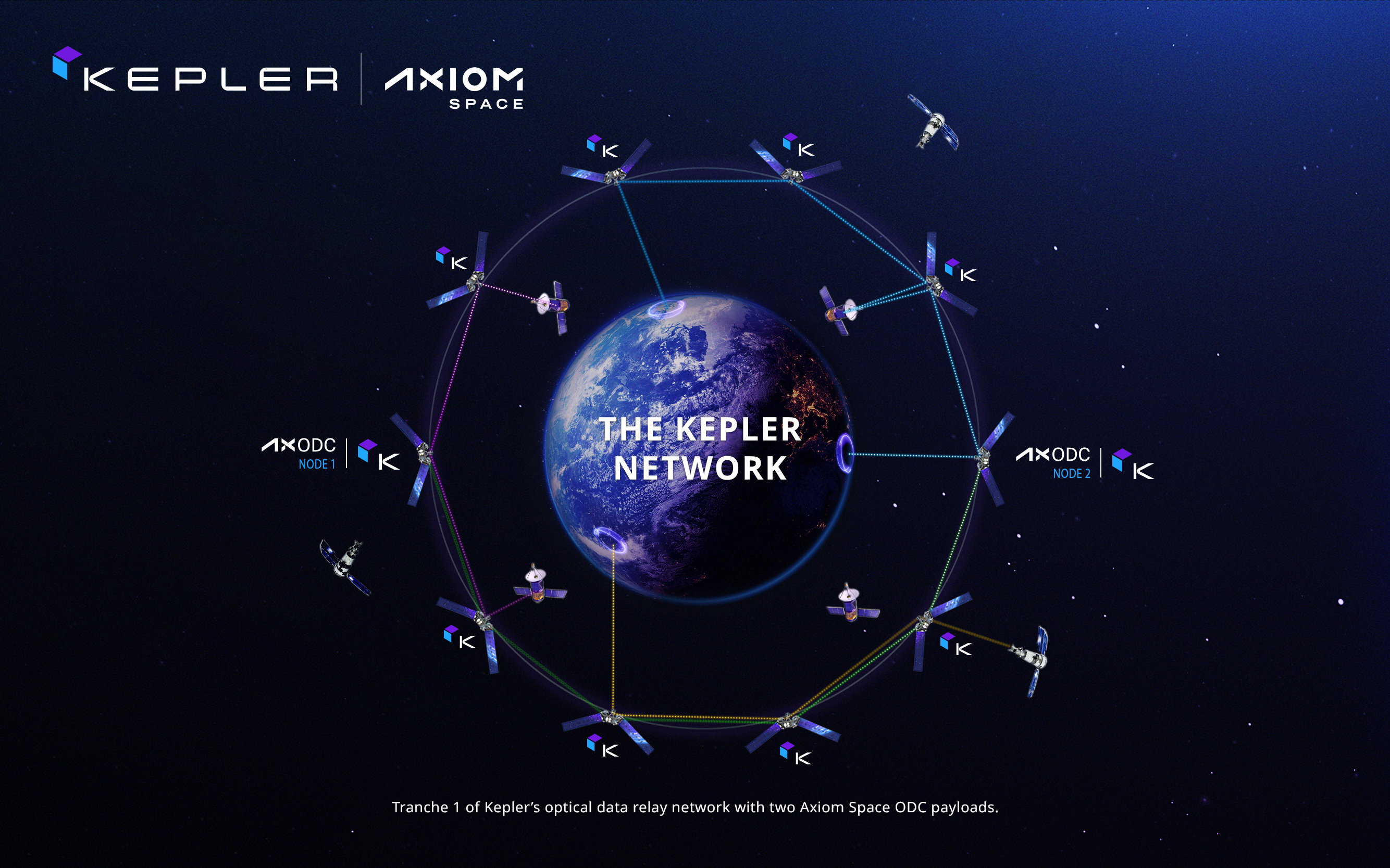

One such effort has been taken on by Axiom Space, whose first orbital data center nodes are now on orbit. According to Kam Ghaffarian, co-founder and executive chairman of the board at Axiom Space, "This is the foundation of secure, real-time data processing in space. There is still work ahead, but this milestone moves us closer to an orbital data network that will change how space missions are built and run."

The Hard Physics of Space-Based Infrastructure

The challenges, however, are substantial.

Maintenance is one of the biggest hurdles, given the cost and complexity of repairing hardware in orbit. Sending humans into space to service servers would be prohibitively expensive, making automation essential. That puts pressure on robotics technology, which is not yet mature enough. “Right now, robots have a task and they do their task well,” Stanish said, noting that truly multifunctional, autonomous robots capable of complex repairs are still unreliable.

Hardware durability is another major obstacle. Conventional servers are not designed to withstand radiation exposure, pushing interest in alternative architectures such as photonic computing, which uses light instead of electrons and generates less heat.

However, those technologies remain early-stage, and as a result, Stanish cautioned against near-term hype. “This is so far more academic in nature rather than commercial. I wouldn’t expect to see a lot of this stuff actually commercially viable for many years out.”

Another major technical hurdle for orbital data centers is latency, since placing compute infrastructure in space inherently increases data transmission distances.

Researchers are experimenting with optical links between what Stanish described as “space nodes” to improve bandwidth and reduce latency, and while that work looks promising, it remains early.
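To put the latency concern in rough numbers, the sketch below estimates the minimum round-trip delay imposed by the speed of light for a node directly overhead. The altitudes are illustrative assumptions, not figures from Stanish or any operator, and the function name is hypothetical.

```python
# Speed of light in vacuum, in kilometers per second (exact by definition).
SPEED_OF_LIGHT_KM_S = 299_792.458

# Assumed altitudes for illustration only; they do not come from the article's sources.
ASSUMED_ALTITUDES_KM = {
    "low Earth orbit (assumed 550 km)": 550.0,
    "geostationary orbit (about 35,786 km)": 35_786.0,
}


def round_trip_delay_ms(altitude_km: float) -> float:
    """Minimum round-trip propagation delay, in milliseconds, to a node directly overhead."""
    if altitude_km < 0:
        raise ValueError("altitude must be non-negative")
    # Distance up and back, divided by the speed of light, converted to milliseconds.
    return 2 * altitude_km / SPEED_OF_LIGHT_KM_S * 1000


for label, altitude in ASSUMED_ALTITUDES_KM.items():
    print(f"{label}: {round_trip_delay_ms(altitude):.1f} ms")
```

These figures are lower bounds. Real paths add ground-station routing, processing time and any hops across links between nodes, so actual latency would be higher.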

Until orbital data centers undergo all required testing, Reese added, new developments are more likely to come from subsurface designs.

The Limits of Urban Data Center Growth

Surafel Tadesse, another research analyst at DC Byte, noted that data centers are not like quiet office buildings. “Cooling machines, generators and rooftop fans operate around the clock, and in mixed-use areas this constant equipment noise can conflict with nearby homes, schools and hospitals.”

The visible components, such as cooling units, generators and electrical infrastructure, can also appear out of place in residential or civic settings, leading to zoning challenges and planning pushback.

One of the primary drivers of data center growth is AI, said Tadesse, noting AI workloads, specifically large-scale model training, are different from traditional cloud or enterprise compute. “They require vast amounts of power, large contiguous land parcels and purpose-built infrastructure that cities are structurally ill-equipped to deliver at scale. Because of this, data centers will likely remain a standalone industrial asset.”

However, in enterprise-led colocation markets such as New York City, demand is driven less by hyperscale AI training and more by latency, network density and proximity to enterprise and financial customers. As a result, data centers there are embedded infrastructure, integrated into the city and often built as multi-story facilities in core business districts.

“A shift to an embedded infrastructure is possible only under specific constraints,” said Tadesse, explaining that the workload must skew toward inference, enterprise IT and financial-services compute, not large-scale AI training.

“Training workloads are power-dense, land-intensive and operationally incompatible with dense urban environments, while inference and enterprise compute can tolerate smaller footprints, higher power costs and vertical form factors.”