"Pretraining is dead," said Ilya Sutskever, co-founder of OpenAI, in response to December 2024 questions about the future of AI scaling, marking a public turning point for AI developers. For years, the dominant strategy for building more capable models was simple: feed them more data and compute. But with high-quality human data nearing its limits, Sutskever's comment reflected a strategic shift already happening across the field.

One of the new approaches is reasoning models, also called "thinking models." From OpenAI's o1 to Claude's "Extended Thinking," Google's "Flash Thinking," DeepSeek's R1 and xAI's Grok updates, nearly every major provider now has a model designed not just to generate, but to reason.

While these models’ reasoning isn't the same as human reasoning, they are increasing the task complexity that LLMs can undertake, particularly in areas like math and coding, where answers tend to be more defined. But because they differ from the chatbot models of recent years, they require a slightly different approach for the best outcomes. In this guide, we’ll explore the foundational principles of reasoning models and how to prompt them effectively.

What Are Reasoning Models?

Reasoning models integrate the best insights from model development and prompt engineering. A core principle in their design is chain-of-thought prompting, which improves results by encouraging the model to reason step-by-step. Unlike in standard models, this strategy is trained into the system: instead of needing to be told to “think step by step,” the model does so by default, using a more process-oriented and strategic approach.

To use a metaphor from human cognition:

- Standard models respond with the “first thing on their mind,” like Daniel Kahneman’s System 1 thinking: intuitive, fast and top-of-mind.

- Reasoning models pause to “take a moment and think,” more like System 2 thinking: slow, reflective, metacognitive.

Some of that thinking is surfaced to the user, but much of the reasoning remains internal. Because of this, reasoning models can take longer, sometimes minutes — as with OpenAI’s o1-Pro — to respond, which increases inference costs and often results in stricter rate limits. The delay can become an operational constraint even when cost isn’t a factor. That’s why these models benefit from a more strategic prompt design.

Prompting Reasoning Models: Best Practices

There’s no universal “best practice” for prompting — if something works for you repeatedly, it works. However, there are core principles that matter more in reasoning models. Here are three that stand out, followed by a synthesized list of recommendations drawn from leading model providers.

Use a Different Mental Frame: Reasoning Models Are Hyper-Focused

If standard LLMs function like helpful interns, reasoning models operate more like analytical researchers. While reasoning models are often better at requesting clarity when faced with ambiguity, they still take a prompt, regardless of how clearly stated, deliberate on it and then respond with their best assessment.

Although they may be more effective with mathematical and logical problems, they remain fundamentally predictive engines that can follow incorrect reasoning paths. They're powerful, but they will methodically analyze every word in your prompt — sometimes advantageously, sometimes to your frustration.

Most importantly, running side-by-side experiments between existing prompts and reasoning-optimized prompts is invaluable, especially for those new to reasoning models. Users are often disappointed with reasoning models' outputs when using standard prompts, particularly as the models' distinctive characteristics can be diminished by their highly recursive inference process. Often, the most effective initial prompts for reasoning models address problems that existing models consistently fail to solve.
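A side-by-side experiment can be as simple as a loop over models and prompt variants. The sketch below is illustrative only: `compare_prompts`, `fake_standard` and `fake_reasoning` are hypothetical names, and the two stand-in functions would be replaced by calls to whichever provider client you actually use.

```python
from typing import Callable, Dict, List

# Assumption: a "model" is any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def compare_prompts(models: Dict[str, ModelFn], prompts: Dict[str, str]) -> List[dict]:
    """Run every prompt variant against every model and collect the outputs."""
    results = []
    for model_name, call_model in models.items():
        for prompt_name, prompt in prompts.items():
            results.append(
                {"model": model_name, "prompt": prompt_name, "output": call_model(prompt)}
            )
    return results


# Stand-ins so the sketch runs offline; swap in real client calls.
def fake_standard(prompt: str) -> str:
    return f"[standard] received {len(prompt)} characters"


def fake_reasoning(prompt: str) -> str:
    return f"[reasoning] received {len(prompt)} characters"


results = compare_prompts(
    models={"standard": fake_standard, "reasoning": fake_reasoning},
    prompts={
        "existing": "Summarize this report.",
        "reasoning_optimized": "<task>Identify the three biggest risks in this report.</task>",
    },
)
for row in results:
    print(row["model"], row["prompt"], row["output"], sep=" | ")
```

Reviewing the four outputs next to each other makes it easier to see whether the prompt, the model or both are responsible for a difference in quality.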

Clearly Define Success, Goals and Output

One of AI’s greatest strengths is recognizing patterns too subtle or broad for humans to spot. But that’s a double-edged sword: just as models can find useful insights, they can also create misleading patterns, resulting in hallucinations or flawed logic.

That’s why it’s critical to clearly define:

- Your objective

- Success criteria

- Output expectations

Be explicit. Set boundaries. Provide examples. If you don’t, the model will try to fill in the blanks — and might take your task in a direction you didn’t intend.

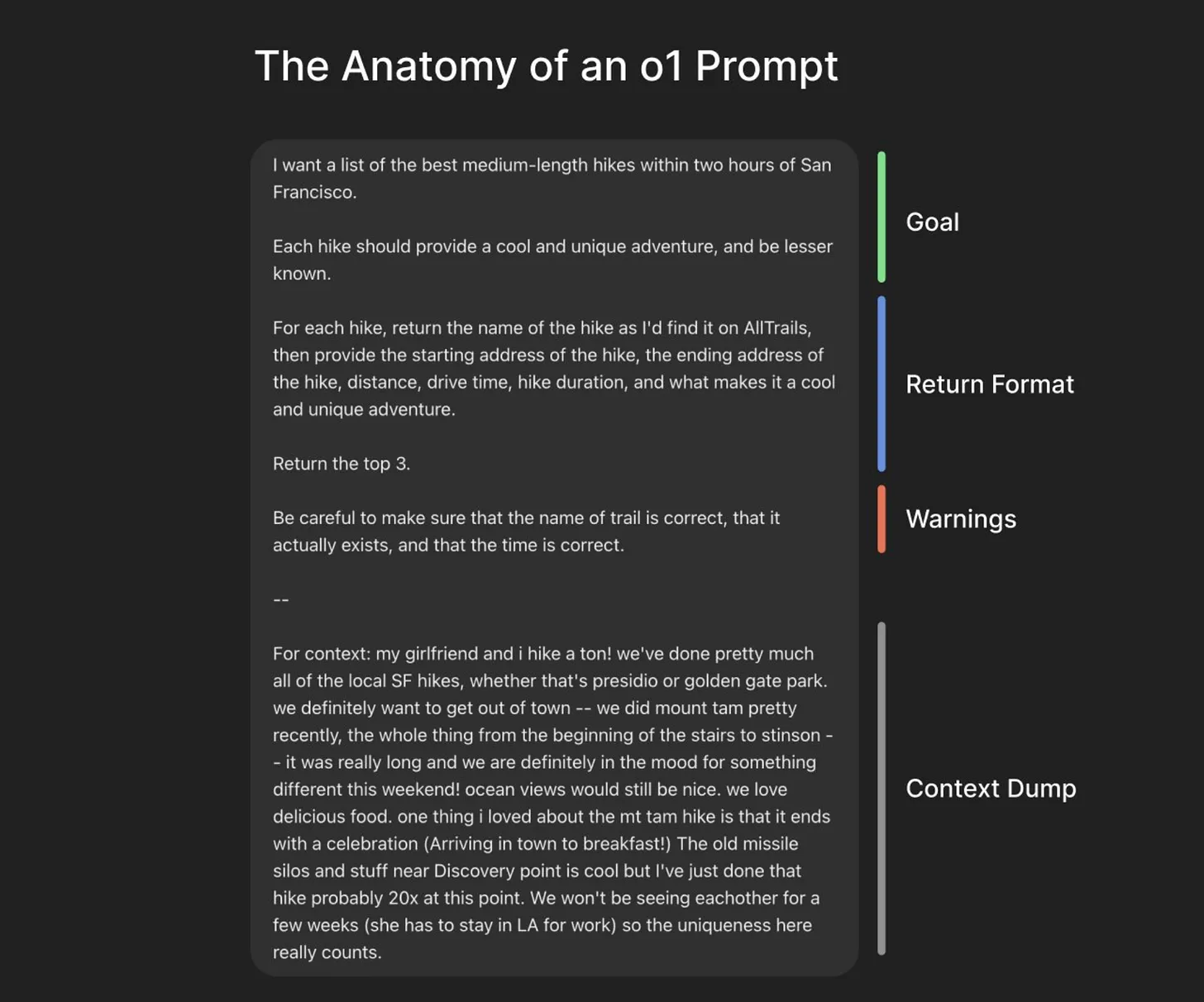

A reasoning prompt outline endorsed by Greg Brockman on how he prompts AI

Conversely, humans often lack complete clarity about their objectives. That is why, alongside experimenting with prompts that standard models struggle with, it can be valuable to pose metacognitive questions to reasoning models, such as "What do you think my goal should be given this situation?" Rather than immediately seeking specific answers, reasoning models can be most effective when helping you think through a problem holistically.

Use a Clear Structure for Communicating and Organizing

Prompting frameworks always depend on having a general prompt structure. Assigning roles, sharing examples and being specific with styles are ways to optimize the density of user intent and context in a prompt. However, with reasoning models, using a clear organizational structure is especially useful.

Delimiters for various request sections, such as <task>, #context or other markdown/XML/formatting, help the model consider different sections individually. Although this approach benefits standard models as well, it becomes essential for reasoning models to ensure accurate contextualization of each information component.

This structured approach also helps you, the user. The discipline of using structured prompts often encourages deeper thinking about the underlying problem and potential solutions. It's common to begin drafting a reasoning prompt with delimiters and tags, only to realize that essential data or context is missing ("Oops, I should include some #industrydata"). Labeling information facilitates more effective troubleshooting and refinement of reasoning prompts, particularly given their extensive context windows that can accommodate entire documents or codebases for review.
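To make the idea of delimited sections concrete, here is a minimal sketch that wraps each part of a request in XML-style tags and flags any section left empty. The section names in `REQUIRED_SECTIONS` and the `build_prompt` helper are assumptions chosen for illustration, not a format required by any provider.

```python
from typing import Dict, List, Tuple

# Hypothetical section names; use whatever labels fit your task.
REQUIRED_SECTIONS = ("task", "context", "success_criteria", "output_format")


def build_prompt(sections: Dict[str, str]) -> Tuple[str, List[str]]:
    """Wrap each non-empty section in tags and list required sections that are missing."""
    missing = [name for name in REQUIRED_SECTIONS if not sections.get(name, "").strip()]
    blocks = [
        f"<{name}>\n{text.strip()}\n</{name}>"
        for name, text in sections.items()
        if text.strip()
    ]
    return "\n\n".join(blocks), missing


prompt, missing = build_prompt(
    {
        "task": "Recommend which of our three product lines to expand next year.",
        "context": "We sell to mid-size retailers in North America.",
        "output_format": "A ranked list with one paragraph of justification per item.",
    }
)
print(prompt)
print("Missing sections:", missing)  # ['success_criteria']
```

The `missing` list plays the role of the "oops" moment described above: it surfaces a gap in the prompt before the model has to guess at it.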

Summary of Prompting Best Practices (AI Example)

To see how these principles translate in practice, I created a reasoning prompt using OpenAI’s o1 model. The task: evaluate prompt guides from Anthropic and OpenAI, then generate a ranked list of the most important considerations when prompting reasoning models. I ran this across o1, o1-Pro and 4o. Below is the response from 4o, which read the clearest in the context of this article:

- Use clear and direct instructions.

Reasoning models excel with concise prompts that clearly define the task. Overly verbose or ambiguous inputs reduce performance: state what you want, precisely.

- Avoid step-by-step prompting unless necessary.

These models internally handle multi-step reasoning. Instructing them to "think step-by-step" can sometimes degrade results rather than help.

- Start with zero-shot, escalate only if needed.

Begin with a clean prompt and only add examples (few-shot) if the model struggles. Too many examples may confuse or constrain the model’s internal reasoning.

- Delimit input sections with structure.

Use markdown, XML tags or clear titles to separate instructions, data or examples. This helps models parse input and focus on the correct information.

- Be explicit about constraints and success criteria.

Define any limits (e.g., “under $500”) or output requirements directly. Reasoning models respond well when the definition of “success” is clear.

- Don’t pre-script the model’s reasoning path.

Instead of prescribing a fixed logic flow, let the model determine the best way to solve the problem; it often finds smarter or more efficient approaches.

- Treat the model as an expert, not a follower.

Reasoning models perform best when prompted as autonomous problem-solvers, not as instruction executors. Ask for thoughtful analysis, not rote computation.

- Iterate with feedback, not rephrasing.

If results are off, refine with feedback like “this missed X” or “please include Y” rather than rewording the original prompt.

- Use thinking modes (like Claude’s extended thinking) when depth matters.

Enabling internal reasoning (e.g., with thinking blocks) lets the model deliberate and often improves accuracy and traceability for complex tasks.

- Prefer general prompts, then refine.

Start with high-level instructions like “analyze thoroughly” or “evaluate trade-offs.” Only move to detailed scaffolding if necessary to guide the model’s logic.
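The "iterate with feedback" recommendation can be sketched as appending to a conversation rather than rewriting its first message. The role/content message shape below is an assumption modeled on common chat interfaces, and `add_feedback` is a hypothetical helper.

```python
from typing import Dict, List

# Assumption: each message is a dict with "role" and "content" keys.
Message = Dict[str, str]


def add_feedback(history: List[Message], model_reply: str, feedback: str) -> List[Message]:
    """Keep the original prompt intact and append the reply plus targeted feedback."""
    return history + [
        {"role": "assistant", "content": model_reply},
        {"role": "user", "content": feedback},
    ]


history = [{"role": "user", "content": "Rank these three vendors for a budget under $500."}]
history = add_feedback(
    history,
    model_reply="1. Vendor A  2. Vendor B  3. Vendor C",
    feedback="This missed shipping costs. Please include them and re-rank.",
)
for message in history:
    print(f'{message["role"]}: {message["content"]}')
```

Because the original prompt and the first answer stay in the history, the model can see exactly what it got wrong instead of starting over from a reworded request.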

Author's Prompt Evaluation

Here are some quick reflections:

- I included the 4o output above because it "reads" the best. This fits my experience that chatbots are much better for communicating, while reasoning models are best for working through problems, which is an important distinction.

- I liked o1-Pro's response the second best, but it didn't mention the importance of structure, which wasn't great given how important my experience has shown it to be.

- Had I not written the prompt with a reasoning model in mind, 4o would not have been the winner with a one-shot prompt (I checked). Usually, I would have been lazier and said something like "synthesize this, etc.," on my first try to see how far I got.

- If I were optimizing this for a reasoning model to be the "best" option, I would follow the list above. A reasoning model would be the best option in a systemic setting.

When to Use Reasoning Models

Reasoning models excel at tasks that demand accuracy, nuance and thoughtful deliberation. They are particularly well-suited for:

- Strategic problem-solving of multi-step, complex challenges

- Navigating ambiguity or incomplete instructions by intuiting what should be done given vague instructions

- Parsing large or unstructured datasets to extract key insights, especially with longer context windows

- Planning and executing multi-step workflows in a single-shot prompt, versus a prompt chain

- Debugging code or analyzing complex logic that is more likely to have a definite answer

In contrast, standard models are ideal for well-defined, fast-execution tasks: generating summaries, drafting emails or blog posts, answering straightforward questions or handling repetitive requests where speed and efficiency matter more than depth.

In practice, the most effective workflows often involve both. Use reasoning models for framing, analysis and decision-making; then pass the results to standard models for communication, formatting or execution. Reasoning models help you solve the right problem. Standard models help you move quickly once you know what to do.
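The two-stage workflow described here can be sketched as a small pipeline. Everything below is illustrative: `analyze_then_communicate` is a hypothetical helper, and the two stub functions stand in for real calls to a reasoning model and a standard model.

```python
from typing import Callable

# Assumption: a "model" is any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


def analyze_then_communicate(
    problem: str, reasoning_model: ModelFn, standard_model: ModelFn
) -> str:
    """Use the reasoning model for the decision, then the standard model for the write-up."""
    analysis = reasoning_model(
        f"<task>\nAnalyze the problem and recommend a decision.\n</task>\n\n"
        f"<problem>\n{problem}\n</problem>"
    )
    return standard_model(
        f"<task>\nRewrite the analysis as a short, friendly email.\n</task>\n\n"
        f"<analysis>\n{analysis}\n</analysis>"
    )


# Stubs so the sketch runs offline; replace with real client calls.
def stub_reasoning(prompt: str) -> str:
    return "Expand product line B; it has the highest margin."


def stub_standard(prompt: str) -> str:
    analysis = prompt.split("<analysis>\n")[1].split("\n</analysis>")[0]
    return "Hi team, " + analysis


email = analyze_then_communicate(
    "Which product line should we expand next year?", stub_reasoning, stub_standard
)
print(email)
```

Keeping the two stages as separate calls also makes it possible to review or cache the slower, costlier analysis before anything is sent on for formatting.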

Reasoning Models: A Core Component of Future AI

While reasoning models are new, they are likely to become more integrated into daily model usage. GPT-5 reportedly will have a built-in model switcher so that thinking mode may be activated automatically depending on the user's prompt. Some commentators see reasoning as becoming a core component of models' generalizable abilities.

Thinking models’ focus on deterministic domains like math and code makes them core components of future synthetic data generation, which will improve future models. However, no matter how they determine the future of AI, effectively communicating intent, vision and process to reasoning models now and in the future will allow skilled users to obtain better and more nuanced outputs.