While generative AI and its large language models (LLMs) are all the rage, one of the main reasons for ChatGPT’s success is that it can do what previous generations of AI (largely rule engines) could not: deal with imprecise language.

Think about pre-generative AI chatbots. Unless your question closely matched a previously stored Q&A rule base, the experience was inevitably one of utter frustration. That said, if you did manage to match the required syntax, they could navigate you through complex logic rules that could defeat even the most diligent human operators.

Much of the early success of rule-based expert systems was in “deterministic” domains where the rules of operation could be precisely defined. Think scheduling, logistics or compliance applications, where a myriad of interlinked rules are typically created and need to be applied. It would be nice to think that the new generation of AI would comfortably subsume the capabilities of the previous generations. But this is not yet the case.

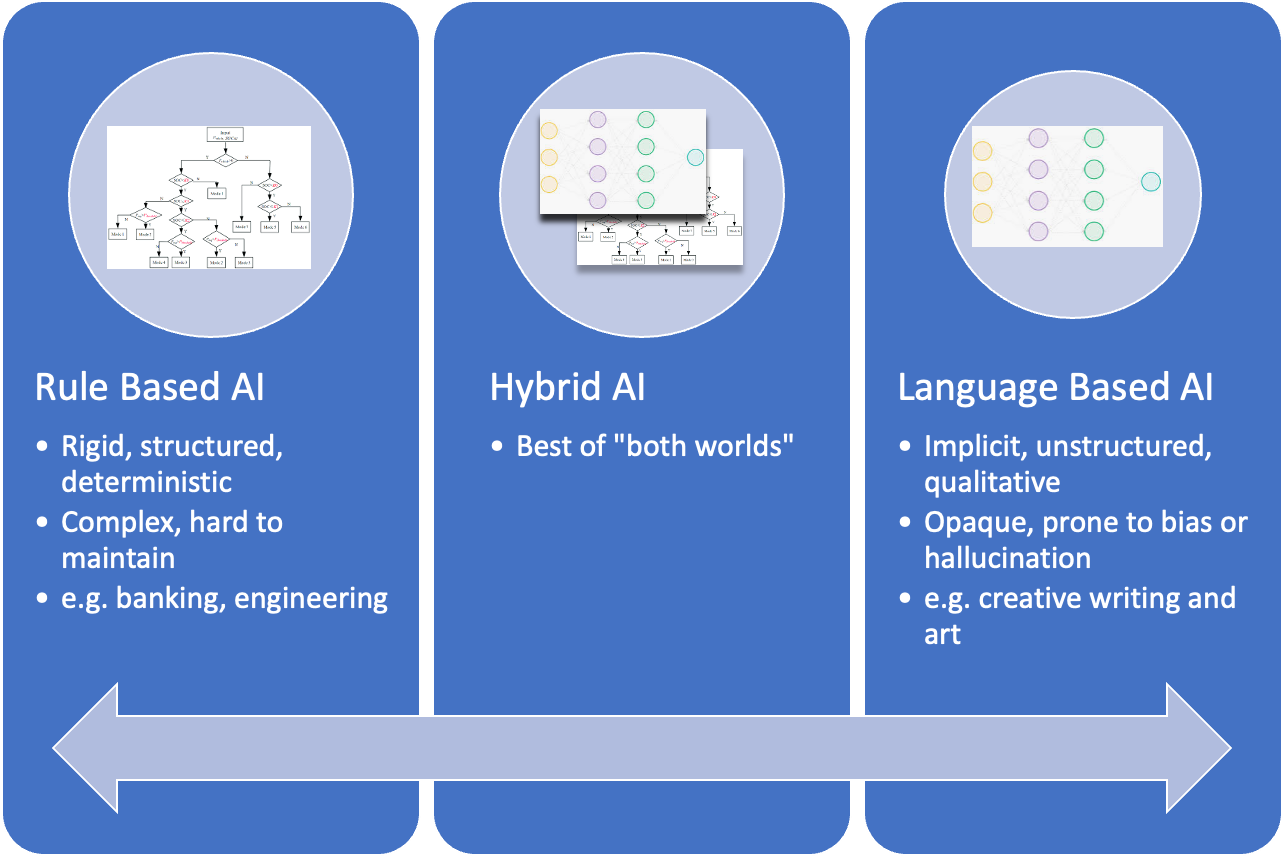

Rule engines and generative AI take fundamentally different approaches, though with the same goal of demonstrating human-like intelligence. In fact, you can think of the two generations of AI as sitting at opposite ends of a spectrum, with quite complementary strengths and weaknesses. Maximum capability can therefore only be achieved by using them together in a hybrid.

The AI Spectrum

One can be easily fooled into thinking generative AI can stretch beyond its language strengths to complex logic or mathematics problems. Type a simple sum into ChatGPT and it will quickly provide the correct answer. Often, what it is actually doing is calling on dedicated plugin applications to do this. There is a limit to this functionality though. Ask ChatGPT itself, and it provides the response: “... while I am not a calculator, I can still solve math problems using my language processing capabilities. However, for more complex problems, it may be better to use a dedicated calculator or other specialized tools.”

It is therefore important to recognize that “generative AI” is not just language related. Large language models facilitate the integration of many types of AI, making them accessible to the general public. DALL-E 3, the image creation system built into ChatGPT, is one of the more prominent plugins.

For a more detailed account of why we need to judge generative AI differently from how we have judged information systems to date, look at “What the Lone Banana Problem reveals about the Nature of Generative AI” by Riemer and Peter from the University of Sydney. The authors use the inability of generative AI to create an image of a single banana (it could only create bunches) to argue that generative AI systems are essentially “style engines” more so than “logic engines.” Style is more closely associated with the creative capabilities we have all been amazed by. It cannot provide the accuracy and veracity we have come to expect from traditional computing.

My own experiments with generative AI have regularly resulted in 80 to 90% solutions. Much of the time, generative AI does an amazing job; but a minority of the time, its output can be inappropriate or just plain wrong. Trying to wrangle the prompts to close the gap can be fruitless, especially when you are looking for generative AI to behave like a rule engine. The challenge is identifying the extent of the limitations, because OpenAI is building out ChatGPT as a general AI capability with a plethora of “plug-ins” that will be largely invisible to us.

Unfortunately, there is no easy way of discovering the limits of the generative AI model other than experimentation. If your application can’t tolerate a wrong or inappropriate response that isn’t easily fixed with a better prompt, it’s time to look elsewhere.
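One way to make that experimentation concrete is to run a fixed set of test prompts and measure how often the answers are acceptable. The sketch below is only illustrative: `stub_model` is a stand-in for a real LLM call (it is deliberately wrong on one case), and the test cases and the 99% tolerance are assumptions, not recommendations.

```python
def pass_rate(model, cases):
    """Fraction of cases where the model's answer equals the expected answer."""
    passed = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return passed / len(cases)


def stub_model(prompt):
    # Stand-in for a real LLM call; the last canned answer is deliberately wrong.
    canned = {"2 + 2": "4", "17 * 23": "391", "12345 * 6789": "83810000"}
    return canned[prompt]


# Hypothetical test cases: (prompt, expected answer).
cases = [("2 + 2", "4"), ("17 * 23", "391"), ("12345 * 6789", "83810205")]

rate = pass_rate(stub_model, cases)

# Assumed tolerance: this application needs at least 99% correct answers.
tolerable = rate >= 0.99
print(f"pass rate: {rate:.0%}, tolerable: {tolerable}")
```

If the pass rate stays below what your application can tolerate after a few rounds of prompt changes, that is a sign the task belongs on the rule engine side of the spectrum.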


Designing a Hybrid AI

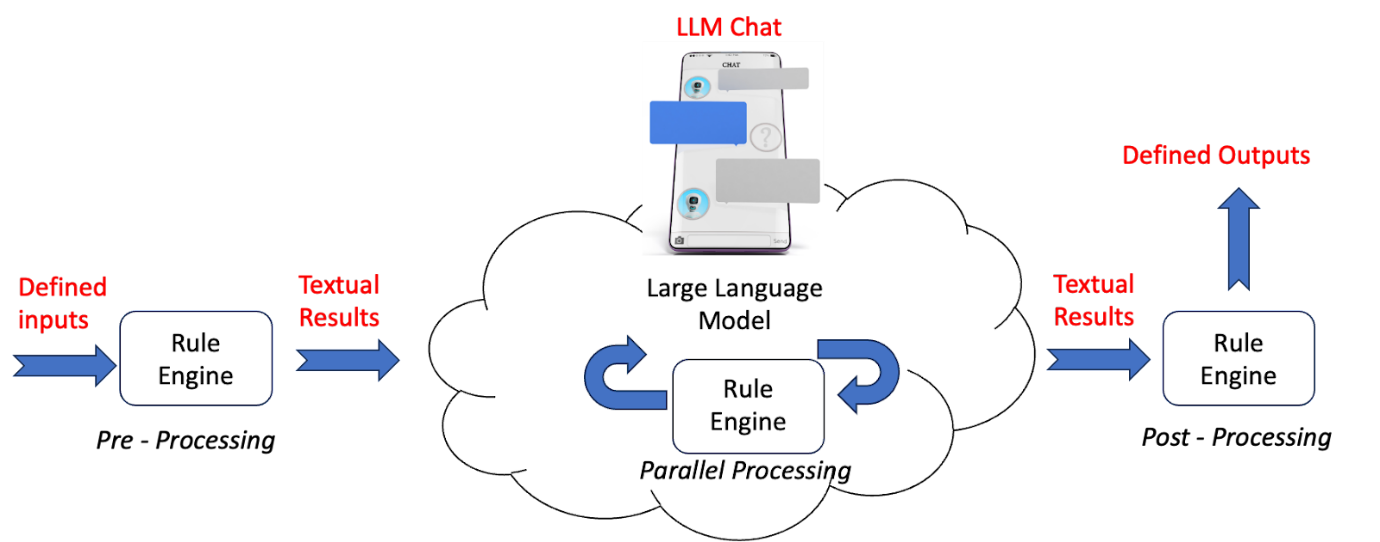

Once you have “ring fenced” the generative AI system, you can think about integration points. Basically, there are three options:

- Rule Engine as a “Pre-processor” for LLM

- Rule Engine as a “Post-processor” for LLM

- Rule Engine in parallel with LLM

As an example, let’s think about an AI personal financial assistant chatbot. The first task for the chatbot is to understand your current personal situation. From this situation analysis, we would expect “flags” representing either concerns or opportunities. This process is best suited to the Rule Engine, running in a secured environment. Let’s say that the situation analysis identified a concern (mortgage commitments too high) and an opportunity (taxation rebate for health contributions). These “flags” can be fed to the LLM to generate specific actions and follow-up discussions as you see fit. This would be an example of “pre-processing”.
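The pre-processing pattern can be sketched in a few lines. This is a minimal illustration under stated assumptions: the profile fields, the 30% mortgage threshold and the prompt wording are all hypothetical, and the actual call to an LLM is left out so that only the hand-off is shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flag:
    kind: str      # "concern" or "opportunity"
    message: str


def analyse_situation(profile):
    """Deterministic rule engine: the same profile always yields the same flags."""
    flags = []
    # Hypothetical rule: repayments above 30% of income are a concern.
    if profile["mortgage_repayments"] > 0.30 * profile["income"]:
        flags.append(Flag("concern", "mortgage commitments too high"))
    # Hypothetical rule: any health contribution may attract a tax rebate.
    if profile["health_contributions"] > 0:
        flags.append(Flag("opportunity", "taxation rebate for health contributions"))
    return flags


def build_prompt(flags):
    """Turn the rule engine's flags into a prompt for the LLM."""
    lines = [f"- {flag.kind}: {flag.message}" for flag in flags]
    return (
        "You are a personal financial assistant. "
        "Suggest specific actions for each flag below.\n" + "\n".join(lines)
    )


# Hypothetical monthly figures.
profile = {"income": 8000, "mortgage_repayments": 3200, "health_contributions": 150}
prompt = build_prompt(analyse_situation(profile))
print(prompt)
```

Note that in this sketch only the flags are passed on to the LLM; the raw financial figures never leave the rule engine.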

Now, perhaps the financial chatbot is made available for general exploratory financial advice. In the course of the online chat, you identify that there might be an opportunity to lower your mortgage commitments using a suggested mortgage comparison site. You would then leave the LLM for the suggested comparison site, where rule-based comparison takes over from the conversation. This is an example of “post-processing”.
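A rough sketch of the post-processing hand-off follows. Everything here is assumed for illustration: the keyword routing rule, the tool name, the offer fields and the cost formula are hypothetical, and the LLM's suggestion is represented by a plain string rather than a real model response.

```python
def route(llm_suggestion):
    """Rule-based hand-off: map the LLM's text to a known downstream tool, or None."""
    text = llm_suggestion.lower()
    if "mortgage" in text and ("compar" in text or "refinanc" in text):
        return "mortgage_comparison"
    return None


def compare_mortgages(offers, balance):
    """Deterministic step: pick the offer with the lowest yearly cost."""
    return min(offers, key=lambda offer: balance * offer["rate"] + offer["annual_fee"])


# Stand-in for text produced by the LLM during an exploratory chat.
suggestion = "You could compare mortgage rates to lower your commitments."

# Hypothetical offers; rates are annual interest rates as fractions.
offers = [
    {"lender": "A", "rate": 0.062, "annual_fee": 0},
    {"lender": "B", "rate": 0.059, "annual_fee": 400},
]

best = None
if route(suggestion) == "mortgage_comparison":
    best = compare_mortgages(offers, balance=400_000)
    print(f"Lowest yearly cost: lender {best['lender']}")
```

The LLM handles the open-ended conversation, while the step that must be exactly right (ranking the offers) is done by ordinary deterministic code.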

Parallel processing requires more detailed knowledge of “plugins” for LLMs. In essence, the “rule engine” becomes part of the LLM processing: the LLM is used to evaluate rule engine outputs, which can then be fed back into the LLM for deeper levels of learning. The technical sophistication required means that this option is not for the faint-hearted.
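The shape of this loop can be sketched without a real model. In the illustration below, `stub_llm` is a stand-in that asks for the rule engine once and then answers; the message format, the 30% rule and the figures are assumptions and do not correspond to any particular vendor's plugin API. The sketch also feeds results back into the conversation only, not into model training.

```python
import json


def rule_engine(args):
    """Hypothetical deterministic rule: repayments must stay within 30% of income."""
    ratio = args["repayments"] / args["income"]
    return {"ratio": round(ratio, 2), "within_limit": ratio <= 0.30}


def stub_llm(messages):
    """Stand-in for a real model call: requests the rule engine once, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool_call": {"repayments": 3200, "income": 8000}}
    result = json.loads(tool_results[-1]["content"])
    verdict = "within" if result["within_limit"] else "above"
    return {"answer": f"Your repayments are {verdict} the limit (ratio {result['ratio']})."}


def chat(question, llm=stub_llm, max_turns=3):
    """Alternate between the LLM and the rule engine until the LLM gives an answer."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_turns):
        reply = llm(messages)
        if "answer" in reply:
            return reply["answer"]
        # Run the rule engine and feed its output back into the conversation.
        result = rule_engine(reply["tool_call"])
        messages.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("no answer within max_turns")


print(chat("Are my mortgage repayments too high?"))
```

The `max_turns` guard is a design choice worth keeping in any real version: without it, a model that keeps requesting the rule engine would loop indefinitely.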

The key takeaway is not to let mixed results put you off generative AI. Use experimentation to identify the boundaries of its capabilities for your application. Then use alternative approaches, such as rule engines, as hybrid add-ons to achieve your desired solution.
