I've seen it countless times. A company gets excited about AI, launches a few pilot projects, sees some initial success and then... stalls. They're stuck in "AI purgatory" — realizing the potential but unable to scale it across the organization. This isn't a technology problem; it's a strategic problem. And it reminds me of a very old story — the story of Noah. While everyone else went about their daily lives, Noah saw a fundamental shift coming. He didn't wait for the rain; he prepared.

This article isn't about building a literal ark, but about building an organizational one — a framework for thriving in the coming flood of enterprise-wide AI. We will lay out a roadmap for moving beyond isolated AI tools to a truly intelligent enterprise, where AI permeates every function and decision-making process.

How the 'Log of Resources' Principle Transforms AI's Potential

Many organizations start with AI in a single department — marketing, customer service or maybe supply chain. They see some improvements, a bit of cost reduction or a slight boost in efficiency. But this is like trying to power a city with a single solar panel. You get some energy, but you're nowhere near realizing the full potential.

Think of each AI deployment as adding a log to a fire. A single log burns, providing some heat. But as you add more logs, the fire doesn't just get bigger; it gets hotter, more intense and capable of generating far more energy. This is the "log of resources" principle in action. Each AI agent, each data point, each successful implementation adds to the collective intelligence, creating a network effect that far surpasses the sum of its parts.

Analogies Can Be Fun to Use

Let's compare this concept to the advice for visceral fat reduction given by Ken D. Berry, MD, a family physician who creates content about health, particularly focusing on low-carbohydrate diets. He emphasizes a holistic, integrated approach rather than isolated solutions. Just like spot-reducing fat is ineffective, deploying AI in isolated pockets won't transform an organization.

Dr. Berry advocates for:

- Eliminating ultra-processed foods: This is analogous to removing outdated systems and processes that hinder AI integration.

- Stopping sugar intake: This is like stopping the reliance on "quick fix" AI solutions that don't address the underlying issues.

- Reducing overall carbohydrates: This represents a strategic shift away from traditional, less efficient ways of operating.

- Integrating multiple lifestyle changes (diet, exercise, sleep, sunlight): This mirrors the need for a comprehensive AI strategy that touches all aspects of the business.

Just as Dr. Berry's approach requires a fundamental change in lifestyle, scaling AI requires a fundamental change in organizational mindset and infrastructure.

Strategies for Cross-Departmental AI Scaling

This isn't about forcing AI into every corner of the business. It's about identifying areas where AI can create the most value and strategically expanding from there. Start with a "lighthouse" project — a high-impact initiative that demonstrates the potential of enterprise-wide AI. Then, create a roadmap for deploying AI across other departments, prioritizing those with the greatest potential for synergy and data sharing.

Build an Infrastructure for 1,000 AI Agents (and Beyond)

You need a platform that can handle thousands of AI agents, processing vast amounts of data in real time. This requires a shift to cloud-based solutions, robust data pipelines and a flexible architecture that can adapt to evolving AI technologies.

Infrastructure Specifics:

- Compute: This involves specialized hardware like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) that are designed for parallel processing, critical for AI workloads. Server farms with high-density GPU configurations are becoming the norm.

- Networking: High-bandwidth, low-latency networks are essential for moving massive datasets between storage and processing units. This often involves specialized networking hardware and protocols optimized for AI traffic.

- Data Storage and Management: AI models thrive on data. Scalable storage solutions (cloud-based or on-premises) that can handle petabytes (or even exabytes) of data are necessary. Data lakes and data warehouses need to be integrated with AI pipelines, and the data they hold has to be accurate and relevant to the use case.

- Software Stack: This includes machine learning frameworks (like TensorFlow, PyTorch), programming languages (primarily Python) and distributed computing platforms (like Apache Spark).

- MLOps Platforms: Machine Learning Operations (MLOps) platforms are becoming critical for managing the entire AI lifecycle, from model development and training to deployment, monitoring and updating.

Cross-Departmental Strategies:

- Collaboration: Involve stakeholders from various departments (customer service, finance, legal, etc.) in the AI development process. This ensures alignment with business needs and facilitates smoother adoption.

- Use Case Expansion: Start with a successful pilot project and expand its application to other departments or broaden the scope of the use case. For example, if AI-powered summarization works for incident reports, apply it to case summaries or knowledge generation.

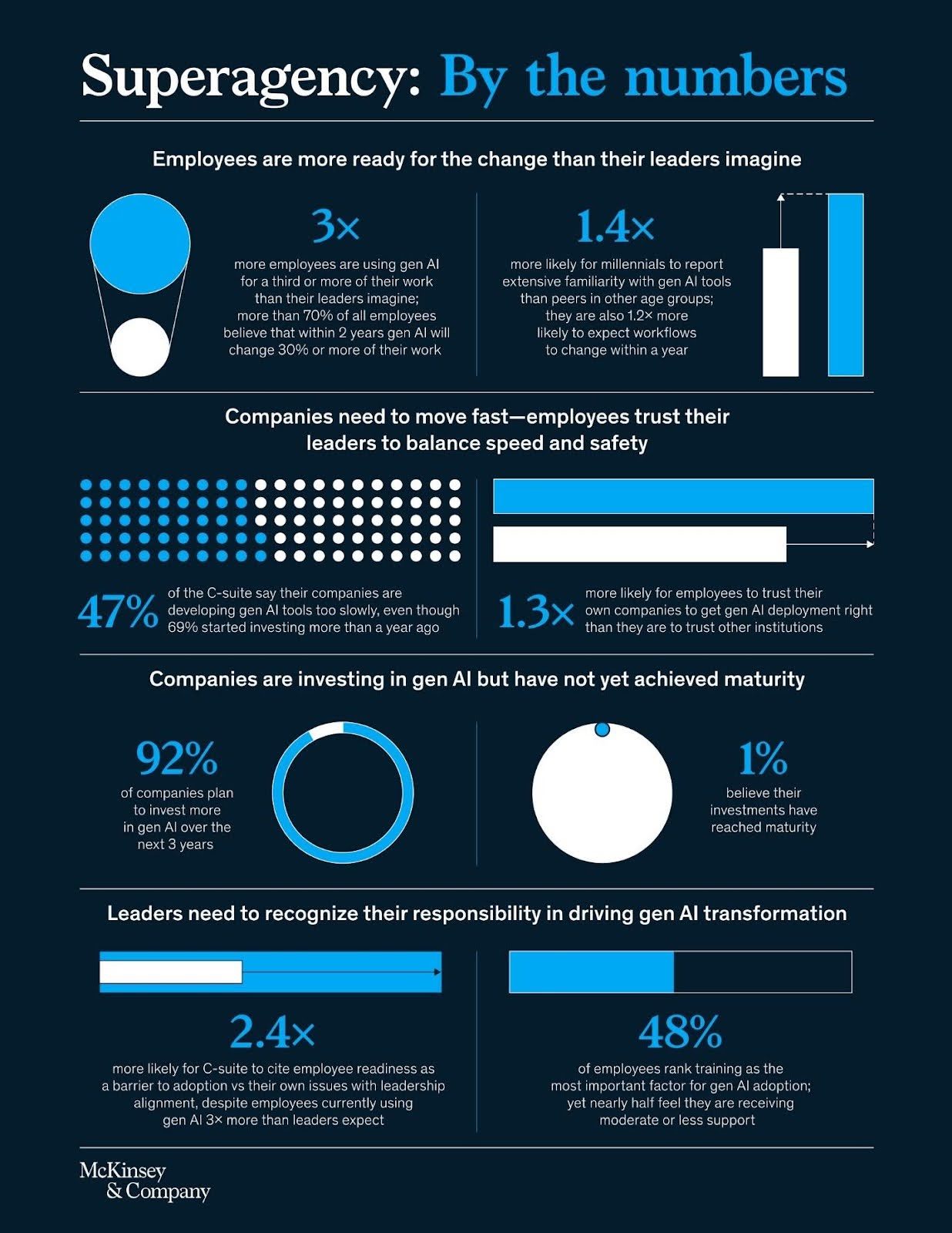

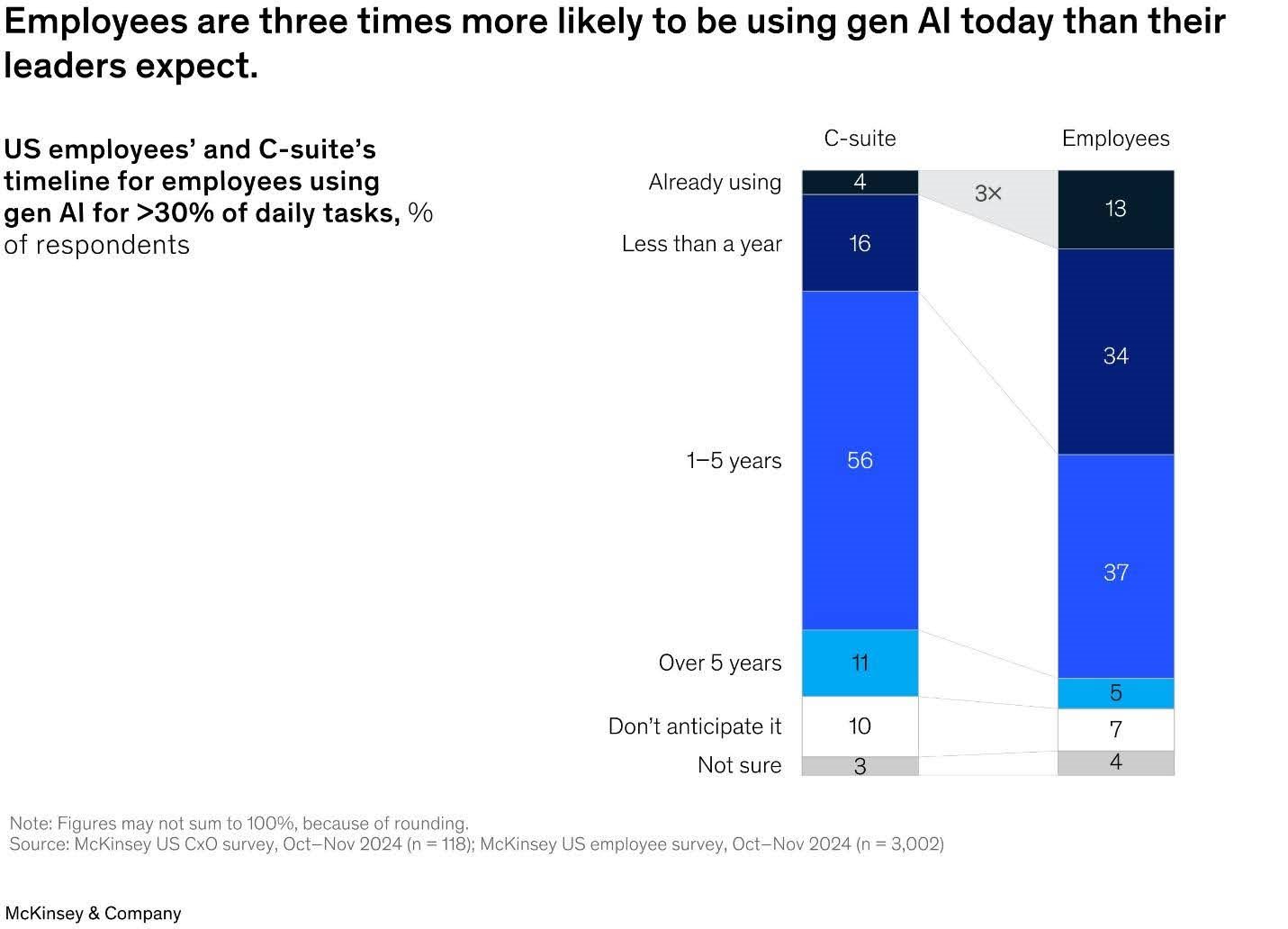

- Phased Rollout: A recent McKinsey report shows that many organizations are still in the early stages of gen AI adoption. Only 1% of C-suite respondents described their gen AI rollouts as "mature," meaning very few have reached the point where gen AI is fundamentally changing how work is done. This highlights the importance of a strategic, phased approach.

The Transition: Point Solutions to Systemic Integration

This is the heart of the matter. It's about moving from using AI to solve specific problems to using AI to rethink how the entire organization operates. This requires a change in mindset, a willingness to experiment and a commitment to continuous learning. It's also about data.

Data Silos Are the Enemy

Isolated AI projects often create data silos. Each department has its own data, its own models and its own metrics. To achieve enterprise-wide intelligence, these silos must be broken down. Data needs to be shared, standardized and accessible across the organization. This is where a robust data governance framework becomes critical.
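To make "shared, standardized and accessible" a little more concrete, here is a minimal sketch of two departments mapping their own exports into one common record. Everything in it is an assumption for illustration: the `CustomerRecord` schema, the field names in each export and the "most recent contact wins" merge rule are hypothetical, not a prescribed design.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CustomerRecord:
    """Hypothetical shared schema that every department maps into."""
    customer_id: str
    email: str
    last_contact: date


def from_marketing(row: dict) -> CustomerRecord:
    # Assumed marketing export keys: "cust_id", "Email", "last_campaign" (ISO date).
    return CustomerRecord(
        customer_id=str(row["cust_id"]),
        email=row["Email"].strip().lower(),
        last_contact=date.fromisoformat(row["last_campaign"]),
    )


def from_support(row: dict) -> CustomerRecord:
    # Assumed support export keys: "customerId", "contact_email", "last_ticket" (ISO date).
    return CustomerRecord(
        customer_id=str(row["customerId"]),
        email=row["contact_email"].strip().lower(),
        last_contact=date.fromisoformat(row["last_ticket"]),
    )


def merge_latest(records):
    """Keep one record per customer: the one with the most recent contact date."""
    merged = {}
    for rec in records:
        current = merged.get(rec.customer_id)
        if current is None or rec.last_contact > current.last_contact:
            merged[rec.customer_id] = rec
    return merged


# Made-up sample rows from two siloed systems describing the same customer.
marketing_rows = [{"cust_id": 42, "Email": " Ana@Example.com ", "last_campaign": "2024-03-01"}]
support_rows = [{"customerId": "42", "contact_email": "ana@example.com", "last_ticket": "2024-05-10"}]

unified = merge_latest(
    [from_marketing(r) for r in marketing_rows] + [from_support(r) for r in support_rows]
)
```

The point is less the code than the agreement behind it: once departments settle on one schema and one set of normalization rules, every model in the organization can read the same customer the same way.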

Security and Governance at Scale: A Non-Negotiable

As AI becomes more pervasive, the risks increase. Data breaches, biased algorithms and lack of transparency can have serious consequences. A strong governance framework must address data privacy, security, ethical considerations and regulatory compliance. This isn't just about ticking boxes; it's about building trust — with employees, customers and regulators.

Cost-Benefit Analysis: Thinking Long-Term

Large-scale AI deployment is a significant investment. But the potential ROI is equally significant. It's not just about cost savings; it's about increased revenue, improved customer experience, faster innovation and a more resilient organization. The cost-benefit analysis must consider these long-term strategic advantages, not just short-term operational efficiencies. A PwC study estimates that AI could contribute up to $15.7 trillion to the global economy by 2030, with 45% of the total economic gains coming from product enhancements that stimulate consumer demand.
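One simple way to see why the time horizon matters is a net present value calculation. The sketch below uses entirely hypothetical figures (a 10 million upfront cost, benefits that ramp up over five years and a 10% discount rate); the function name and the numbers are my own assumptions, not data from any study.

```python
def net_present_value(initial_cost, yearly_net_benefits, discount_rate):
    """Discount each year's net benefit back to today, then subtract the upfront cost."""
    discounted = sum(
        benefit / (1 + discount_rate) ** year
        for year, benefit in enumerate(yearly_net_benefits, start=1)
    )
    return discounted - initial_cost


# Hypothetical figures, in millions. Benefits ramp up as AI spreads across departments.
initial_cost = 10.0
yearly_net_benefits = [1.0, 3.0, 5.0, 6.0, 6.0]
discount_rate = 0.10  # assumed cost of capital

npv_two_years = net_present_value(initial_cost, yearly_net_benefits[:2], discount_rate)
npv_five_years = net_present_value(initial_cost, yearly_net_benefits, discount_rate)

print(f"NPV over 2 years: {npv_two_years:.2f}")
print(f"NPV over 5 years: {npv_five_years:.2f}")
```

With these made-up inputs the two-year view is negative and the five-year view is positive, which is exactly the trap of judging an enterprise-wide program on short-term efficiencies alone.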

Building Your Organizational Ark: Preparedness in the Age of AI

The shift to enterprise-wide AI isn't just about technology; it's about preparedness. It's about recognizing that a fundamental change is underway, a change as significant as the advent of electricity or the internet. Like Noah, we need to see beyond the immediate horizon and prepare for a future where AI is not just a tool, but an integral part of how we operate.

This isn't about fear; it's about conscious preparation. It's about building an "organizational ark" — a resilient, adaptable structure that can weather the coming wave of AI-driven transformation. This means:

- Education as a Foundation: Your organization needs to invest in understanding AI's capabilities and limitations. This isn't just for the IT department; it's for everyone.

- Continuous Learning: AI is not a static technology. Treat it as a collaborative partner, constantly evolving and requiring ongoing learning and adaptation.

- Ethical Engagement: Approach AI with mindfulness, intentionality and a strong ethical framework. This is crucial for building trust and avoiding unintended consequences.

- Practical Application: Don't just talk about AI; experiment with it. Encourage employees to explore AI tools in their daily work and share their findings.

- Community Connection: Share knowledge and experiences with other organizations. Learn from their successes and failures.

Consider the example of Tesla, a company that, much like Noah, anticipated a major shift — the transition to electric vehicles — long before it became mainstream. Their early preparation allowed them to lead the charge.

Or, look at Google DeepMind's AlphaFold project, which used AI to solve a decades-old problem in protein folding. This demonstrates the power of AI to tackle complex challenges and unlock new possibilities. Even a small marketing firm I know, by integrating AI-powered content generation, saw a 50% increase in productivity, not by replacing writers, but by empowering them. These are the kinds of transformations I am talking about.

Real-World Enterprise Examples

These are some of the case studies I came across in my AI specialization at Stanford.

- A Global Bank: Implemented an AI-powered fraud detection system across all its divisions, resulting in a 30% reduction in fraudulent transactions and significant cost savings.

- A Retail Giant: Used AI to personalize customer recommendations and optimize its supply chain, leading to a 15% increase in sales and a 10% reduction in inventory costs.

- A Manufacturing Company: Deployed AI-powered predictive maintenance across its factories, reducing downtime by 20% and improving overall equipment effectiveness.

Actionable Recommendations

- Form an AI Center of Excellence: Create a dedicated team responsible for driving the enterprise-wide AI plan.

- Develop a Data Strategy: Prioritize data quality, accessibility and governance.

- Invest in Scalable Infrastructure: Build a platform that can support thousands of AI agents.

- Embrace MLOps: Implement processes and tools for managing the entire AI lifecycle.

- Start With High-Impact Projects: Demonstrate the value of AI early on to secure buy-in and build momentum.

- Foster a Culture of Experimentation: Encourage employees to explore new AI applications and share their learnings.

Implementation Frameworks

A phased approach is recommended, starting with a pilot project, followed by expansion to other departments and ultimately achieving full integration. Key stages include:

- Assessment: Identify AI opportunities and assess organizational readiness.

- Pilot: Develop and deploy a high-impact AI project.

- Scaling: Expand AI to other departments and use cases.

- Integration: Embed AI into core business processes.

- Optimization: Continuously monitor and improve AI performance.
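The stages above can be treated as gates rather than calendar dates. Here is a minimal sketch of that idea, assuming each stage has a short list of exit criteria that must be met before moving on. The criteria names are hypothetical placeholders; your organization would define its own.

```python
from enum import IntEnum


class Stage(IntEnum):
    ASSESSMENT = 1
    PILOT = 2
    SCALING = 3
    INTEGRATION = 4
    OPTIMIZATION = 5


# Hypothetical exit criteria per stage (illustrative only).
EXIT_CRITERIA = {
    Stage.ASSESSMENT: {"opportunities_ranked", "readiness_reviewed"},
    Stage.PILOT: {"pilot_in_production", "value_measured"},
    Stage.SCALING: {"second_department_live"},
    Stage.INTEGRATION: {"core_process_embedded"},
}


def next_stage(current, completed):
    """Advance one stage only when every exit criterion for the current stage is met."""
    if current is Stage.OPTIMIZATION:
        return current  # the final stage is ongoing, so there is nothing to advance to
    missing = EXIT_CRITERIA[current] - set(completed)
    if missing:
        return current  # stay put until the gaps are closed
    return Stage(current + 1)


# A pilot that is live but has not yet measured its value stays in the pilot stage.
still_pilot = next_stage(Stage.PILOT, {"pilot_in_production"})
now_scaling = next_stage(Stage.PILOT, {"pilot_in_production", "value_measured"})
```

Writing the gates down this explicitly forces the conversation about what "done" means for a pilot before anyone starts talking about scaling it.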

Risk Mitigation Strategies

- Data Security: Implement robust security measures to protect sensitive data.

- Bias Detection: Regularly audit AI models for bias and ensure fairness.

- Transparency: Make AI decision-making processes transparent and explainable.

- Compliance: Adhere to relevant regulations and ethical guidelines.

- Redundancy: Build redundancy into AI systems to prevent single points of failure.

- Human-Centered Approach: Ensure that AI deployments prioritize human well-being and ethical considerations, fostering a collaborative relationship between humans and AI.
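As one example of what a regular bias audit might look like, the sketch below computes a demographic parity gap: the difference in approval rates between groups in a sample of model decisions. This is only one of several fairness metrics, and the sample data, group labels and 0.2 review threshold are assumptions chosen for illustration.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns the approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group label, model approved?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

REVIEW_THRESHOLD = 0.2  # assumed policy value, not an industry standard

gap = parity_gap(sample)
needs_review = gap > REVIEW_THRESHOLD
```

A gap above the threshold does not prove the model is unfair, but it is a clear signal that a human should look at the model and its training data before it is rolled out further.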

Future Scenario Planning

- AI-Driven Automation: What jobs will be automated, and how will the workforce adapt?

- Hyper-Personalization: How will AI transform customer experiences?

- Algorithmic Competition: How will AI change the competitive landscape?

- The Rise of AI-as-a-Service: How will organizations access and utilize AI capabilities?

- Ethical Dilemmas: What new ethical challenges will AI present?

- The Conscious Technology Revolution: How will we ensure that AI is used to enhance human potential, not diminish it? How do we foster a future where AI serves humanity's best interests?

Thriving in the Age of AI

The transition from isolated AI tools to organization-wide intelligence is a strategic imperative for businesses seeking to thrive in the age of AI. It requires a fundamental shift in mindset, a commitment to building scalable infrastructure and a robust governance framework. The "log of resources" principle highlights the exponential value that can be unlocked through widespread AI deployment. By embracing a phased, strategic approach and preparing like Noah for the coming flood, organizations can harness the transformative power of AI and gain a significant competitive advantage.

The future belongs to organizations that can think at scale and prepare with foresight. This isn't about deploying a few clever algorithms; it's about building a truly intelligent enterprise, where AI is woven into the fabric of the organization, much like Noah built his ark plank by plank. It's a challenging journey, but the potential rewards — increased efficiency, innovation and resilience — are too significant to ignore.

The wave of AI is coming. It's not a destructive deluge, but a surge of unprecedented technological possibility. Will your organization be prepared? Will you be on board, or left behind? Breathe. Learn. Grow. The time to act is now. Start building your AI-powered future today.

Frequently Asked Questions

What's the biggest obstacle to scaling AI?

Mindset. Many organizations are still stuck in a traditional, siloed way of thinking. Overcoming this requires strong leadership and a clear vision for the future.

How do we get started?

Begin with a high-impact pilot project that demonstrates the value of AI. This will help secure buy-in and build momentum for wider deployment.

What about the cost?

Large-scale AI deployment is an investment, but the potential ROI is significant. Focus on long-term strategic advantages, not just short-term cost savings.

How do we address ethical concerns?

Develop a strong governance framework that addresses data privacy, security, bias and transparency.

What skills do we need?

You'll need data scientists, AI engineers and MLOps specialists. But you'll also need to upskill existing employees and foster a culture of AI literacy across the organization.
