Key Takeaways From OpenAI's State of Enterprise AI Report

- Enterprises are moving from pilots to operational AI.

- A major gap is forming between AI-ready and AI-lagging organizations.

- Confidence in AI is rising faster than operational preparedness.

- Governance, skills shortages and fragmented data remain top blockers.

OpenAI's new State of Enterprise AI report comes at a pivotal moment, and the findings show businesses are accelerating faster than their foundations can support.

The report draws on input from more than 1,000 enterprise leaders across six markets, and the picture it paints is both urgent and uneven: although AI investment has skyrocketed, operational readiness, governance and measurable outcomes remain a patchwork.

What’s emerging is a new enterprise divide: the businesses that are building AI as an operating layer, and those still treating it as a tool.

Table of Contents

- Finding #1: Enterprise AI Entered Its Maturity Curve

- Finding #2: AI Adoption Accelerated, But Confidence Outpaces Readiness

- Finding #3: Productivity Gains Mask Deeper Reliability Issues

- Finding #4: AI Is the New Coordination Layer

- Finding #5: Where AI Efforts Stall Inside the Enterprise

- Finding #6: The Skills Gap Widens as AI Moves to the Core

- Finding #7: Risk and Trust Outweigh Performance Concerns

- Finding #8: The Emerging Playbook for AI Leaders

- Key Questions Moving Forward

Finding #1: Enterprise AI Entered Its Maturity Curve

For the first time, enterprise AI adoption is no longer dominated by pilots or proofs of concept. Instead, AI is increasingly embedded into core operational workflows and decision infrastructure. The question is no longer whether enterprises are experimenting with AI, but whether they are prepared to operate it reliably at scale.

Across OpenAI's report, AI is described less as a tool and more as a foundational capability. Enterprises are moving beyond ad hoc deployments to more structured approaches by adopting:

- Standardized platforms

- Shared governance models

- Production-grade architectures

At the same time, the report reveals a widening gap between businesses that are structurally ready for AI and those that are not.

AI-Ready Enterprises vs. AI-Lagging Businesses

| Trait | AI-Ready Enterprises | AI-Lagging Businesses |
|---|---|---|
| Data Foundations | Invested in data foundations | Constrained by fragmented data |
| Ownership | Set up cross-functional ownership | Have unclear accountability |
| Operational Discipline | Have operational discipline | Lack systems for continuous AI-driven decisioning |

As AI becomes more deeply integrated into enterprise operations, this divide is no longer theoretical. It directly influences speed, cost, risk and competitive position.

This maturity curve sets the context for everything that follows in the report. Enterprise AI is no longer defined by ambition alone. It is increasingly defined by execution.

Finding #2: AI Adoption Accelerated, But Confidence Outpaces Readiness

Enterprise enthusiasm for AI has clearly crossed a threshold. Among the report's signals:

- AI budgets are increasing, often by more than 10%

- Pilots are moving into production at unprecedented speed

- Productivity gains are concentrated in copilots and chat interfaces

At the same time, confidence is running ahead of capability. While leaders report improved efficiency and faster decision-making, far fewer businesses have the foundations required to run AI reliably at scale. Common challenges include:

- Inconsistent alignment across teams

- Fragmented data pipelines

- Unclear ownership of AI behavior

- Governance frameworks lacking rigor

Finding #3: Productivity Gains Mask Deeper Reliability Issues

"Enterprises are significantly ahead in employee productivity and team workflows, where copilots and chat-based tools are already delivering clear value," Vaibhav Bajpai, senior director, group engineering manager of Core AI at Microsoft, told VKTR.

"Where many are still unprepared is making AI reliable enough to run parts of the business. Moving from people using AI to systems operating on AI exposes gaps in evaluation, monitoring and ownership that most organizations haven’t fully solved yet."

The AI Optimism Paradox: Confidence vs Readiness

AI investment and confidence are rising faster than the operational foundations required to run AI safely and reliably at scale.

| Where AI Confidence Is High | Where Readiness Lags | Why It Matters |
|---|---|---|
| AI is boosting productivity | Mostly limited to copilots and chat tools | Surface gains don't translate into durable advantage |
| Pilots are moving into production | No clear owner for AI outcomes or failures | Deployed systems lack accountability |
| AI can scale across teams | Fragmented data and weak orchestration | Agents can't act reliably without shared context |
| Governance is "in place" | Evaluation and monitoring are inconsistent | Trust erodes when behavior can't be proven safe |
| AI will run workflows end-to-end | Limited oversight and escalation paths | Humans can't supervise systems they don't understand |

AI is delivering value at the surface layer, helping individuals work faster, but the systems underneath are not yet prepared to support AI as a true operational layer. As organizations move from people using AI to businesses running on AI, this readiness gap becomes impossible to ignore.

Finding #4: AI Is the New Coordination Layer

For some enterprises, AI is no longer something bolted onto existing tools. It's become an operating layer that coordinates work across systems, teams and processes. Businesses are increasingly expecting AI to handle end-to-end workflows, moving from signal detection to decisioning to action without constant human intervention.

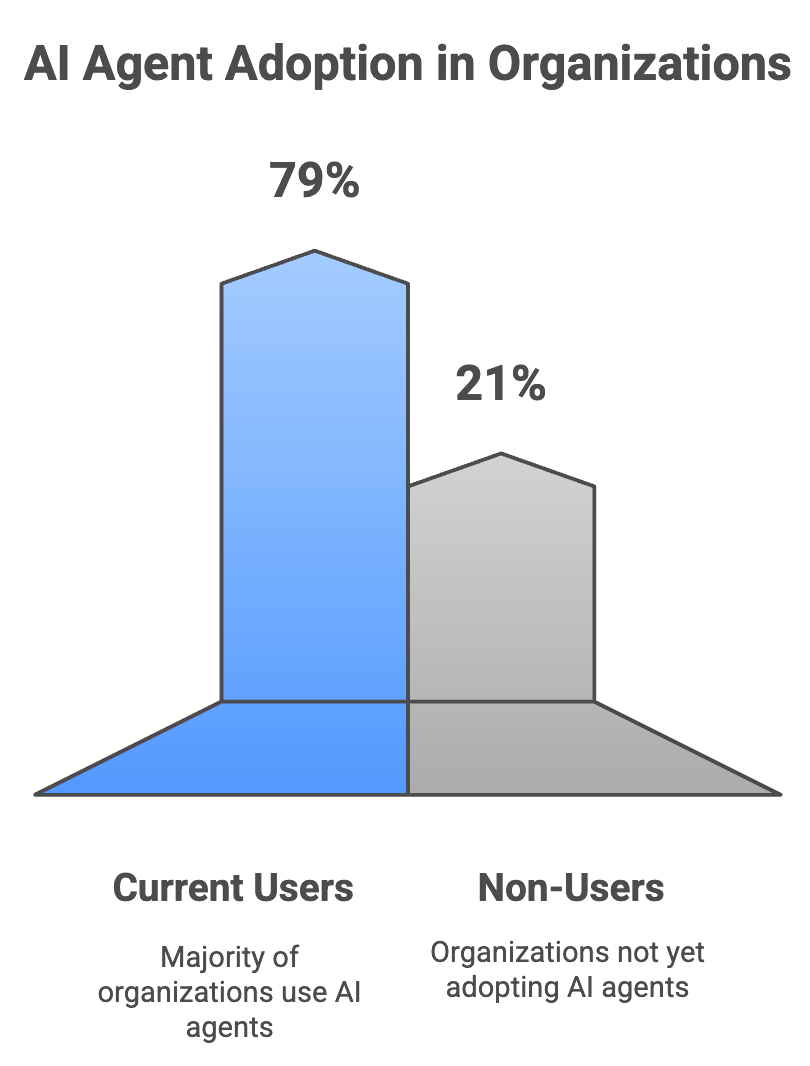

The rise of AI agents accelerates this transition, with 79% of organizations surveyed saying they already use agents. Instead of supporting isolated tasks, AI systems now triage requests, route work, monitor outcomes and initiate follow-up actions across multiple platforms. These agents operate continuously, not as one-off assistants, which changes expectations around reliability and accountability. As autonomy increases, so does the need for clear ownership of how decisions are made and how systems behave over time.

Processes that were once human-first are being redesigned around AI coordination, with people stepping in for escalations and high-stakes decisions. In practice, this creates AI-native processes where automation, data and human oversight are designed together from the start, rather than layered on afterward.
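To make the escalation pattern concrete, here is a minimal Python sketch of a routing gate that lets an agent act on routine work and hands high-stakes or uncertain decisions to a person. It is illustrative only: the `ProposedAction` fields, the `route` function and both thresholds are hypothetical, and the sketch assumes the agent can attach a confidence score and an estimated business impact to each action it proposes.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    """An action an AI agent wants to take (hypothetical schema)."""
    description: str
    confidence: float  # assumed 0..1 score attached by the agent or an evaluator
    impact_usd: float  # assumed estimate of the business impact of acting


def route(action: ProposedAction,
          min_confidence: float = 0.85,
          max_auto_impact: float = 10_000.0) -> str:
    """Return whether the agent may act on its own or must escalate to a person.

    Both thresholds are illustrative defaults, not recommendations.
    """
    # High-stakes work goes to a human regardless of how confident the model is.
    if action.impact_usd > max_auto_impact:
        return "escalate: high-stakes decision"
    # Routine work still escalates when confidence is too low to act alone.
    if action.confidence < min_confidence:
        return "escalate: low confidence"
    return "auto-execute"


if __name__ == "__main__":
    print(route(ProposedAction("Reissue invoice", 0.95, 120.0)))                # auto-execute
    print(route(ProposedAction("Cancel enterprise contract", 0.97, 250_000.0)))  # escalate: high-stakes decision
    print(route(ProposedAction("Close ticket as duplicate", 0.60, 0.0)))         # escalate: low confidence
```

Checking impact before confidence means a high-stakes action reaches a person even when the model is very sure of itself, which keeps humans in charge of the decisions the paragraph above reserves for them.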

As AI becomes embedded at this level, the competitive advantage shifts. Success is no longer defined by which tools a company adopts, but by how well it redesigns workflows to operate with AI at the center.

Finding #5: Where AI Efforts Stall Inside the Enterprise

Despite growing confidence in AI’s potential, organizational friction remains the most persistent obstacle to meaningful progress. Key challenges that slow adoption and limit impact include:

- Fragmented data environments

- Deeply embedded legacy systems

- Siloed ownership structures

Even when AI tools perform well in isolation, they often struggle to operate effectively across disconnected systems and teams.

AI initiatives frequently span multiple functions, yet responsibility for outcomes is often diffuse. When no single team owns end-to-end performance, responsibilities like evaluation, governance and remediation fall through the cracks. This creates risk not only for reliability and trust, but also for scaling AI beyond pilot programs.

OpenAI's report makes a clear distinction between adopting AI and transforming with AI. The latter requires businesses to rethink how decisions are made, how work flows across departments and who is accountable when automated systems act. In this framing, AI transformation is less about deploying new models and more about aligning people, processes and governance around shared outcomes.

Enterprises that fail to address these structural issues risk stalling in a middle ground where AI exists everywhere, but drives limited change.

Finding #6: The Skills Gap Widens as AI Moves to the Core

Even as AI adoption accelerates, organizations report a skills gap that is widening rather than shrinking.

Many leaders now acknowledge that they are underprepared in areas that matter most at scale, particularly AI governance, security, evaluation and operational oversight. While technical teams may understand how models function, far fewer employees understand how to assess reliability, detect failure modes or intervene when automated systems behave unexpectedly. Even when this expertise exists, it is often siloed or poorly communicated across teams. These gaps become more visible as AI moves closer to core operations, and they are no longer confined to technical teams.

Culture is the hardest problem to solve, according to Brady Lewis, senior director of AI innovation at Marketri. "Not because people resist new technology, but because AI implies new uncomfortable conversations. Who is liable for an incorrect recommendation made by AI? Which automatable recommendations are still considered to be safe?"

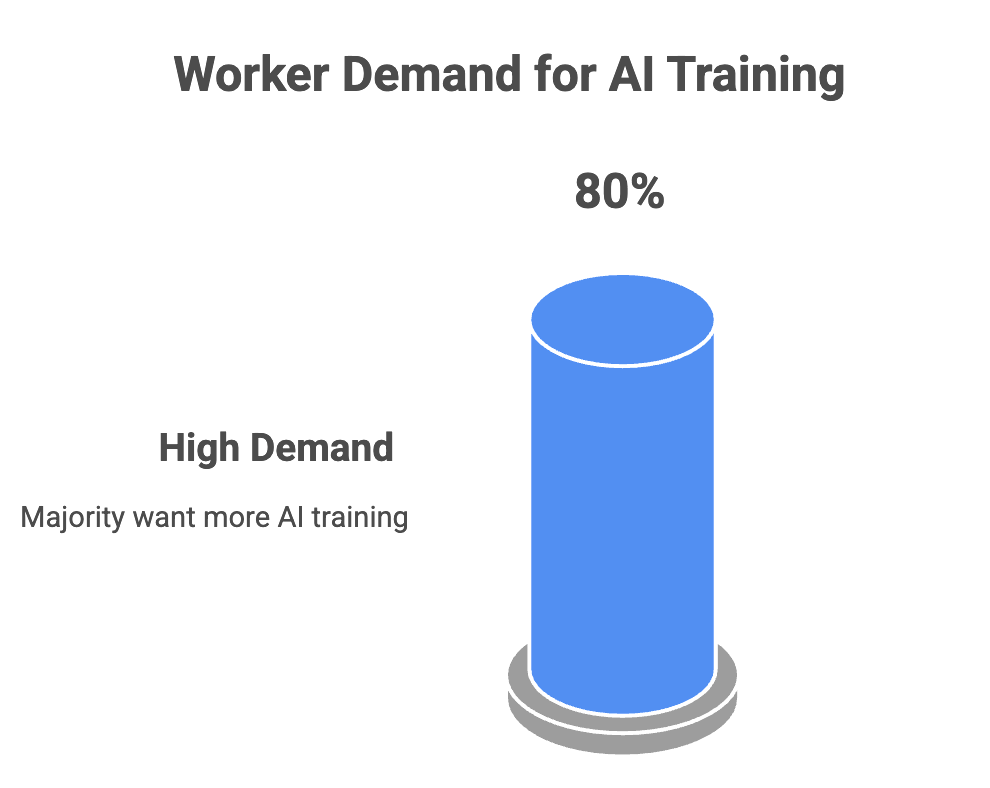

Unfortunately, training has not kept pace with deployment. In many companies, AI tools roll out quickly in an attempt to boost productivity, but structured enablement often lags. Employees are expected to work alongside AI systems without clear guidance, despite 80% of workers saying they want more AI training.

Other Common Questions on the AI Skills Gap

As AI shifts from isolated tools to operating infrastructure, the most in-demand roles are not traditional ML engineers. Enterprises increasingly need:

- AI Workflow Designers, who re-engineer processes around automation and agents.

- AI Reliability & Evaluation Specialists, responsible for failure mode testing, drift detection and model performance audits.

- AI Governance Leads, who unify risk, compliance and technical oversight.

- AI Product Owners, who manage cross-functional outcomes when AI systems influence entire business processes.

These roles didn’t exist in most companies 18–24 months ago, but they are quickly becoming foundational.

Most skills gaps surface only during incidents, when a missing escalation path, unclear ownership or an unmonitored workflow suddenly becomes visible.

Companies can get ahead of this by:

- Running AI tabletop simulations (similar to cybersecurity drills).

- Mapping every automated decision to an accountable owner.

- Building process heat maps to find where human oversight is weak.

- Auditing workflows for points where AI acts independently without clear fallback conditions.

This proactive approach reveals gaps long before real harm occurs.
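Two of the practices above, mapping each automated decision to an accountable owner and auditing for points where AI acts without a fallback, can start as a simple inventory check. The Python sketch below assumes a hypothetical registry schema (an `AutomatedDecision` record with `owner`, `fallback` and `human_review` fields) and a hypothetical `audit` helper; in practice such an inventory would more likely live in a governance or workflow tool than in code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AutomatedDecision:
    """One automated decision point in a workflow (hypothetical schema)."""
    name: str
    owner: Optional[str]        # accountable person or team, if one is assigned
    fallback: Optional[str]     # what happens when the AI is unsure or fails
    human_review: bool = False  # whether a person reviews outputs before they take effect


def audit(decisions: list[AutomatedDecision]) -> dict[str, list[str]]:
    """Flag decisions with no accountable owner, and decisions that act
    independently (no human review) without any fallback condition."""
    findings: dict[str, list[str]] = {"no_owner": [], "no_fallback": []}
    for d in decisions:
        if not d.owner:
            findings["no_owner"].append(d.name)
        if not d.human_review and not d.fallback:
            findings["no_fallback"].append(d.name)
    return findings


if __name__ == "__main__":
    registry = [
        AutomatedDecision("refund_approval", owner="Finance Ops", fallback="route to agent queue"),
        AutomatedDecision("ticket_routing", owner=None, fallback="default queue"),
        AutomatedDecision("price_adjustment", owner="Pricing", fallback=None),
        AutomatedDecision("contract_summary", owner=None, fallback=None, human_review=True),
    ]
    print(audit(registry))
    # {'no_owner': ['ticket_routing', 'contract_summary'], 'no_fallback': ['price_adjustment']}
```

A check like this will not find gaps nobody has written down, which is why it complements rather than replaces tabletop simulations.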

When used intentionally, AI certifications can create a shared foundation of knowledge across teams — something many enterprises don’t currently have. Certifications offer value in three areas:

- Baseline Literacy: They ensure employees across functions understand fundamental concepts like model behavior, hallucinations, bias and evaluation practices.

- Common Vocabulary: Certifications help technical and nontechnical teams speak the same language, speeding up decision-making and reducing miscommunication in cross-functional workflows.

- Structured Upskilling Pathways: They provide a clear roadmap for employees who want to grow into AI-related roles such as AI product owner, evaluator or workflow designer.

While certifications alone won’t close the skills gap, they jump-start organizational alignment and give employees a clear starting point for deeper, role-specific training.

Finding #7: Risk and Trust Outweigh Performance Concerns

Concerns around AI risk are beginning to outweigh concerns about performance. Many leaders are less worried about whether AI can deliver value and more concerned about whether it can be trusted to operate safely, consistently and in alignment with business and regulatory expectations. The report points to a growing recognition that speed without safeguards creates exposure rather than advantage.

Despite this awareness, most enterprises still lack standardized guardrails for AI deployment. In many cases, AI systems are evaluated during pilot phases but receive far less scrutiny once they move into production, creating blind spots around model drift, bias, reliability and unintended outcomes.

This gap is driving demand for stronger evaluation frameworks — like the NIST AI Risk Management Framework — and governance infrastructure. Leaders are looking for ways to continuously test AI behavior, monitor decision quality and verify that systems behave as intended over time. Provenance and traceability are becoming essential as well, especially as models ingest diverse data sources and generate outputs that influence high-impact decisions.
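Continuous testing can start with something as basic as comparing live evaluation results against what the system achieved during its pilot. The Python sketch below tracks a rolling pass rate and flags a problem when it falls more than a fixed tolerance below the pilot baseline. This is a deliberately simple stand-in for formal drift detection, not a method from the report or from the NIST framework, and the `DecisionQualityMonitor` class, window size and tolerance are all hypothetical.

```python
from collections import deque


class DecisionQualityMonitor:
    """Tracks a rolling pass rate for evaluated AI outputs and flags a drop
    below the pass rate measured during the pilot (hypothetical design)."""

    def __init__(self, baseline_pass_rate: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline_pass_rate        # pass rate observed during pilot evaluation
        self.tolerance = tolerance                # allowed drop before flagging (illustrative value)
        self.results = deque(maxlen=window)       # most recent pass/fail outcomes only

    def record(self, passed: bool) -> None:
        """Store the outcome of one evaluated production output."""
        self.results.append(passed)

    def pass_rate(self) -> float:
        """Share of recent outputs that passed; falls back to the baseline when empty."""
        return sum(self.results) / len(self.results) if self.results else self.baseline

    def drifted(self) -> bool:
        # Only judge once the window is full, so a few early failures don't trigger alerts.
        if len(self.results) < self.results.maxlen:
            return False
        return self.pass_rate() < self.baseline - self.tolerance


if __name__ == "__main__":
    monitor = DecisionQualityMonitor(baseline_pass_rate=0.92, window=50, tolerance=0.05)
    for i in range(50):
        monitor.record(i % 5 != 0)  # every fifth output fails review, so 80% pass
    print(monitor.pass_rate(), monitor.drifted())  # 0.8 True
```

Because the window only keeps recent outcomes, the check reflects current behavior in production rather than averaging it with results from months earlier.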

Finding #8: The Emerging Playbook for AI Leaders

A clear pattern has emerged: a small but growing group of enterprises is moving beyond experimentation and into sustained AI advantage by treating AI as infrastructure. These AI leaders are differentiating with:

- Deep data integration

- Workflow redesign around AI

- Shared cross-functional ownership

- Continuous governance infrastructure

- Controlled agentic workflows with human-in-the-loop escalation

Key Questions Moving Forward

Leaders must increasingly prioritize:

- Data infrastructure and interoperability

- Governance and monitoring frameworks

- Skills development across business functions

- Workflow redesign around agentic systems

Beyond technical ML roles, enterprises need:

- AI evaluation and oversight specialists

- Workflow designers comfortable with automation

- Operational leaders who can supervise AI-driven decisions

- Governance professionals who can detect, escalate and remediate failures

The skills gap now affects the entire organization, not just engineers.

Expect increased requirements for transparency, documentation, traceability, monitoring and model-level accountability.

In the US, many states currently have their own legislation governing organizational AI use, and the federal government is also pursuing nationwide AI regulation.

Additionally, those doing business in the European Union — even if they are not located there — may need to adhere to laws like the EU AI Act and the General Data Protection Regulation (GDPR).