We stand at a fascinating crossroads.

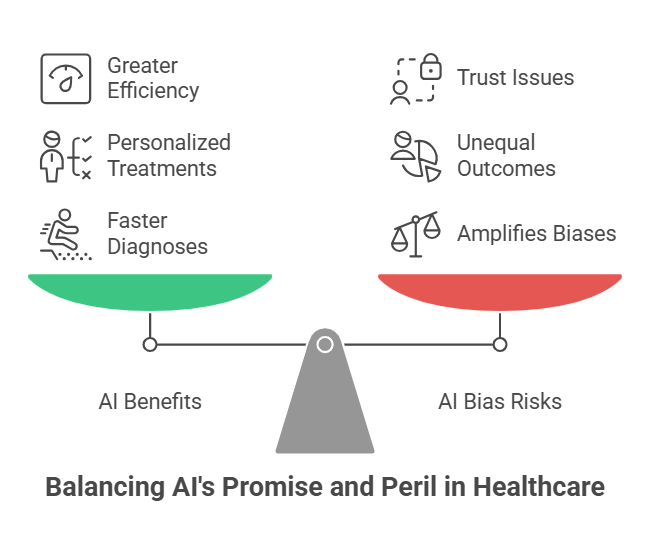

On one hand, we see the enormous potential for AI to revolutionize how we deliver medical care, promising faster diagnoses, personalized treatments and greater efficiency. On the other hand, we face a significant challenge: the potential for AI to perpetuate and even amplify existing biases, leading to unequal healthcare outcomes for some and putting at risk the trust we need in these systems to provide for the health needs of our communities.

This isn't just a technological hurdle; it's a human one.

I believe this is a moment when we must ask not just whether we can use AI but whether we should and, if so, how to do it fairly. I've seen this issue firsthand during my specialization in AI and Healthcare at Stanford, where I learned that AI, while powerful, is not immune to the flaws of the data it learns from. We'll look at how biases creep into algorithms, their impact on real patients and what we must do to build truly equitable AI systems in healthcare.

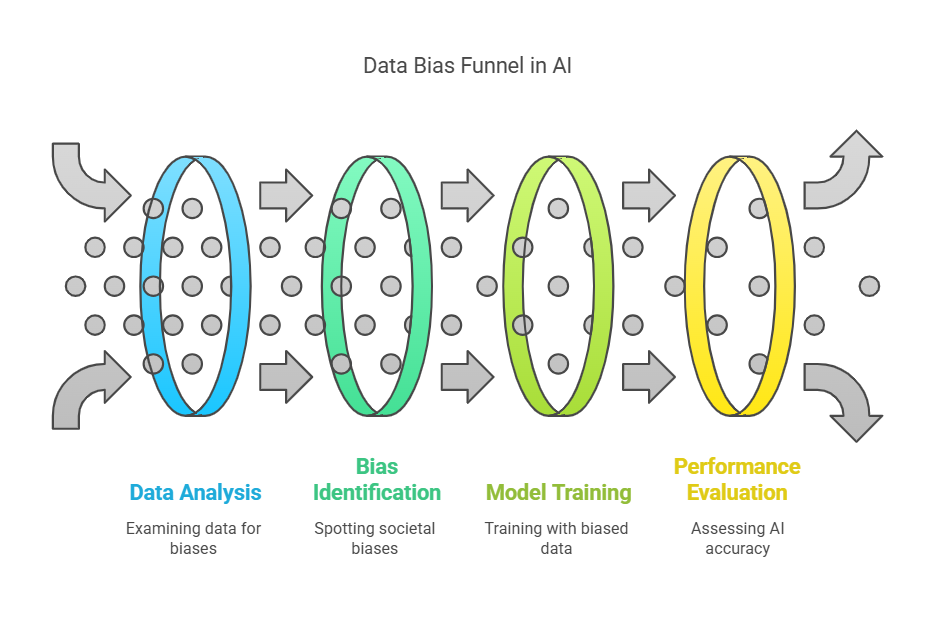

The Hidden World of Biased Data

It all begins with data. AI algorithms learn from the information fed into them, and if that data reflects existing societal biases, the AI will, too. Think of it as training a student with textbooks that only feature examples from one demographic; it will limit their capacity to comprehend diversity.

While at Stanford, I was involved in a project that highlighted this directly. We were looking at an AI system designed to predict patient risk for certain conditions, and we noticed that the algorithm performed less accurately for minority populations. It became clear that the data we were using to train the model was not fully representative, a gap that needs to be filled.

For example, in a 2024 study published in Nature Medicine, researchers found that AI algorithms used to predict hospital readmission rates were often trained on data in which minority groups were underrepresented. As a result, the algorithms underestimated the risk of readmission for these groups, which in turn affected the treatment of patients at higher risk of readmission.

The problem comes down to the data used to train these AI systems and how well that data represents diverse populations.

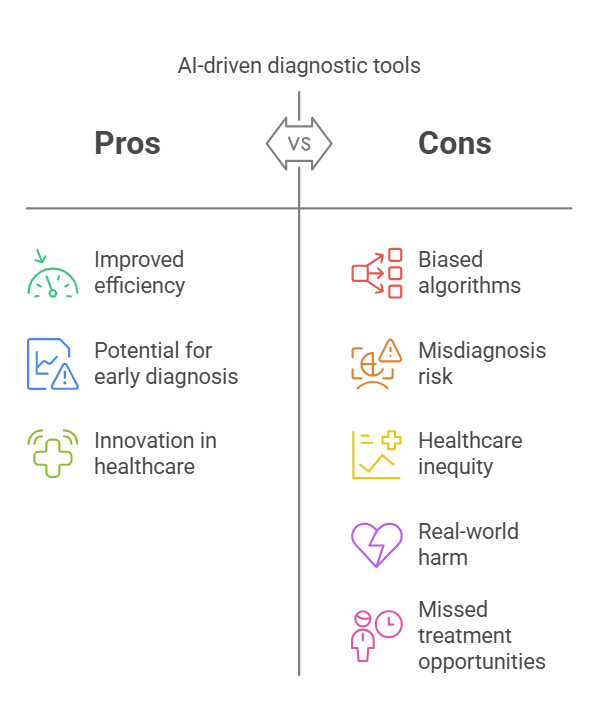

The Consequences: When Algorithms Discriminate

The consequences of biased algorithms are not abstract; they are real and impact people's lives daily.

I recall a case from 2023, discussed during my specialization, in which an AI-driven diagnostic tool trained primarily on images of skin conditions on lighter skin tones was less accurate in diagnosing skin cancer in patients with darker skin. This kind of misdiagnosis isn't just an error; it's a missed opportunity for early intervention. It shows that in our haste to innovate, we may forget to include the whole population and, as a result, create a two-tiered healthcare system.

The real cost comes when individuals are not treated promptly, and it is borne not only by the patients but also by the entire system. During my specialization, I saw how these flaws in AI systems could lead to real-world harm, which makes this issue all the more personal.

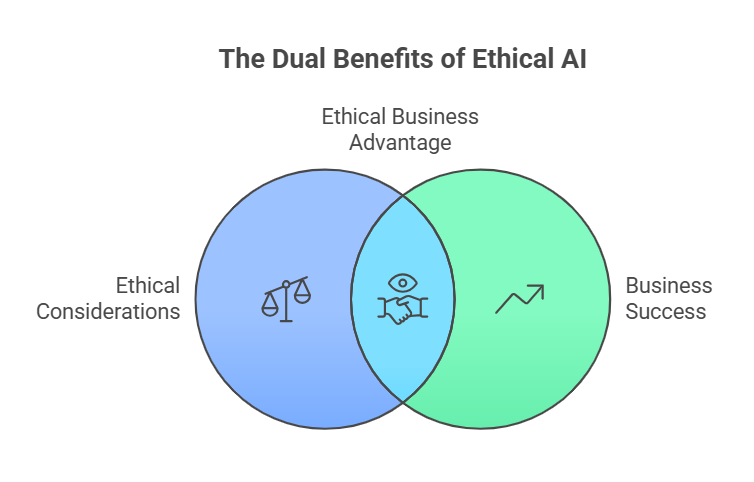

A Business Case for Fairness

Now, some may see this as primarily an ethical concern, but I see it as a critical business matter as well.

Think of the long-term effects of not creating a trustworthy system. No one will want to use it. Organizations that adopt biased AI risk damaging their reputations, eroding public trust and even facing legal challenges.

In a 2024 study in JAMA, the cost of these disparities was calculated to be billions of dollars. On the flip side, businesses that prioritize fairness build a foundation for future success. They are more likely to attract patients, talent and investors who value ethical practices. It's not only the right thing to do; it's the smart thing to do.

I saw this principle in action at Stanford, where the emphasis was on creating ethical AI as a prerequisite for technological advancement.

How to Build Fairer Algorithms

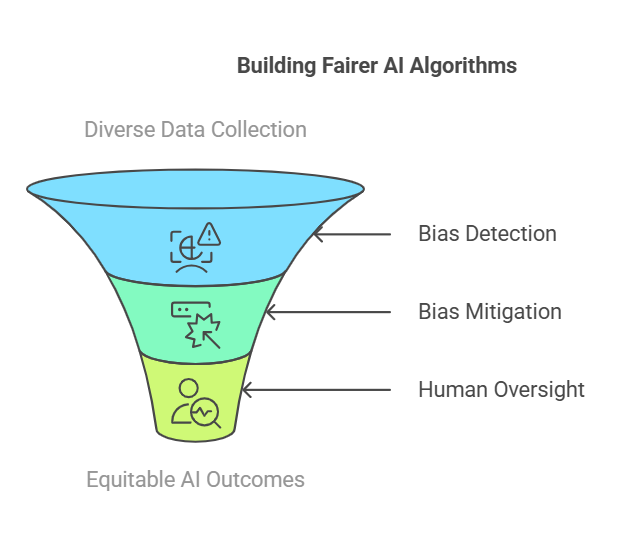

So, how do we fix this? It requires a multi-pronged approach.

- We must collect diverse and representative datasets that accurately reflect the populations served.

- We need to use techniques to detect and mitigate algorithm bias during development, a type of "ethical auditing."

- Last, and maybe most importantly, we must maintain a "human-in-the-loop" approach where clinicians and medical professionals are part of the AI decision-making process, acting as checks and balances.
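The "ethical auditing" step in the list above can be made concrete with a small check. The following is a minimal sketch, not a production audit: it assumes we have, for each patient, a demographic group label, the actual outcome and the model's prediction, and it compares the false negative rate (missed cases) across groups. The function name, the group labels and the records are all hypothetical and invented for illustration.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Return {group: share of actual positives the model missed}.

    `records` is an iterable of (group, actual, predicted) triples,
    where actual and predicted are 1 (condition present) or 0.
    Groups with no actual positives are left out of the result.
    """
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 1:
            positives[group] += 1
            if predicted == 0:
                missed[group] += 1
    return {group: missed[group] / positives[group] for group in positives}


# Hypothetical audit data, invented purely for illustration.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 1, 0), ("B", 1, 1), ("B", 1, 1), ("B", 0, 0),
]

rates = false_negative_rate_by_group(records)
# Gap between the worst-served and best-served group.
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups does not prove the model is unfair on its own, but it is the kind of signal that should prompt a closer look at the training data and a conversation with clinicians.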

This is not an impossible task; it requires a concerted effort and a commitment to ensuring equitable outcomes. At Stanford, we worked on many projects that involved data scientists and healthcare professionals working closely together to validate these algorithms, a crucial practice.

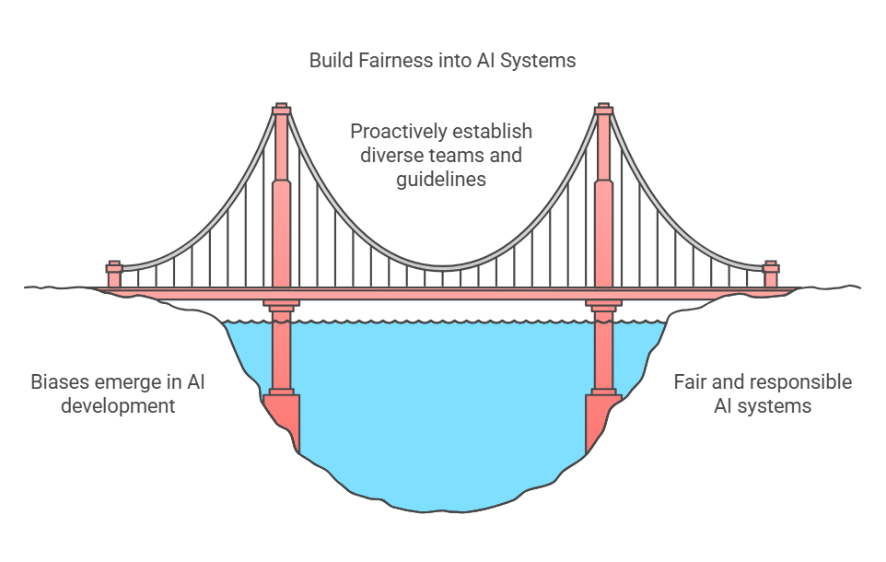

Moving From Reactive to Proactive Measures

We can't wait for biases to emerge and then try to fix them. We need to be proactive, building fairness into our AI systems from the ground up. This means building teams with diverse backgrounds and perspectives, establishing clear ethical guidelines and developing frameworks to continually assess and improve the fairness of AI algorithms.

This is where leadership and strategy come in, not only from tech professionals but also from clinicians and executives who know the complexities of healthcare. In 2023, Harvard Business Review published an article emphasizing the need for leaders to create a safe environment that promotes the discussion of ethics and bias in the development process. In my health center, I observed how leadership actively advocated for these measures, creating a culture of responsible AI development.

The Opportunity for Revolution

I believe that we can use technological advancements to promote a more equitable healthcare system. It is not enough to not be racist; we need to be actively anti-racist and to correct systemic imbalances.

This is an opportunity to leverage AI to address some of the most complex challenges in healthcare and, frankly, any industry, but only if we do it right. By committing to fairness, we not only advance healthcare, we build systems that are just, inclusive and effective for everyone. My experiences during my specialization in AI and Healthcare at Stanford have solidified my belief that we are at a point where AI can either exacerbate existing problems or become a powerful tool for change.

The road to equitable AI in healthcare is not easy, but it is essential. It requires collaboration among data scientists, clinicians, policymakers, patients, executives and thought leaders. By focusing on transparency, accountability and fairness, we can create an AI ecosystem that improves healthcare for all, leaving no one behind. This is not only the right thing to do; it is also what the healthcare system needs to build a better future. My specialization at Stanford has made this commitment a personal and professional priority.

Frequently Asked Questions

How do I know if an AI algorithm is biased?

It can be tricky, but one way is to check the data used to train the algorithm. Was it representative of the populations it will be used on? Another way is to test the algorithm on diverse data sets and see if the outcomes differ for different groups.
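The first check described above, whether the training data is representative, can be sketched in a few lines. This is an illustrative example under stated assumptions: we assume each training record carries a group label and that we know each group's share of the population the tool will serve. The function name, the labels, the shares and the 10-point threshold are all hypothetical.

```python
from collections import Counter


def representation_gaps(training_groups, population_shares):
    """Return {group: training share minus population share}.

    A negative value means the group is underrepresented in the
    training data relative to the population the tool will serve.
    """
    if not training_groups:
        raise ValueError("training_groups must not be empty")
    counts = Counter(training_groups)
    total = len(training_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


# Hypothetical numbers, invented purely for illustration.
training_groups = ["A"] * 8 + ["B"] * 2      # 80% group A, 20% group B
population_shares = {"A": 0.5, "B": 0.5}     # served population is 50/50

gaps = representation_gaps(training_groups, population_shares)
# Flag groups that fall more than 10 percentage points short
# (the threshold is an arbitrary choice for this sketch).
underrepresented = sorted(g for g, gap in gaps.items() if gap < -0.1)
print(gaps, underrepresented)
```

A check like this only covers representation; the second check, comparing outcomes across groups on diverse test data, still needs to be run separately.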

What role do clinicians play in mitigating bias?

Clinicians bring a wealth of real-world experience. They can recognize when an algorithm is making a questionable decision. They also help identify sources of bias and advocate for fairness in developing and implementing AI systems.
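One simple way to give clinicians that role in practice is to escalate the algorithm's least certain outputs for closer review. The sketch below assumes the model outputs a probability between 0 and 1 and treats predictions close to 0.5 as uncertain. The function name, the case IDs, the probabilities and the 0.2 margin are hypothetical, and every output is still assumed to be advisory rather than a final decision.

```python
def needs_priority_review(probability, margin=0.2):
    """Return True when a prediction is too close to 0.5 to rely on.

    `margin` is an illustrative setting, not a clinical standard.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return abs(probability - 0.5) < margin


# Hypothetical (case ID, model probability) pairs, invented for illustration.
cases = [("case-1", 0.95), ("case-2", 0.55), ("case-3", 0.10)]

review_queue = [
    case_id for case_id, prob in cases if needs_priority_review(prob)
]
print(review_queue)
```

Uncertainty is only one trigger. Clinicians may also want to review confident predictions for groups where an audit has shown the algorithm to be less accurate.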

Are there any regulatory frameworks for AI fairness in healthcare?

Not many, but this is an area that is developing. Governments and regulatory bodies are beginning to look into standards for AI systems. These frameworks must be created and implemented.

Is it possible to make an algorithm completely unbiased?

Complete fairness is a challenging goal because biases can appear in subtle ways. However, we can always strive to minimize biases and improve the fairness of our systems. Many techniques exist for building fair and effective systems, but they require active work to maintain.

What can patients do to advocate for fairer AI?

Patients can ask questions, seek transparency and push for more diverse representation in the design and development of AI systems. They can also support organizations that prioritize ethical AI and demand fairness in the healthcare sector.
