Key Takeaways

- Extropic claims its new “probabilistic” hardware could run GenAI using far less energy than GPUs.

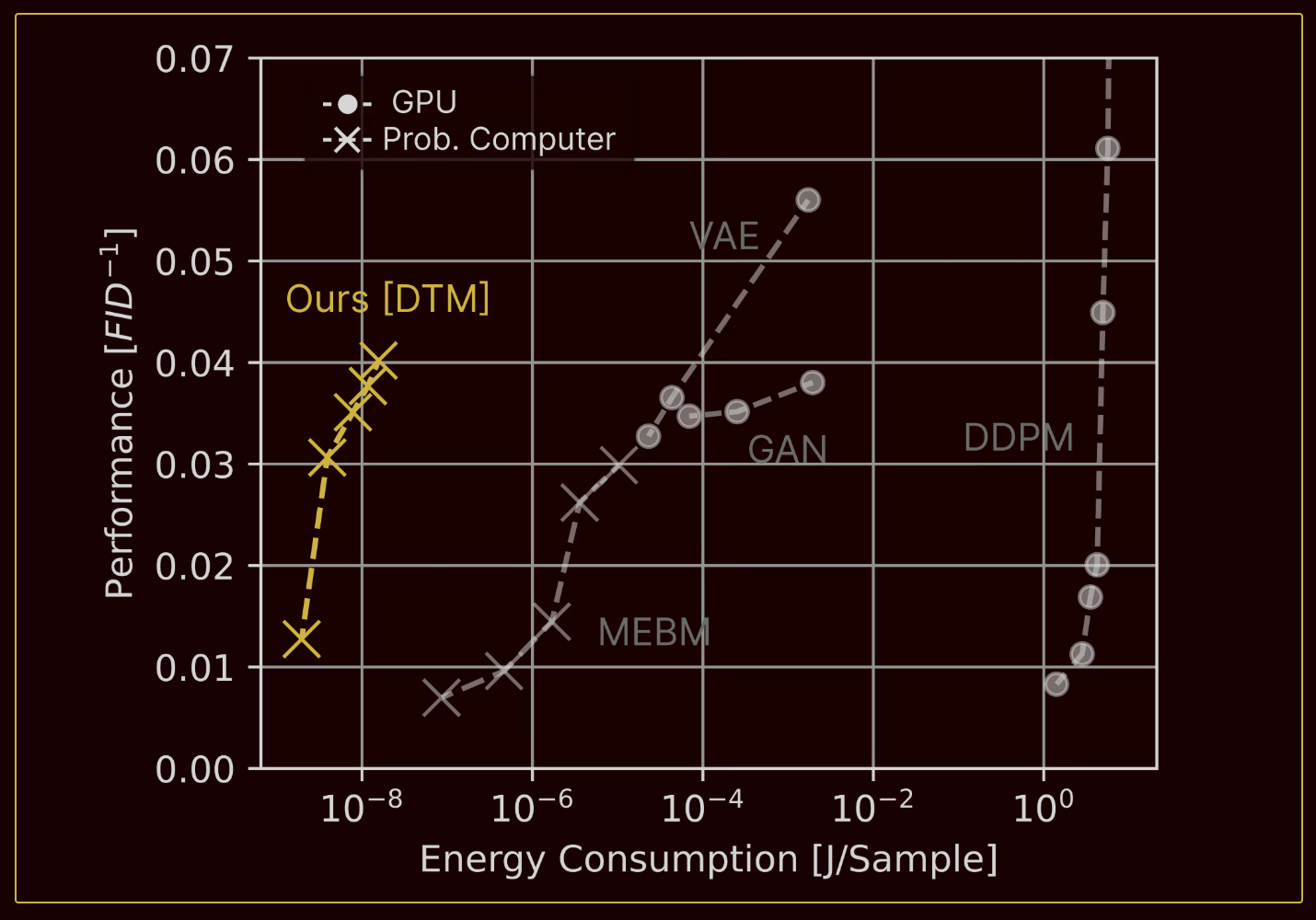

- Extropic's simulations suggest ~10,000x energy savings with its new Denoising Thermodynamic Model (DTM).

- Extropic said it plans to remove power constraints that limit AI scaling today.

Table of Contents

- AI Maxes Out the Power Grid

- Extropic Introduces First Scalable Probabilistic Computer

- How a TSU Works

- A New Generative AI Model: The Denoising Thermodynamic Model

- Why Extropic's Breakthrough Matters

- The Real Test: From Research to Build-Out

- Key Takeaways for Enterprise Leaders

- What Comes Next in AI Computing

- Frequently Asked Questions

AI Maxes Out the Power Grid

The AI boom has come with a physical constraint most consumers never see: electricity.

Data centers across the world are struggling to secure enough power to support AI training and inference. Three years ago, the startup Extropic bet that energy, not chips or data, would become the primary limit to AI scaling. In its latest announcement, the company says that bet has proven correct.

"With today’s technology, serving advanced models to everyone all the time would consume vastly more energy than humanity can produce."

- Extropic Officials

Rather than work on energy generation, which would require major infrastructure and government support, Extropic targeted another side of the problem: how to make AI itself more energy efficient.

Extropic Introduces First Scalable Probabilistic Computer

Modern AI is built on GPUs, a type of processor originally designed to render graphics. GPUs evolved into AI accelerators because they are good at matrix multiplication, the core mathematical operation behind neural networks. But, according to Extropic, GPUs are not energy efficient: most of their power goes into moving data around the chip rather than into the math itself.

Extropic claims to have designed an alternative: a new class of AI chip, a scalable probabilistic computer, built to sample directly from probability distributions instead of performing GPU-style matrix math.

According to the company, their hardware:

- Uses “orders of magnitude” less energy than GPUs

- Performs AI tasks by sampling from probability distributions rather than crunching large matrices

- Was fabricated and tested in silicon

- Runs a new kind of generative AI algorithm

This new device is called the Thermodynamic Sampling Unit (TSU).

Related Article: The Billion-Dollar Data Center Boom No One Can Ignore

How a TSU Works

TSUs function as probabilistic AI chips. Most AI chips today, including GPUs and TPUs, perform massive matrix multiplications to compute probabilities and then sample from them. Extropic claims its hardware skips the matrix multiplication entirely and samples directly from complex distributions.
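To make the contrast concrete, here is a minimal Python sketch of the conventional pipeline described above: a matrix-vector product produces scores, a softmax turns them into explicit probabilities, and only then is a sample drawn. The weights, the hidden vector, and the function names are hypothetical and chosen only for illustration; real models run the same steps with far larger matrices on GPU hardware.

```python
import math
import random


def matvec(weights, x):
    """Dense matrix-vector product: the arithmetic GPUs are built to accelerate."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def softmax(logits):
    """Turn raw scores into a probability distribution (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(weights, hidden, rng):
    """Conventional approach: matrix math -> explicit probabilities -> one sample."""
    probs = softmax(matvec(weights, hidden))
    token = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    return token, probs


rng = random.Random(0)
# Hypothetical 4-token "vocabulary" and 3-dimensional hidden state
weights = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5], [-1.0, 1.0, 1.0], [0.0, 0.5, 0.5]]
hidden = [1.0, 0.2, -0.3]
token, probs = sample_next_token(weights, hidden, rng)
print(token, [round(p, 3) for p in probs])
```

By Extropic's account, a TSU would not compute `probs` explicitly at all; the hardware itself would produce the sample.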

Key Claims About TSUs

Extropic states that TSUs:

- Are built from large arrays of probabilistic cores

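- Sample from energy-based models (EBMs), a class of machine learning (ML) models that assign lower “energy” to more probable configurations

- Use the Gibbs sampling algorithm, which repeatedly resamples each variable given the current values of the variables it interacts with, to combine many simple probabilistic circuits into complex distributions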

- Minimize energy by keeping communication strictly local — circuits only interact with nearby neighbors

This last point is critical. Extropic argued that the biggest energy drain in GPUs is data movement. By designing hardware where communication is entirely local, the TSU architecture avoids expensive long-distance wiring and voltage changes within the chip.

In other words: TSUs are built to be physically, and therefore energetically, optimized for probability, not arithmetic.
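The combination of an EBM, Gibbs sampling, and strictly local interactions can be illustrated in software. The sketch below assumes the simplest possible EBM: binary spins on a 2D grid with nearest-neighbor couplings (an Ising-style model). It is a textbook illustration, not Extropic's circuit design or its thrml library; `coupling`, `bias`, and every function name are hypothetical. Each cell's update reads only its four neighbors, and a checkerboard ordering means half the grid can be resampled at once.

```python
import math
import random

# Hypothetical toy EBM: spins s in {-1, +1} on an n x n grid with energy
# E(s) = -coupling * sum_{nearest-neighbor pairs} s_i * s_j - bias * sum_i s_i


def local_field(spins, r, c, coupling, bias):
    """Input to cell (r, c): its bias plus its four nearest neighbors, nothing further away."""
    n = len(spins)
    total = bias
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr, cc = r + dr, c + dc
        if 0 <= rr < n and 0 <= cc < n:
            total += coupling * spins[rr][cc]
    return total


def checkerboard_gibbs_sweep(spins, coupling, bias, rng):
    """One sweep of block Gibbs sampling, updating the grid in place.

    A cell of one color only touches cells of the other color, so all cells of a
    color are conditionally independent given the rest: the order within a color
    does not matter, and hardware could resample them all at the same time.
    """
    n = len(spins)
    for color in (0, 1):
        for r in range(n):
            for c in range(n):
                if (r + c) % 2 != color:
                    continue
                field = local_field(spins, r, c, coupling, bias)
                # Exact conditional p(s = +1 | neighbors) for the energy above
                p_up = 1.0 / (1.0 + math.exp(-2.0 * field))
                spins[r][c] = 1 if rng.random() < p_up else -1
    return spins


def neighbor_agreement(spins):
    """Average s_i * s_j over horizontal and vertical neighbor pairs (1.0 = fully aligned)."""
    n = len(spins)
    pairs = [spins[r][c] * spins[r][c + 1] for r in range(n) for c in range(n - 1)]
    pairs += [spins[r][c] * spins[r + 1][c] for r in range(n - 1) for c in range(n)]
    return sum(pairs) / len(pairs)


rng = random.Random(42)
grid = [[rng.choice((-1, 1)) for _ in range(16)] for _ in range(16)]
before = neighbor_agreement(grid)
for _ in range(200):
    checkerboard_gibbs_sweep(grid, coupling=0.6, bias=0.0, rng=rng)
after = neighbor_agreement(grid)
print(round(before, 2), round(after, 2))
```

Starting from random spins, the sweeps pull the grid toward the low-energy (aligned) configurations the EBM favors, even though no cell ever reads beyond its immediate neighbors. On Extropic's description, each `p_up` coin flip is the kind of job a pbit would do in hardware.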

How TSUs Compare to AI Chips

- GPUs/TPUs: Deterministic math engines optimized for matrix multiplication

- TSUs: Probabilistic chips that generate samples directly

- pbits: Transistor-based probabilistic bits that fluctuate between 0 and 1

- Goal: Deliver generative AI using far less energy than GPU-based systems

The Smallest Building Block: The pbit

At the core of the TSU is what Extropic calls a pbit.

- A traditional digital bit is always a 1 or a 0

- A pbit fluctuates randomly between 1 and 0

- The probability of being in either state is programmable

This makes a pbit essentially a hardware random number generator.
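A pbit's behavior can be mimicked in a few lines of Python. This sketch assumes the probability of reading 1 is a sigmoid of a programmable `bias` value, a common modeling choice in academic p-bit work; Extropic's actual circuit and its control parameters are not described here, so the `PBit` class and its fields are hypothetical.

```python
import math
import random


class PBit:
    """Software stand-in for a programmable probabilistic bit (hypothetical model).

    Each read returns 1 with probability sigmoid(bias) and 0 otherwise;
    changing `bias` is the "programming" step.
    """

    def __init__(self, bias=0.0, seed=None):
        self.bias = bias
        self._rng = random.Random(seed)

    @property
    def p_one(self):
        """Programmed probability of reading a 1."""
        return 1.0 / (1.0 + math.exp(-self.bias))

    def read(self):
        """Draw one random bit: unlike a digital bit, repeated reads can differ."""
        return 1 if self._rng.random() < self.p_one else 0


bit = PBit(bias=2.0, seed=7)
samples = [bit.read() for _ in range(10_000)]
print(round(bit.p_one, 3), sum(samples) / len(samples))
```

With `bias=0.0` the bit behaves like a fair coin; larger positive or negative values push it toward 1 or 0 without ever making it fully deterministic.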

A single pbit is not very useful. But, as Extropic noted, neither is a single NAND gate. Combine enough of them, and you get a functioning computer.

Extropic claims that:

- Existing academic pbit designs were not commercially viable because they required exotic components

- Extropic designed a pbit built entirely from transistors

- Its pbits use orders of magnitude less energy to generate randomness

- A hardware “proof of technology” has already validated the concept

Because pbits are small and energy-efficient, they can be packed tightly into a TSU. And because they are made from ordinary transistors, they can be integrated alongside standard computing circuitry.

A New Generative AI Model: The Denoising Thermodynamic Model

To show how their hardware can be used in real applications, Extropic also developed a new generative AI algorithm called the Denoising Thermodynamic Model (DTM).

DTMs are inspired by diffusion models, the same broad family used by image generators like Stable Diffusion. Like diffusion, a DTM starts with noise and iteratively transforms it into structured output.

However, Extropic states that DTMs are designed specifically for TSUs and are therefore far more energy-efficient.
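The general "noise in, structure out" idea can be illustrated with a toy that is deliberately much simpler than a DTM: classic Ising-model image denoising. A binary image is corrupted by random bit flips, and repeated Gibbs sweeps under a hand-written energy function (favoring smooth images that stay close to the noisy input) pull it back toward structure. This is not Extropic's algorithm: a real DTM is a generative model designed for TSU hardware, whereas here the energy function, `coupling`, `fidelity`, and every helper name are assumptions chosen for illustration. Unlike a true generative model, this toy also needs the noisy observation to anchor it.

```python
import math
import random


def make_image(n):
    """Hypothetical target structure: left half +1, right half -1."""
    return [[1 if c < n // 2 else -1 for c in range(n)] for _ in range(n)]


def add_noise(image, flip_prob, rng):
    """Noising step: flip each pixel independently with probability flip_prob."""
    return [[-p if rng.random() < flip_prob else p for p in row] for row in image]


def denoise(noisy, coupling, fidelity, sweeps, rng):
    """Iterative refinement by Gibbs sampling under a toy EBM.

    E(x) = -coupling * sum_{neighbor pairs} x_i x_j - fidelity * sum_i x_i y_i,
    where y is the noisy image. Sampling starts from the noise itself.
    """
    n = len(noisy)
    x = [row[:] for row in noisy]
    for _ in range(sweeps):
        for r in range(n):
            for c in range(n):
                field = fidelity * noisy[r][c]
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < n and 0 <= cc < n:
                        field += coupling * x[rr][cc]
                p_up = 1.0 / (1.0 + math.exp(-2.0 * field))
                x[r][c] = 1 if rng.random() < p_up else -1
    return x


def error_rate(a, b):
    """Fraction of pixels where two images disagree."""
    n = len(a)
    return sum(a[r][c] != b[r][c] for r in range(n) for c in range(n)) / (n * n)


rng = random.Random(3)
clean = make_image(24)
noisy = add_noise(clean, 0.15, rng)
restored = denoise(noisy, coupling=1.0, fidelity=1.0, sweeps=20, rng=rng)
print(error_rate(clean, noisy), error_rate(clean, restored))
```

The design point carried over from the text is that every refinement step is a round of local sampling rather than a large matrix multiplication.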

According to Extropic:

- Simulations suggest DTMs running on TSUs could be up to 10,000x more energy-efficient than modern algorithms running on GPUs

- Results can be replicated using thrml, its open-source Python library

Why Extropic's Breakthrough Matters

Extropic framed the problem in simple terms: the world does not have enough power for unlimited AI.

The Bottleneck

- Every major AI model increases compute requirements

- Every increase in compute increases energy demand

- Data centers are already struggling to secure power

If generative AI were served to billions of users continuously — at scale similar to email or search — today’s hardware could consume more energy than the world currently produces.

The Proposed Solution

Increase energy generation and cut the energy consumed by computing. Extropic focuses on the second half, aiming to remove the energy ceiling that prevents widespread, always-on AI.

Related Article: Why AI Data Centers Are Turning to Nuclear Power

The Real Test: From Research to Build-Out

Extropic said the “fundamental science is done” and the company has entered the build-out phase. To move from small prototypes to production-scale systems, it is hiring:

- Mixed-signal integrated circuit designers

- Hardware systems engineers

- Probabilistic machine learning experts

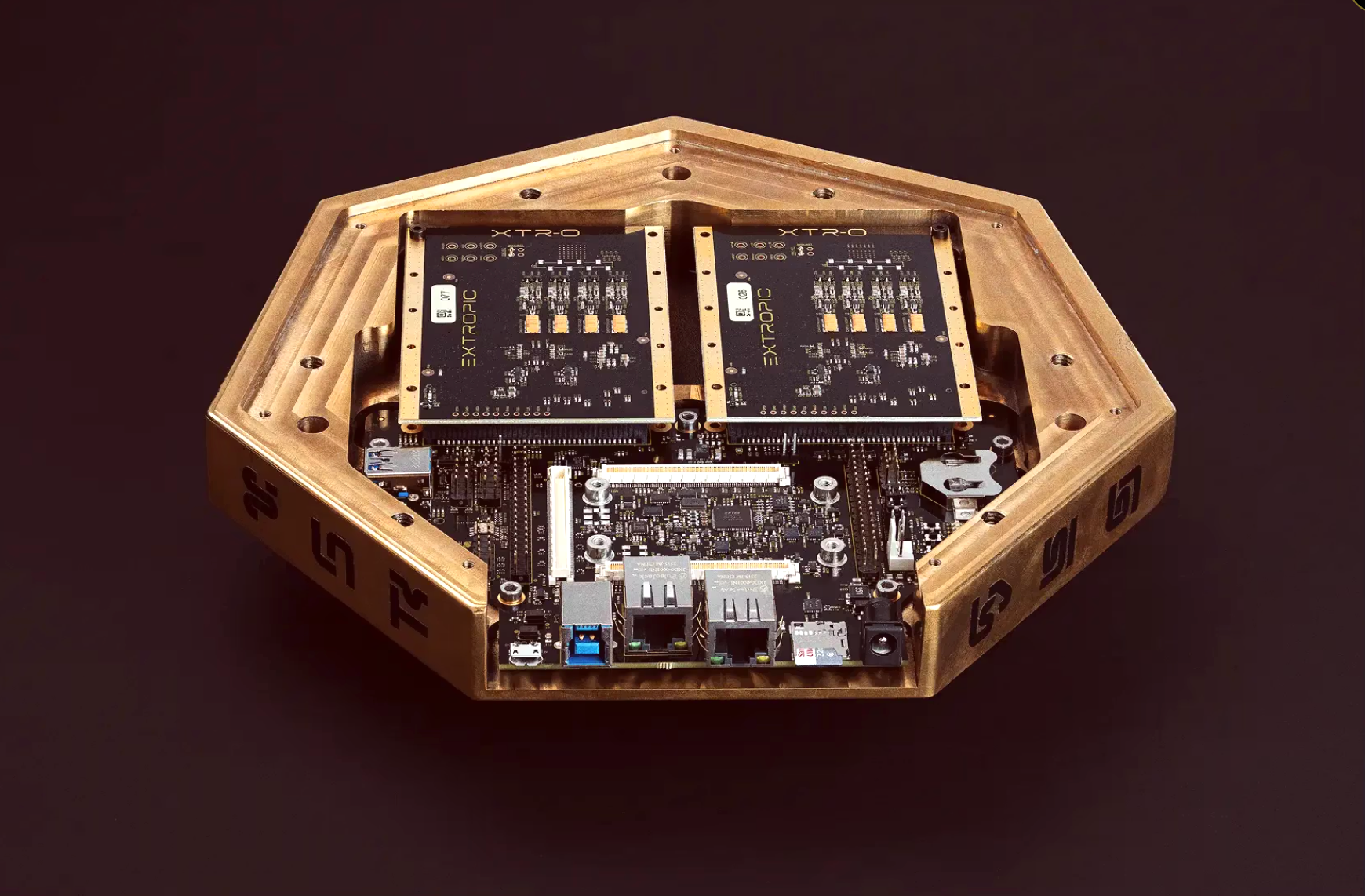

The company’s XTR-0 development platform has already been beta-tested by early partners, though no partner names were disclosed in the announcement.

Key Takeaways for Enterprise Leaders

1. According to Extropic, AI will hit physical energy limits before it hits compute limits.

Data centers cannot scale indefinitely, and electricity is the next bottleneck.

2. Extropic offers a new class of AI chip.

It does not replace GPUs through faster matrix math — TSUs avoid matrix math altogether by generating samples directly.

3. The company has demonstrated its approach only in early, small-scale hardware tests and simulations.

This includes a working pbit design and an open-source library for replicating its simulation results.

4. The energy-efficiency claim (up to 10,000x, based on simulations) remains the centerpiece.

If validated at scale, it would represent a major shift in AI infrastructure economics.

What Comes Next in AI Computing

As of today, Extropic’s achievements exist at prototype and simulation scale. The next step, building full production TSUs, will determine whether this becomes a foundational shift in AI computing or a promising research direction.

The company is confident in its trajectory:

"Once we succeed, energy constraints will no longer limit AI scaling."

- Extropic Officials

For now, Extropic is still in the early stages of turning its research into deployed hardware. But the ambition is clear: rebuild computing from the ground up to match the probabilistic, not deterministic, nature of AI.

Frequently Asked Questions

Are Extropic's TSUs commercially available yet?

No. Extropic has produced a hardware proof of technology and a development platform called XTR-0. The platform has been beta-tested by early partners, but it is not yet a commercial-scale system.

The startup is now hiring hardware and machine learning experts to scale TSUs into production-ready systems.