A global retailer prepares for the holiday rush with AI models that forecast supply and optimize shipping. The models influence purchase orders, warehouse staffing and customer promises on delivery dates. An executive asks for an audit trail that shows why the system is prioritizing one region over another. The answer carries financial and reputational weight.

The team consults its closed-API partner. The interface shows uptime, cost and summary outputs that look polished, yet the lineage of decisions remains out of reach. The request for transparency stalls in the dashboard.

An internal group experiments with open models like Llama 3 and Mistral. The models run inside secure environments with configuration files in version control and logs that trace each output to a data source. The retailer prints reports with a reproducible path from input to outcome. Leadership sees a system it can both use and explain.

This is the choice now confronting enterprises everywhere. Open-source models offer transparency and adaptability, while closed-source models or proprietary platforms promise stability and polish. The decision is less about technology and more about posture: which approach reduces risk and earns trust.

Table of Contents

- The Boardroom Challenge: Can You Explain What Your AI Just Did?

- Open-Source Models vs Closed-Source Models

- The Case for Open-Source AI

- The Case for Closed-Source AI

- Which Type of AI Model Is Best?

- Opacity vs Convenience in Closed-Source AI Models

- Transparency vs Risk in Open-Source AI Models

- Final Verdict: Open or Closed, What’s Right for You?

- Frequently Asked Questions

The Boardroom Challenge: Can You Explain What Your AI Just Did?

Open-source AI models have evolved from tech curiosities into enterprise contenders. Releases such as Llama 3 and Mistral exemplify rapid innovation through open communities. Compared to closed APIs, they offer:

- Cost savings

- Greater customization

- Faster iteration

For businesses, transparency has become as valuable as capability. Regulatory pressure raises the stakes in sectors like finance, healthcare and government. Enterprises are expected to trace data lineage, provide audit trails and explain AI-driven outcomes. In this environment, closed systems begin to feel like brittle liabilities instead of obscure luxuries.

Open-Source Models vs Closed-Source Models

Empirical analysis supports this shift. “Open-source models are not actually open source in the sense that other software and chip designs are… You get the weights, but not the actual data that was used to produce the weights,” said Dr. Mark Nitzberg, executive director of the Center for Human-Compatible AI at UC Berkeley.

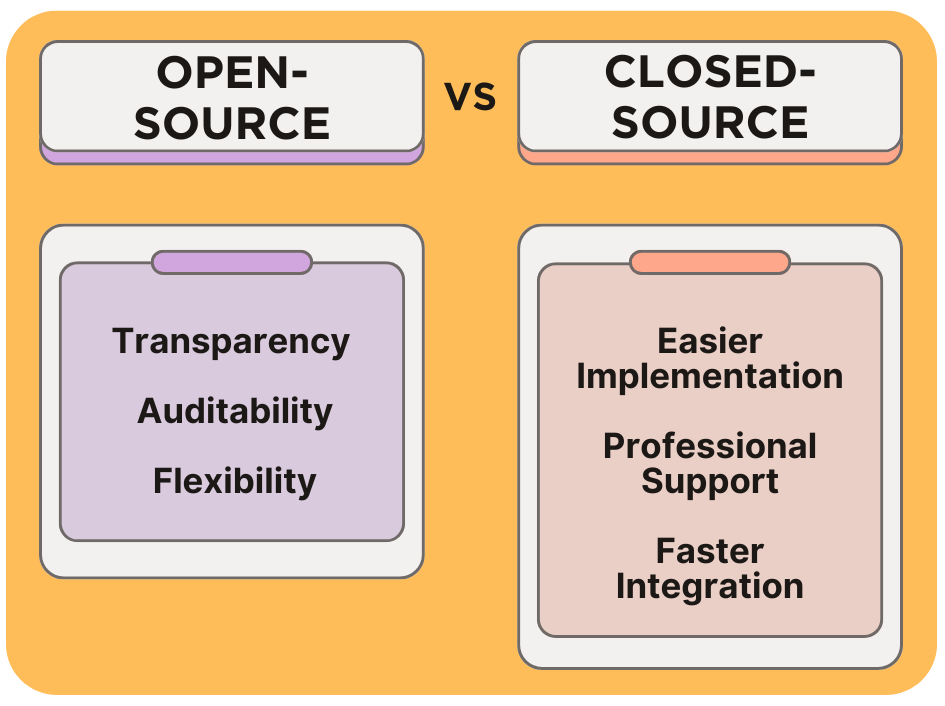

A recent comparative study found that open-source AI models deliver superior transparency, auditability and flexibility, while closed systems offer ease of implementation, professional support and faster integration with other proprietary software.

Closed platforms such as OpenAI’s GPT models and Google’s Gemini still lead in production polish and reliability. Yet the question for the enterprise is no longer one of capability alone. It’s about whether a system can be explained as easily as it performs.

Dr. Virginia Dignum, Professor of Responsible AI at Umeå University and director of the AI Policy Lab, said that the challenge is creating broader frameworks for shared responsibility. “The issue is not simply open versus closed source, but how to build structures of shared governance: for instance tiered/contextual openness, controlled access with oversight or international frameworks that ensure both safety as promoted by the ‘closed source’ camp and scientific progress and inclusive, democratic legitimacy as stressed by the ‘open source’ camp.”

The Case for Open-Source AI

“Open-source models are surpassing proprietary ones,” Yann LeCun, Meta’s chief AI scientist, said after DeepSeek’s R1 achieved benchmark performance above GPT-4.

“Our ability to derive revenue from this technology is not impaired by distributing the base models in open source,” LeCun added. “In fact, we see this already: millions of downloads, thousands of people improving the system and thousands of businesses building applications. This clearly accelerates progress.”

Venture capitalist Marc Andreessen has urged the US to lead in open-source AI in order to preserve technological sovereignty. He recently explained that control of foundational models connects directly to values and trust, especially in sectors where provenance and compliance carry weight.

The Case for Closed-Source AI

Dr. Nitzberg described large language models (LLMs) as “a beast” created through feeding billions of pages of text and images into training, then tuned with reinforcement learning: “You pat it on the head when its output is good, and smack it when its output is bad. This gives you a beast that (mostly) behaves as you would wish.”

Proponents of closed platforms emphasize different virtues. Reliability, integration and vendor accountability often matter more to IT leaders than transparency. A closed API may not reveal its inner workings, but it offers deployment speed and predictable uptime.

The result is a split screen. On one side, open systems offer transparency, adaptability and oversight. On the other, closed providers promise simplicity and polish. Enterprises must decide which form of trust matters most.

Which Type of AI Model Is Best?

A pharmaceutical company builds on open models, tuning them inside secure environments and logging every decision. The cost in talent and infrastructure rises, but the compliance team can defend every output. Trust takes shape in the audit trail.

A retailer across the street signs with a closed provider. The models arrive through APIs that plug neatly into existing systems. The performance feels seamless, the uptime is contractual and the dashboards satisfy executives who want results over mechanics. Trust takes shape in the vendor relationship.

Both approaches show rational tradeoffs. One emphasizes transparency through greater complexity. The other emphasizes convenience through managed services. Each reflects a different calculation of control, cost and accountability.
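To make the open-model side of that tradeoff concrete, here is a minimal sketch of what "logging every decision" could look like in practice. It is an illustration under stated assumptions, not a description of any company's actual system: the `AuditRecord` and `AuditLog` names, the field layout and the hash-chaining scheme are all hypothetical. Each record ties an output to a model label, a configuration commit and the data sources consulted, and each record stores the hash of the one before it so that later edits to earlier entries become detectable.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from typing import List


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of a string as hex."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class AuditRecord:
    # All field names are hypothetical, chosen for illustration only.
    model_id: str            # label for the self-hosted model build
    config_commit: str       # version-control commit of the config in use
    data_sources: List[str]  # identifiers of the inputs behind this output
    prompt: str
    output: str
    prev_hash: str           # hash of the previous record, "" for the first

    def record_hash(self) -> str:
        # Serialize with sorted keys so the hash is stable across runs.
        return sha256_hex(json.dumps(asdict(self), sort_keys=True))


class AuditLog:
    """Append-only, hash-chained list of audit records (in memory)."""

    def __init__(self) -> None:
        self._records: List[AuditRecord] = []

    def append(self, model_id: str, config_commit: str,
               data_sources: List[str], prompt: str, output: str) -> AuditRecord:
        prev = self._records[-1].record_hash() if self._records else ""
        record = AuditRecord(model_id, config_commit, list(data_sources),
                             prompt, output, prev)
        self._records.append(record)
        return record

    def verify(self) -> bool:
        # Recompute the chain; a mismatch means an earlier record changed.
        prev = ""
        for record in self._records:
            if record.prev_hash != prev:
                return False
            prev = record.record_hash()
        return True
```

A chain like this only detects changes to records that have a successor, so a real deployment would also need to anchor the latest hash somewhere outside the log. A sketch of this kind also says nothing about how the underlying model was trained, which is the limit on transparency that the experts quoted in this article point to.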

Opacity vs Convenience in Closed-Source AI Models

Many enterprises treat managed opacity as a service. Under clear SLAs, closed-source AI platforms offer:

- Model selection

- Updates

- Compliance reviews

- Incident response

Teams gain predictable performance, straightforward integration and a single partner accountable for outcomes.

Risk leaders also see a paperwork advantage. The NIST AI Risk Management Framework encourages supplier management practices that include service level agreements and third-party attestation reports. Those artifacts help organizations demonstrate oversight of external AI services during audits, which strengthens the case for a vendor-managed approach.

A transportation company illustrates this appeal. The team standardized on a closed provider, received scheduled updates, used built-in monitoring and kept deployment windows tight. Product managers focused on customer features while the vendor handled tuning and safety reviews. In settings like this, trust grows from consistent operations and a documented chain of responsibility.

Transparency vs Risk in Open-Source AI Models

Regulators, auditors and boards now ask enterprises to prove how their models reach decisions. That demand shifts transparency from an optional feature to a core requirement.

“Publishing the weights does not really provide transparency,” Dr. Nitzberg said. “It does allow you to bring the beast inside your own house and train it yourself… but what happened to make the underlying beast is still unknown to you.”

Dignum argued that concerns about malicious use of open models are often overstated. She pointed to cryptography as a field where open algorithms have proven more secure, since “vulnerabilities are exposed and fixed,” while security-through-obscurity has consistently failed. In her view, the harms caused by open-model releases, such as misinformation or fraud, are largely scaled versions of existing problems. “At the same time,” she added, “there is almost as much harm coming from using APIs to closed models.”

For Dignum, the more troubling risk lies not in misuse, but in concentration of power. “Closed models do not eliminate harms,” she said, “but considerably increase dependency, lack of sovereignty and centralization of control.”

Final Verdict: Open or Closed, What’s Right for You?

Closed platforms attract customers who want speed, integration and service contracts. Open models attract teams that prioritize control, adaptability and visibility.

The future will likely mix both approaches. In that mix, openness functions as the baseline, the standard against which every AI provider will stand.