Imagine an autonomous vehicle approaching an intersection. The AI-powered navigation system processes multiple inputs — traffic signals, pedestrian movements and surrounding vehicles — before making a split-second decision to stop or proceed. However, when an accident occurs, investigators struggle to understand why the AI chose one action over another. Without insight into the model’s reasoning, determining liability and improving system safety become nearly impossible.

This challenge highlights a fundamental issue in modern AI: deep neural networks (DNNs) lack interpretability, making their decision-making processes difficult to understand. As AI systems increasingly drive high-stakes applications in healthcare, finance and autonomous driving, the demand for transparency increases.

In my recent study, "Architectural Elements Contributing to Interpretability of Deep Neural Networks (DNNs)," co-authored with Dr. James Hutson, we explored the architectural components that enhance interpretability without compromising model performance. We examined how layer types, network depth, connectivity patterns and attention mechanisms influenced a model’s transparency. Our findings suggest that incorporating structured connectivity, hybrid architectures and self-explainable mechanisms significantly improves AI interpretability, fostering trust in mission-critical applications.

The question is no longer whether AI can make high-accuracy predictions — it’s whether AI can justify its decisions in a way that is comprehensible, accountable and fair.

The Challenge: Black-Box AI and the Need for Interpretability

Deep Neural Networks (DNNs) have exceptional abilities in image recognition, speech processing and complex decision-making. However, their high-dimensional structures create opaque models, making it difficult to trace how inputs are transformed into outputs. This lack of transparency presents several critical challenges:

- Regulatory Compliance and Legal Accountability: Industries such as finance, healthcare, human resources and autonomous systems must adhere to laws requiring explainability in AI-driven decisions, including the General Data Protection Regulation (GDPR) and Fair Credit Reporting Act (FCRA). Without clear reasoning behind AI-generated outcomes, institutions risk regulatory violations and legal repercussions.

- Bias and Fairness Risks: Hidden biases in training data can go undetected without transparency, leading to discriminatory outcomes. Unexplainable AI models may perpetuate systemic inequalities in hiring, lending and law enforcement, reinforcing rather than mitigating biases.

- Trust and Ethical Concerns in Workforce AI: In 2024, the US Consumer Financial Protection Bureau (CFPB) cautioned employers about the risks of AI-driven worker surveillance and algorithmic scoring. The agency warned against third-party digital tracking systems and black-box decision-making tools, emphasizing that employees should not be subjected to unchecked surveillance or have their careers dictated by opaque AI models.

The opacity of DNNs hinders compliance and fairness and undermines trust in AI adoption across industries. Ensuring explainability is essential for ethical deployment, regulatory approval and public confidence in AI-driven decision-making.

How AI Models Can Explain Their Decisions

AI is getting better at making decisions, but how do we know why it makes those decisions? Many AI models work like a "black box": we see the input (what we ask) and the output (what the AI produces), but we don’t understand what happens in between. This makes it hard for people to trust AI, ensure fairness and comply with regulations in fields like healthcare, finance and law.

AI interpretability depends on how models are built and what tools are used to explain their decisions. The following key elements help make AI more understandable.

1. Types of AI Layers and What They Do

Different types of AI layers process information in unique ways. Some are easier to interpret than others.

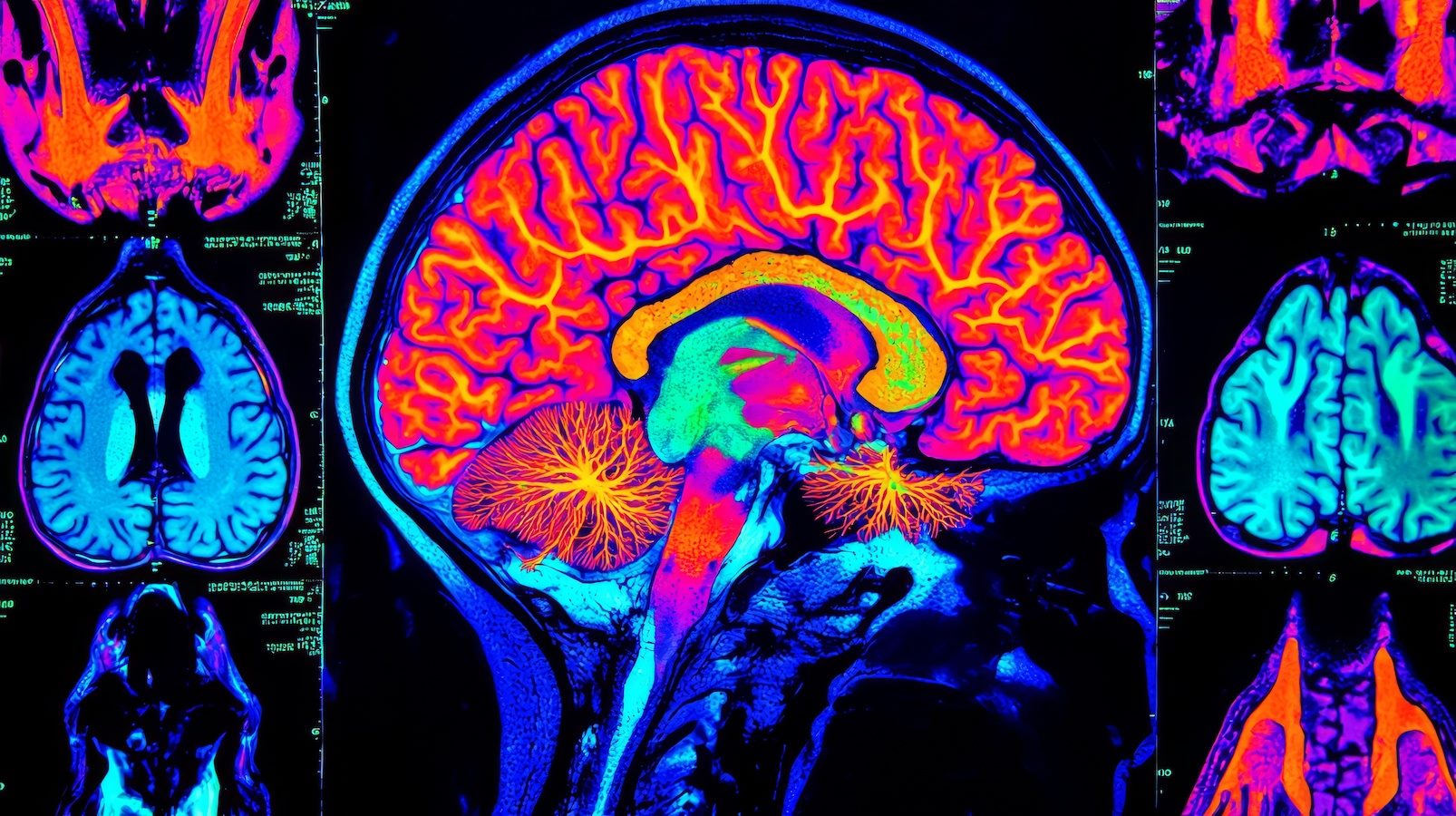

Convolutional Layers (CNNs) — AI for Images

- These layers help AI recognize patterns in images, used in medical imaging and facial recognition.

- Reading list: AI-driven medical imaging systems have used CNNs to identify abnormal tissue, but their lack of interpretability can hinder adoption in radiology.
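To make "recognize patterns" more concrete, here is a minimal NumPy sketch of what a single convolutional filter computes. The toy image, the hand-crafted edge filter and the function name `conv2d_valid` are illustrative assumptions; real CNN layers learn many filters across multiple channels. Inspecting a feature map like this is one of the simpler ways to see where in an image a filter responds.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2D 'valid' cross-correlation, the operation a convolutional layer applies
    (single channel, stride 1, no padding, no bias)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch under the filter
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 6x6 "image": dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hypothetical hand-crafted vertical-edge filter; learned filters are rarely this clean.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

feature_map = np.maximum(conv2d_valid(image, kernel), 0.0)  # ReLU activation
print(feature_map)
```

The nonzero columns of `feature_map` line up with the boundary between the dark and bright halves. In a trained network, deeper feature maps are usually far less readable, which is part of the interpretability problem described above.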

Recurrent Layers (RNNs, LSTMs) — AI for Speech & Text

- These layers help AI understand speech and written text, such as in voice assistants (Siri, Alexa) or text predictions.

- Reading list: Automatic Speech Recognition (ASR) systems relied on RNNs but required additional transparency tools to explain misclassifications.
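Recurrent layers carry a hidden state from one time step to the next, which is why their reasoning is hard to inspect: every output depends on a compressed summary of everything that came before it. The sketch below assumes a plain (Elman) RNN with random, untrained weights rather than an LSTM, and records the hidden state at every step so it can be examined afterwards. The function name and the toy inputs are hypothetical.

```python
import numpy as np

def run_elman_rnn(inputs, W_x, W_h, b):
    """Run a vanilla (Elman) RNN and return the hidden state at every step.

    h_t = tanh(W_x @ x_t + W_h @ h_{t-1} + b)
    """
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in inputs:
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
seq_len, input_dim, hidden_dim = 5, 3, 4
inputs = rng.normal(size=(seq_len, input_dim))  # e.g. 5 frames of toy audio features
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

states = run_elman_rnn(inputs, W_x, W_h, b)
print(states.shape)  # one hidden vector per time step

# The final state still depends on the very first frame:
perturbed = inputs.copy()
perturbed[0] += 1.0  # nudge only the first time step
drift = np.linalg.norm(run_elman_rnn(perturbed, W_x, W_h, b)[-1] - states[-1])
print(f"change in final hidden state: {drift:.4f}")
```

Because that dependency is spread across a chain of nonlinear updates, tracing a misclassification back to a specific input usually requires extra tooling on top of the raw hidden states.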

Attention Layers — AI That Prioritizes Information

- These layers are used in powerful AI models like ChatGPT and BERT to highlight important parts of text or images.

- Reading list: Attention-based models have shown promise in biomedicine and drug discovery.
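The core computation behind attention layers is scaled dot-product attention, softmax(Q Kᵀ / sqrt(d_k)) V. It produces a table of weights showing how much each position draws on every other position, which is what makes attention comparatively easy to visualize. This is a minimal NumPy sketch with made-up token embeddings and identity projections in place of the learned query, key and value matrices a real Transformer uses.

```python
import numpy as np

def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights @ V, weights

tokens = ["the", "tumor", "is", "benign"]
rng = np.random.default_rng(42)
X = rng.normal(size=(len(tokens), 8))  # hypothetical toy token embeddings

# Self-attention: queries, keys and values all come from X (identity projections for simplicity)
out, weights = scaled_dot_product_attention(X, X, X)

for tok, row in zip(tokens, weights):
    print(f"{tok:>7} attends most to {tokens[row.argmax()]!r}")
```

Each row of `weights` is a distribution over the inputs, so it can be plotted directly as a heatmap. Attention weights are a helpful signal, but they are best treated as a hint about what the model used rather than a complete explanation.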

2. Deep vs Shallow AI Models: The Trade-Off

- Shallow models (simple AI) are easy to understand but less powerful.

- Deep models (complex AI) are more accurate but harder to interpret.

- Reading list: A study in AI-assisted radiology found that hybrid models improved interpretability by 30% while maintaining high accuracy, helping doctors understand why the AI made certain diagnoses.
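Why are shallow models easier to understand? In a linear model such as logistic regression, each feature's contribution to the score is simply its weight times its value, so the explanation is exact. The weights, feature names and applicant values below are invented for illustration.

```python
import numpy as np

# Hypothetical logistic-regression weights for a toy loan-approval model.
feature_names = ["income", "debt_ratio", "late_payments"]
weights = np.array([0.8, -1.5, -2.0])
bias = 0.5

def explain_linear(x):
    """Per-feature contribution to the logit: w_i * x_i (exact for a linear model)."""
    contributions = weights * x
    logit = contributions.sum() + bias
    prob = 1.0 / (1.0 + np.exp(-logit))  # sigmoid
    return prob, dict(zip(feature_names, contributions))

applicant = np.array([1.2, 0.6, 1.0])  # made-up standardized feature values
prob, contrib = explain_linear(applicant)
print(f"approval probability: {prob:.3f}")
for name, c in sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>14}: {c:+.2f}")
```

Here `late_payments` dominates the decision, and that can be read straight off the model. A deep model offers no such direct decomposition; its explanations have to be approximated with tools like the attribution maps discussed below.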

3. How AI Connects Information

How layers connect affects whether AI decisions can be traced:

- Fully Connected Layers: Give flexibility but make AI decisions harder to explain.

- Residual Connections (ResNets): Improve transparency by adding each block’s input directly to its output, so information flows through the network along clearer, more traceable paths.

- Graph Neural Networks (GNNs): Show clear decision paths, useful for social networks and drug discovery.

- Reading list: In fraud detection, GNNs helped track how suspicious transactions are linked, making AI’s decisions more explainable to banks.
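Residual connections and graph layers can both be seen in one small example. The sketch below assumes a toy transaction graph, a single scalar "risk" feature per account and a simplified mean-aggregation layer with an identity skip connection; real GNN layers apply learned weights and nonlinearities. Keeping each neighbor's message makes it possible to trace which account pushed another account's score up, similar in spirit to the fraud-detection use case above.

```python
import numpy as np

# Hypothetical toy transaction graph: account -> accounts it transacts with.
edges = {0: [1, 2], 1: [0], 2: [0, 3], 3: [2]}
# One scalar "risk" feature per account; account 3 is flagged as suspicious.
h = np.array([0.1, 0.0, 0.2, 0.9])

def message_passing_layer(h, edges):
    """One mean-aggregation layer with a residual (skip) connection.

    new_h[v] = h[v] + mean(h[u] for u in neighbors(v))
    Also returns each neighbor's message, keyed by (source, target).
    """
    new_h = h.copy()  # residual path: each node keeps its own value
    contributions = {}
    for v, nbrs in edges.items():
        for u in nbrs:
            msg = h[u] / len(nbrs)
            new_h[v] += msg
            contributions[(u, v)] = msg
    return new_h, contributions

h1, contrib = message_passing_layer(h, edges)
top_edge = max(contrib, key=contrib.get)
print(h1)
print("largest single message:", top_edge, round(float(contrib[top_edge]), 3))
```

In this toy run, the largest single message flows from the flagged account 3 to its neighbor, account 2, which is exactly the kind of link an analyst would want surfaced.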

4. Attention Mechanisms: AI That Shows Its Work

- Self-Attention Models: Show which inputs influenced the decision the most.

- Feature Attribution Maps: Highlight what the AI focused on, making decisions easier to trust.

- Reading list: Attention-based AI in medical imaging successfully identified the image regions that contributed most to diagnostic decisions, enhancing transparency in radiology.
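Feature attribution maps can be produced in many ways, including gradient-based saliency and Grad-CAM. Occlusion is one of the simplest: hide one input at a time and measure how much the model's score drops. The `model` function here is a hypothetical stand-in that only looks at the center of a 5x5 image; any callable that returns a score would work with `occlusion_attribution`, which is also an illustrative name.

```python
import numpy as np

def occlusion_attribution(model, x, baseline=0.0):
    """Attribution of each input feature = drop in model score when that
    feature alone is replaced by a baseline value."""
    base_score = model(x)
    scores = np.zeros_like(x, dtype=float)
    for i in range(x.size):
        occluded = x.copy()
        occluded.flat[i] = baseline
        scores.flat[i] = base_score - model(occluded)
    return scores

# Hypothetical "model": sums pixel intensity inside the central 3x3 region only.
mask = np.zeros((5, 5))
mask[1:4, 1:4] = 1.0

def model(img):
    return float(np.sum(img * mask))

image = np.zeros((5, 5))
image[2, 2] = 1.0   # the "lesion" the model should pick up
image[0, 4] = 1.0   # bright pixel outside the model's region of interest

heatmap = occlusion_attribution(model, image)
print(heatmap)
```

The heatmap assigns all the credit to the center pixel and none to the bright corner pixel the model ignores. For real images, occluding small patches rather than single pixels is more common, since it needs far fewer model evaluations.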

Why This Matters

AI must not only be accurate but also explainable so that humans can trust it. By designing AI with transparency in mind, industries can use AI more effectively, ethically and fairly.

How do you think AI transparency will shape its future? Let’s discuss!
