Another day, another more powerful AI model appears, seemingly set to "transform your business." But even as frontier models improve, organizations still struggle to integrate them into their particular operational flows. To be truly relevant, these models need to adapt to each company's data, voice and specific needs.

Prompt engineering can address localized issues, but that is often a stopgap for operational scale.

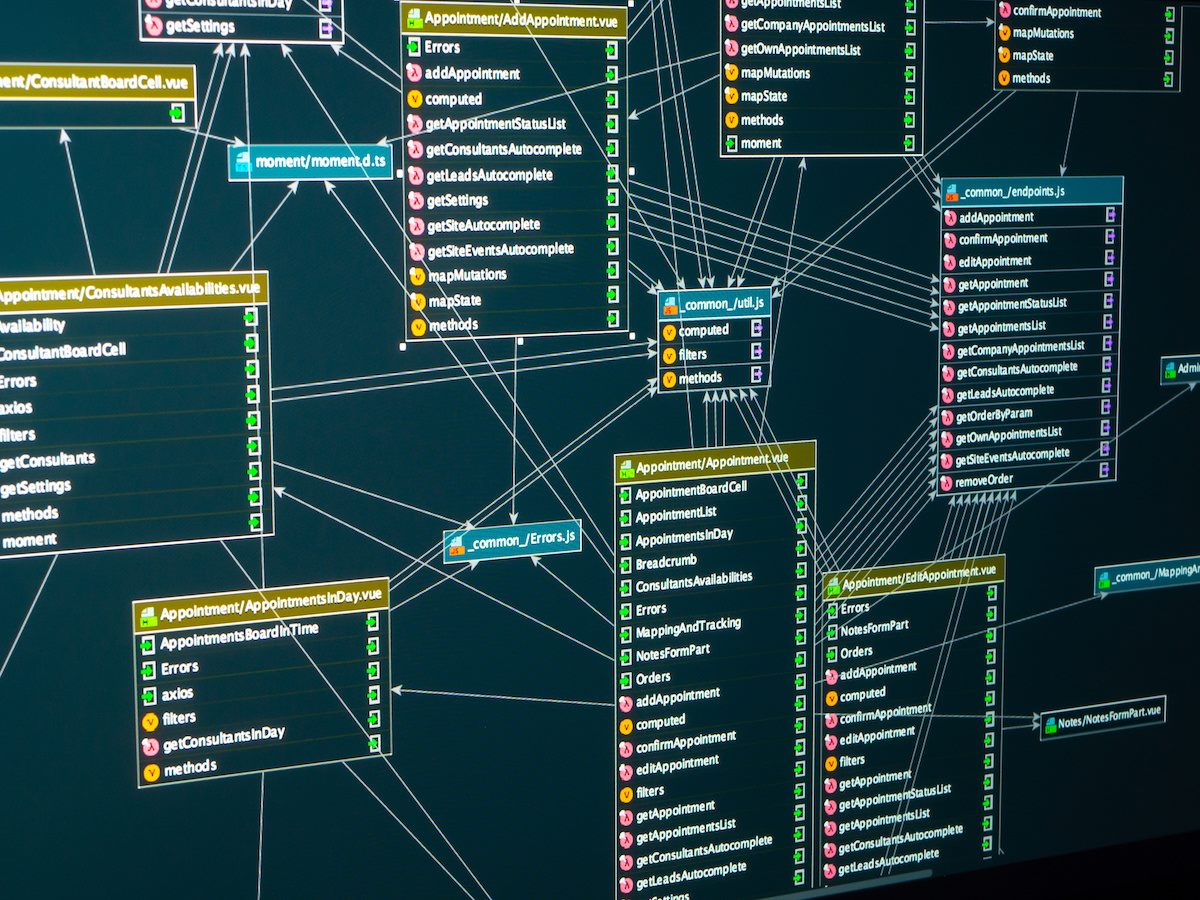

Two infrastructure approaches have emerged for customizing LLMs:

- Retrieval-Augmented Generation (RAG): Connects models to external knowledge sources

- Fine-Tuning: Adjusts the model's internal parameters

The decision between RAG and fine-tuning depends on the use case, industry context and strategic positioning. Let’s explore.

Table of Contents

- LLM Limitations: Why Base Models Aren’t Enough

- What Is LLM Fine-Tuning?

- Considerations for Fine-Tuning LLMs: Data, Documentation and Costs

- Fine-Tuning Case Study: Harvey

- What Is Retrieval-Augmented Generation (RAG)?

- Considerations for RAG: Infrastructure, Data and Maintenance

- RAG Example: Customer Support System

- Fine-Tuning vs RAG: Key Differences Explained

- Use Cases for RAG and Fine-Tuning

- ROI of RAG vs Fine-Tuning: 6 Questions to Ask

LLM Limitations: Why Base Models Aren’t Enough

At their core, LLMs are massive prediction engines trained on enormous datasets. After enough learning (supervised, unsupervised and reinforcement learning from human feedback), they generate coherent text by predicting the following word (token) given a user prompt. This prediction relies on the parameters LLMs developed during training.

However, while base models are powerful predictive engines, they face several key limitations:

- Static Knowledge: Most models have no information about events after their training cutoff date, often 6-12+ months in the past

- Context Limits: Even advanced models can only "remember" a finite amount of text at once

- One-Size-Fits-All Behavior: Out-of-the-box models trained on internet-scale data might not capture your company's tone or emphasize important industry nuances

Over time, base models have added additional features to augment the base predictive power, such as browsing capabilities (Claude being the most recent) and larger context windows (1-2 M+ context windows for Gemini). However, these features often aren't enough to improve a digital organization's infrastructure efficiencies or can introduce costs. For example, the more tokens used within a context window, the higher the inference costs. That's where RAG and fine-tuning enter the picture.

What Is LLM Fine-Tuning?

Fine-tuning is like sending your off-the-shelf large language model (LLM) to a finishing school. It involves taking a pre-trained model, iterating on its existing training with your data and adjusting its internal parameters to adopt specific behaviors, such as understanding and mimicking a company's tone or learning to emphasize important industry nuances. When the model has completed its fine-tuning, it will respond with the speed of a base model, but with the training and memory of the fine-tuned information.

Considerations for Fine-Tuning LLMs: Data, Documentation and Costs

Fine-tuning requires strategic planning around several critical factors:

Data Quality and Uniqueness

The most valuable input for fine-tuning is proprietary data that models haven't encountered during their original training. Generic industry content often provides minimal advantage, as most models have already processed similar examples. The competitive edge comes from unique data:

- Internal conversation transcripts

- Company-specific documentation

- Proprietary research or knowledge

For example, communication company DialPad’s knowledge of business conversations enabled its fine-tuned DialpadGPT, while sales company Clay uses its fine-tuned “Claygent” to automate data collection and entry.

Technical and Resource Requirements

Fine-tuning demands significant technical resources:

- GPU compute for training

- Machine learning expertise to optimize

- Ongoing evaluation to prevent overfitting and address issues

These services can range from API-based platforms like Cohere, to flexible open-source ecosystems like HuggingFace, to fully managed enterprise offerings like AWS Bedrock — each with different trade-offs around control, cost and customization.

Data Preparation Challenges

The most underestimated aspect of fine-tuning is data preparation. This process involves:

- Structuring content in training-ready formats

- Labeling or pairing examples for supervised learning

- Cleaning and normalizing text

This preparation can easily become a primary bottleneck for many organizations, sometimes requiring more effort than actual training processes.

Related Article: How to Evaluate and Select the Right AI Foundation Model for Your Business

Fine-Tuning Case Study: Harvey

Harvey, an AI-focused legal company, fine-tuned OpenAI’s models on 10 billion tokens (roughly 7.5B words) of case law in 2023/4. Through testing, the fine-tuned model's responses were preferred by 97% of lawyers compared to the base model. Harvey has leveraged this advantage to provide a suite of legal-oriented workflows.

Harvey recently announced a $300M fundraise, doubling its valuation to $3B and increasing revenue 4x annually. Their success likely stems partly from an efficient fine-tuning approach, leveraging new legal data from each of the 235 countries in which they operate. Fine-tuning also benefits Harvey from frontier model improvements as they evolve continuously.

What Is Retrieval-Augmented Generation (RAG)?

RAG is like giving your AI an open-book test. Instead of retraining the base model, you build a search layer that augments user queries with external data sources. Before the prompt is sent to the LLM, these external data sources increase the context of the prompt query. This extra information refines the specificity of the user’s request, which then assists the model in generating more accurate, grounded responses. LLMs can better predict the correct response by stuffing the context window with this additional information.

RAG can lead to significant increases in accuracy. In one study, RAG increased the accuracy of a base model by 40%, and similar improvements are standard. Even when it doesn't improve quality, RAG provides the ability for auditing and operational improvement, allowing teams to identify areas for knowledge base enhancement. RAG allows a more flexible and iterative approach by modularizing the internal knowledge base from the base model (versus updating the base level through fine-tuning).

Considerations for RAG: Infrastructure, Data and Maintenance

While RAG eliminates the need for model training, it has its implementation challenges. The key considerations include:

Infrastructure Requirements

Unlike fine-tuning, which edits the base model, RAG adds an infrastructure layer. At one end of the spectrum, teams can create basic RAG responses with no-code tools (such as n8n or even simple Zapier setups) or more technical solutions using open-source tools like LangChain. Alternatively, more full-service providers like Pinecone offer managed vector search and retrieval pipelines, abstracting much of the operational overhead. As with fine-tuning, choosing the right approach depends on your team’s technical maturity, compliance requirements and appetite for control.

Data Quality: The Backbone of RAG

A RAG system is only as good as the internal data that augments user queries. Poorly organized, outdated or irrelevant documentation will yield poor results. Many organizations find they need to invest in:

- Document cleanup and standardization

- Strategic chunking of long documents

- Metadata enrichment to improve retrieval

This process can be more straightforward when organizations have had a process-driven, documentation-based approach (such as those in areas with high compliance requirements). In healthcare, for example, studies show that RAG-enhanced LLMs can standardize emergency medical triage and reduce variability caused by personnel experience and training. In fact, a RAG-enhanced GPT-3.5 model "significantly" outperformed EMTs and emergency physicians.

Ongoing Operational Costs and Impact

While upfront costs are lower than fine-tuning, RAG has more continuous operational expenses:

- Ongoing documentation cleanup and maintenance approaches

- Increased token usage from longer prompts

- Database hosting and maintenance

- Infrastructure maintenance and optimization

Latency Is a Vital RAG Consideration

Depending on the solution, RAG may substantially increase query latency. Unlike fine-tuned models, which will respond immediately, RAG requires an intermediary step to search through additional knowledge bases. This latency may not be an issue in certain use cases, like internal tooling, but if performance and customer experience are paramount, alternate solutions may be better.

RAG Example: Customer Support System

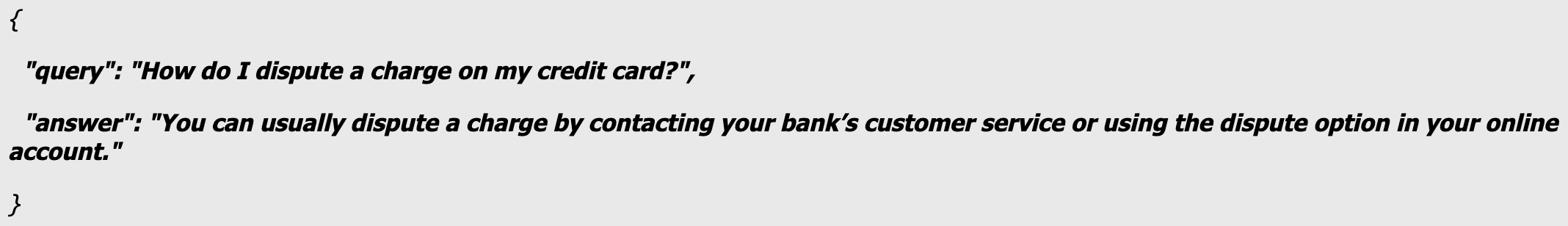

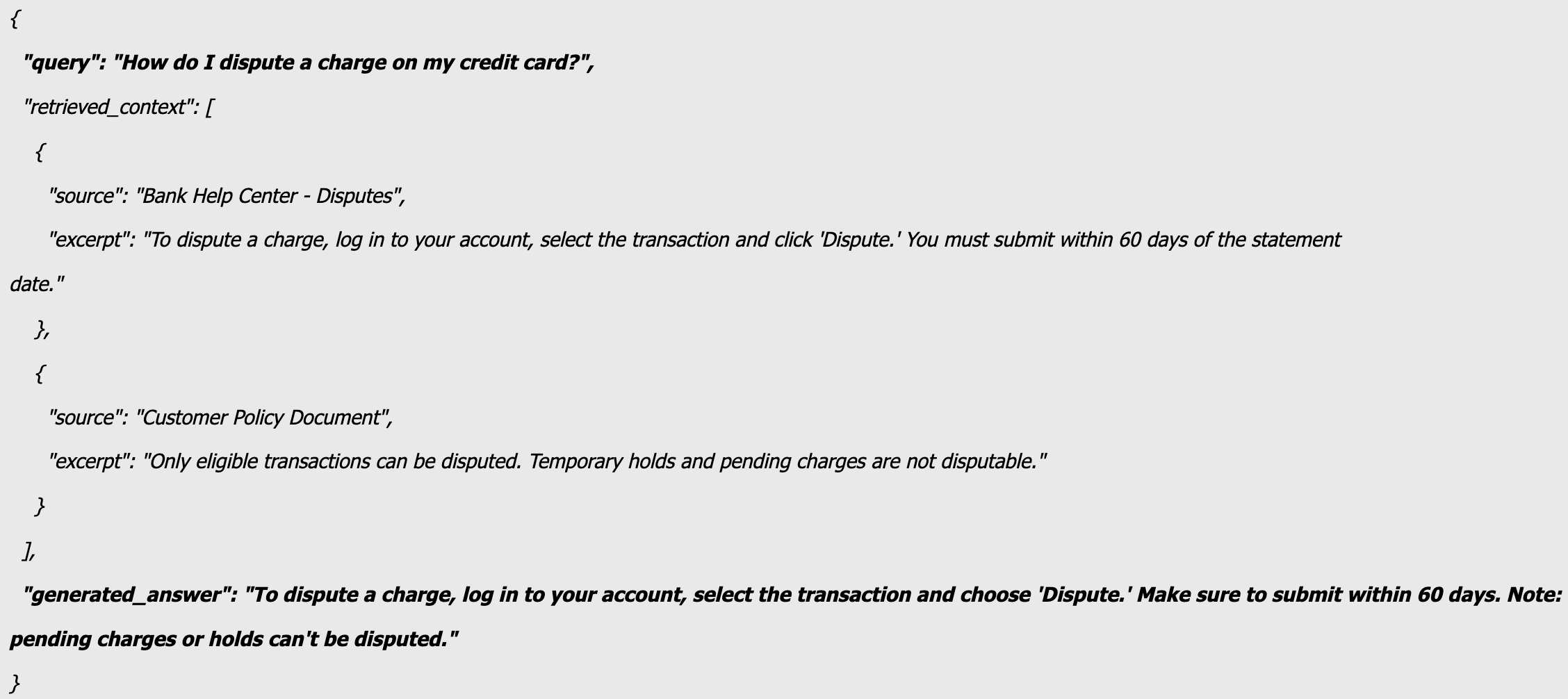

Imagine a situation where a customer is asking an AI-backed chatbot a support question. Under normal circumstances, this is what the exchange with an AI would look like:

However, with the RAG retrieval layer implemented, the RAG system evaluates the user’s query to identify relevant, internal knowledge resources. Then it appends the query as it is passed to the LLM with pertinent information from specific documentation sources (like a help center), resulting in the following example response:

Significantly, unlike fine-tuning, this RAG implementation did not impact the base model, so the above-generated answer would still have been generated by an LLM like GPT-4o or Claude. However, the LLM would generate an aligned response because of the appended information.

Related Article: Chain-of-Thought (CoT) Prompting Guide for Business Users

Fine-Tuning vs RAG: Key Differences Explained

| Aspect | Fine-Tuning | RAG |

|---|---|---|

| Adaptation Method | Changes the model's weights | Adds dynamic external context |

| Best For | Specialized behavior, tone, structured tasks | Real-time knowledge, wide-ranging queries |

| Setup Needs | Labeled training data, compute resources | Document indexing and retrieval system |

| Latency | Fast (inference only) | Slower (due to the retrieval step) |

| Knowledge Freshness | Static, as of training | Dynamic, real-time |

| Security | Data embedded at train time | Data fetched live (requires access control) |

| Costs | High upfront (training) | Lower upfront, higher per-query costs |

| Tooling | Hugging Face, OpenAI, Cohere, Bedrock, Gemini, Open source | LangChain, Haystack, LlamaIndex, vector databases |

Use Cases for RAG and Fine-Tuning

While use cases can vary given particular situations, here are some standard best practices.

RAG excels when the challenge is about knowing things — current events, proprietary documents or domain-specific facts that change frequently:

- Internal knowledge assistants (e.g., pulling from HR or policy docs)

- Financial advisors need access to daily reports

- Healthcare bots referencing updated treatment guidelines

- Customer support systems that rely on evolving knowledge bases

Fine-tuning optimizes on how to say something, such as maintaining a consistent brand voice, following a specific structure or performing complex reasoning in a defined domain:

- Domain-specific writing (legal, medical or marketing style)

- Structured tasks like classification or summarization

- Chatbots that must follow a specific tone, style or policy

- Offline or low-latency use cases (e.g., on devices)

Many successful organizational deployments blend both approaches. You might fine-tune a model on past customer service interactions to perfect your tone, then use RAG to feed it the most current troubleshooting steps.

ROI of RAG vs Fine-Tuning: 6 Questions to Ask

To simplify your decision process, ask these key questions:

- Do your users need cited sources for trust or compliance? RAG inherently supports this.

- Are fast responses essential? Fine-tuned models typically deliver better speed.

- Is your knowledge changing daily or weekly? RAG reflects new data instantly.

- Do you have structured training data ready? If yes, fine-tuning becomes viable.

- Are you deploying on your infrastructure? Fine-tuning provides complete control.

- Will you need to customize the tone deeply? Fine-tuning enables this baked-in behavior.

Ultimately, both approaches provide distinct benefits. RAG provides flexible breadth with access to the latest facts and situational knowledge, while fine-tuning delivers default depth through trained skills, tone and contextual mastery.

Like all AI initiatives, the best approach is to start small and then iterate flexibly. By combining both methods, you create systems that are reliable, efficient and aligned with your strategic goals.