Throughout history, humans have expanded collective knowledge through institutions like libraries and universities and through infrastructure like roads. We have scaled our knowledge systems, creating a more integrated world by improving the quality, effectiveness and reach of education and knowledge. Today’s technology giants and AI startups aim to scale artificial intelligence beyond even those human capabilities. However, building scalable AI systems presents systemic obstacles across hardware, software and policy.

This article frames current AI scaling challenges through the broader lens of human knowledge expansion, using that history to clarify the obstacles AI faces today.

A Brief History of Scaling Intelligence

To better understand how knowledge has scaled over time, we can explore three essential pillars: quality, speed and reach. These elements have played a crucial role in shaping human progress and now provide a valuable framework for analyzing AI's evolution:

- Improve Quality (Attention to Detail): Enhancing the accuracy and reliability of recorded knowledge through peer-reviewed texts and curated libraries, with advancements in materials and evaluation methods.

- Increase Speed (Reduce Friction): Improving the dissemination of ideas, whether via Roman roads, the printing press or modern communication networks, leads to faster teaching, collaboration and innovation.

- Expand Reach (Broaden Domains): Broadening access to knowledge through public libraries, open universities and digital platforms is often achieved through targeted decentralization and democratization.

The Importance of Quality

Institutions like the House of Wisdom, Hanlin Academy and the Royal Society improved knowledge quality by setting standards and gathering texts. Many critical ideas — such as the Scientific Method — were refined and developed within these intellectual enclaves.

Modern equivalents like Silicon Valley continue to showcase how high-quality intelligence inputs yield exponential returns. In tangible terms, education has profound measurable effects on society, amounting to a roughly 7x societal return for each dollar invested. Notably, modern AI systems exhibit similar emergent behaviors, where training on high-quality inputs has steadily increased model quality in novel ways.

The Importance of Speed

While maintaining quality is crucial, it is equally important to consider how quickly and efficiently knowledge transmission occurs. Throughout history, accelerating the flow of information has been a driving force behind major societal advancements. From the Roman Empire’s extensive road networks facilitating trade and cultural exchange to the printing press revolutionizing information accessibility, speed has consistently amplified the impact of ideas. Faster communication enables more efficient collaboration, innovation and decision-making — whether in the coordination of armies or the rapid spread of scientific discoveries.

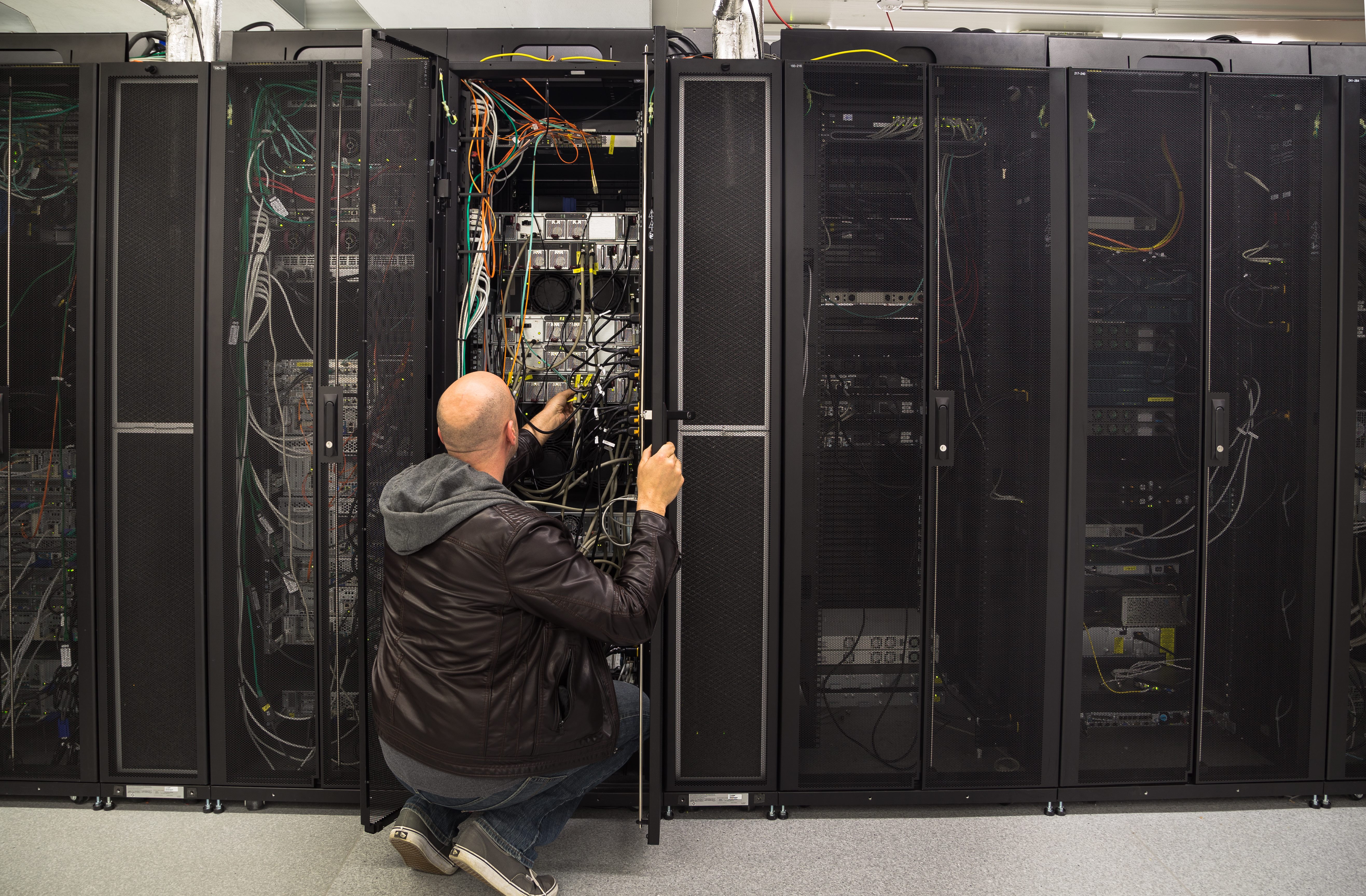

In the context of AI, speed is equally critical. Advancements in hardware, such as GPUs and TPUs, and optimized software frameworks have dramatically reduced processing times, enabling AI systems to analyze vast datasets and deliver real-time insights, making them more generally applicable.

The Importance of Reach

Beyond speed, the broader impact of knowledge depends on its distribution breadth. The reach of information determines how deeply it influences societies and fuels innovation across different regions and demographics.

For example, the invention of the printing press in the 15th century revolutionized knowledge dissemination by decentralizing book production and breaking the monopoly of religious institutions over written information. Similarly, expanding public education in the US democratized access to learning, leading to broader societal benefits such as higher employment rates, improved public health and greater civic coordination.

These historical advancements illustrate how increasing access to knowledge can create compounding effects. In the context of AI, this concept of "emergence" suggests that high-quality systems can achieve capabilities surpassing the sum of their components.

AI Scaling: From Early Efforts to the Transformer Era

In the 20th century, efforts to expand knowledge extended to machine intelligence. The Perceptron, an early neural network model from the 1950s, hinted at AI’s potential but faced limitations identified by pioneers like Marvin Minsky and Seymour Papert, contributing to the first "AI winter."

AI research resurged in the 1980s with breakthroughs in backpropagation and neural architectures by Geoffrey Hinton, Yann LeCun and Yoshua Bengio. By the 2000s and 2010s, increased data availability, faster processors and improved machine-learning techniques fueled advances in object detection and speech recognition. Despite these gains, early AI systems remained confined to narrow domains because they were hard to scale and generalized poorly.

The Transformer Revolution and AI Boom

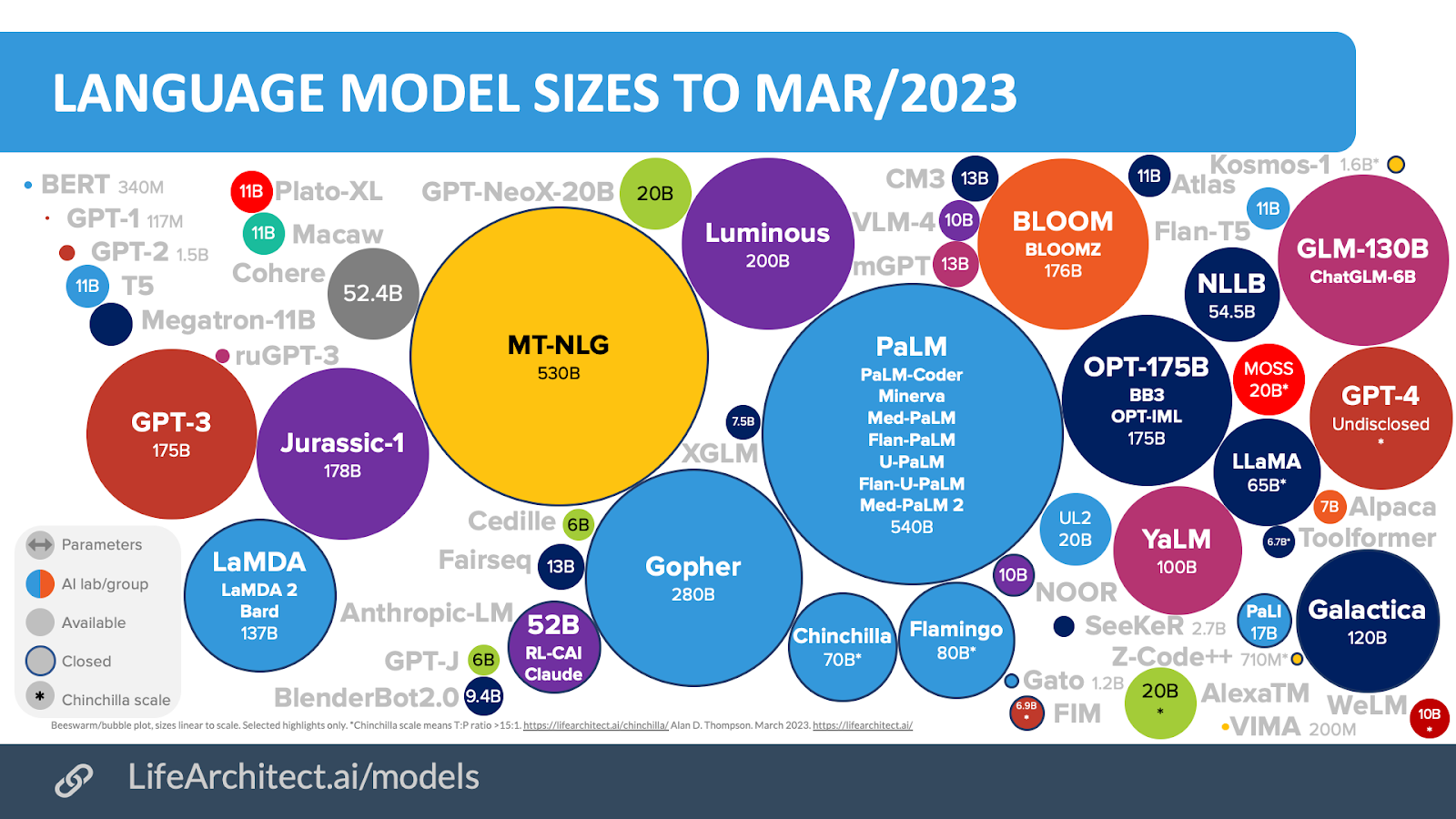

Incremental improvements have marked the evolution of AI. However, a rapid acceleration arrived with the introduction of the transformer architecture in 2017, which enabled better parallelization during training and improved models’ ability to capture context and nuance.

OpenAI's GPT series began with GPT-1 in 2018. ChatGPT's public release in late 2022, based on GPT-3.5, marked a turning point, and the service reportedly reached 100 million users within two months. This rapid growth fueled competition from companies like Alphabet (Gemini), Meta (LLaMA) and Anthropic (Claude), driving the race to develop frontier models.

Challenges and Limitations in AI Scaling

For years, scaling through larger models, more data and more compute was widely seen as the key to achieving AGI. However, despite billions in investment, concerns are growing that brute-force scaling may be reaching its limits. Rising costs, resource constraints and stagnating performance improvements have led some experts to question whether scale alone remains a viable path.

On the other hand, AI boosters don’t see an impending “scaling wall,” and many see AGI as imminent.

“there is no wall,” Sam Altman (@sama) posted on November 14, 2024.

These differences of opinion often fall along ideological lines and economic incentives, but underneath them lie obstacles and opportunities in the same three areas that shaped earlier knowledge expansion: quality, speed and reach. For example:

Quality: Data Saturation, Synthetic Data & Algorithms

- Data limitations are becoming evident: OpenAI co-founder Ilya Sutskever has stated that the industry has exhausted high-quality training data, asserting that “we’ve achieved peak data.” This constraint has shifted attention toward synthetic (AI-generated) data, raising concerns about potential “model collapse,” in which models trained repeatedly on their own outputs degrade through feedback loops.

- Targeted learning may reduce data dependency: Advances in self-supervised learning and retrieval-augmented generation (RAG), such as contextual retrieval, enable AI models to achieve high performance with smaller, more specialized datasets. Additionally, OpenAI researcher Noam Brown recently described how enabling models to “think” for longer can yield improvements of orders of magnitude without additional traditional training.

- Synthetic data presents new possibilities: Companies like Anthropic are reportedly leveraging advanced models (e.g., Claude 3.5 Opus) to generate training data for smaller models, a process known as distillation that can dramatically reduce hallucinations. While promising, synthetic data may lack the diversity and unpredictability of real-world human inputs, potentially introducing biases and limitations in the longer term.
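
The feedback loop behind “model collapse” can be illustrated with a toy simulation. The sketch below is only an analogy, not how any AI lab trains models: it stands in a simple bell-curve (Gaussian) fit for the "model," and the function name `simulate_feedback_loop` and its parameters are hypothetical choices made for illustration. Each generation is fit only to samples drawn from the previous generation's fit, so no fresh real-world data ever enters the loop.

```python
import random
import statistics


def simulate_feedback_loop(generations=50, sample_size=20, seed=0):
    """Refit a Gaussian to its own samples, generation after generation.

    Returns the fitted standard deviation ("spread") at each generation,
    starting with the assumed real-data distribution (mean 0, spread 1).
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0
    history = [std]
    for _ in range(generations):
        # Each generation sees only data sampled from the previous model.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # "Training" here is just re-estimating the mean and spread.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        history.append(std)
    return history


if __name__ == "__main__":
    history = simulate_feedback_loop()
    print(f"generation 0 spread: {history[0]:.3f}")
    print(f"generation {len(history) - 1} spread: {history[-1]:.3f}")
```

Because each refit slightly underestimates the spread of the data it was drawn from, the estimated spread tends to drift toward zero over many generations, a rough analogue of synthetic data losing the diversity of real-world inputs. Individual runs vary with the seed and sample size.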

Speed: Hardware Bottlenecks & Environmental Costs

- Hardware shortages remain a concern: GPU supply chain disruptions, driven by geopolitical tensions and soaring demand, have hindered AI scaling efforts. Although the 2023-2024 chip shortage has been resolved, future demand and potential pricing spikes may disrupt scaling by 2026, according to Bain & Company.

- Manufacturing expansion offers hope: Major chipmakers, including NVIDIA and AMD, are scaling production capacity, and new fabrication plants (such as TSMC’s Arizona plant) may alleviate future supply gaps. Higher demand may still increase compute costs, although chip and algorithm efficiency improvements have historically offset these increases. According to the Wall Street Journal, NVIDIA’s newest chips, to be released later this year, are expected to be 30x faster and 25x more efficient than existing chips.

- Energy and water consumption may be the biggest “compute” barrier: Energy consumption is becoming a significant constraint on AI scaling, and rising water and power demands are leading industry leaders, like Meta CEO Mark Zuckerberg, to raise concerns. The actual environmental impact might be higher than tech CEOs admit, with the Guardian reporting that AI data center ecological impact in 2025 may be 600% higher than currently understood, complicating compute expansions.

Reach: Rising Competition & Geopolitical Pressures

- Open-source initiatives are gaining traction: Projects like Meta’s LLaMA and Hugging Face models democratize AI development, fostering broader participation and innovation.

- Regulatory scrutiny is rapidly evolving: Policymakers, particularly in the EU, are imposing stricter regulations, such as the AI Act, which could slow development. At the same time, Trump has rescinded Biden-era AI executive orders, a move that may accelerate it.

- Geopolitical pressures are reshaping reach and collaboration: China-US collaborations on AI through research and talent exchange have historically benefited both countries. Still, the US government sees China as a key geopolitical competitor in AI. Such competition reduces talent mobility but also incentivizes increased governmental spending. China’s “Big Fund” recently increased AI investment by $47 billion. At the same time, the Trump administration recently announced a $500 billion investment plan in AI called “Stargate,” a joint venture between American tech companies and Japan-based SoftBank.

Scaling intelligence, whether with humans or machines, is a systemic, multi-causal process. Surprising innovations like the introduction of the Transformer and sudden disruptions like the chip supply chain crisis are inevitable. With winners unclear and AI tools constantly in flux, flexibility, curiosity and, perhaps most importantly, a critical eye are essential guideposts for navigating AI.