Many people are tapping into generative AI tools to speed up work, help their teams accomplish more, cut out drudgery, improve accuracy and process vast stores of data to improve decisions. But how do you know the AI is speeding things up and not making things up?

AI is notorious for getting things wrong: making up facts and misreading data and prompts. There have been many examples, some of them disastrous, of AI confidently getting it wrong and doing significant business damage. Even small mistakes and unwanted outcomes undermine the gains you’re after.

It isn’t smart to rely on AI without also having a system for checking its work. What metrics should you use to do that? Here are a few tips.

Is AI Improving Productivity?

Many people, in a wide range of industries, use generative AI to improve productivity — and lift boring work from their plates. In one experiment, HBR found that those who used genAI to assist with HR functions (prepare emails, analyses, presentations and reports) saw a 29% time savings, freeing up around two hours per week. On top of that productivity boost, those who used genAI tools saw 17% higher enjoyment at work and 12% higher effectiveness.

AI can automate repetitive tasks like pulling data from documents, answering common questions, debugging code, summarizing meeting notes and other documents and otherwise doing work that is easy to define as well as slow, boring and repetitious for humans.

How to Measure Productivity Gains

To get a true sense of productivity gains, compare how long AI (or human workers using AI) takes to complete a task with how long it takes a person without AI to do it. In most cases, the AI-assisted approach should be faster.
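
As a rough illustration, that comparison can be reduced to a single percentage. The Python sketch below assumes you have logged how many minutes the same kind of task takes with and without AI. The function name `time_savings` and the sample timings are hypothetical, not figures from any company mentioned here.

```python
from statistics import mean


def time_savings(baseline_minutes, assisted_minutes):
    """Return the fraction of time saved by the AI-assisted workflow."""
    baseline = mean(baseline_minutes)
    assisted = mean(assisted_minutes)
    if baseline <= 0:
        raise ValueError("baseline time must be positive")
    return (baseline - assisted) / baseline


# Hypothetical timings (minutes per task), for illustration only.
without_ai = [42, 38, 45, 40]
with_ai = [30, 28, 33, 29]

savings = time_savings(without_ai, with_ai)
print(f"Time saved: {savings:.1%}")  # prints: Time saved: 27.3%
```

A negative result would mean the AI-assisted workflow is slower than the baseline, which is worth knowing before you scale it up.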

Manuj Aggarwal, founder and CIO of TetraNoodle Technologies, said he uses generative AI to speed data entry and digitize paper-based processing. The AI is completing work, making decisions and automating workflows. To check its work, he looks at a few simple things.

- Are tasks getting done faster?

- Is the output accurate?

- Are people (employees and customers) having a better experience?

Aggarwal said he tracks both human feedback and hard numbers to measure these things. “If those three things are improving, the AI is doing its job.”

If AI is doing the job faster but introducing errors, then it's not the win you're looking for.

“These metrics show us what matters,” said Aggarwal. “Are we saving time? Are we keeping quality high? Are people happy using it? If the AI speeds things up but frustrates people, it’s not worth it.”
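
One way to keep all three questions in view is a simple before-and-after scorecard. The Python sketch below is an assumption about how such a check might look, not Aggarwal's actual system. The `Snapshot` fields, the function name and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    avg_task_minutes: float  # lower is better
    accuracy: float          # share of outputs judged correct, 0 to 1
    satisfaction: float      # e.g. average survey score, higher is better


def ai_is_doing_its_job(before: Snapshot, after: Snapshot) -> dict:
    """Compare two snapshots on speed, accuracy and experience."""
    checks = {
        "faster": after.avg_task_minutes < before.avg_task_minutes,
        "accurate": after.accuracy >= before.accuracy,
        "better_experience": after.satisfaction >= before.satisfaction,
    }
    # The rollout only counts as a win if every check passes.
    checks["overall"] = all(checks.values())
    return checks


# Hypothetical numbers: faster and accurate, but people like it less.
before = Snapshot(avg_task_minutes=40, accuracy=0.96, satisfaction=4.1)
after = Snapshot(avg_task_minutes=28, accuracy=0.97, satisfaction=3.6)
print(ai_is_doing_its_job(before, after))
```

In this made-up example the tool saves time and holds accuracy, yet the overall check fails because satisfaction dropped.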

Is AI Improving (or Maintaining) Quality?

Sure, AI might be able to get work done fast. But is it keeping up the quality that your company (or customers) expect? That's a different matter.

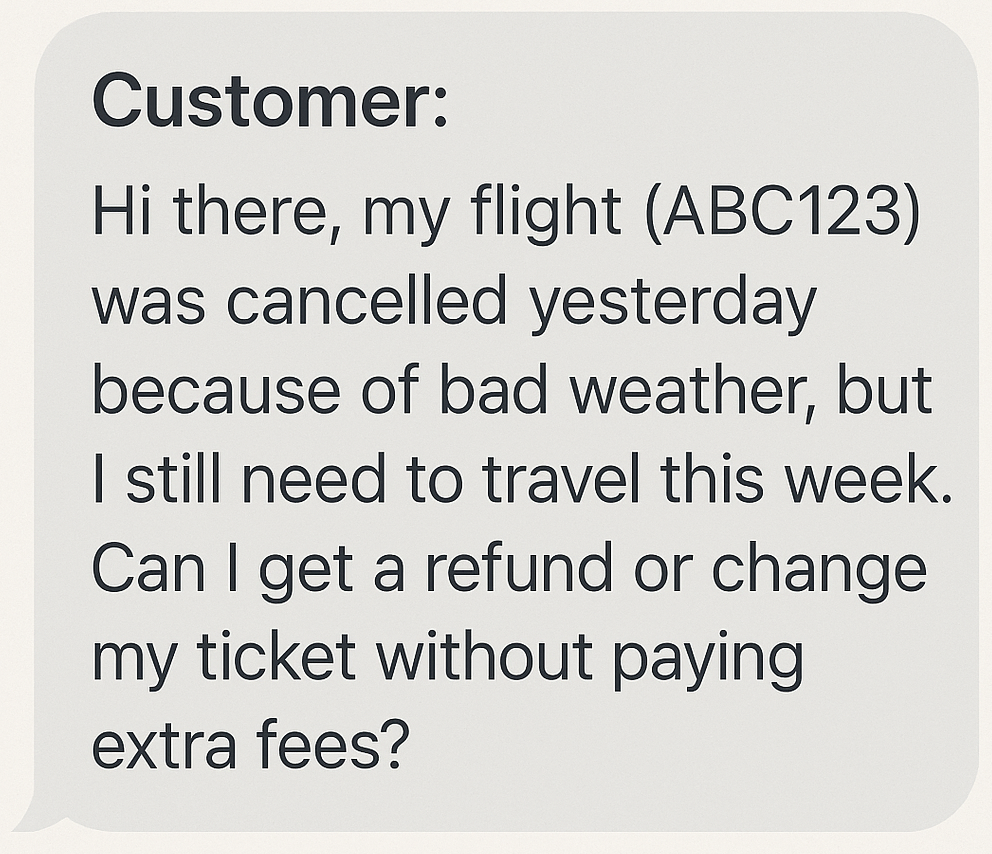

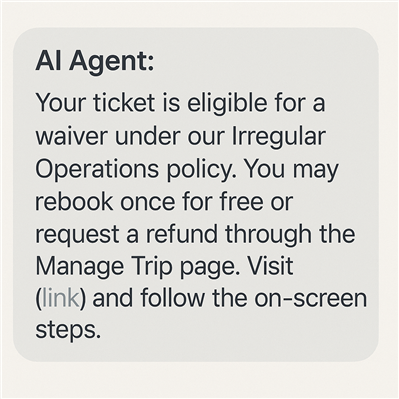

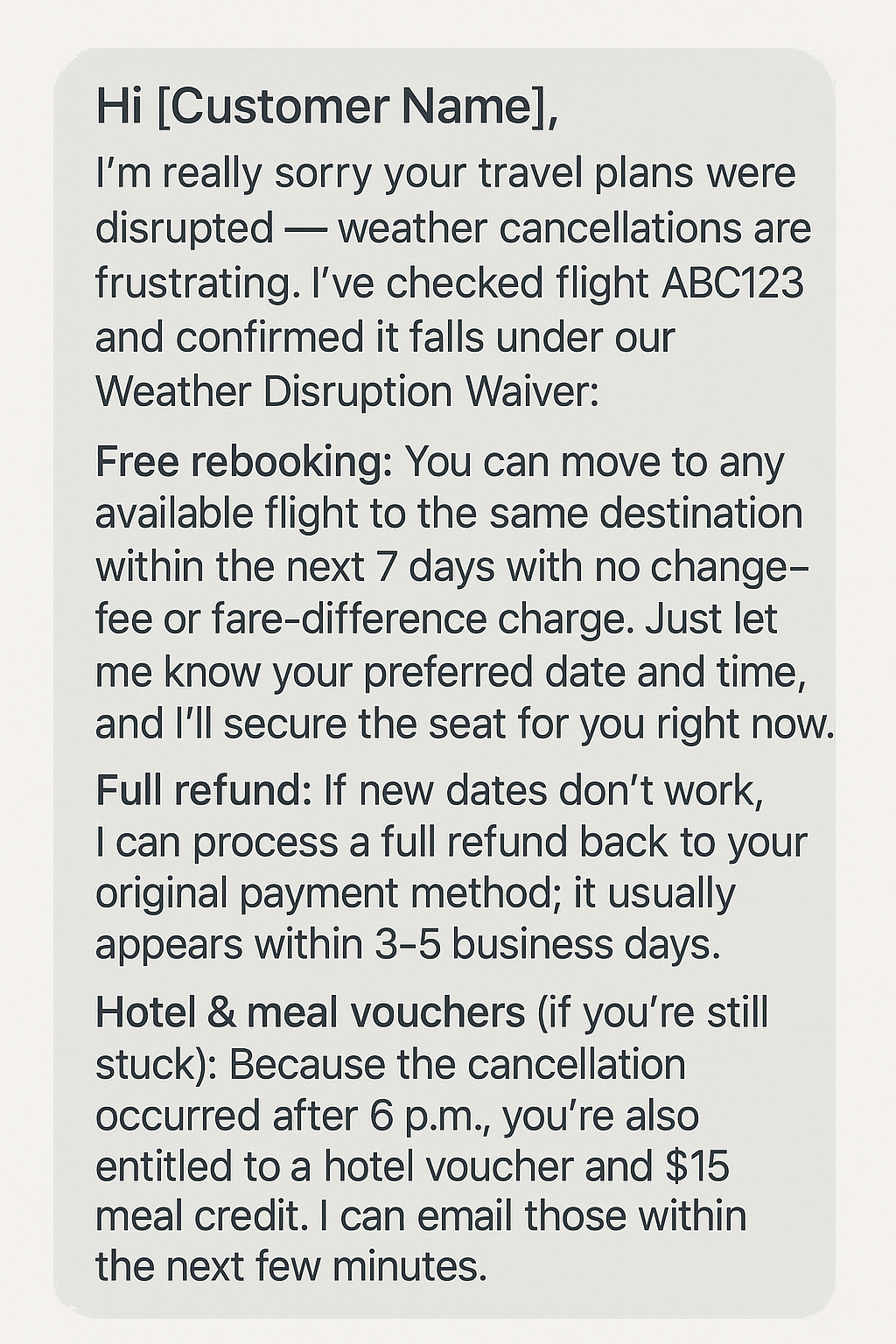

For certain tasks, such as creating content, writing code or answering people's questions, measuring quality is paramount. Consider the difference between the two responses below to a customer query:

Customer Query

AI Agent Response

Human Agent Response

In this case, the human agent empathizes with the customer and takes on the burden of rebooking or refunding the money. The agent also offers additional information on hotel and meal vouchers in the event the person is stuck in a location where they don't live, information the customer may not have found on their own.

The AI answer, while still technically correct, lacks that emotional connection and requires the customer to take further steps, which may be frustrating or confusing.

How to Measure Quality

In the case of quality, you'll need a more nuanced measurement. For instance, you could:

- Check if the coding output does what you wanted it to do

- Poll customers on how well their questions were handled

- Track how often — and why — customers left a chatbot looking for human assistance

- Track engagement on content

- Compare AI-generated outputs against human-generated ones using predefined quality benchmarks (grammar, coherence, brand style, etc.)
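
To make one of these concrete, here is a minimal Python sketch of tracking how often, and why, customers leave a chatbot for a human. It assumes each chat session is logged as a dictionary with an `escalated` flag and an optional `reason`. Those field names and the sample sessions are hypothetical.

```python
from collections import Counter


def escalation_report(sessions):
    """Return the escalation rate and the reasons, most common first."""
    escalated = [s for s in sessions if s["escalated"]]
    rate = len(escalated) / len(sessions) if sessions else 0.0
    reasons = Counter(s.get("reason", "unknown") for s in escalated)
    return rate, reasons.most_common()


# Hypothetical session log, for illustration only.
sessions = [
    {"escalated": False},
    {"escalated": True, "reason": "wrong answer"},
    {"escalated": False},
    {"escalated": True, "reason": "refund request"},
    {"escalated": True, "reason": "wrong answer"},
    {"escalated": False},
]

rate, reasons = escalation_report(sessions)
print(f"Escalation rate: {rate:.0%}")  # prints: Escalation rate: 50%
print(reasons)
```

The rate tells you how often the bot falls short. The reasons tell you what to fix first.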

Dr. Sam Zand, founder of the Anywhere Clinic, said he uses an AI therapy companion that allows customers to explore their emotions with the help of guided prompts. The customers know they are engaging with AI, and the clinic uses metrics to track how well the process goes.

“We track engagement frequency, emotional state transition and team productivity metrics,” he explained. “Our most telling indicator is emotional trajectory mapping — watching how a patient’s emotional tone shifts from the beginning to the end of an AI session. In therapy, those micro-shifts matter more than clicks or conversions.”
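
The clinic's method is not described in detail, so the following Python sketch is only one possible reading of the idea of comparing tone at the start and end of a session. It assumes every message has already been given a tone score between -1 (very negative) and 1 (very positive) by a tool of your choosing. The function `tone_shift`, the `window` parameter and the scores are hypothetical.

```python
from statistics import mean


def tone_shift(scores, window=2):
    """Average tone of the last `window` messages minus the first `window`."""
    if len(scores) < 2 * window:
        raise ValueError("session too short for the chosen window")
    return mean(scores[-window:]) - mean(scores[:window])


# Hypothetical per-message tone scores for one session.
session_scores = [-0.6, -0.4, -0.1, 0.2, 0.3]
print(round(tone_shift(session_scores), 2))
```

A positive value suggests the person ended the session in a better place than they started. A negative value is a signal for a human to take a closer look.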

Is AI Offering Desired Outcomes?

Some applications of AI test the limits of the technology by creating AI personalities or bots that do work you can’t hire enough humans to handle: providing technical support, welcoming visitors to a website and answering their questions, or helping customers navigate the use of a product.

In these cases, you have to trust the AI to interpret your data and quickly translate FAQs, policies and manuals into answers. This can work well. But things can go very wrong if the AI starts hallucinating.

One such customer service chatbot recently created big problems for code editing company Cursor when it made up a device-limit policy in response to a customer’s question. When the customer switched devices and was logged out of the tool, they reached out to tech support. The tech support chatbot confidently informed the customer that this was a new policy. The customer was furious, cancelled their account and got loud on social media. That led to many other customers also cancelling. But it wasn’t a new policy. The AI had hallucinated it.

In another incident, a customer reached out to Air Canada's chatbot to see if the airline offered bereavement fares following the death of his grandmother. The bot told the customer of a fare discount, which it said was claimable up to 90 days after flying. The catch? No such discount existed. While Air Canada initially tried to dodge any blame (or refunds), it was eventually ordered to follow through on the chatbot's claims.

How to Measure Desired Outcomes

Catching this sort of problem is difficult. Cursor changed its approach by telling customers who interact with its AI that they are talking to an AI that might not always be right. But this is an area that is still challenging for many companies.

In the case of Air Canada, they had to follow through on the claims the bot made to a customer, even though that claim was incorrect. This case could set a precedent as interactions between branded bots and customers continue to increase.

Ultimately, the only way to measure whether AI is achieving desired (and accurate) outcomes is through human-in-the-loop strategies. Organizations must put AI governance structures in place, which often starts with mapping out all the ways AI is used within the company. Good governance also prioritizes transparency, ensuring that every decision an AI tool makes is explainable and auditable.
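
A human-in-the-loop check does not have to cover every answer. The Python sketch below shows one simple approach, offered as an assumption rather than a standard: pull a random sample of AI responses for human review, then compute the share reviewers marked as wrong. The function names and the response IDs are hypothetical.

```python
import random


def sample_for_review(response_ids, sample_size, seed=None):
    """Pick a random subset of AI responses for a human to audit."""
    rng = random.Random(seed)
    k = min(sample_size, len(response_ids))
    return rng.sample(response_ids, k)


def audited_error_rate(verdicts):
    """verdicts maps response ID to True if the reviewer judged it correct."""
    if not verdicts:
        raise ValueError("no reviewed responses")
    wrong = sum(1 for ok in verdicts.values() if not ok)
    return wrong / len(verdicts)


# Hypothetical IDs for 200 chatbot responses; audit 20 of them.
all_ids = [f"resp-{n}" for n in range(200)]
to_review = sample_for_review(all_ids, sample_size=20, seed=7)

# Hypothetical reviewer verdicts: the first two sampled answers were wrong.
verdicts = {rid: i >= 2 for i, rid in enumerate(to_review)}
print(f"Audited error rate: {audited_error_rate(verdicts):.0%}")  # prints: Audited error rate: 10%
```

Keeping the sampled IDs and verdicts on file also gives you the audit trail that governance reviews tend to ask for.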

How Is AI Impacting the Business?

At some point, you should step back and take a bird’s-eye view of how generative AI is impacting your high-level business metrics.

Are you saving money or spending it? How long will it take to recoup your investments? In some cases where AI is used to analyze a lot of data to optimize things like routing, supply chain, personalized marketing or future product demand, implementation can be costly. If the cost translates into growth, it was worth it. But you will only know if you track key metrics.
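
The payback question is simple arithmetic once you have the numbers. This Python sketch assumes a one-time implementation cost, a steady monthly benefit (savings or added revenue) and a steady monthly running cost. Real projects rarely behave this neatly, and the function name and figures are hypothetical.

```python
def payback_months(upfront_cost, monthly_benefit, monthly_running_cost):
    """Months needed to recoup the upfront cost, or None if it never pays back."""
    net_monthly = monthly_benefit - monthly_running_cost
    if net_monthly <= 0:
        return None  # the tool costs at least as much as it returns
    return upfront_cost / net_monthly


# Hypothetical figures, for illustration only.
months = payback_months(
    upfront_cost=120_000,
    monthly_benefit=15_000,
    monthly_running_cost=5_000,
)
print(months)  # prints: 12.0
```

A result of `None` means the running costs cancel out the benefit, so the investment is never recouped under these assumptions.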

How to Measure Business Impact

If you want an overview of AI's impact on your organization, you must keep an eye on metrics like:

- Sales growth

- Customer retention

- Upselling rates

- Net promoter score

- Customer satisfaction

- Inventory turnover

- Operation cost reductions

Similarly, organizational leaders might ask themselves:

- Are the AI tools delivering outputs that support our specific business objectives or KPIs?

- Do the outputs improve a process we’ve clearly defined as a priority?

- Are the tools generating insights or actions that are actually being used by the business?

- How often do the AI-generated results lead to decisions or outcomes we value (e.g., increased efficiency, reduced churn, improved personalization)?
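
Of the metrics listed above, net promoter score is one of the easiest to compute yourself. By its standard definition, respondents rate how likely they are to recommend you on a 0 to 10 scale. Those answering 9 or 10 are promoters, those answering 0 to 6 are detractors, and the score is the percentage of promoters minus the percentage of detractors. The Python sketch below uses hypothetical survey ratings. Comparing the score before and after an AI rollout is one way to see whether customers noticed a change.

```python
def net_promoter_score(ratings):
    """Percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not ratings:
        raise ValueError("no survey ratings provided")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey responses on a 0 to 10 scale.
ratings = [10, 9, 8, 7, 6, 3, 10, 9, 5, 8]
print(net_promoter_score(ratings))  # prints: 10.0
```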

Is the AI Tool Exhibiting Bias?

Bias can be a serious problem with AI, since the models amplify any human bias in their training data — and human bias is persistent and widespread. Bias can also happen when AI is trained on the wrong type of data for the required outcome. This can have serious implications for your business, so finding metrics to track this problem is important.

In one Stanford study on predicting patient risk for certain conditions, researchers found that the algorithm performed less accurately for minority populations. David Priede, a VKTR contributor involved in this project, said it became clear that the data they were using to train the model was not fully representative, leaving a gap to be filled.

In another study, researchers found that AI algorithms used to predict hospital readmission rates were often trained on data in which minority groups were underrepresented. The result? These algorithms underestimated the risk of readmission for these groups and, in turn, affected the treatment of patients with a higher risk of readmission.

How to Measure Bias

When it comes to custom-built AI tools, the first step is to be careful about the data you choose for training. In out-of-the-box solutions, like ChatGPT, you have no control over the training data. That means you need to monitor AI outputs with bias in mind. This could involve scanning AI decisions and outputs regularly to identify inequities that seem out of sync.

To monitor and mitigate bias in AI outputs, companies can:

- Establish ground truth benchmarks (compare AI outputs against verified datasets or expert human judgments)

- Segment performance by demographic groups and evaluate performance by certain attributes

- Use fairness auditing tools like IBM AI Fairness 360, Google’s What-If Tool, Microsoft Fairlearn or others

- Perform bias stress tests, running simulations or scenarios where potential bias is likely

- Track human override rates, or how often human reviewers reject or adjust AI outputs
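
Two of the checks above, segmenting performance by group and tracking human overrides, need nothing more than a log of decisions. The Python sketch below assumes each record holds the AI's prediction, the verified label, a group attribute and whether a reviewer overrode the output. The field names and data are hypothetical, and dedicated fairness toolkits offer far more thorough metrics.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Share of correct predictions for each group."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for r in records:
        totals[r["group"]] += 1
        correct[r["group"]] += int(r["prediction"] == r["label"])
    return {group: correct[group] / totals[group] for group in totals}


def override_rate(records):
    """Share of AI outputs that a human reviewer rejected or adjusted."""
    if not records:
        raise ValueError("no records provided")
    return sum(1 for r in records if r["overridden"]) / len(records)


# Hypothetical decision log, for illustration only.
records = [
    {"group": "A", "prediction": 1, "label": 1, "overridden": False},
    {"group": "A", "prediction": 0, "label": 0, "overridden": False},
    {"group": "A", "prediction": 1, "label": 1, "overridden": False},
    {"group": "A", "prediction": 0, "label": 0, "overridden": True},
    {"group": "B", "prediction": 0, "label": 1, "overridden": True},
    {"group": "B", "prediction": 1, "label": 1, "overridden": False},
    {"group": "B", "prediction": 0, "label": 1, "overridden": True},
    {"group": "B", "prediction": 0, "label": 0, "overridden": False},
]

per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group)               # prints: {'A': 1.0, 'B': 0.5}
print(gap)                     # prints: 0.5
print(override_rate(records))  # prints: 0.375
```

A large accuracy gap between groups, or an override rate that climbs over time, is a prompt to look more closely at the training data or the tool itself.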

“When using GenAI, you need to be aware of the changing bias of these kinds of models,” explained Antony Cousins, a strategic advisor specializing in AI. Cousins does media monitoring and social media listening, work in which fluctuations in bias would compromise his data.

“They're mostly a black box with hidden and changeable system prompts,” he explained. “This makes GenAI not ideal for some uses.”

Determine Your Goals First, Choose AI Metrics Second

Choosing the right metrics for your AI use is an important consideration. It helps to first be clear about why you are using AI. Once you have that answer, you can better determine whether an AI tool is the right solution for accomplishing that goal.