I poured nearly a decade of my life into earning a PhD in science with specializations in AI and healthcare at Stanford. Thousands of hours reading dense papers, designing experiments, analyzing complex data, writing, rewriting, defending. It was the pinnacle of specialized human intellect in my field.

Now? I watch AI systems — not just the familiar ChatGPT or Claude, but next-generation platforms like Sakana AI's The AI Scientist-v2 — replicate sophisticated research tasks in hours.

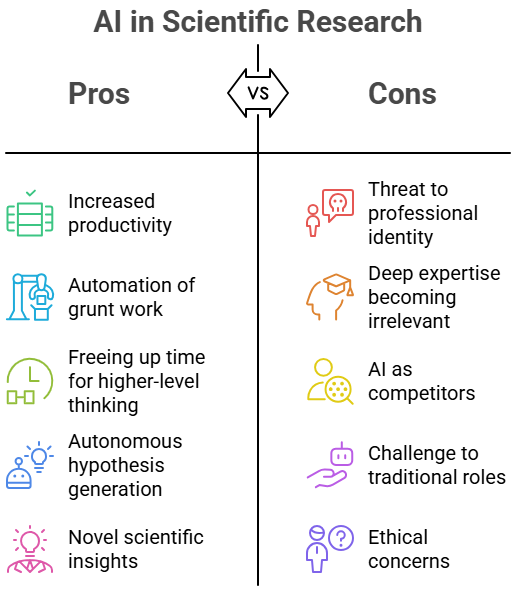

Let’s be blunt: Is the deep expertise I, and millions like me, painstakingly acquired becoming… irrelevant?

Most Leaders Are Underestimating This Shift. They Are Wrong.

Let’s not mince words. This isn’t some distant sci-fi fantasy. This is happening now. As someone who has built a career on deep knowledge and analytical rigor, the rapid ascent of AI capabilities triggers a visceral reaction. It's more than just intellectual curiosity; it’s a fundamental challenge to my professional identity, and likely yours too, even if you haven't articulated it yet.

For years, I’ve used AI tools. ChatGPT for drafting emails or summarizing documents. Claude for brainstorming. Gemini for exploring different perspectives. Consensus and Scholar AI to do the heavy lifting of initial academic research. Lately, DeepSeek and specialized tools like Manus for literature searches and data processing. They’ve been valuable assistants, automating grunt work and freeing up time for higher-level thinking. They made me more productive, sure. But they never felt like… peers. They executed tasks I defined; they didn’t originate groundbreaking thought in the way a seasoned researcher does.

Then came the whispers, and now the demonstrations, of systems like The AI Scientist-v2 from Sakana AI. This isn't just about processing language or recognizing patterns anymore. We're talking about AI systems designed to autonomously hypothesize, design experiments, interpret results, and discover novel scientific insights.

"AI Scientist-v2 adopts a significantly more flexible and exploratory approach by integrating tree search with LLM-workflows. This “Agentic Tree Search” enables deeper, more systematic exploration of scientific hypotheses by harnessing principles from the field of open-endedness."

Sakana AI (@SakanaAILabs), April 8, 2025

Think about that. The core loop of scientific discovery — the very engine of innovation that PhDs are trained to master — is being replicated in silicon.
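To make the idea of tree search over hypotheses concrete, here is a minimal sketch of a best-first search. It is not Sakana AI's implementation. The functions `propose_refinements` and `run_experiment` are hypothetical stand-ins for an LLM call and an experiment run, and the scoring rule is a toy chosen only so the example runs on its own.

```python
import heapq
import itertools


def propose_refinements(hypothesis: str) -> list[str]:
    """Hypothetical stand-in for an LLM call that proposes follow-up ideas."""
    return [f"{hypothesis} + control", f"{hypothesis} + larger sample"]


def run_experiment(hypothesis: str) -> float:
    """Hypothetical stand-in for running an experiment and scoring the result.

    Toy rule (an assumption): each added control is worth 2 points,
    each larger sample is worth 1 point.
    """
    return 2.0 * hypothesis.count("control") + 1.0 * hypothesis.count("larger sample")


def tree_search(root: str, max_expansions: int = 5, max_depth: int = 3) -> tuple[str, float]:
    """Best-first search: always look next at the most promising hypothesis."""
    counter = itertools.count()  # unique tie-breaker so the heap never compares strings
    root_score = run_experiment(root)
    # heapq is a min-heap, so scores are negated to pop the highest score first.
    frontier = [(-root_score, next(counter), root, 0)]
    best = (root, root_score)

    for _ in range(max_expansions):
        if not frontier:
            break
        _, _, hypothesis, depth = heapq.heappop(frontier)
        if depth >= max_depth:
            continue  # depth limit reached; this step of the budget is still spent
        for child in propose_refinements(hypothesis):
            score = run_experiment(child)
            if score > best[1]:
                best = (child, score)
            heapq.heappush(frontier, (-score, next(counter), child, depth + 1))

    return best
```

Calling `tree_search("baseline")` returns the highest-scoring hypothesis found within the budget, together with its score. In a real system the two stub functions would be the expensive parts, which is why the search spends its limited budget on the most promising branches first.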

The Ghost in the Machine Isn't Just Typing Anymore

My initial interactions with tools like ChatGPT were impressive, sometimes startlingly so. Ask it to summarize the latest findings on CAR-T cell therapy toxicity? It provides a decent overview in seconds — a task that might take me an hour of skimming recent publications. Ask Claude to draft a research proposal outline based on specific parameters? It generates a logical structure I can then refine.

These tools democratize access to information synthesis and structured writing. They lower the barrier to entry for tasks that once required years of training and work.

But there was always a ceiling. They were synthesizing existing knowledge. They excelled at recombination, but not genuine origination. They could structure an argument, but the novel spark, the unexpected connection, the truly counter-intuitive hypothesis — that remained firmly in the human domain. Didn't it?

Real-World Example: Take drug discovery. Early AI tools could screen millions of known compounds against a target protein far faster than humans, identifying potential candidates based on existing data and known chemical properties. This accelerated the initial pipeline but relied heavily on human scientists to design the screening strategy, interpret ambiguous results and decide which candidates merited expensive lab validation. It was human-led, AI-assisted.

Fact: AI is already impacting research productivity. A 2023 study published in Nature found that AI tools could accelerate the writing process for scientific literature reviews by identifying relevant papers and synthesizing information, though human oversight remained essential for quality and interpretation.

Enter the AI Scientist: A Qualitative Leap?

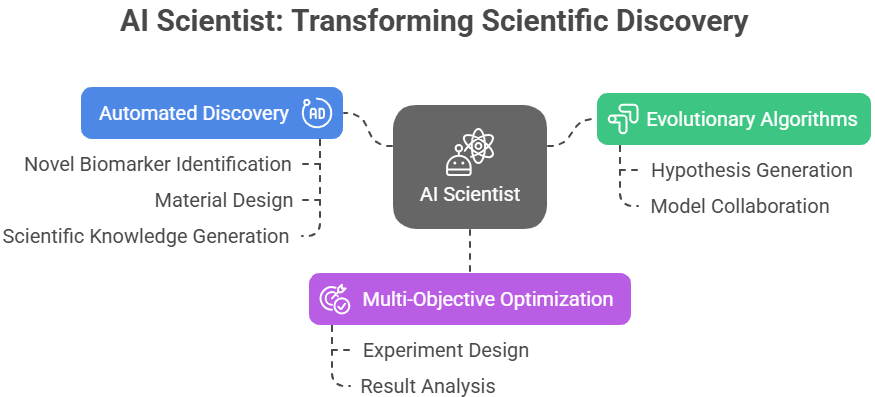

Sakana AI's work, particularly the concept of an "AI Scientist," represents a paradigm shift. Based on principles like evolutionary algorithms and multi-objective optimization, these systems aim not just to process information but to generate new scientific knowledge. They are designed to explore vast possibility spaces, identify promising research avenues humans might miss and even propose experimental designs to test their own hypotheses.

Imagine an AI sifting through genomic data, clinical trial results and molecular pathway information, not just looking for correlations we tell it to find, but independently proposing a novel biomarker for early cancer detection, along with a suggested validation study. Imagine it designing a new material with specific properties by simulating interactions at the atomic level, iterating far faster than any human team could.

This is where the knot in my stomach tightens. This isn't just automation; it feels like the dawn of automated discovery. It strikes at the heart of what many PhDs, scientists and deep experts believe is their unique value proposition: the ability to ask the right questions, formulate novel hypotheses and design the path to new knowledge.

Conceptual Example (based on Sakana AI's direction): Specific results from AI Scientist-v2 are still emerging, but Sakana AI's foundational work involves "evolutionary model merging," creating diverse AI models that can collaborate or compete to solve complex problems. Applied to science, this could mean one AI model proposes hypotheses, another designs experiments and a third analyzes simulated results, with all three evolving together towards a scientific breakthrough. Such a process would mirror, and potentially outpace, human research teams.

Fact: Sakana AI, founded by prominent researchers David Ha and Llion Jones (an author of the seminal "Attention Is All You Need" paper that introduced the Transformer architecture powering many modern AIs), explicitly aims to create AI through nature-inspired approaches like evolution and collective intelligence, moving beyond monolithic large language models.

Redefining Expertise: From Sole Proprietor to Chief Collaborator

So, are PhDs and other deep experts doomed to obsolescence? Is my fear justified? Yes, and no.

The fear is real, but the conclusion of obsolescence is, I believe, premature and unimaginative. It succumbs to the flawed, bureaucratic view that value lies solely in executing known procedures, even highly complex ones.

The revolution isn't about replacement; it's about redefinition. AI, even an AI Scientist, is a tool — albeit an extraordinarily powerful one. It operates on data and algorithms. It lacks lived experience, genuine curiosity driven by wonder (not just optimization), ethical intuition and the ability to navigate the complex, often messy, human and organizational dynamics inherent in any real-world application of knowledge.

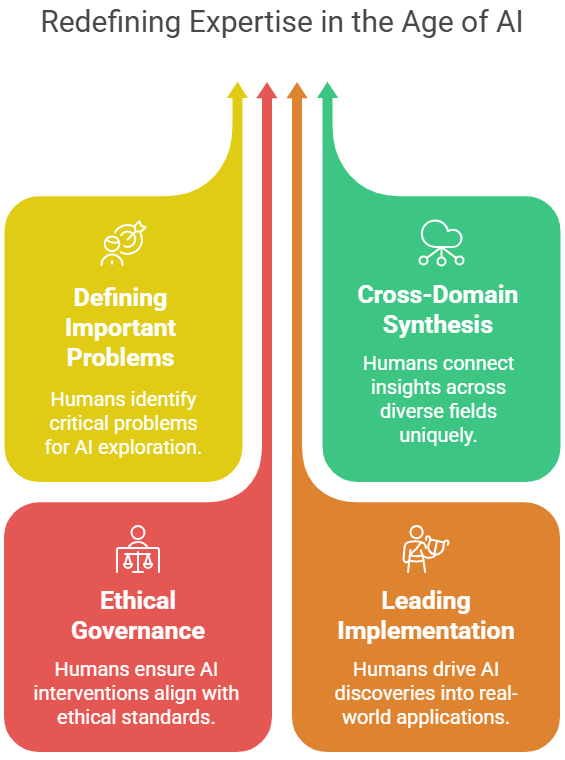

Our value shifts. It moves from being the sole engine of discovery to being the strategist, the ethicist, the integrator and the human interface.

- We define the problems that matter. AI can explore solutions, but what societal challenges, what human needs, what ethical boundaries should guide its search? That requires human wisdom and foresight.

- We perform cross-domain synthesis. AI might go deep in one area, but connecting insights from disparate fields — blending healthcare knowledge with behavioral economics and systems thinking, for instance — remains a uniquely human strength.

- We are the ethical governors. As AI proposes novel interventions or discoveries, who decides if they are safe, fair and aligned with human values? That cannot be outsourced to an algorithm.

- We lead the implementation. Getting a brilliant discovery out of the lab and into the real world requires navigating regulation, securing funding, building teams and persuading stakeholders — tasks demanding human leadership and emotional intelligence.

The PhD, or any deep expertise, isn't negated. It becomes the foundation upon which these new, essential skills are built. We need to become expert collaborators with AI, masters of leveraging these tools to amplify our own capabilities, not simply guardians of tasks they can now perform.

Real-World Example: Google DeepMind's AlphaFold accelerated the prediction of protein structures, a task fundamental to biology and medicine that previously took years of lab work. Demis Hassabis and John Jumper of Google DeepMind shared half of the 2024 Nobel Prize in Chemistry for this work on protein structure prediction, and the other half went to David Baker of the University of Washington for computational protein design. AlphaFold didn't put structural biologists out of work. Instead, it freed them to ask new biological questions, design experiments based on accurate structural predictions and accelerate research into diseases and drug development, working with AlphaFold's outputs.

Dismantling Bureaucracy: The Real Barrier to the AI Future

The biggest threat isn't AI itself; it's our rigid, hierarchical, bureaucratic organizations that prevent us from adapting. Most companies are structured for predictable efficiency, not radical co-creation with intelligent machines. They reward narrow specialization and adherence to process, precisely the things AI is becoming adept at handling.

If we cling to outdated job descriptions, siloed departments and risk-averse cultures, then yes, AI will feel like a replacement threat because we haven't created the space for humans to evolve into higher-order roles.

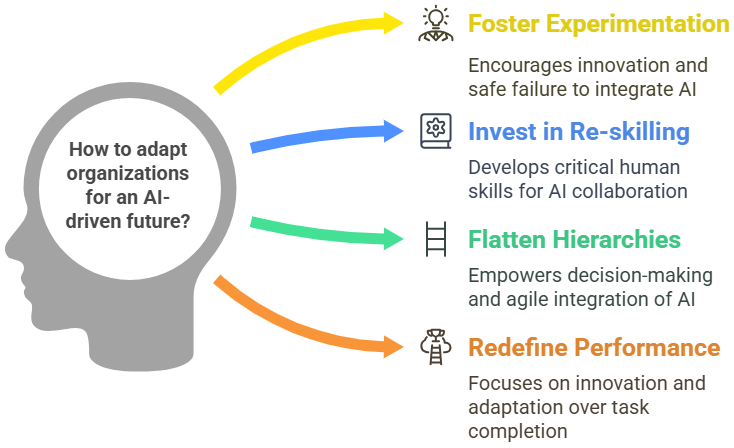

Leaders, your challenge isn't just acquiring AI technology. It's dismantling the managerial orthodoxies that stifle human ingenuity and adaptability. You need to:

- Foster relentless experimentation: Create psychological safety for people to try using AI in new ways, even if it means failing sometimes.

- Invest in continuous re-skilling, not just up-skilling: Focus on critical thinking, ethical reasoning, creative problem-framing and AI collaboration skills.

- Flatten hierarchies: Empower individuals and teams closer to the action to integrate AI into their workflows and make decisions.

- Redefine performance: Measure value based on innovation, adaptation and effective human-AI collaboration, not just task completion.

The Real Test of Leadership Starts Now

The arrival of systems like The AI Scientist isn't an endpoint; it's a starting gun for a new race. A race to redefine expertise, cultivate uniquely human skills and build organizations that are as dynamic, adaptive and creative as the technologies we create.

My PhD isn't obsolete, but my definition of what it means to be an expert must evolve, radically and rapidly. The fear of replacement is a potent signal that we — as individuals and as leaders — need to step up, challenge our assumptions and actively shape a future where human ingenuity directs artificial intelligence, not the other way around. The revolution is here. Are you ready to lead it, or will you be managed by it?
