Every enterprise facing a GenAI initiative eventually arrives at the same question: Should metadata be created by humans or AI?

It's the wrong question.

The organizations asking it are trapped in a false dichotomy. They assume they must choose between the accuracy of human judgment and the speed of machine processing. Meanwhile, their content backlog grows and their AI initiative stalls waiting for "clean data."

The answer isn't human or AI. It's human and AI, but in the right combination, for the right tasks and with the right feedback loops.

Pure manual doesn't scale. Pure AI isn't accurate enough. The hybrid model gives you both scale and accuracy.

This isn't a compromise; it’s an optimization that uses the strengths of each approach to produce a system that is better than either one alone. The organizations that figure this out will tag 100,000 documents in the time their competitors spend arguing about methodology.

Table of Contents

- The Spectrum of Automation

- The Task Division Matrix

- The 3-Step Workflow

- The Economic Argument

- The Quality Paradox

- 5 Best Practices for Implementing AI-Assisted Metadata Creation

- Case Study: Healthcare Information Services Company

- The Implementation Path

- The Bottom Line

The Spectrum of Automation

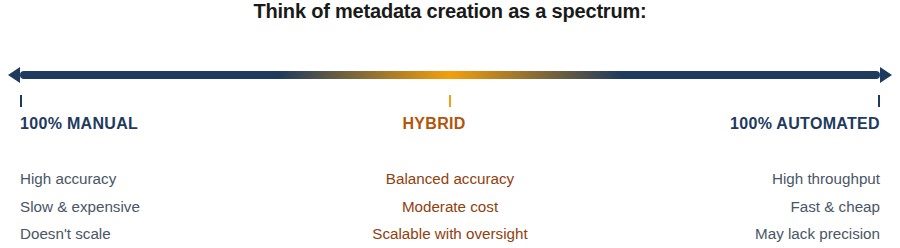

At the manual extreme, humans read every document, apply expert judgment and tag with a nuanced understanding of business context. When humans actually do the work, the quality can be excellent, although humans are notoriously inconsistent taggers because of differing interpretations of content and tags, fatigue, subjective judgment and so on. The bigger problem is that they rarely do it at all. Faced with 100,000 documents, manual tagging becomes a theoretical aspiration rather than an operational reality.

At the automated extreme, AI processes documents at machine speed, applying consistent rules across the entire corpus. The throughput is impressive. But AI makes mistakes humans wouldn't, such as missing context, misclassifying edge cases and applying tags that are technically correct according to the stated rules, but semantically wrong.

Neither extreme works for enterprise GenAI. Manual can't handle the volume. Automated can't handle the nuance. But somewhere along that spectrum is a sweet spot where AI does what it does well and humans do what they do well.

Finding that sweet spot is the game.

Research from the International Data Corporation (IDC) validates this hybrid approach: organizations using AI-assisted metadata creation with human oversight achieve 94% accuracy at scale, compared to 76% for pure automated approaches and 89% for pure manual (when manual is actually completed — which it rarely is at enterprise scale).

The Task Division Matrix

Not all metadata tasks are created equal. Some are perfect for AI, and some require human judgment. Most benefit from collaboration between the two. Here's how to think about the division:

What AI Handles Best

- Basic metadata extraction (title, date, author, format): This is mechanical work. AI extracts it in milliseconds with near-perfect accuracy. Having humans do this is like having accountants do arithmetic by hand — technically possible, economically absurd.

- Initial document classification: AI can categorize documents by type (policy, procedure, specification, FAQ) with 80-90% accuracy. That's not perfect, but it's a starting point that dramatically reduces human workload.

- Bulk tagging suggestions: AI can analyze document content and suggest relevant tags from your taxonomy. It won't get them all right, but it surfaces options humans might not think of, especially for patterns across large document sets.

- Relationship identification: AI excels at finding statistically similar documents. "This troubleshooting guide is related to these five others" is a pattern-matching task that would take humans hours but can be done well by AI in a matter of seconds.

- Compliance flag detection: AI can scan for keywords, phrases and patterns that indicate potential compliance concerns such as personally identifiable information (PII), regulated content and content with legal sensitivities. It catches what humans might skim past in document 4,000.
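
To make compliance flag detection concrete, here is a minimal pattern-based sketch. The rule names and regular expressions are hypothetical illustrations (a US Social Security number format, an email address and a "confidential" marker); real PII detection needs much broader, validated rules and often a trained model. What matters is the shape of the output: each flag carries its location and is handed to a human rather than treated as a verdict.

```python
import re
from dataclasses import dataclass

# Hypothetical rules for illustration only. Production PII detection needs far
# broader, validated patterns and usually a trained model as well.
RULES = {
    "possible_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "confidential_marker": re.compile(r"\bconfidential\b", re.IGNORECASE),
}


@dataclass
class ComplianceFlag:
    rule: str     # which rule fired
    snippet: str  # matched text, shown to the human reviewer
    start: int    # character offset of the match in the document


def scan_for_flags(text: str) -> list[ComplianceFlag]:
    """Return every rule match, ordered by position.

    Flags are candidates for human review, not compliance verdicts.
    """
    flags = [
        ComplianceFlag(name, match.group(0), match.start())
        for name, pattern in RULES.items()
        for match in pattern.finditer(text)
    ]
    return sorted(flags, key=lambda flag: flag.start)


if __name__ == "__main__":
    doc = "CONFIDENTIAL: contact jane.doe@example.com, SSN 123-45-6789."
    print([flag.rule for flag in scan_for_flags(doc)])
    # ['confidential_marker', 'email_address', 'possible_ssn']
```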

What Humans Handle Best

- Audience identification: Who is this content for? Answering that requires understanding organizational context, user personas and business strategy, which AI doesn't possess. A document about "flexible work policy" might serve HR administrators, people managers and all employees differently, and humans understand those distinctions.

- Quality and accuracy judgment: Is this content actually correct? Is it current? Is it the authoritative source or a draft that was never finalized? These assessments require domain expertise and organizational knowledge.

- Strategic tagging decisions: Should this competitive analysis be tagged for Sales only, or should Product also see it? These aren't classification decisions — they're business strategy decisions. Humans are best equipped to operationalize these insights.

- Edge case resolution: Humans are best at dealing with documents that don't fit neatly into any category, or content that uses terminology that does not match the taxonomy. Humans handle ambiguity; AI struggles with it.

How to Apply the Hybrid Approach

- Content classification (document type): The best approach for document type is for AI to suggest, and humans to confirm the classification. AI gets it right 85% of the time on average. Human review catches the 15% that need correction. Together, they achieve accuracy neither could alone.

- Topic tagging: Similarly, AI can generate candidate tags based on content analysis. Humans review and select the best 3-5 candidates, adding any that the AI missed. AI sees patterns that would be difficult for humans to detect, and humans can catch errors that AI makes.

- Relationship validation: AI identifies potentially related documents based on similarity scores. Humans can then validate whether those relationships are actually meaningful. Statistical similarity does not always constitute semantic relevance.

- Compliance validation: AI can watch for red flags that indicate a potential concern, while humans make the final determination. This is risk management; AI casts a wide net, and humans apply judgment.
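
The relationship step can be sketched as follows, assuming a plain bag-of-words cosine similarity as a stand-in for the embedding models production systems typically use, and a hypothetical threshold of 0.3. Pairs above the threshold become suggestions for a human to validate, not accepted relationships.

```python
import math
import re
from collections import Counter
from itertools import combinations


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def candidate_relationships(docs: dict[str, str], threshold: float = 0.3) -> list[tuple[str, str, float]]:
    """Document pairs whose similarity clears the threshold, highest score first.

    A high score means shared vocabulary, not necessarily a meaningful
    relationship, so each pair still goes to a human for validation.
    """
    vectors = {doc_id: bag_of_words(text) for doc_id, text in docs.items()}
    pairs = []
    for (id_a, vec_a), (id_b, vec_b) in combinations(vectors.items(), 2):
        score = cosine(vec_a, vec_b)
        if score >= threshold:
            pairs.append((id_a, id_b, round(score, 3)))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)


if __name__ == "__main__":
    docs = {
        "kb-1": "Reset the router password",
        "kb-2": "How to reset a router password",
        "hr-7": "Annual leave policy for employees",
    }
    print(candidate_relationships(docs))  # [('kb-1', 'kb-2', 0.612)]
```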

The pattern is clear: AI handles volume and consistency. Humans handle judgment and context. The hybrid model combines both.

The 3-Step Workflow

A hybrid workflow should follow a consistent pattern. The specific tools may vary, but the workflow should follow these three steps:

Step 1: AI First Pass (Automated)

AI processes the entire document corpus, performing:

- Metadata extraction: Author, date, format, source system

- Document classification: Categorize by content type (80-90% accuracy)

- Tag generation: Suggest 5-10 relevant tags from the taxonomy

- Relationship mapping: Identify potentially related documents

- Compliance scanning: Flag content requiring human review

Time required: Seconds per document. A corpus of 100,000 documents can be processed within a few days, rather than the years an entirely manual review would require.

Output: Every document has provisional metadata — not perfect, but a foundation to build on.

Step 2: Human Review (Selective)

Humans don't need to review everything. They should review strategically:

- High-stakes content: Anything customer-facing, compliance-sensitive or business-critical gets a human review

- Low-confidence classifications: These are cases for which AI uncertainty scores are below threshold

- Random sample: 5-10% of routine content, spot-checked for quality

- Escalated items: Content that AI flagged as ambiguous or potentially problematic
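
A minimal sketch of this routing logic, assuming each provisional record carries a confidence score plus high-stakes and escalation flags. The field names, the 0.8 threshold and the 5% sample rate are hypothetical placeholders to tune against your own data.

```python
import random
from dataclasses import dataclass


@dataclass
class ProvisionalRecord:
    doc_id: str
    doc_type: str               # AI's provisional classification
    confidence: float           # AI's confidence in that classification, 0.0-1.0
    high_stakes: bool = False   # customer-facing, compliance-sensitive or business-critical
    escalated: bool = False     # AI flagged the content as ambiguous or problematic


def review_reasons(record: ProvisionalRecord, rng: random.Random,
                   threshold: float = 0.8, sample_rate: float = 0.05) -> list[str]:
    """Return the reasons a document needs human review; empty means auto-accept."""
    reasons = []
    if record.high_stakes:
        reasons.append("high_stakes")
    if record.confidence < threshold:
        reasons.append("low_confidence")
    if record.escalated:
        reasons.append("escalated")
    # Only otherwise-routine documents are eligible for the random spot-check.
    if not reasons and rng.random() < sample_rate:
        reasons.append("random_sample")
    return reasons


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the spot-check sample is reproducible
    records = [
        ProvisionalRecord("d1", "policy", 0.97, high_stakes=True),
        ProvisionalRecord("d2", "faq", 0.55),
        ProvisionalRecord("d3", "procedure", 0.91),
    ]
    for record in records:
        print(record.doc_id, review_reasons(record, rng))
```

Returning a list of reasons, rather than a yes/no, keeps a record of why each document was routed, and passing in a seeded `random.Random` makes the spot-check sample reproducible for later audits.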

For each reviewed document, humans:

- Confirm or correct document classification

- Select best tags from AI suggestions (typically 3-5 from 10 candidates)

- Add any missing critical tags

- Validate or dismiss compliance flags

- Approve or reject relationship suggestions

Time required: 1-2 minutes per document. With selective review, humans touch perhaps 10-20% of total content.

Step 3: Feedback Loop (Continuous)

This is where the magic happens — and where most organizations fail.

Track everything:

- Which AI suggestions do reviewers accept, and which do they reject?

- What tags do humans frequently add that AI missed?

- Which document types have the highest error rates for classification or tagging?

- How closely does AI confidence correlate with actual accuracy?
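
Two of these questions (acceptance by document type, and how closely confidence tracks accuracy) reduce to simple aggregations over a review log. This sketch assumes a hypothetical log format in which each entry records the document type, the AI's confidence and whether the reviewer accepted the AI's classification, and it treats reviewer acceptance as the ground truth for accuracy.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ReviewOutcome:
    doc_type: str
    ai_confidence: float  # confidence the AI reported, 0.0-1.0
    accepted: bool        # True if the reviewer kept the AI's classification


def acceptance_by_type(outcomes: list[ReviewOutcome]) -> dict[str, float]:
    """Share of AI classifications that reviewers accepted, per document type."""
    totals: dict[str, int] = defaultdict(int)
    accepted: dict[str, int] = defaultdict(int)
    for outcome in outcomes:
        totals[outcome.doc_type] += 1
        accepted[outcome.doc_type] += int(outcome.accepted)
    return {doc_type: accepted[doc_type] / totals[doc_type] for doc_type in totals}


def calibration(outcomes: list[ReviewOutcome], n_buckets: int = 10) -> dict[float, tuple[float, float]]:
    """Map each confidence bucket's lower edge to (mean confidence, acceptance rate).

    For a well-calibrated model the two numbers are close in every bucket;
    a large gap means the confidence scores should not drive routing as-is.
    """
    buckets: dict[int, list[ReviewOutcome]] = defaultdict(list)
    for outcome in outcomes:
        # Bucket index 0..n_buckets-1; a confidence of exactly 1.0 goes in the top bucket.
        index = min(int(outcome.ai_confidence * n_buckets), n_buckets - 1)
        buckets[index].append(outcome)
    return {
        index / n_buckets: (
            sum(o.ai_confidence for o in items) / len(items),
            sum(o.accepted for o in items) / len(items),
        )
        for index, items in sorted(buckets.items())
    }
```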

Use these metrics to improve metadata outcomes:

- Retrain AI models based on human corrections

- Adjust confidence thresholds based on actual performance

- Identify taxonomy gaps (topics humans tag that aren't in the system)

- Surface systematic errors for process improvement
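
Adjusting confidence thresholds can be made data-driven. The sketch below, a simple illustration rather than a standard method, picks the lowest threshold at which documents at or above it were correct at least `target_accuracy` of the time in human review, so that everything above the threshold could skip review. `min_support` (a hypothetical parameter) guards against thresholds backed by only a handful of reviewed documents.

```python
from typing import Optional


def tune_threshold(reviewed: list[tuple[float, bool]], target_accuracy: float = 0.95,
                   min_support: int = 50) -> Optional[float]:
    """Lowest confidence threshold whose auto-accept slice meets the accuracy target.

    `reviewed` holds (ai_confidence, was_correct) pairs from human review.
    Documents at or above the returned threshold would skip review, so a
    lower threshold means less human effort. Returns None if nothing qualifies.
    """
    for threshold in sorted({confidence for confidence, _ in reviewed}):
        above = [correct for confidence, correct in reviewed if confidence >= threshold]
        if len(above) < min_support:
            break  # higher thresholds only shrink the slice further
        if sum(above) / len(above) >= target_accuracy:
            return threshold
    return None
```

The reviewed set should include the random spot-check sample; low-confidence and high-stakes documents alone give a biased picture of how accurate the AI is at high confidence.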

Result: AI gets better over time. For example, 85% accuracy in month one might become 90% in month six and 93% in month twelve. Human review effort decreases as AI quality increases.

Without the feedback loop, the organization is stuck with a static system that never improves. With it, the system adapts and learns from every human decision.

The Economic Argument

Let's do the math on a representative scenario: 100,000 documents requiring metadata for a GenAI knowledge base.

Pure Manual Approach

- 100,000 documents × 5 minutes each = 8,333 hours

- At $50/hour fully loaded = $416,650

- At 2,000 hours/year per FTE = 4.2 FTE-years

- Timeline: 18-24 months (if you can find and retain the people)

Reality check: This never happens. The project gets scoped down, often because documents take longer than the estimated 5 minutes each to review. The timeline slips, corners get cut and the organization ends up with partial coverage and inconsistent quality. The theoretical cost is $400K+. The actual cost is often higher because of rework, delays and opportunity cost.

AI-Assisted Hybrid Approach

- AI processes 100,000 documents in about 1 week; processing costs depend on the size and complexity of the documents and on the large language model (LLM) used

- Human reviews 10% (10,000 documents) × 2 minutes = 333 hours

- At $50/hour = $16,650

- Timeline: 2-3 months

The multiplier: The human-review cost of the hybrid approach is roughly 4% of the cost of manual review (AI processing costs come on top), while delivering comparable or better quality.
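
The arithmetic above can be reproduced in a few lines, which also makes it easy to vary the assumptions. The 5-minute and 2-minute review times and the $50/hour rate come from the scenario; `ai_cost` is a hypothetical parameter because the scenario gives no figure for LLM processing, so it defaults to zero. Without intermediate rounding, manual review comes to about $416,667 and hybrid review to about $16,667.

```python
def labor_cost(documents: int, minutes_per_doc: float, hourly_rate: float) -> float:
    """Fully loaded human labor cost for handling `documents` items."""
    hours = documents * minutes_per_doc / 60
    return hours * hourly_rate


def hybrid_to_manual_ratio(total_docs: int, review_share: float,
                           manual_minutes: float = 5, review_minutes: float = 2,
                           hourly_rate: float = 50, ai_cost: float = 0.0) -> float:
    """Hybrid cost as a fraction of the all-manual cost.

    `ai_cost` (hypothetical) is the model/compute bill for the AI first pass.
    The scenario gives no figure for it, so it defaults to 0 here.
    """
    manual = labor_cost(total_docs, manual_minutes, hourly_rate)
    reviewed_docs = round(total_docs * review_share)
    hybrid = labor_cost(reviewed_docs, review_minutes, hourly_rate) + ai_cost
    return hybrid / manual


if __name__ == "__main__":
    print(f"manual: ${labor_cost(100_000, 5, 50):,.0f}")  # manual: $416,667
    print(f"hybrid: ${labor_cost(10_000, 2, 50):,.0f}")   # hybrid: $16,667
    print(f"10% reviewed: {hybrid_to_manual_ratio(100_000, 0.10):.0%}")  # 10% reviewed: 4%
    print(f"20% reviewed: {hybrid_to_manual_ratio(100_000, 0.20):.0%}")  # 20% reviewed: 8%
```

The ratio doubles to 8% if humans review 20% of the content, the top of the 10-20% range given in Step 2, and it rises further once a real `ai_cost` is plugged in.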

But cost isn't the only factor. Consider these other advantages of the hybrid approach:

Consistency: AI applies the same rules to document 1 and document 100,000. Humans get tired, distracted and inconsistent. By document 50,000, manual tagging has typically drifted, and its quality has often degraded significantly.

Completion: The hybrid approach actually gets done. The manual approach gets abandoned, scoped down or stretched over years and the content becomes stale.

Speed to value: Two months to a working GenAI system versus two years. The business value of that 22-month acceleration dwarfs the direct cost savings.

The Quality Paradox

It might seem that human-generated metadata would always be better than automated metadata. However, AI-assisted metadata is often better than purely human metadata.

How is that possible?

Consistency: AI never has a bad day. It doesn't skip fields because it's rushing to a meeting. It doesn't interpret taxonomy terms differently from one day to the next. Consistent tagging makes content findable in ways that occasional deeper insight cannot.

Pattern recognition: AI sees connections across 100,000 documents that no human could hold in working memory. It identifies emerging topics, clusters related content and surfaces relationships that humans would miss simply due to cognitive limitations.

Completeness: AI fills every field for every document. Manual approaches inevitably leave gaps — fields skipped, documents missed, backlogs that never get processed. Incomplete metadata is often worse than imperfect metadata.

AI lets humans catch what matters: When humans aren't exhausted from tagging thousands of routine documents, they bring fresh attention to the edge cases, compliance risks and strategic decisions that actually require human judgment.

The hybrid model doesn't just reduce costs. It improves quality by deploying human attention where it creates the most value.

5 Best Practices for Implementing AI-Assisted Metadata Creation

Organizations that succeed with hybrid metadata follow consistent patterns:

1. Let AI Do the Heavy Lifting

Tasks like basic extraction, initial classification and bulk tagging are AI tasks. Don't waste human cognition on work that machines handle better. Every hour a human spends extracting author names from document properties is an hour they're not spending on judgment calls that actually need their expertise to resolve.

2. Focus Human Review on High Stakes

Not all content is equal. A customer-facing FAQ needs more scrutiny than an internal meeting note. A compliance-sensitive policy needs human validation; a routine status report probably doesn't. Define review tiers based on content risk and visibility.

3. Spot-Check, Don't Review Everything

For routine content, review a random 5-10% sample to monitor AI quality. If the sample shows problems, investigate and retrain. If the sample looks good, trust the system. Statistical quality control works for manufacturing; it works for metadata too.
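
One way to apply statistical quality control to a spot-check is to put a confidence interval around the accuracy observed in the sample. This sketch uses the Wilson score interval, a standard interval for a proportion; the example numbers (500 sampled documents, 465 judged correct) are hypothetical. If the lower bound falls below your accuracy target, that is a signal to investigate and retrain.

```python
import math


def wilson_interval(correct: int, sampled: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for accuracy observed in a spot-check sample.

    z = 1.96 gives an approximate 95% interval.
    """
    if sampled <= 0:
        raise ValueError("the spot-check sample is empty")
    p = correct / sampled
    denom = 1 + z * z / sampled
    centre = (p + z * z / (2 * sampled)) / denom
    half_width = z * math.sqrt(p * (1 - p) / sampled + z * z / (4 * sampled * sampled)) / denom
    return centre - half_width, centre + half_width


if __name__ == "__main__":
    low, high = wilson_interval(correct=465, sampled=500)  # hypothetical spot-check
    print(f"observed 93.0%, plausible range {low:.1%} to {high:.1%}")
    # observed 93.0%, plausible range 90.4% to 94.9%
```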

4. Close the Feedback Loop

The value of using feedback to improve the metadata process cannot be overemphasized. Track every change that humans make, then feed the corrections back into AI training. Measure accuracy over time to confirm that it is improving. Adjust confidence thresholds based on data. The feedback loop is what transforms a static tool into an improving system.
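
Tracking the changes humans make can start as a set comparison between the AI's suggested tags and the reviewer's final tags. This sketch, with hypothetical function names and a toy taxonomy, counts tags the AI missed, tags reviewers rejected, and reviewer-added tags that fall outside the taxonomy, which are candidate taxonomy gaps.

```python
from collections import Counter


def tag_changes(ai_tags: set[str], human_tags: set[str]) -> dict[str, set[str]]:
    """Split one review into tags kept, added (AI missed) and removed (AI false positives)."""
    return {
        "kept": ai_tags & human_tags,
        "added": human_tags - ai_tags,
        "removed": ai_tags - human_tags,
    }


def summarize_reviews(reviews: list[tuple[set[str], set[str]]], taxonomy: set[str]) -> dict[str, Counter]:
    """Aggregate (ai_tags, human_tags) pairs across many reviews."""
    missed, rejected, gaps = Counter(), Counter(), Counter()
    for ai_tags, human_tags in reviews:
        change = tag_changes(ai_tags, human_tags)
        missed.update(change["added"])
        rejected.update(change["removed"])
        # Reviewer-added tags outside the taxonomy are candidate taxonomy gaps.
        gaps.update(change["added"] - taxonomy)
    return {"missed_by_ai": missed, "rejected_ai_tags": rejected, "taxonomy_gaps": gaps}


if __name__ == "__main__":
    taxonomy = {"vpn", "network", "security", "hr"}
    reviews = [
        ({"vpn", "hr"}, {"vpn", "network"}),
        ({"network"}, {"network", "zero-trust"}),
        ({"security"}, {"security", "zero-trust"}),
    ]
    summary = summarize_reviews(reviews, taxonomy)
    print(summary["taxonomy_gaps"].most_common())  # [('zero-trust', 2)]
```

The `missed_by_ai` and `rejected_ai_tags` counts are the raw material for retraining, while `taxonomy_gaps` feeds the taxonomy review.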

5. Make Human Review Frictionless

The biggest implementation mistake is to make human review as hard as manual tagging. Support human review with judicious use of AI. Consider the following pointers:

- Don't ask humans to tag from scratch → show AI suggestions and let them approve/modify

- Don't show 50 candidate tags → show the top 5, with option to see more

- Don't make every field required → focus attention on what matters most

- Don't hide AI confidence → let reviewers prioritize low-confidence items
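
Two of these pointers (show only the top suggestions, and let confidence drive prioritization) can be sketched as a small queue-building function. The `ReviewItem` structure and the default of five suggestions are illustrative assumptions, not a particular tool's API.

```python
from dataclasses import dataclass


@dataclass
class ReviewItem:
    doc_id: str
    confidence: float             # AI classification confidence, 0.0-1.0
    tag_scores: dict[str, float]  # every candidate tag -> AI relevance score


def build_queue(items: list[ReviewItem], top_k: int = 5) -> list[tuple[str, float, list[str]]]:
    """Order items lowest-confidence first and pre-select the top-k tag suggestions.

    Reviewers approve or edit the suggestions rather than tagging from scratch;
    the full `tag_scores` stays on each item for a "show more" view.
    """
    queue = []
    for item in sorted(items, key=lambda i: i.confidence):
        ranked = sorted(item.tag_scores, key=lambda tag: item.tag_scores[tag], reverse=True)
        queue.append((item.doc_id, item.confidence, ranked[:top_k]))
    return queue
```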

The goal is to review AI’s work, not to duplicate it. Every friction point in the review interface costs time, quality or both.

Case Study: Healthcare Information Services Company

A healthcare documentation provider needed to tag 180,000 clinical documents for its new GenAI-powered research assistant. Initial estimates for manual tagging were 18 months and $720,000.

Using the hybrid approach:

- AI First Pass: Completed in 9 days, generating metadata suggestions for all documents

- Human Review: 15,000 documents (8.3%) flagged for review based on low confidence scores

- Specialized Review: 3,200 documents with clinical sensitivity received SME validation

- Feedback Loop: Three retraining cycles improved AI accuracy from 82% to 91%

Results:

- Timeline: 11 weeks (vs. 18 months projected for manual)

- Cost: $89,000 (vs. $720,000 for manual)

- Accuracy: 93% (exceeding the 89% historical benchmark for pure manual)

The key insight: AI handled volume while humans handled judgment. Neither could have achieved this result alone.

The Implementation Path

For organizations ready to move from theory to practice:

Phase 1: Pilot (4-6 weeks)

- Select a bounded content set (5,000-10,000 documents)

- Configure AI for basic extraction and classification

- Establish review workflows and train reviewers

- Measure baseline accuracy and time

Phase 2: Calibrate (4-6 weeks)

- Analyze pilot results — where did AI succeed and where did it struggle?

- Adjust classification models based on human corrections

- Tune confidence thresholds for review routing

- Refine the taxonomy based on the gaps identified

Phase 3: Scale (8-12 weeks)

- Extend to full document corpus

- Implement continuous feedback loops

- Establish ongoing quality monitoring

- Transition to steady-state operations

Phase 4: Optimize (Ongoing)

- Conduct regular model retraining on accumulated corrections

- Support taxonomy evolution based on emerging patterns

- Refine processes based on operational data

- Expand to new content types and use cases as they surface

Organizations that treat hybrid metadata as a one-time project miss the point. It's an operational capability that improves over time, but only if you build the feedback mechanisms that enable learning.

The Bottom Line

The debate between manual and automated metadata is a false choice. The question isn't which approach to use — it's how to combine them effectively.

AI handles volume, consistency and pattern recognition. Humans handle judgment, context and edge cases. Together, they achieve what neither can alone: accurate metadata at enterprise scale.

The organizations that figure this out will have AI-ready content while their competitors are still deciding what to do. They'll spend roughly 4% of the human-labor cost of purely manual processing and get better results. They'll deploy human expertise where it matters instead of wasting it on mechanical tasks.

Pure manual doesn't scale. Pure AI isn't accurate enough. The hybrid model is the only approach that actually works for enterprise GenAI.

The question isn't whether to adopt it. The question is how fast you can implement it.

Learn how you can join our contributor community.