Human genius is evolving, but not just in the way headlines suggest.

While debates about AI replacing jobs or outsmarting humans dominate the conversation, a quieter and more urgent question sits beneath the surface: Can we identify and appreciate the human skill, value and intelligence in AI outputs?

Where Human Thought Ends and Machine Input Begins

The boundaries between human thought and machine output are increasingly blurred. From predictive text and smart filters to advanced content generation, AI systems now guide, refine or even initiate the things we write, design and decide. Often, we engage with AI without realizing how it shapes our tone, ideas or decisions.

At the same time, the world is at a crossroads. Some governments, like the US, are actively removing regulatory “barriers” to accelerate innovation, while others are reinforcing ethical safeguards through legislation like the EU AI Act. Industry leaders have raised concerns that aggressive AI chip export controls might stifle innovation and international collaboration, and creative organizations are calling for guardrails that protect human authorship and intellectual property.

In this complex landscape, perhaps a more grounded approach is needed — a third path that aligns innovation with ethics and places human identity at the center of progress. One that neither rejects ambition nor caution, but seeks to harmonize them through meaningful collaboration.

One of the most symbolic moments of this shift came in 2016, when DeepMind's AlphaGo defeated champion Lee Sedol at the ancient game of Go. It wasn't just a win; it disrupted assumptions about creativity, intuition and the limits of machine intelligence. Since then, AI's role in creative, cognitive and decision-making domains has accelerated dramatically.

The implication? We're entering a space where authorship, creativity and responsibility are shared but not always acknowledged. The ethical concern extends beyond determining who made something to a more profound question: How do we identify and protect human creativity in AI-assisted work, especially when the universal definition of "human skill" is still evolving?

Staying stuck in a binary mindset of human-made versus AI-generated risks missing this deeper challenge.

The Double-Edged Sword of AI Support

AI is already supporting human ingenuity. Streaming companies use AI to analyze audience engagement data. In music, artist Holly Herndon uses AI-driven sound synthesis to explore innovative compositions, preserving artistic integrity while expanding her sonic palette. Writers and filmmakers use AI tools to brainstorm plot lines and character arcs while maintaining full narrative control. These examples show how AI can serve as a collaborator by expanding our creativity.

Still, AI-generated content can blur authorship and ownership lines, raising questions around compensation, credit and artistic recognition. Ethical AI adoption must prioritize human creativity and agency to ensure innovation strengthens human excellence.

Beyond creative industries, AI is advancing scientific research, legal review and healthcare. AlphaFold, developed by DeepMind, has solved critical protein structure challenges, revolutionizing molecular biology and accelerating drug development, while scientists continue to lead hypothesis generation and analysis. In law, AI-powered contract analysis tools reduce review time and detect anomalies, but human lawyers must interpret nuance, legal risk, and intent.

The Australian Government’s Select Committee on Workforce Australia recently warned that workers may feel pressured to over-rely on AI due to unrealistic expectations or lack of support for human-led development. This speaks to the broader concern: when AI is overused or misused, the opportunity to develop or recognize authentic human ability can quietly erode.

AI detection tools also introduce ethical challenges. A Stanford study found AI detectors misclassified 61.22% of essays by non-native English speakers as AI-generated, compared to near-perfect accuracy for native speakers. Imperfect tools can lead to false positives, reputational harm and misplaced scrutiny. I've observed varying patterns among non-native English-speaking students. Some gain confidence using AI tools; others become overly dependent, limiting the language growth that comes through natural trial and error. This tension reflects how academic and workplace pressures influence technology use. It is not a failure of the tools but a call for thoughtful, human-centered, practical frameworks.

Internal Biases, External Consequences

Beyond simplistic dualities of promise versus peril or creativity versus automation, the AI conversation encompasses layered emotional, ethical and systemic complexities.

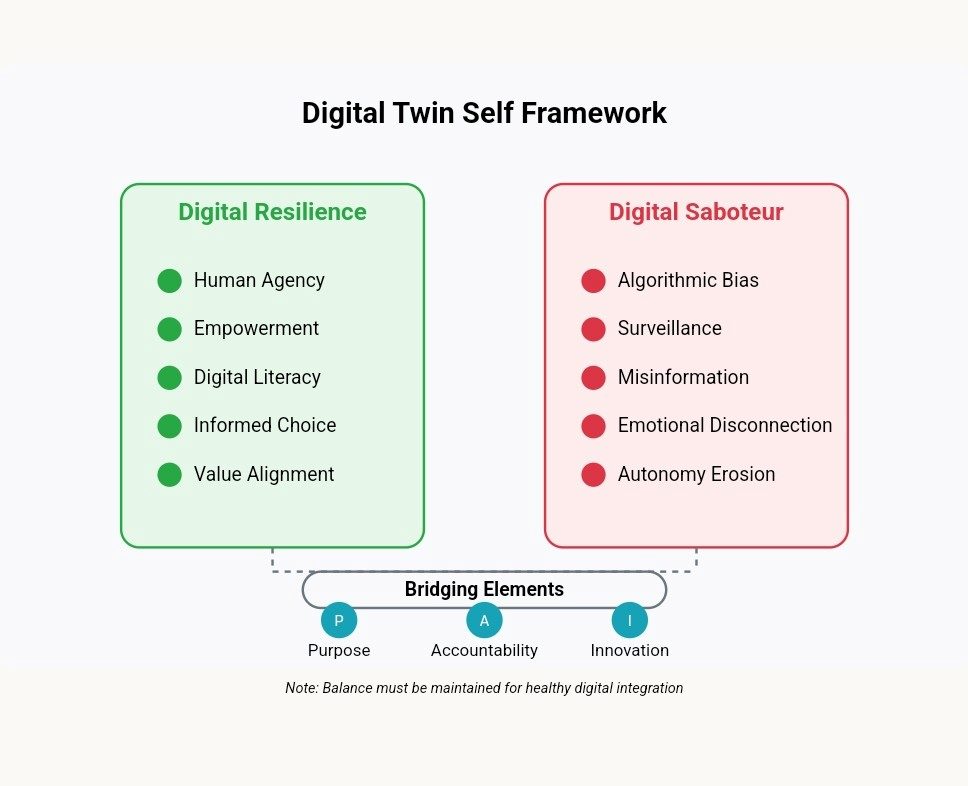

This complexity gives rise to what I call digital saboteurs: internal and systemic forces that unconsciously undermine confidence, creativity and clarity in our digital lives. These are not deliberately malicious entities but psychological and organizational patterns that emerge where innovation's promise collides with its reality. They thrive in ambiguity, feeding off fear, overconfidence and misalignment. Unless we directly acknowledge and address them, they silently shape how we adopt AI and make decisions using it.

Consider two common manifestations: the Skeptic doubts the fairness or transparency of AI systems, potentially dismissing AI-assisted work outright or overly scrutinizing peers using these tools, unintentionally reinforcing exclusion. Conversely, the Overcompensator overuses AI to mask insecurity, blurring the boundaries of authentic work and creating tension around what constitutes "original" contribution in educational or creative contexts.

These psychological patterns extend beyond personal experience to shape our collective understanding of fairness, validity and humanity itself. While legal frameworks evolve to define authorship and establish values-based boundaries, they must be informed by lived experience and the complex realities of a changing world. These moments reveal the intersection of legal, emotional and social systems that should inform, not compete with, one another.

Recognizing these psychological and systemic patterns isn’t enough. We also need to be equipped to respond to them. The emotional undercurrents, ethical ambiguities and power dynamics that shape our interactions with AI require more than individual awareness. They call for an intentional framework, one that helps individuals, teams and institutions manage these blurred lines with clarity, purpose and resilience.

The ethical integration of AI demands a collaborative approach. Individual efforts to cultivate digital resilience, complemented by systemic initiatives, create safe spaces for shared responsibility and collective action. This requires open dialogue among individuals, organizations, policymakers and technologists, and across disciplines, to establish ethical guidelines, promote transparency and ensure that AI systems are developed and deployed in a manner that aligns with human values and promotes the common good.

This visual contrasts digital saboteurs with digital resilience, emphasizing the psychological dynamics that influence human-AI interaction and the importance of self-awareness in ethical integration.

A Framework for Navigating Human-AI Complexity

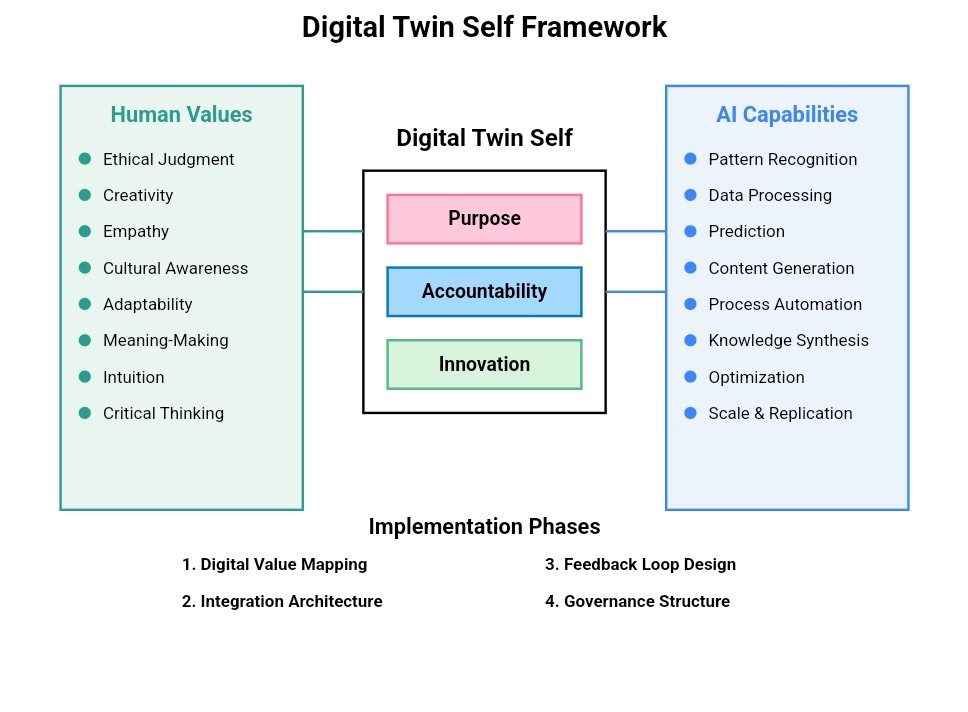

The Digital Twin Self framework, developed by CKC Cares, offers a practical approach to navigating the psychological dynamics that influence human-AI interaction, with self-awareness at the center of ethical integration. It invites reflection on our identity across digital and physical spaces, examining how our values, skills and purpose are shaped in environments touched by AI. Rather than emphasizing resistance, this structure promotes resilience through intentional alignment between human capabilities and intelligent systems.

While the model provides a powerful approach for individual ethical development, implementing it effectively and amplifying its impact requires collaboration across different levels. Organizations, policymakers and individuals should work together to establish shared ethical guidelines, develop supportive ecosystems and ensure that AI is used to amplify human ingenuity responsibly and equitably. Ideally, this collaborative approach would include open dialogue, diverse knowledge sharing and feedback loops that continuously refine and adapt the framework to evolving circumstances. The framework can then help inform the development of ethical AI policies, promote responsible organizational practices and foster a culture of transparency and accountability.

The framework introduces a flexible approach for ethical AI integration. Its core principles of purpose, accountability and innovation can be adapted across diverse sectors, from education and creative work to public policy and healthcare. Ethical agility is essential in our fast-evolving AI landscape.

Flexibility isn’t only strategic; it's intrinsically human-centered. The framework is intentionally designed for iterative use, allowing it to respond to shifting contexts, emerging technologies, and community needs.

This diagram visualizes how the Digital Twin Self Framework bridges purpose, accountability and innovation, offering a practical path to ethically integrate AI across sectors. This concept is introduced here as part of the author’s ethical AI and leadership research within the Digital Polycrisis framework.

Protecting the Human Element in Digital Transformation

The framework can be particularly useful where power imbalances limit choice. For workers whose interaction with AI systems is mandated rather than chosen, collective approaches provide essential protections. Industry-specific protocols and negotiated agreements can establish clear boundaries for AI management while preserving space for human judgment and skill development.

These approaches identify which decisions should remain human-centered and create guidelines for when algorithmic recommendations should be questioned or overridden. Without such concrete protections, frameworks emphasizing individual digital agency risk overlooking the reality of workplaces where technology decisions typically happen far from those most affected by them.
