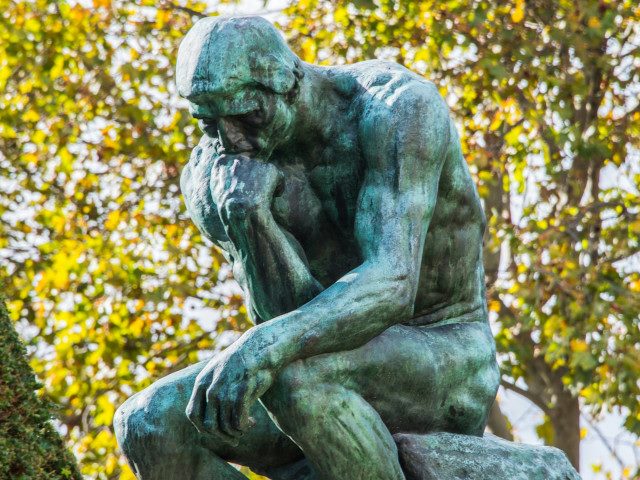

Henry David Thoreau once lamented that “men had become tools of their tools.” In his 19th-century context, the tools were farming machinery and architecture. Fast-forward to today, and similar questions about individual control are again at the forefront of philosophical discussions of artificial intelligence’s accelerating development and impact.

This article explores three distinct and timely areas of AI discussion within the broader philosophy landscape: potential AI utopian futures, cultural narratives and beliefs about AI, and how AI is affecting philosophy education in universities.

Nick Bostrom and AI Utopias

Nick Bostrom is a Swedish philosopher at the University of Oxford and a leading researcher on existential risk. He is well known for his perspectives on the dangers of AI and was the head of the Future of Humanity Institute until its recent closing. His academic research is foundational to the philosophy of future AI. Sam Altman, co-founder and CEO of OpenAI, stated that Bostrom’s book “Superintelligence” was foundational to the topic of AI alignment. More generally, Bostrom’s paperclip thought experiment is a seminal description of the dangers of superintelligent AI.

Bostrom recently described the latest advances in AI with an analogy:

“We are on a plane, and we realize that there is no pilot. We are going to try to land this, but we don’t have ground control giving us instructions. … We’ve got some fuel left to pull this off but are running out of time.”

These and many of Bostrom’s other ideas underpin the concerns of those who signed the “Pause AI” letter last year. Bostrom now identifies several key actions to mitigate AI risk:

- Regular affirmation by developers that AI is for the benefit of all humans and animals. The centralization of potentially superintelligent AI makes this imperative.

- Increased red-team testing before release. While a stated priority, safety testing still may not be well understood.

- Extended research on alignment that considers the moral status of digital minds. Although such minds remain hypothetical, the concept of alignment has gone from marginal to mainstream.

Bostrom’s perspective is timely, as the next version of OpenAI’s GPT model is expected to be released later this year.

New Research on Utopias

Bostrom recently wrote “Deep Utopia: Life and Meaning in a Solved World,” in which he explores potential AI utopias. His “utopic taxonomy,” which he discussed in a recent interview, lays out several levels of potential outcomes:

- Level 1: A government and cultural utopia. This is an overall better organization of existing society. Governments, organizations and communities are highly effective and we avoid the pitfalls of today.

- Level 2: A post-scarcity utopia. This is a more radical version of today, where humans have plenty of what they need materially. You want it? You get it.

- Level 3: A post-work utopia. The production of goods doesn’t require human labor. Robots and other digital entities do all the work. Elon Musk echoed this perspective.

- Level 4: A post-instrumental utopia. The minutiae of life can be automated. For example, instead of exercising one could “take a pill” that would provide the needed biological effects.

- Level 5: A plastic utopia. A more extensive post-instrumental utopia wherein humans can change themselves in any way using biotechnology, nanotechnology and other advanced augmentation.

Who knows which, if any, of these scenarios will come to pass? The outcome will likely be a mix of utopia and dystopia. Bostrom’s unique perspective often shifts the Overton window of acceptable government policies, so expect to see new arguments from AI boosters and detractors in the coming months.

New Research on Cultural AI Narratives

Americans have mixed views on AI. In a recent Pew Research study, 52% stated that they are more concerned than excited about AI developments. The potential dehumanization of processes and the negative impact on jobs top the list of concerns. While AI is often associated with takeovers by intelligent machines, such as “Skynet,” existential fears like those voiced by thinkers such as Bostrom are currently rare.

However, AI development, usage and narratives are not restricted to the U.S., despite Silicon Valley’s massive AI funding. Paris-based Mistral is approaching the quality of OpenAI’s GPT model, China has 40 different models in practice and more global investment is coming. AI model training is considered more art than science, suggesting that cultural contexts may shape future models even more.

Stephen Cave of Cambridge and Kanta Dihal of Imperial College London recently completed a five-year study to understand how other cultures have historically seen and conceptualized AI. Their findings are covered at length in their book “Imagining AI: How the World Sees Intelligent Machines,” of which chapters 16 and 19 are currently free. Dihal recently outlined some of the key takeaways:

Russia

Historically, Russia envisioned AI as an emotionless, omnipotent machine in the service of mankind, not unlike the “Terminator.” The Soviet Union and communist ideology were heavily influenced by the pre-AI philosophy of cybernetics, resulting in an enduring Russian appreciation of machine augmentation. Today, the emphasis is on friendly, soulful AI aligned with Russian culture.

China

Chinese culture has long held a tension between technological advances and philosophies, like Daoism, that push back against overly controlling action. As in the Soviet Union, however, the Chinese Communist Party’s doctrine has resulted in a more welcoming approach to AI. Today, given how resource-intensive model training is, some analysts see conflicts between the needs of AI and China’s existing investment policy.

Japan

Japan has a strong history of positive relationships with robots. Over the last few decades, a core part of the approach to building its many unique robots has been to place emotion at the center of each design. As a result, AI is currently seen positively and stories of so-called robot rebellion are rare.

South Korea

Google DeepMind’s AlphaGo shockingly defeated South Korean grandmaster Lee Sedol at the game of Go in 2016. The “AlphaGo shock” has led to a catch-up narrative within South Korea, especially given heavy AI investments by its neighbors. South Korea recently announced over $7 billion in funding for new AI chips.

Global Changes Are Continuing

OpenAI is reportedly raising up to $7 trillion, with the United Arab Emirates being a potential key investor. Cave and Dihal’s study didn’t find a consistently clear cultural narrative regarding AI in the Middle East, but future investment, usage and culturally tuned models will increasingly change the narrative landscape.

AI Philosophy Is Booming at Universities

University philosophy professors and students have been engaging with AI in a variety of theoretical, educational and practical ways. Examples include:

- Many philosophy courses are becoming more cross-disciplinary, and students are delving into whether creativity and curiosity are uniquely human

- Professors like Michael Rota have developed their own AI tools to help with philosophical instruction

- Lawrence Shapiro worries less about plagiarism and more about students’ critical writing and reading skills, while Antony Aumann describes the importance of chatbots as a dialectical and thought-analysis companion to course instruction

- The University of Oregon held a roundtable to discuss, among various ideas, the history of the word “robot,” a comparison of AI and human writing, and how image generator descriptors like “smile” are rarely culturally consistent

- The definition of “moral agency” and its ramifications for humans are rapidly changing, per Randall Hart

Even more changes will likely take place in the upcoming school year.

Philosophy Shapes Tools. Tools Shape Philosophy

Our tools have always shaped our schools of philosophical thought, and vice versa. Modernism arose with the Enlightenment and industrialization, postmodernism coincided with early computerization and metamodernism emerged with the internet. Unfamiliar perspectives can quickly become mainstream depending on cultural conditions. We should expect our collective philosophy and our corresponding view of AI to undergo a similar transformation over the next few years as our intelligent tools continue to advance.
