There is one thing that is certain about AI: It is a hungry beast. It takes power to build and train large language models (LLMs). It takes processing speed to deliver generative AI that can answer complex questions, do in-depth research and — even — hallucinate wild answers to persistent questions at the speed of thought.

All types of AI eat massive amounts of high-speed computing power for lunch — and for breakfast and dinner. That means that at the core of everything, AI is a powerful processor, a chip created from silicon and innovation. The companies that build the fastest and smallest chips, perhaps those that can deliver high-power computing without drinking the planet’s resources, will win the heart — and investment dollars — of this industry. But it takes time and a huge investment to build this technology. And the race is already hot.

AI’s ravenous appetite for compute power is pushing the edge of Moore’s Law and forcing chip designers and chipmakers to innovate in ways they haven’t had to in years. Silicon has been creating wealth for decades. But this is a new gold rush.

Here are some of the top chip companies competing in the AI market.

Table of Contents

- 1. NVIDIA

- 2. AMD

- 3. Google

- 4. Amazon

- 5. Microsoft

- 6. Intel

- 7. Meta

- 8. Qualcomm

- 9. Groq

- 10. Cerebras

- 11. Apple

- 12. OpenAI

- 13. Extropic

1. NVIDIA

NVIDIA is a dominant force when it comes to AI chips. This dominance has shot the company to the top of the stock market and made it one of the world’s most valuable companies (with a valuation that hit $4 trillion in July 2025).

The company’s AI chips dominate the market and are used by everyone from car makers to tech and AI companies. Plus, the pipeline of "AI factory" builds continues to thicken.

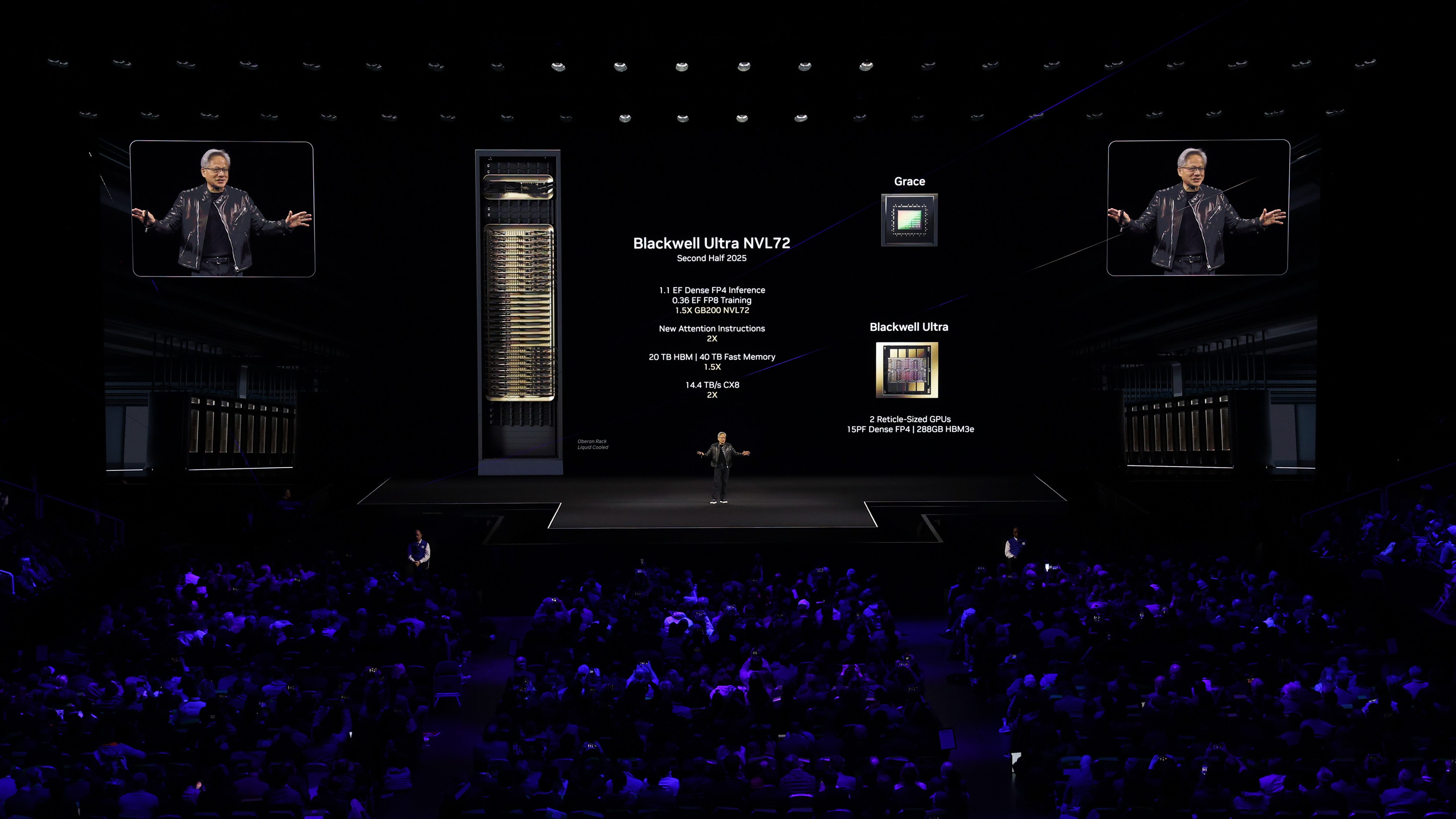

In 2025, NVIDIA announced the NVIDIA GB300 NVL72 rack-scale system, which is powered by the NVIDIA Blackwell Ultra architecture. GB300 systems posted record results in MLPerf Inference v5.1’s new reasoning benchmark, with the GB300 NVL72 showing about a 45% throughput gain over comparable GB200 racks on DeepSeek-R1.

Shipments are beginning through major OEMs, and Equinix is standing up instant capacity for GB300 and B300 DGX configurations, which is a tidy way of saying the ecosystem is aligning around real deployments.

If Blackwell promised a 25x reduction in inference cost and energy, Blackwell Ultra reads like the second act: 72 GPUs and 36 Grace CPUs in a fully liquid-cooled NVL72 rack, tuned for gigantic reasoning models and wired with Quantum-X800 or Spectrum-X fabrics to push output to industrial levels. The shape of the product makes the strategy obvious. NVIDIA is selling scaled systems for scaled models, and the market is responding in kind.

2. AMD

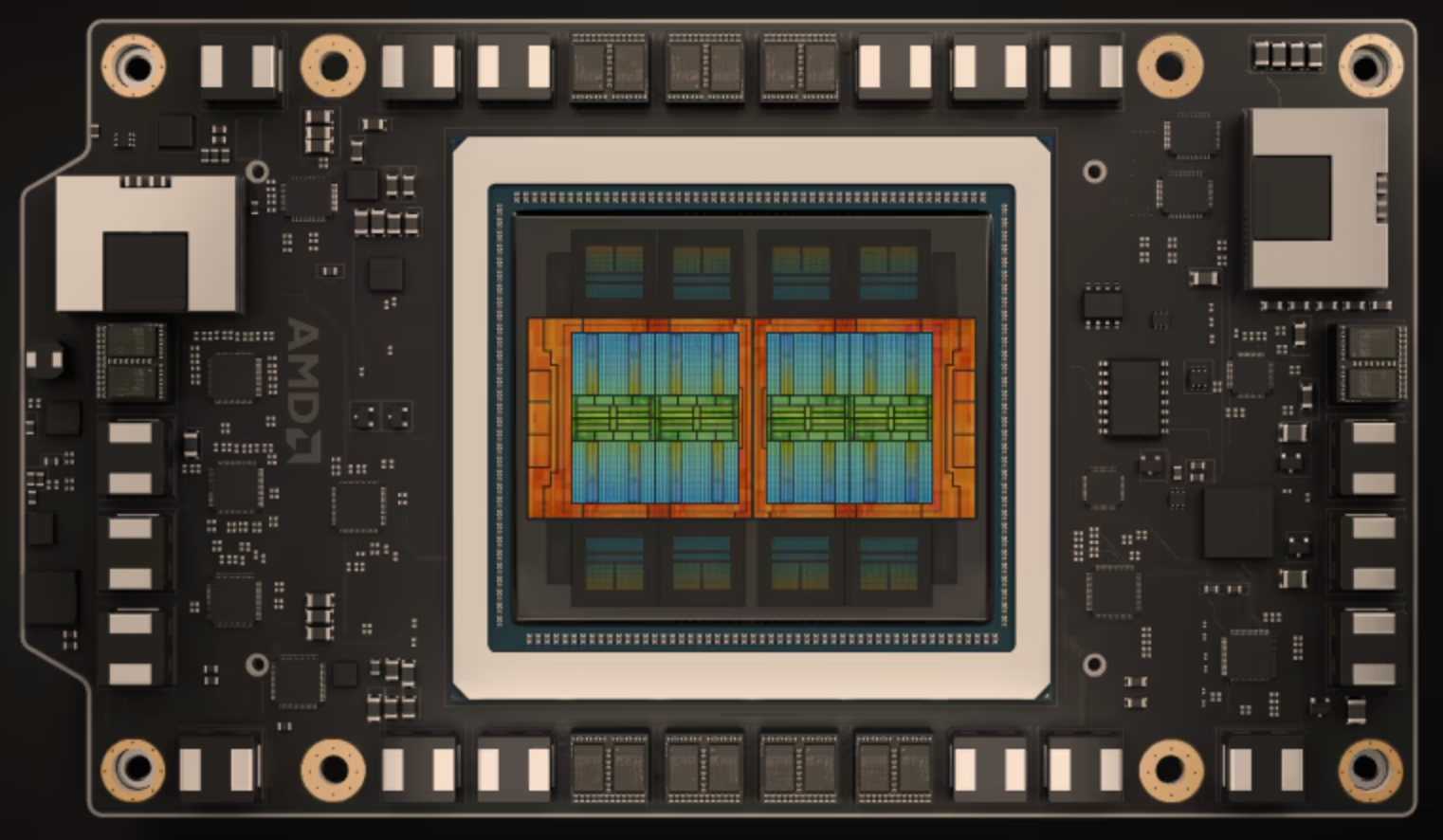

NVIDIA is an AI-chip powerhouse, but long-time chip maker AMD is throwing some serious compute power into the game. Lisa Su, CEO of AMD, calls this a “ten-year AI cycle”, and the company is playing it long.

This year, AMD announced the Instinct MI350 series, built on TSMC’s 3 nm process, with new low-precision formats like FP4 and FP6, plus a roadmap toward the 2026 MI400 “Helios” racks that aim to pull AMD further into hyperscale. Behind the hardware, ROCm 6 is tightening into a credible alternative stack, a software layer that makes training and inference less dependent on CUDA’s gravity.

If you scan the world's fastest supercomputers, AMD silicon is everywhere. Frontier and El Capitan hold the #1 and #2 slots, proof of its ability to scale compute at absurd levels. Marketwise, AMD is still framed as number two, a role that carries pressure and freedom. Each new MI part plays as an invitation for hyperscalers who want a choice, and in that sense the finish line is beside the point. The company is building the stamina to keep running even as the course bends into unknown terrain.

Related Article: Meet the Startups Taking on Big Tech With Smarter AI Chips

3. Google

By the end of 2024, Google’s sixth-generation TPU, Trillium, slipped into general availability like an actor finally stepping out from backstage. The chip was pitched as the workhorse behind Gemini training and then quickly absorbed into the mythology of Google Cloud’s “AI Hypercomputer.”

Trillium raised throughput by a factor of four compared with its predecessor, pulled efficiency up by two-thirds, and pushed memory to 32 GB per chip. The design can scale into pods of 256 units, a machine room’s worth of silicon aimed directly at training models like Gemini 2.0.

Co-designing Gemini 2.5 Flash-Lite with the Trillium TPU was the key to unlocking the lightning speeds of 2.5 Flash-Lite. This Infinite Wiki by @dev_valladares is an incredible demonstration of what is possible with this model. We're so excited to see what you continue to build! pic.twitter.com/xIGVQj8f9K

— Google AI (@GoogleAI) July 24, 2025

The combination of bigger pipes, denser memory and Google’s own AI stack is the reason TPU climbed from a side note to a podium finish in the chip race.

4. Amazon

December of 2024 was Amazon’s turn at the podium. The company unveiled Trainium2 inside Trn2 instances and racks of UltraServers, chips pitched as twice the punch of the original Trainium with a cleaner energy profile. Then, in April of 2025, Anthropic made the announcement feel real: hundreds of thousands of Trainium2 chips would drive Project Rainier, a mega-cluster built to train Claude at a scale that sounds suspiciously like science fiction.

AWS kept layering on details through 2025. A Trainium server carries 16 chips, and four of those combine into an UltraServer that can reach roughly 83 petaflops. Trn2 instances are rentable in EC2, and Claude 3.5 Haiku already runs on the hardware.

Trainium3 is in development, expected to double performance again while cutting energy demand nearly in half. In parallel, AWS also announced Blackwell-based UltraServers, a hedge that keeps NVIDIA in the room even as Amazon builds its own chips.

5. Microsoft

We all think of Microsoft as a software company, but it also delivers some major hardware. The Cobalt 100 VMs moved from announcement to reality in late 2024, and by September 2025, they were running across 29 Azure regions, powering workloads from Teams to Microsoft Defender. Microsoft published results showing efficiency gains, the sort of numbers that quietly shift data center economics at scale.

How Azure Cobalt 100 VMs are powering real-world solutions, delivering performance and efficiency results https://t.co/P32eWB3Uqe pic.twitter.com/tBIlmoR2tO

— Eric Berg - MVP (@ericberg_de) September 24, 2025

The other half of the story is Maia. The original Maia 100 announcement spelled out the vision: a chip designed for Azure itself, feeding directly into OpenAI models and Microsoft’s own AI services. Reports continue to circulate about a Maia 200 in testing, yet the concrete 2025 proof point is the expansion of Cobalt. Together, Maia and Cobalt form a systems approach that lets Microsoft say it owns the stack from silicon through cloud.

Microsoft has reshaped the narrative from “where do we buy chips” to “how do we own the full stack.” Maia is not sold to outsiders, but it drives some of the most watched AI systems on earth, including OpenAI’s frontier models.

6. Intel

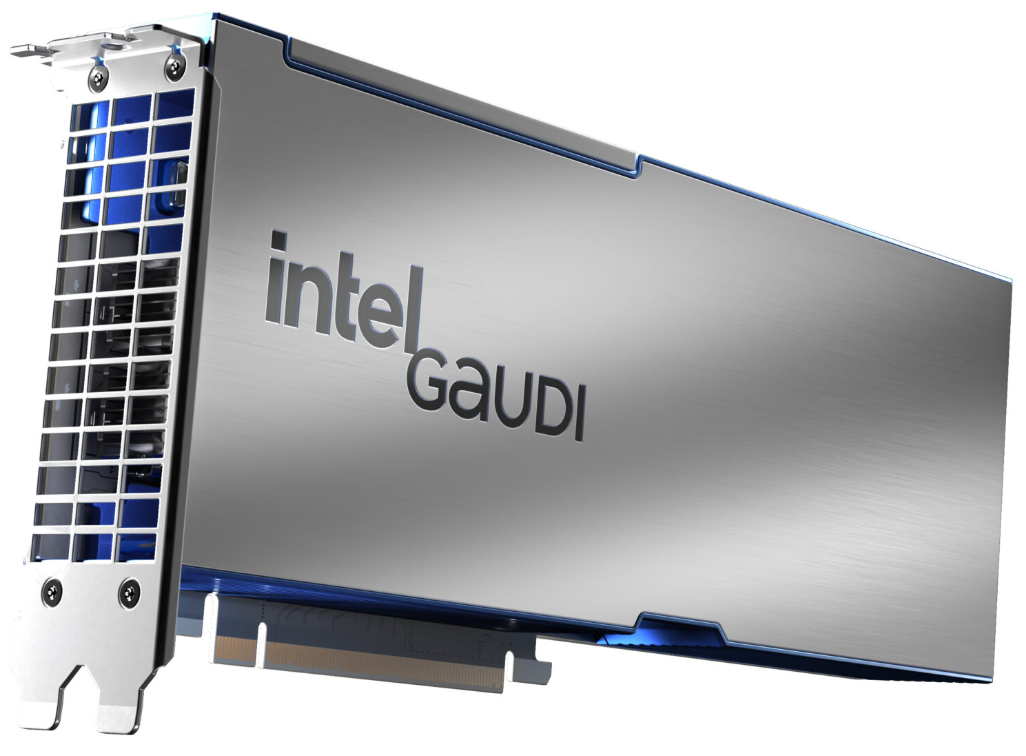

Intel’s centerpiece is Gaudi 3, which is now shipping inside racks from Dell, HPE and Supermicro. The chip delivers training throughput that doubles what Gaudi 2 could manage and brings efficiency numbers meant to entice enterprises that find NVIDIA both scarce and expensive.

Gaudi 3 appeared in full-blown AI Factory systems, the kind of branded infrastructure bundles that look less like servers and more like industrial appliances. Intel presents it as open by design, running PyTorch, TensorFlow and Hugging Face stacks without proprietary gravity. The strategy flows from a simple proposition. Build hardware strong enough to train and infer at scale, keep pricing competitive and rely on OEMs to deliver it into the hands of enterprises that want an alternative to CUDA’s orbit.

There is no pretending Intel holds the cultural cachet of NVIDIA or the velocity of AMD, yet the company’s manufacturing muscle and distribution channels keep Gaudi 3 relevant.

7. Meta

Meta has always lived or died by the feed, and in 2025 that feed runs on homegrown silicon. The company’s second-generation Meta Training and Inference Accelerator (MTIA v2i) showed up at ISCA 2025 in the form of an academic paper, a rare peek into how Facebook, Instagram and WhatsApp keep recommendation models humming at planetary scale.

The chip is tuned for inference efficiency, with a 3.5x performance boost over the first generation, which makes sense given the billions of small decisions Meta’s platforms serve each second. MTIA v2i improves throughput and trims power draw, cutting costs for the endless act of ranking posts, reels and ads.

This new MTIA chip can deliver 3.5x the dense compute performance & 7x the sparse compute performance of MTIA v1.

— AI at Meta (@AIatMeta) April 10, 2024

Its architecture is fundamentally focused on providing the right balance of compute, memory bandwidth & memory capacity for serving ranking & recommendation models. pic.twitter.com/8THnmi3JFD

Meta never built MTIA to sell outside its walls. Its purpose is singular: to make sure the social machinery never slows, even as the models behind it grow more ambitious.

8. Qualcomm

Qualcomm has built an empire on the idea that intelligence belongs in your pocket and in your hands just as much as in the cloud. In 2025 the company extended that logic into the laptop bag. The Snapdragon X Elite and X Plus began shipping inside Microsoft’s Copilot+ PCs, pulling Dell, Lenovo and Samsung along for the ride. What used to be a processor spec now feels like a ticket into a new category of computers, machines that wake up already speaking the language of multimodal AI.

Phones carried the same momentum. In September, Qualcomm announced the Snapdragon 8 Elite Gen 5, the chip that will sit beneath the glass of Android flagships for the next cycle. Its NPU is engineered to juggle chat assistants, image generation, translation and whatever other party tricks developers decide to cram into a handset. The company’s AI Engine SDK serves as the quiet interpreter in the background, coaxing large models to run inside the cramped thermal envelope of a phone.

Related Article: Supercomputing for AI: A Look at Today’s Most Powerful Systems

9. Groq

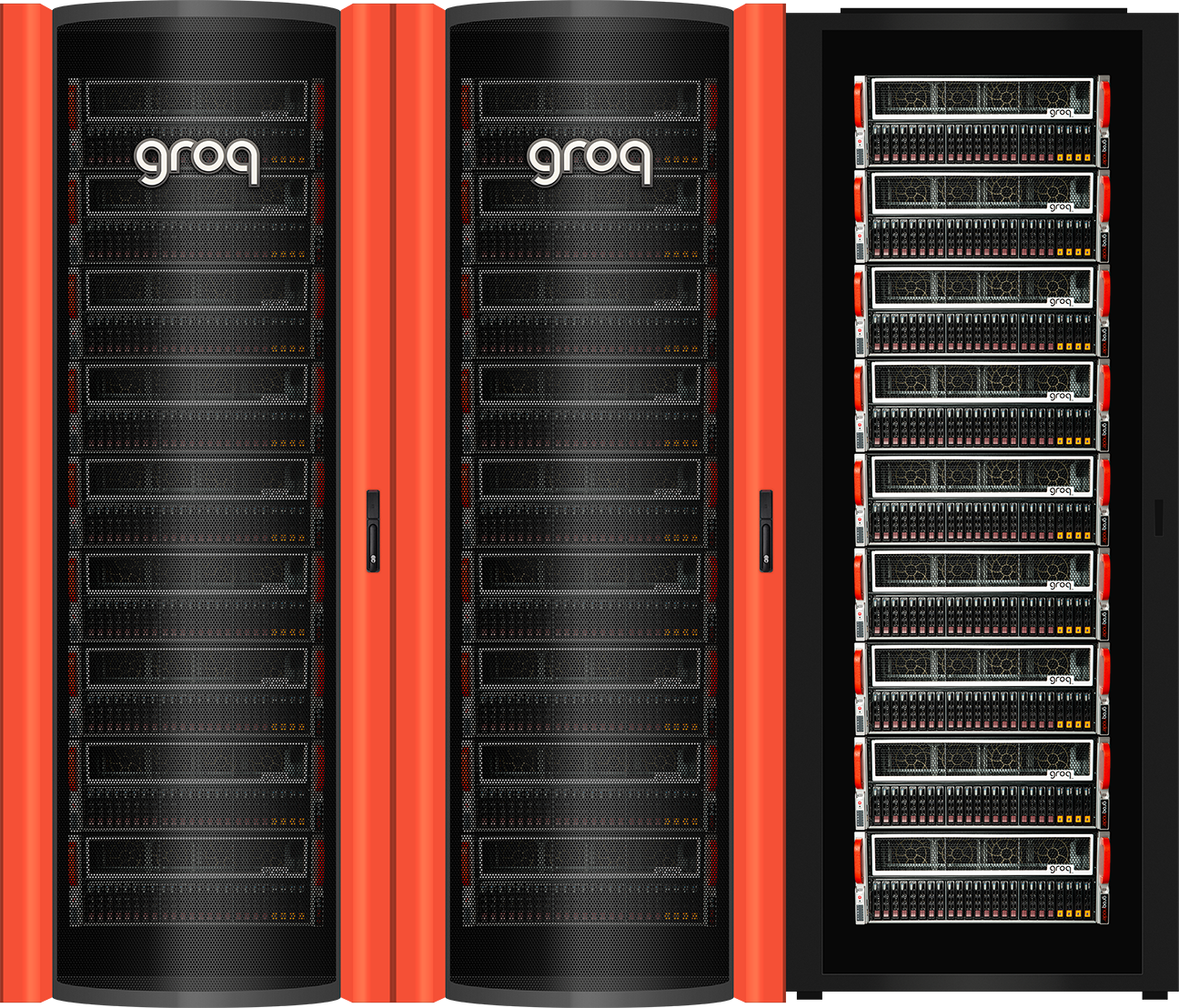

Groq, inc. — not to be confused with Grok the chatbot — is an AI company founded by a group of former Google engineers.

The company’s Language Processing Unit, or LPU, is designed to run large models in real time with deterministic performance that avoids the jitter common in GPU inference. In September 2025, Groq raised $750 million to expand its footprint, a signal that investors believe inference can be its own empire.

The hardware shows up as the GroqRack Compute Cluster, a cabinet-sized appliance that plugs into a data center and immediately starts serving tokens at breakneck speed.

Developers can also tap the company’s GroqCloud API, which turns the LPU into something that feels more like infrastructure-as-a-service than a chip sale. Groq does not pretend to be NVIDIA or AMD. Its pitch is simpler: if you want latency measured in microseconds rather than milliseconds, we have the machine for you.

10. Cerebras

Cerebras has always chased size as a virtue. It revealed the Wafer-Scale Engine 3, the largest chip ever produced, carrying four trillion transistors across 900,000 cores. The design choice is extreme but deliberate. Instead of chopping a wafer into hundreds of dies, Cerebras keeps it whole, creating a slab of silicon that can hold entire models without splitting them across chips.

That approach now powers Condor Galaxy, a distributed AI supercomputer built with G42. The third system, CG-3, pushed the network to 16 exaFLOPs of capacity.

Where NVIDIA scales through clusters of GPUs, Cerebras bets on monolithic silicon and specialized supercomputers. It is a narrower bet in market reach, but one that delivers extraordinary throughput for organizations willing to run their workloads on a machine that feels closer to a single engine than a sprawling cluster.

11. Apple

Apple might be late to the game compared to other chip companies here. But lagging behind everyone else has never stopped Apple from innovating new technologies — or markets.

In October, 2025, Apple officially announced its new silicon, the Apple M5. Built on a 3-nm process, it boasts a significant jump in AI performance, up to around 4x peak GPU compute for AI tasks compared to M4. The M5 features a 10-core GPU — with Neural Accelerators embedded in each core — and enhanced unified memory bandwidth (around 153 GB/s) to better handle on-device AI workloads.

The M5 chip is already being used in new devices, such as the 14-inch MacBook Pro, the updated iPad Pro and the upgraded Vision Pro headset. According to Apple, this chip marks the “next big leap in AI for the Mac.”

Beyond devices, Apple is also building for on-device AI and cloud/edge. The tech company is reportedly developing specialized chips for servers and smart glasses (in addition to Macs) to support its Apple Intelligence platform. The company also indicated it is exploring generative AI / chip-design automation, using AI to help design the silicon itself.

12. OpenAI

The maker of ChatGPT doesn't have anything on the market yet, but it's currently working on developing its own AI chips and systems in partnership with Broadcom. These chips are not for commercial sale — they will be used within OpenAI's own operations to improve efficiency and cut down on the compute demands of its AI models.

"By building our own chip, we can embed what we’ve learned from creating frontier models and products directly into the hardware, unlocking new levels of capability and intelligence," said OpenAI's co-founder and President, Greg Brockman.

The racks, scaled with Ethernet and other connectivity solutions from Broadcom, will meet surging global demand for AI, according to company officials.

OpenAI's first custom chips are slated to be deployed in late 2026.

13. Extropic

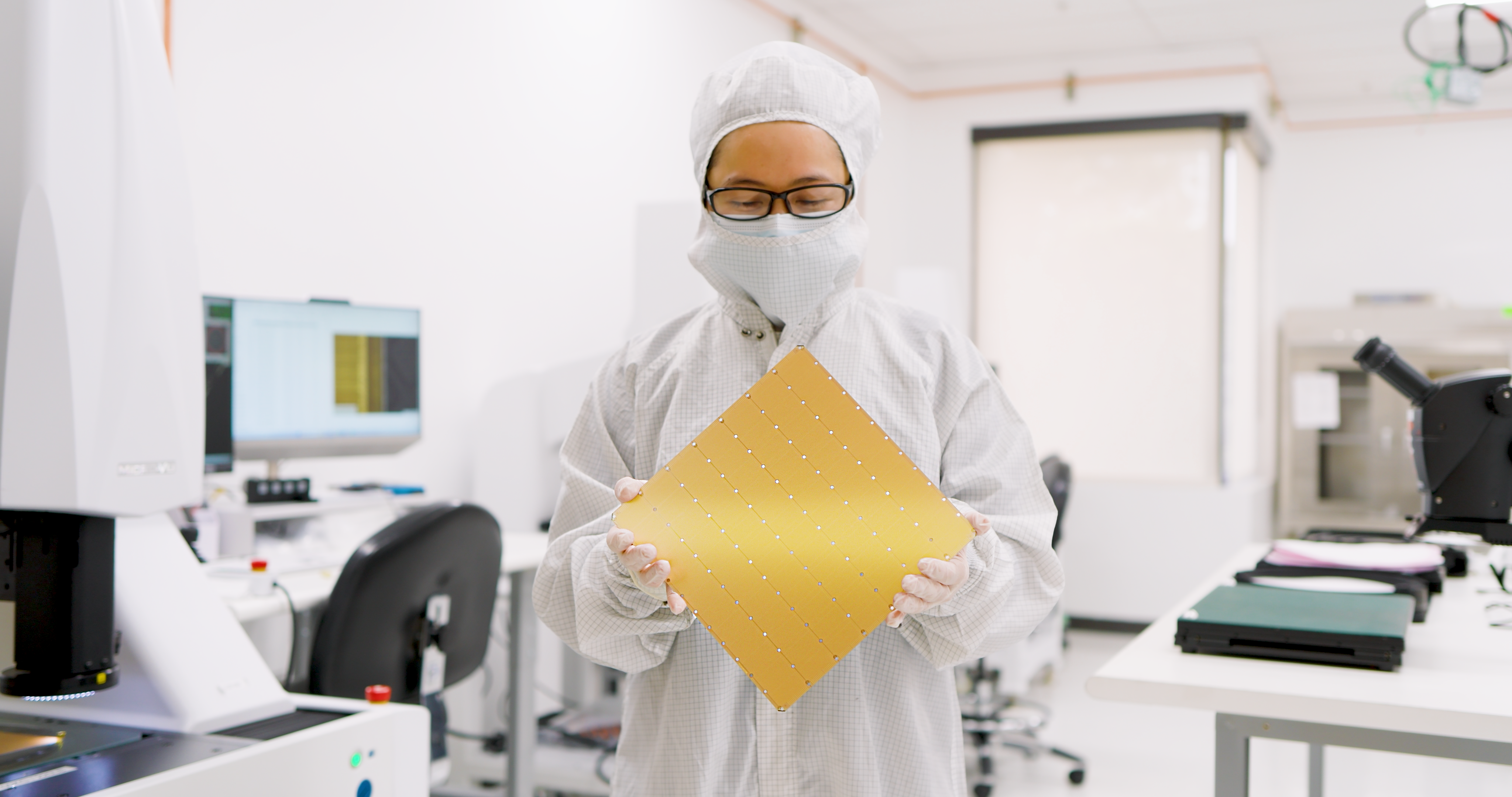

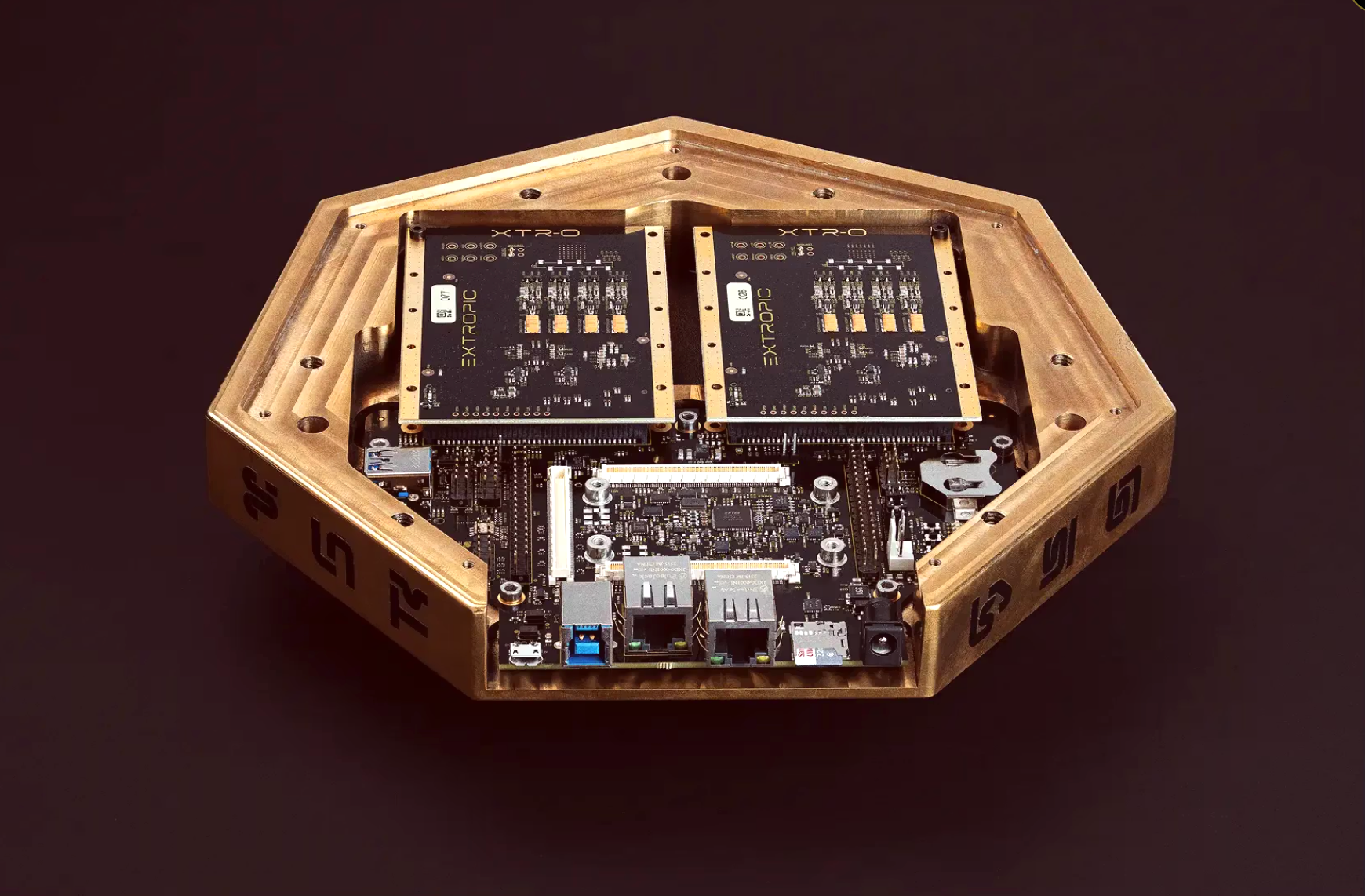

Extropic is taking a much different approach to AI hardware with what it calls the world’s first scalable probabilistic computer, a new class of AI chip that generates probability samples directly, instead of performing energy-intensive matrix math on GPUs.

Its chip, known as the Thermodynamic Sampling Unit (TSU), is built from arrays of transistor-based probabilistic bits, or pbits, which fluctuate between 1 and 0 and can be tuned to represent probability. By combining massive numbers of pbits, TSUs can sample from complex probability distributions with far less energy than digital processors. The goal, according to Extropic, is overcome the top barrier to major AI adoption: not enough electricity.

Extropic also introduced the Denoising Thermodynamic Model (DTM), a generative AI algorithm developed specifically for TSUs. According to the startup, simulations show that running DTMs on TSUs could be up to 10,000× more energy-efficient than current algorithms running on GPUs.

Extropic has produced a hardware proof of technology and a development platform called XTR-0. It has been beta tested by early partners, but is not yet a commercial-scale system.