Meta has announced its latest family of open-weight language models: Llama 4 Behemoth, Maverick and Scout. Each is designed to push the boundaries of what openly available AI systems can achieve across different scales and use cases.

How do these models stack up against competing offerings from OpenAI, Google, Anthropic and others? And will their performance live up to the hype amid scrutiny over benchmark transparency?

Let's dive into what Meta's release strategy reveals about the future of open foundation models and the industries they aim to serve.

A Closer Look at the Llama 4 Lineup

Meta’s latest lineup of large language models (LLMs), referred to as the Llama 4 family, builds on earlier iterations with improvements in performance, scale and deployment flexibility. The series includes three primary variants — Llama 4 Behemoth, Llama 4 Maverick and Llama 4 Scout — each aimed at different enterprise and research needs.

- Llama 4 Behemoth is the flagship model. It has the largest parameter count and is designed for tasks requiring heavy reasoning, extended context handling and agentic workflows.

- Llama 4 Maverick is presented as a balanced option, targeting environments where efficiency and fast response times are critical without sacrificing too much performance.

- Llama 4 Scout is the smallest model, optimized for edge deployments, lightweight applications and scenarios where low latency is a priority.

Meta has said that Behemoth, which was still in training when the family was announced, has 288 billion active parameters (the subset used for each token in its mixture-of-experts design) and nearly two trillion parameters in total, far beyond the 405-billion-parameter ceiling of the Llama 3.1 generation. Architectural refinements reportedly include updated attention mechanisms, longer context windows and finer-grained token control, although many technical details remain limited. Llama 4 models are offered under an open-weight license that allows researchers and developers to download and deploy them under certain restrictions; most notably, companies with more than 700 million monthly active users must request a separate license from Meta, a clause that effectively limits large-scale commercial competitors.

Early impressions emphasize just how large the Llama 4 models are compared with earlier open-weight LLMs. Independent experts are already excited about Behemoth's sheer size and Scout's unprecedented context length.

Ilia Badeev, head of data science at Trevolution Group, told VKTR that what sets Behemoth apart is its parameter count. “The name of the model does justice to it — it is a massive behemoth in terms of parameters. Scout’s context window is great for large prompts. Let’s say you want to summarize a book — it’s perfect for that.”

Badeev pointed out that Llama 4 Behemoth’s roughly two trillion parameters and Llama 4 Scout’s 10-million-token context window represent major advances. While Behemoth is ideal for deep, complex tasks such as scientific research, Scout's ability to handle entire books within a single prompt makes it highly suitable for summarization and other large-context applications.
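Whether a long context window really removes the need for other techniques depends first on whether the material fits. The sketch below is a minimal illustration under stated assumptions: it estimates tokens with a rough four-characters-per-token heuristic (real counts depend on the model's tokenizer), passes the whole document when it fits a token budget, and otherwise falls back to a crude retrieval-augmented generation (RAG) step that ranks fixed-size chunks by keyword overlap. All helper names are hypothetical, and a real RAG pipeline would use a proper tokenizer and embedding-based search.

```python
# Sketch: "put the whole document in the prompt" vs. a retrieval fallback.
# Assumptions (hypothetical, not a Meta API):
#   - ~4 characters per token, a rough heuristic for English text
#   - keyword overlap stands in for embedding-based retrieval

CHARS_PER_TOKEN = 4  # rough heuristic; use the model's tokenizer in practice


def estimate_tokens(text: str) -> int:
    """Crude token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def chunk(text: str, chunk_chars: int = 2000) -> list[str]:
    """Split text into fixed-size character chunks."""
    return [text[i:i + chunk_chars] for i in range(0, len(text), chunk_chars)]


def retrieve(question: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return the k chunks sharing the most lowercase words with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(chunks,
                    key=lambda c: len(q_words & set(c.lower().split())),
                    reverse=True)
    return ranked[:k]


def build_context(question: str, document: str, context_budget: int) -> str:
    """Use the whole document if it fits the token budget, else retrieve chunks."""
    if estimate_tokens(document) <= context_budget:
        return document  # long-context path: no retrieval step needed
    return "\n\n".join(retrieve(question, chunk(document)))  # RAG fallback
```

With a budget in the millions of tokens, the first branch covers even book-length inputs. Retrieval can still pay off when a corpus outgrows any window, or when per-request cost and latency matter, since every prompt token has to be processed.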


Llama 3 vs Llama 4: What's Changed?

Llama 4 delivers major enhancements over Llama 3 across core areas of performance, governance and usability.

| Category | Llama 3 | Llama 4 |
|---|---|---|
| Reasoning Ability | Strong but inconsistent on multi-step tasks | Improved multi-step reasoning and long-context performance |
| Efficiency | Higher compute demands relative to performance | Better inference speed and resource optimization |
| Multilingual Support | Limited non-English fluency | Expanded multilingual datasets and regional context understanding |
| Instruction Tuning | Good, but struggled with complex or nuanced prompts | Sharper direction following and tone/style sensitivity |
| Safety and Alignment | Basic alignment; some hallucination risk | Enhanced safety techniques and hallucination reduction |

Meta's own early results point to substantial gains in reasoning accuracy, response consistency and multi-language capabilities compared with previous versions. Reactions from parts of the open-source community have been positive, with some praising Llama 4’s blend of accessibility and high-end performance. However, questions around transparency and benchmarking standards have also emerged, reflecting the broader tension between open-weight availability and evaluation rigor.

Where Llama 4 Outperforms Its Predecessor

Early assessments suggest that Llama 4 offers notable improvements over the Llama 3 generation, particularly in model performance and usability.

Stronger Reasoning

Meta expanded the training scale, reportedly incorporating larger and more diverse datasets, longer context windows and additional reinforcement learning phases. These changes appear to support stronger reasoning capabilities, with Llama 4 models handling multi-step problems and nuanced instructions more reliably in initial testing compared to earlier releases.

Architecturally, Llama 4 continues to use a Transformer backbone — the same foundational design found in models like GPT and Claude. However, early technical reviews suggest that Meta has refined how the model pays attention to information over long conversations. These improvements aim to help Llama 4 remember and reason through longer prompts or dialogues more effectively, a key requirement for agent-based workflows and complex multi-step tasks.
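Meta built Llama 4 as a mixture-of-experts (MoE) model, which changes what "model size" means: a router sends each token to only a few expert sub-networks, so the parameters doing work for any one token (the "active" count) are a fraction of the total. The toy sketch below illustrates generic top-k routing with scalar "experts"; the number of experts, the value of k and the scoring rule are illustrative assumptions and do not reproduce Llama 4's actual router.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, router_weights: list[float], experts, k: int = 2):
    """Toy top-k mixture-of-experts layer for one scalar "token".

    router_weights: one hypothetical scalar scoring weight per expert.
    experts: callables; only the k highest-scoring ones are evaluated.
    Returns (output, indices of the experts that actually ran).
    """
    if not 1 <= k <= len(experts):
        raise ValueError("k must be between 1 and the number of experts")
    # The router scores every expert (cheap)...
    scores = [w * x for w in router_weights]
    # ...but only the top-k experts are selected to run (sparse activation).
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights are renormalized over the selected experts only.
    gates = softmax([scores[i] for i in top])
    out = sum(g * experts[i](x) for g, i in zip(gates, top))
    return out, top


if __name__ == "__main__":
    # Four toy "experts", each of which just scales its input.
    experts = [lambda v, s=s: s * v for s in (1.0, 2.0, 3.0, 4.0)]
    out, used = moe_forward(1.0, [0.1, 0.5, -0.2, 0.9], experts, k=2)
    print(f"experts run: {used} of {len(experts)}, output: {out:.3f}")
```

Every expert's weights still have to sit in memory, which is why total parameter count drives hardware requirements while the active count drives per-token compute.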

Greater Efficiency

From a technical perspective, the most meaningful improvement is the boost in efficiency, claimed Gabriel Muñoz, AI solutions architect at VASS Intelygenz.

"The new Llama 4 release strategy reflects a broader industry trend toward offering a series of models, giving users critical adaptability depending on specific use case needs and constraints," said Muñoz, adding that while multimodal capabilities are increasingly common across the industry, offering adaptable model options based on user needs is becoming a critical differentiator in practical deployments.

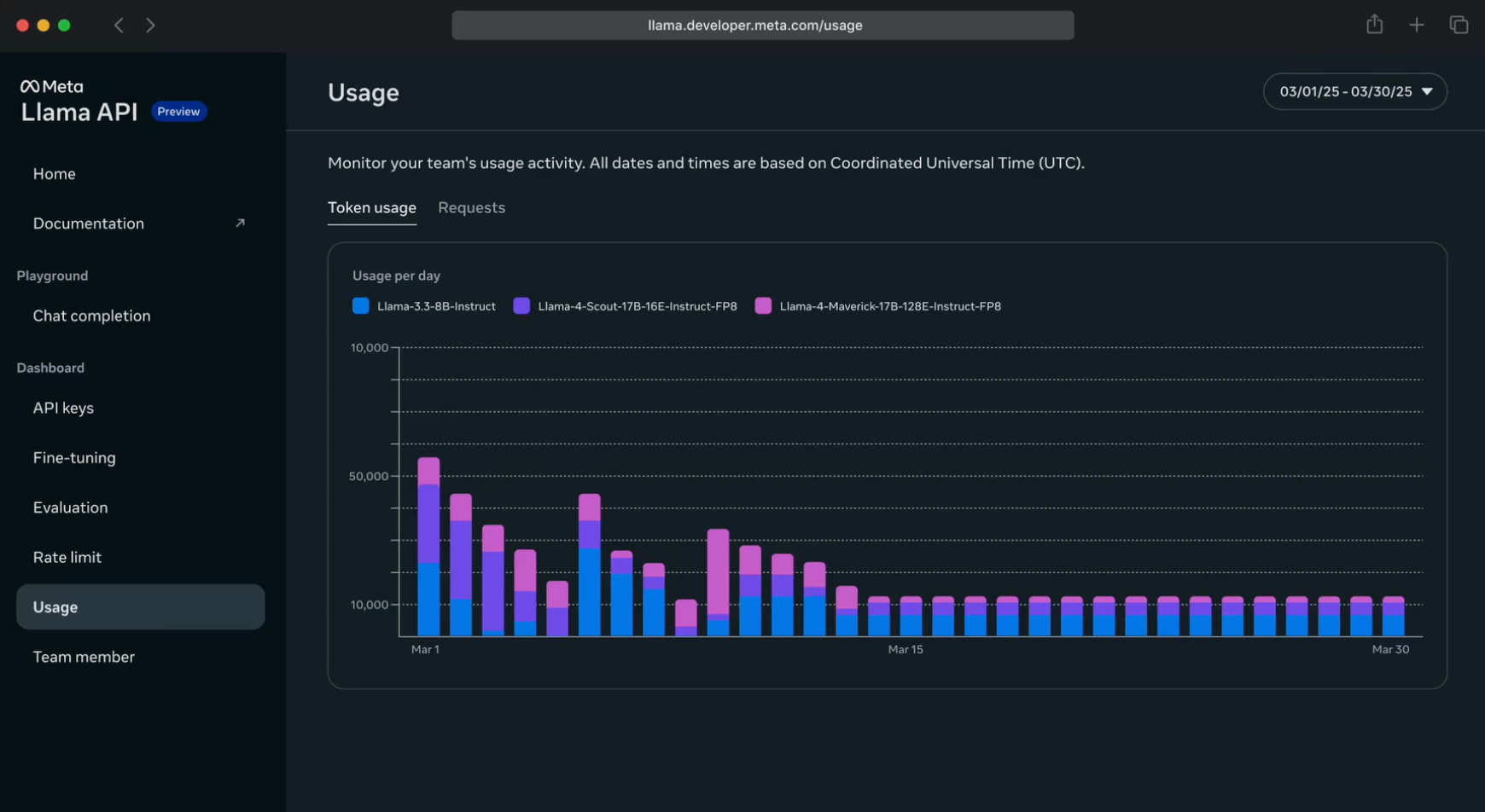

At its LlamaCon 2025 event, Meta previewed the Llama API, positioning it as the easiest way to build with Llama models.

According to Meta VP Manohar Paluri, users retain “full agency” over their models, whether hosted on Meta's infrastructure or ported elsewhere. The company also noted that Llama models have now surpassed 1 billion downloads and are being used in real-world applications across industries, from support call analysis at AT&T to mission planning by astronauts.

Improved Multilingual Abilities

In terms of language flexibility, Meta has expanded multilingual training, exposing Llama 4 to broader linguistic datasets and fine-tuning performance across dozens of languages. This has resulted in more fluent non-English generation and improved understanding of regional contexts — addressing a known weakness in earlier models.

Upgraded Instruction Tuning

Instruction tuning (i.e., teaching AI models to better understand and follow user instructions) also saw improvements. Meta incorporated more human preference data and adversarial prompts into Llama 4's training cycles, sharpening the model’s ability to follow complex directions while remaining aware of tone, style and task boundaries.

Expanded Safety Measures

Safety measures were also enhanced, with new alignment techniques aimed at reducing harmful outputs and limiting hallucinations, particularly in critical use cases such as healthcare or legal advice generation.

Llama 4 vs GPT-4, Claude and Gemini

Llama 4’s release reflects Meta’s efforts to close the performance gap with top proprietary models in the generative AI market, particularly in areas like long-context reasoning and multilingual capabilities. While OpenAI’s GPT-4, Anthropic’s Claude, Google’s Gemini and emerging models like DeepSeek remain dominant forces, early evaluations suggest that Llama 4 offers competitive — and in some areas, surprisingly strong — performance across a range of benchmarks.

In head-to-head comparisons, GPT-4 still leads slightly in advanced reasoning, code generation and fine-grained knowledge recall. However, Llama 4 Behemoth has shown notable gains in multi-turn dialogue, summarization accuracy and handling of long-context tasks, closing much of the quality gap observed with previous Llama generations. Benchmarks for code generation suggest that while Llama 4 may trail GPT-4 Turbo in precision, it performs competitively with Gemini 1.5 and often outpaces earlier Claude models, especially in structured programming tasks.

Multilingual natural language processing (NLP) has been a standout area for Llama 4. Thanks to its expanded multilingual training datasets, Llama 4 reportedly outperforms many proprietary models on language tasks beyond English, making it an attractive choice for global enterprises and multilingual research initiatives.

Early technical evaluations suggest that Llama 4 outperforms Google's Gemini in summarization and document understanding tasks, particularly for long documents where context retention and logical flow are critical. However, GPT-4 and Claude Opus still hold slight advantages in complex technical summarization and edge-case reasoning.

Market perception of Meta’s progress has been largely positive. Llama 4 is viewed as a credible open-weight alternative for businesses and researchers who want access to cutting-edge capabilities without the closed licensing restrictions of OpenAI's or Google's ecosystems. While it may not completely surpass the top proprietary models across all benchmarks, Llama 4 narrows the gap considerably and, in some areas, offers practical advantages, especially for developers seeking transparency, customization and deployment flexibility.

"Open-source models are fantastic enablers," explained Muñoz. "They allow for adaptation and refinement of models based on specifications like data privacy, security, adaptability, monitoring and infrastructure isolation requirements." At the same time, he added, many enterprises still opt for closed-source APIs because of the easier management of deployment, billing and ongoing model support, especially when internal resources are limited.

One of Llama 4’s most significant competitive advantages lies not only in its capabilities but in its licensing model. "If the business needs full control of the model, that’s where Llama truly shines," said Badeev. "And it’s not just open-source; it’s open-source for commercial use, which is a very important distinction." Llama 4’s open commercial licensing makes it especially attractive to enterprises that prioritize data control, security and the ability to run models in isolated environments, Badeev noted. He believes this will continue to add pressure to closed models like GPT-4 and Claude Opus.


Choosing the Right Llama for the Job

Meta’s Llama 4 family is intentionally diversified to meet a range of deployment requirements, making it easier for businesses, researchers and developers to select the right model for their specific needs. Each variant — Behemoth, Maverick and Scout — has distinct strengths that were tuned for different environments and use cases.

| Model | Primary Strength | Best Use Cases | Typical Deployment |
|---|---|---|---|
| Llama 4 Behemoth | High-capacity reasoning and long-context understanding | Scientific research, R&D simulation, healthcare insights | Cloud or large on-premises clusters |
| Llama 4 Maverick | Balanced performance and efficiency | Enterprise applications, customer service, decision-support tools | Cloud, hybrid or mid-range servers |
| Llama 4 Scout | Lightweight, fast inference for edge environments | IoT devices, mobile apps, smart consumer tech | On-device, edge or low-power deployments |

Llama 4 Behemoth

Llama 4 Behemoth is designed for research-intensive workloads, agentic systems and applications requiring deep reasoning, long-context handling or complex decision chains. Its larger model size and extended attention span position it for tasks such as scientific research synthesis, advanced R&D knowledge discovery and agent-based simulation environments. However, its scale also demands substantial computational resources, which could weigh on deployment decisions even in sectors such as healthcare and education, where nuanced reasoning and long-document processing are critical.

"Behemoth is best for solving complex problems, like mathematics — tasks where you, as a human, would really need to sit and think deeply," explained Badeev.

Llama 4 Maverick

Llama 4 Maverick offers a balance between power and efficiency, targeting enterprise environments where latency, cost and versatility are important considerations. Businesses could deploy Maverick in hybrid AI systems — such as customer service chatbots, internal knowledge assistants or decision-support tools — where strong reasoning capabilities and fast response times are both needed. Its efficient inference also makes it potentially suitable for industries like financial services, insurance and compliance, where timely analysis plays a critical role.

Llama 4 Scout

Llama 4 Scout is the lightweight, edge-optimized model intended for device-based applications and resource-constrained environments. It supports intelligent functionality on mobile apps, IoT devices and embedded systems without requiring constant cloud connectivity. Scout’s design may appeal to use cases in consumer tech, smart home applications and frontline service tools that prioritize rapid, localized responses.

"It is remarkable that [Scout's] new context window is huge, allowing assistant-based use cases to maintain a good user experience supported by the long context history of the user interaction," said Muñoz, who added that the model's ability to operate efficiently on a single H100 GPU opens up significant deployment possibilities for edge computing, isolated environments, and resource-constrained applications.

"Scout’s incredible context length is perfect for answering questions, like a chatbot or AI agent. With specs like Scout’s, you don’t need retrieval-augmented generation (RAG) anymore," noted Badeev.

Meta offers multiple deployment options for the Llama 4 family, including cloud-based, on-premises and edge environments. This range enables businesses to align model selection with operational needs, whether that involves running research models in the cloud, deploying AI systems internally for greater data control or enabling lightweight AI features on consumer devices. While Llama 4’s flexibility could broaden its applicability across industries, successful deployment will depend on factors such as infrastructure capabilities, security requirements and use-case complexity.
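Muñoz's point about running Scout on a single H100 comes down to simple arithmetic: weight memory is roughly parameter count times bytes per parameter. The sketch below assumes Scout's total of about 109 billion parameters (the figure listed on Meta's model card), an 80 GB H100 and decimal gigabytes, and it ignores the KV cache and activations, which grow with context length and eat into the remaining headroom. Treat it as a back-of-the-envelope check rather than a sizing tool.

```python
# Back-of-the-envelope weight-memory estimate (weights only).
# Assumptions: ~109e9 total parameters for Scout and 80 GB of memory on one
# H100; KV cache, activations and framework overhead are ignored.

H100_MEMORY_GB = 80  # assumption: the 80 GB H100 variant


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to hold the weights, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


def fits_on_one_gpu(num_params: float, bits_per_param: int,
                    gpu_memory_gb: float = H100_MEMORY_GB) -> bool:
    """True if the weights alone fit in a single GPU's memory."""
    return weight_memory_gb(num_params, bits_per_param) <= gpu_memory_gb


if __name__ == "__main__":
    scout_params = 109e9  # total (not active) parameters, per Meta's model card
    for bits in (16, 8, 4):
        gb = weight_memory_gb(scout_params, bits)
        print(f"{bits:>2}-bit weights: {gb:6.1f} GB, fits on one H100: "
              f"{fits_on_one_gpu(scout_params, bits)}")
```

Only the 4-bit case (about 54.5 GB) fits, consistent with Meta's statement that Scout fits on one H100 with Int4 quantization. Plugging in Behemoth's roughly two trillion parameters at 16 bits gives about 4,000 GB for the weights alone, which is why Behemoth is pitched at cloud or large on-premises clusters.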

Why Llama 4’s Benchmarks Are Raising Eyebrows

Despite the excitement surrounding Llama 4, the release has not been without controversy. Shortly after Meta published its benchmark results, some members of the open-source AI community and independent researchers raised concerns about the fairness and transparency of reported benchmark data.

Some technical observers speculated that Llama 4's weaker-than-expected results may stem from rushed development cycles and incomplete optimization of its Mixture of Experts (MoE) architecture.

In the LocalLLaMA subreddit, one user suggested that while MoE architectures are well-suited to today's hardware constraints and offer strong cost-to-performance ratios when properly trained, Llama 4's results hint that Meta may not have fully capitalized on these advantages due to timeline pressures.

"My best guess for what went wrong is that this project really might have been hastily done. It feels haphazardly thrown together from the outside, as if under pressure to perform. Things might have been disorganized such that the time needed to gain experience training MoEs specifically, was not optimally spent all while there was building pressure to ship something ASAP."

Concerns about benchmark contamination and the need for standardized evaluation practices are becoming a major focus in the AI community. "Until [benchmarks] are standardized, skepticism will remain, potentially impacting user trust and real-world adoption," said Muñoz. At his company, he explained, model evaluation is tied to clearly defined business objectives and acceptance criteria. Models then go through rigorous real-world validation via shadow testing, which combines automated metrics with human review to confirm that they meet both technical and business standards.
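Shadow testing, as Muñoz describes it, means running a candidate model on live traffic without letting its answers reach users. The minimal sketch below uses hypothetical model callables and an assumed automated pass/fail check: the production answer is always what gets returned, the candidate's answer is only logged and scored, and promotion is decided afterward against an acceptance threshold. The threshold and sample-size defaults are arbitrary placeholders, and the human review Muñoz mentions would sit on top of the logged records.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ShadowRecord:
    prompt: str
    production: str
    candidate: str
    candidate_ok: bool


@dataclass
class ShadowRunner:
    production: Callable[[str], str]   # model currently serving users
    candidate: Callable[[str], str]    # model under evaluation (hypothetical)
    check: Callable[[str, str], bool]  # assumed automated acceptance metric
    log: list[ShadowRecord] = field(default_factory=list)

    def handle(self, prompt: str) -> str:
        """Serve the production answer; run and log the candidate silently.

        A real system would call the candidate asynchronously so it adds no
        latency; this sketch runs it inline for simplicity.
        """
        prod_out = self.production(prompt)
        try:
            cand_out = self.candidate(prompt)
            ok = self.check(prompt, cand_out)
        except Exception:  # a failing candidate must never affect users
            cand_out, ok = "", False
        self.log.append(ShadowRecord(prompt, prod_out, cand_out, ok))
        return prod_out

    def pass_rate(self) -> float:
        """Share of logged requests where the candidate met the criterion."""
        if not self.log:
            return 0.0
        return sum(r.candidate_ok for r in self.log) / len(self.log)

    def ready_to_promote(self, threshold: float = 0.95,
                         min_samples: int = 100) -> bool:
        """Promote only with enough traffic and a high enough pass rate."""
        return len(self.log) >= min_samples and self.pass_rate() >= threshold
```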

While benchmarks remain a standard method for evaluating models, some technical experts argue they are increasingly insufficient for assessing real-world capabilities. “To be perfectly honest,” Badeev emphasized, “I generally don’t care about benchmarks. The problem with benchmarks is that the datasets are public, so you can always adjust your model to perform better on these specific datasets — but this can harm general-use performance.” He stressed the importance of assessing models on their parameter count, context capacity, speed and ability to generalize to unpredictable scenarios, rather than on benchmark scores alone.
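Badeev's point about public test sets is the reason contamination checks exist. One common approach, sketched here under simplifying assumptions, flags a benchmark item when it shares a long word n-gram with the training corpus. The 8-gram window, whitespace tokenization and lowercase normalization are illustrative choices rather than any lab's published protocol, and real checks run over indexed corpora far too large for an in-memory set.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lowercased, whitespace-tokenized word n-grams of a text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def build_index(training_docs: list[str], n: int = 8) -> set[tuple[str, ...]]:
    """Collect every n-gram seen in a (toy, in-memory) training corpus."""
    index: set[tuple[str, ...]] = set()
    for doc in training_docs:
        index |= ngrams(doc, n)
    return index


def contaminated(items: list[str], index: set[tuple[str, ...]],
                 n: int = 8) -> list[int]:
    """Indices of benchmark items sharing at least one n-gram with training data."""
    return [i for i, item in enumerate(items) if ngrams(item, n) & index]
```

An n-gram match is only evidence of overlap, not proof that a model memorized the answer; a shorter window catches more paraphrases but also more false positives.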

This debate reflects a broader conversation emerging around accountability in LLM releases. As models become more powerful and widely deployed, transparency about evaluation methods, training data composition and benchmark scope is becoming a critical trust factor — not only for researchers but also for enterprises considering AI adoption at scale. Going forward, the community appears increasingly aligned around the need for standardized, open and verifiable benchmarking practices that prevent selective reporting and ensure that model capabilities are presented accurately and responsibly.

The Road Ahead for Meta and Open-Weight Models

The release of the Llama 4 family represents a significant step in Meta’s ongoing efforts to expand its role in the competitive AI market. Early evaluations suggest the models have narrowed some performance gaps with proprietary systems while preserving the accessibility advantages of an open-weight framework.

As open-weight AI models continue to evolve, the success of deployments like Llama 4 will depend not only on technical capabilities but also on how well they address transparency, governance and performance consistency in practical settings.

Frequently Asked Questions

What is Meta Llama 4?

Meta Llama 4 is a new family of open-weight large language models (LLMs) released by Meta, which includes Llama 4 Behemoth, Llama 4 Maverick and Llama 4 Scout.

Where can I access Llama 4?

Llama 4 Scout and Llama 4 Maverick are both available for download on llama.com and Hugging Face. Llama 4 Behemoth is still training and is not yet available for use.