Key Takeaways

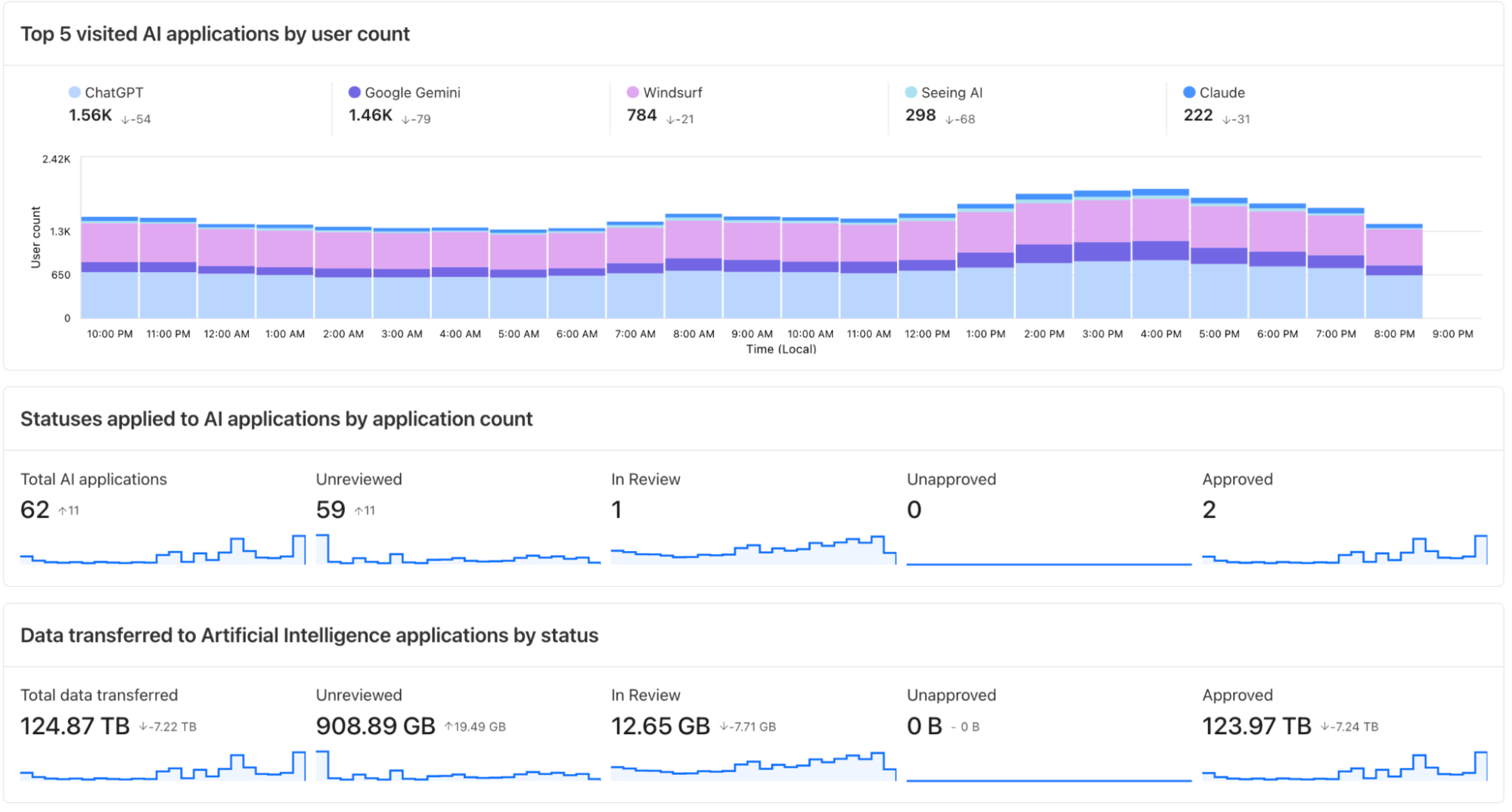

- AI activity visibility. Security teams gain granular visibility into employee interactions with generative AI.

- Shadow AI protection. Enforce strict Zero Trust policies to block unapproved AI tools and applications.

- Data leak prevention. Centralized control helps enterprises scale AI adoption safely and proactively manage sensitive information.

On August 25, 2025, Cloudflare launched AI Security Posture Management (AI-SPM), a new suite of capabilities within its existing Zero Trust platform.

The new features aim to address security risks that emerge as employees across departments increasingly use AI tools without proper oversight. According to company officials, these risks include accidental disclosure of confidential information in chatbots and deployment of AI applications without security team involvement.

All features are now available, allowing organizations to implement controls while maintaining productive AI use throughout their workforce.

AI Security Is Now a Top Boardroom Priority

According to a 2025 Netwrix survey, 60% of organizations already run AI in production, and nearly a third have rebuilt parts of their security architecture to counter AI-driven attacks.

However, traditional signature-based defenses are proving inadequate as AI-powered threats evolve rapidly, blending automation and adaptability. Experts say organizations are shifting to behavioral analytics and machine learning-based anomaly detection, which can spot unusual patterns in real time.
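To make the idea of behavioral anomaly detection concrete, here is a minimal Python sketch that scores a new observation against a historical baseline using a z-score. It is an illustration only, not any vendor's method: the function name `is_anomalous`, the threshold of 3.0 and the sample request counts are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def is_anomalous(history, value, threshold=3.0):
    """Return True if `value` deviates from the baseline in `history`
    by more than `threshold` sample standard deviations.

    `history` is a list of past observations (for example, one user's
    daily count of requests to AI tools). Hypothetical helper.
    """
    if len(history) < 2:
        return False  # not enough data to estimate a baseline
    baseline_mean = mean(history)
    baseline_std = stdev(history)
    if baseline_std == 0:
        # Perfectly flat baseline: any change at all is unusual.
        return value != baseline_mean
    z_score = abs(value - baseline_mean) / baseline_std
    return z_score > threshold


# Illustrative data: nine ordinary days, then two candidate observations.
daily_requests = [10, 12, 11, 9, 10, 11, 10, 12, 9]
spike_flagged = is_anomalous(daily_requests, 200)
normal_flagged = is_anomalous(daily_requests, 11)
```

Scoring each new value against a baseline that excludes it keeps a large outlier from inflating its own standard deviation. Production systems typically rely on richer features and learned models rather than a single statistic.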

Automated threat hunting, AI-driven remediation and continuous monitoring are now essential, but many companies still struggle to reskill staff and update infrastructure quickly enough to keep up.


Regulatory Pressure Is Driving Governance Changes

Data security and privacy remain the top risks, with 55% of leaders citing them as the biggest barriers to AI adoption.

The EU AI Act and voluntary frameworks such as the US NIST AI Risk Management Framework are pushing organizations to adopt governance and transparency protocols for AI workloads. Boards and cyber insurers now demand proof that AI systems are governed as tightly as payment data or PII, making responsible deployment practices like rigorous testing, human oversight and clear data management plans essential for digital trust.

Organizations must balance innovation with risk, embedding continuous testing, audit trails and regulatory adherence into AI workflows while educating staff on new threat vectors. Those who get governance right will have a clear competitive advantage.

The AI Security Challenge

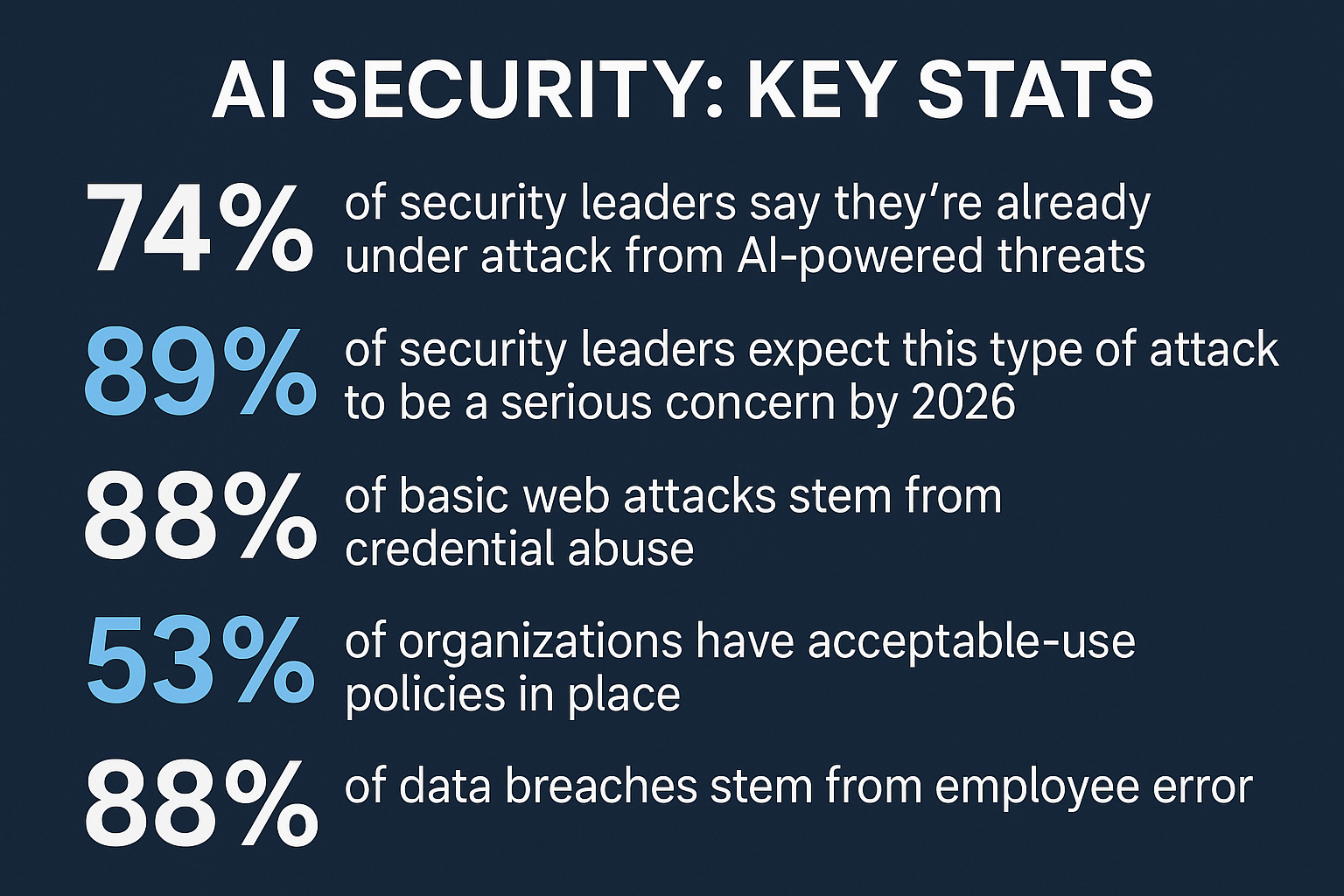

Organizations face an escalating battle against AI-powered cyber threats, with 74% of security leaders believing their organizations are already under attack and 89% expecting these threats to be a serious concern by 2026.

According to industry reports, AI empowers less skilled attackers to launch sophisticated campaigns that can bypass traditional defenses. Credential abuse now powers 88% of basic web application attacks, while AI-generated threats can rapidly evolve to evade signature-based detection.

Organizations struggle to implement AI security because their data governance and protection frameworks are inadequate. Without proper controls, sensitive information can be exposed when employees use external AI services. Many companies lack acceptable use policies: only 53% have guidelines in place, even though employee error causes 88% of data breaches.

Security experts recommend implementing "Zero Trust for AI" approaches that treat every interaction as untrusted, alongside lifecycle security extending beyond production environments. Forward-thinking organizations are now conducting AI-specific red team exercises and implementing continuous monitoring to detect anomalous AI usage patterns.

"The world's most innovative companies want to pull the AI lever to move, build and scale fast, without sacrificing security. We are in a unique position to help power that innovation–and help bring AI to all businesses safely."

- Matthew Prince, CEO and Co-Founder, Cloudflare

AI Security Feature Breakdown

| Feature | Description |
|---|---|
| Shadow AI Report | Reveals which generative AI applications employees access and how |
| Cloudflare Gateway | Applies AI access policies at the network edge and blocks unauthorized usage |
| AI Prompt Protection | Detects risky prompts that may leak or expose sensitive data |
| Zero Trust MCP Server Control | Aggregates and manages AI model calls through a centralized dashboard |
| AI Security Posture Management | Delivers comprehensive awareness and control over organizational AI usage |
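As a rough illustration of the two control types described in the table, policy enforcement against unapproved tools and detection of risky prompts, the Python sketch below combines a default-deny allowlist with simple pattern matching. This is not Cloudflare's implementation or API: the host names, the `evaluate_request` function and the regular expressions are hypothetical, and real data loss prevention relies on far more robust detection than two regexes.

```python
import re

# Hypothetical allowlist of AI tools the security team has approved.
APPROVED_AI_HOSTS = {"chat.approved-ai.example"}

# Deliberately simple illustrative patterns for sensitive data.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def evaluate_request(host, prompt):
    """Return (decision, reasons) for a prompt sent to an AI tool.

    Default-deny: any host not explicitly approved is blocked,
    and approved hosts are still checked for sensitive content.
    """
    if host not in APPROVED_AI_HOSTS:
        return ("block", ["unapproved_host"])
    findings = [
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    ]
    if findings:
        return ("block", findings)
    return ("allow", [])


# Illustrative calls with made-up hosts and prompts.
ok = evaluate_request("chat.approved-ai.example", "Summarize this memo")
shadow = evaluate_request("unknown-ai.example", "Summarize this memo")
leak = evaluate_request("chat.approved-ai.example", "My SSN is 123-45-6789")
```

Checking the allowlist first mirrors the Zero Trust stance of treating every interaction as untrusted until a policy explicitly permits it.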