The Gist

- Validation is crucial. Proper testing and validation of AI systems are essential to prevent costly errors and maintain customer trust.

- Customer preference. Despite technological advances, many customers still prefer the reliability of human interactions over AI.

- Continuous learning. AI must evolve through ongoing data analysis and adaptation to remain effective and relevant in real-world applications.

Customers can’t escape AI.

A staggering 79% of organizations polled said they’re using artificial intelligence in some way, according to a CMSWire report. That’s compared to just 17% of organizations that said they don’t have any AI. And one of the biggest use cases of the tech is to enable customer self-service, something designed to give customers what they want while freeing up time for frontline staff.

And while artificial intelligence makes some big promises, it doesn’t always live up to expectations. Case in point: these four AI fails that had companies backtracking on their efforts.

While tech failure is always good for a laugh, there’s also something we can learn from these missteps.

1. McDonald’s Rolls Back AI Drive-Thru

If you’ve gone through a McDonald’s drive-thru, you’re familiar with the spiel: “Welcome to McDonald’s. Can I take your order?”

While that used to be a line said by a person in a headset, the fast-food giant decided to try something new: AI. And it went hilariously wrong.

One customer asking the bot for food ended up with more than $250 worth of chicken nuggets on their order. Another got some unwanted condiments while trying to order water and ice cream. In another incident, a customer trying to remove a Diet Coke from their order (one that a person in the neighboring drive-thru lane had asked for) inadvertently ended up with nine sweet teas.


Ultimately, McDonald’s decided to pull the plug on its AI, which it had rolled out to more than 100 restaurants. But it’s not giving up on the tech for order-taking entirely, with McDonald’s USA chief restaurant officer Mason Smoot saying the company will make “an informed decision on a future voice ordering solution by the end of the year.”

The Lesson: Pick the Right Channels to Implement AI

The McDonald’s initiative made sense in theory, as drive-thrus are notoriously inefficient, said Brian Cantor, managing director of digital at Customer Management Practice. Customers wait in long lines, struggle to communicate with employees and hope to receive the correct order.

“But, thus far,” Cantor said, “the McDonald’s initiative has only exacerbated these challenges. Prone to errors, the project neither improved the accuracy nor expediency of the drive-thru process. And insofar as the platform could not be trusted to deliver a perfectly consistent experience, it did not effectively enable organizations to reduce ‘drive-thru’ headcount, extend service hours and shift employees to other, more experiential tasks.”

Despite the hype around AI self-service, he explained, research shows that customers prefer agent-assisted experiences through traditional channels like the phone. That’s not because customers love waiting on hold, but because technologically progressive experiences are not living up to their promise of greater convenience and relevance.

“If customers end up exerting more effort trying to use an AI chatbot, all while knowing they will probably need an agent to get the best resolution anyway, they have no reason to trust AI,” said Cantor. “They have no reason to break from their (admittedly imperfect) habits. This hurts the brand reputation, curbs AI adoption and ultimately prevents brands from delivering efficiently at scale.”


2. DPD’s Online Chatbot Criticizes Company

Parcel delivery company DPD uses an AI-powered chatbot to help answer customers’ queries. But that chatbot went viral in January 2024 when it swore at a customer (at the customer’s request) and criticized the company.

In a tweet that’s been viewed more than 2 million times, a customer shared the bot's responses, with highlights like: “DPD is the worst delivery firm in the world. They are slow, unreliable and their customer service is terrible. I would never recommend them to anyone.”

Parcel delivery firm DPD have replaced their customer service chat with an AI robot thing. It’s utterly useless at answering any queries, and when asked, it happily produced a poem about how terrible they are as a company. It also swore at me. 😂 pic.twitter.com/vjWlrIP3wn

— Ashley Beauchamp (@ashbeauchamp) January 18, 2024

In response, DPD said it had disabled the part of the chatbot responsible for these replies and planned a system update to prevent future issues.

The Lesson: Train AI Systems to Handle Customer Queries Effectively

This AI fail highlights two key takeaways, according to Cantor.

“First, bots continue to underperform when it comes to providing relevant, personalized answers to customer inquiries. Brands have to remember that today’s customers are increasingly digital-savvy and already have access to your ‘standard’ policies, knowledge and FAQ answers. When they interact with a conversational tool, they are seeking easier pathways to the right answer, more intuitive communication or deeper help than what a stock ‘policy’ offers. If your bot is not up to the challenge, it will fall short.”

Further, he added, this episode underscores the risks of generative AI platforms. Without the right safeguards and training, generative AI can hallucinate or be manipulated by customers into saying things the brand never intended.

“Whereas tricking a bot to say mean things about the bot may not seem like the end of the world,” Cantor explained, “tricking it to make false or non-compliant financial or medical statements could have catastrophic business consequences.”

3. Zillow Offers Sees Its Demise

Have you heard of Zillow Offers? If you’ve only been perusing the real estate site the past couple of years, probably not.

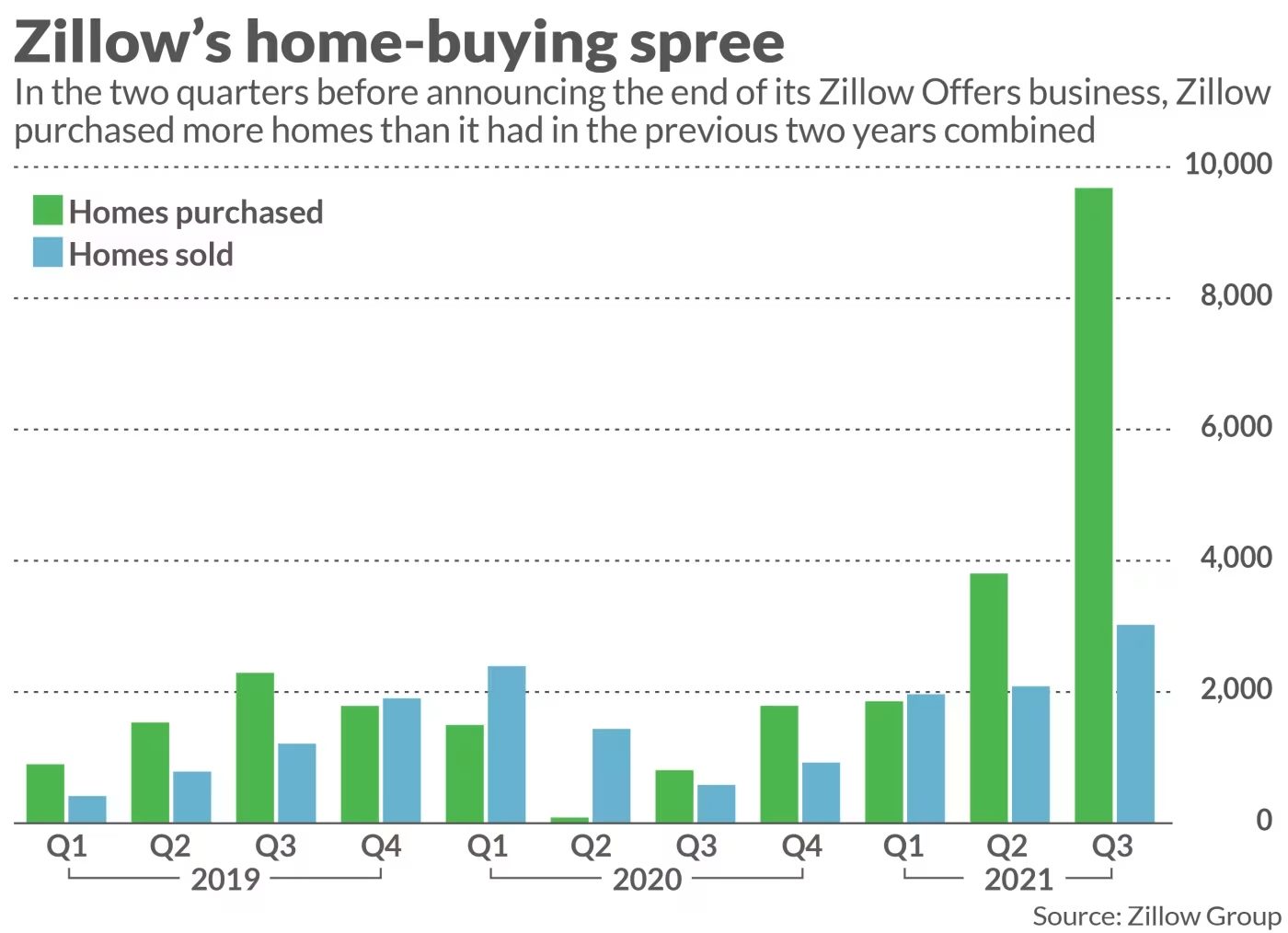

In 2018, Zillow launched its iBuying service, called Zillow Offers, which provided home sellers with a cash offer within two days (in exchange for a 7.5% service fee). Then, Zillow would turn around and resell the homes in an attempt to make a profit. The program was available in 25 cities in the US and brought in $1.7 billion in revenue in 2020.

What went wrong? Zillow shut down Zillow Offers in 2021 for several reasons, one being a bungled AI pricing algorithm that misjudged the value of the homes Zillow purchased, leading to losses of more than $550 million.

During the third quarter of 2021 alone, Zillow bought 9,680 homes and sold 3,032 — with sales producing an average gross loss of more than $80,000 per home.

The Lesson: Continuously Validate AI Models for Accuracy

The downfall of Zillow Offers shows just how important it is to establish strong validation frameworks for AI models, especially those that directly influence customer interactions and business outcomes.

For AI to be effective in customer-facing roles, it needs to be trained on diverse and comprehensive datasets and regularly updated to reflect changing market conditions. According to Zillow Group co-founder and CEO Rich Barton, “the unpredictability in forecasting home prices far exceeds what we anticipated and continuing to scale Zillow Offers would result in too much earnings and balance-sheet volatility.”

For companies set on building AI into their operations, ongoing validation checks that confirm AI outputs remain accurate and relevant are a must. Without these safeguards, AI systems can make mistakes that have direct, negative impacts on business health.
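What might such a check look like? Below is a minimal Python sketch, assuming a model whose predictions can later be compared against realized outcomes (for a home-pricing model, the eventual sale price). It is not Zillow’s method; the names `Outcome`, `mape`, `bias` and `validate`, and the 5% error and 2% bias tolerances, are hypothetical and chosen only for illustration. It tracks two numbers: how far off the model is on average, and whether it is consistently off in one direction, since a model that systematically overestimates values will keep overpaying.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Outcome:
    predicted: float  # value the model estimated (e.g. a home's resale price)
    actual: float     # value realized later (e.g. the final sale price)


def mape(outcomes: list[Outcome]) -> float:
    """Mean absolute percentage error: how far off predictions are, on average."""
    return mean(abs(o.predicted - o.actual) / abs(o.actual) for o in outcomes)


def bias(outcomes: list[Outcome]) -> float:
    """Mean signed percentage error: positive means the model overestimates."""
    return mean((o.predicted - o.actual) / abs(o.actual) for o in outcomes)


def validate(outcomes: list[Outcome], max_error: float = 0.05,
             max_bias: float = 0.02) -> list[str]:
    """Return a list of problems found; an empty list means the check passed.

    max_error and max_bias are hypothetical tolerances for illustration only.
    """
    if not outcomes:
        return ["no realized outcomes to validate against"]
    problems = []
    error = mape(outcomes)
    if error > max_error:
        problems.append(f"average error {error:.1%} exceeds {max_error:.0%}")
    skew = bias(outcomes)
    if abs(skew) > max_bias:
        direction = "over" if skew > 0 else "under"
        problems.append(f"model systematically {direction}estimates by {abs(skew):.1%}")
    return problems


if __name__ == "__main__":
    # Hypothetical recent predictions vs. actual sale prices.
    recent = [Outcome(500_000, 480_000), Outcome(300_000, 290_000),
              Outcome(420_000, 360_000)]
    for problem in validate(recent):
        print("ALERT:", problem)
```

Run on a schedule against fresh outcomes, a check like this turns “the market changed” into an alert rather than a quarterly surprise; what to do when it fires (pause purchases, retrain, widen margins) remains a business decision.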


4. Air Canada’s AI Chatbot Promises Unauthorized Discounts

Back in April, I wrote about Air Canada’s AI-powered chatbot, which told a customer he could claim a bereavement fare discount after booking his $1,200 flight. When he tried to collect, however, human customer service reps told him the bot was mistaken, and he would get no money back.

At the time, Air Canada argued that the AI chatbot was a “separate legal entity” and that the airline wasn’t responsible for what it said. But a Canadian tribunal disagreed and ordered the airline to pay up, with tribunal member Christopher Rivers writing, “It should be obvious to Air Canada that it is responsible for all the information on its website.”

Air Canada did not respond to my original request for comment, and the AI bot is no longer available on the airline’s website.

The Lesson: Recognize AI Limitations and Provide Human Support

There are levels to self-service, said Cantor.

“Are all bots capable of providing enhanced support for all issues? Are all businesses willing to let their bots handle all conceivable customer inquiries? Of course not.”

In these cases, he explained, it’s important for the bot to recognize its limitations and provide a seamless pathway to a live agent — while also sharing relevant context, so that the agent doesn’t have to start from scratch.

In fact, according to CCW Digital’s 2024 Consumer Preferences Survey, the promise of seamless escalation is the No. 1 way to get customers to try out a chatbot.
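The escalation pattern Cantor describes can be sketched in a few lines. The Python below is a minimal illustration, assuming a bot that tracks the conversation topic, its own confidence in an answer, and whether the customer has asked for a person. Every name here (`Conversation`, `Handoff`, `maybe_escalate`), the restricted-topic list and the 0.75 confidence threshold are hypothetical, not taken from any vendor’s product. The key design choice is that the handoff carries the full transcript, so the agent doesn’t have to start from scratch.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical topics a business might never let its bot settle on its own,
# e.g. fare-policy questions like the one in the Air Canada case.
RESTRICTED_TOPICS = {"refund", "bereavement_fare", "legal", "medical"}
MIN_CONFIDENCE = 0.75  # hypothetical threshold for trusting the bot's own answer


@dataclass
class Conversation:
    customer_id: str
    topic: str
    transcript: list[str] = field(default_factory=list)
    bot_confidence: float = 1.0
    asked_for_human: bool = False


@dataclass
class Handoff:
    customer_id: str
    reason: str
    topic: str
    transcript: list[str]  # full history passed to the live agent


def escalation_reason(conv: Conversation) -> Optional[str]:
    """Return why the bot should hand off, or None if it can keep going."""
    if conv.asked_for_human:
        return "customer requested a human"
    if conv.topic in RESTRICTED_TOPICS:
        return f"topic '{conv.topic}' is reserved for human agents"
    if conv.bot_confidence < MIN_CONFIDENCE:
        return f"bot confidence {conv.bot_confidence:.2f} below {MIN_CONFIDENCE}"
    return None


def maybe_escalate(conv: Conversation) -> Optional[Handoff]:
    """Package the conversation for a live agent if escalation is warranted."""
    reason = escalation_reason(conv)
    if reason is None:
        return None
    return Handoff(conv.customer_id, reason, conv.topic, list(conv.transcript))


if __name__ == "__main__":
    conv = Conversation("c-123", "bereavement_fare",
                        ["Customer: Can I claim a bereavement fare after booking?"])
    handoff = maybe_escalate(conv)
    if handoff is not None:
        print(handoff.reason)
```

Checking an explicit human request first means the customer is never argued with; the topic list encodes which questions the business has decided a bot should not answer at all, regardless of how confident the bot feels.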

“As brands gain insight into how customers use bots successfully, they can begin to educate all customers on the best and worst use cases,” said Cantor. “This will help brands finally overcome the stigma — and finally achieve the convenience, efficiency and volume management benefits of the AI revolution.”