Enterprise AI adoption is everywhere. But here’s the truth: most companies are still stuck in outdated ways of thinking. The focus has been overly technical, obsessed with crafting prompts to control AI outputs. We haven’t paused to ask:

Should humans adapt to AI or should AI adapt to us?

Right now, most organizations fall into one of two camps:

- They treat AI like code: built for precision, relying on structured, optimized prompts. Let’s call that the “rigid” approach.

- They use AI as a collaborative tool, expecting it to evolve with human communication, not dictate it.

And here’s the real issue: most are still stuck in the first mindset, when the future clearly calls for the second.

The Limitations of Traditional Prompt Engineering

Prompt engineering, writing precise inputs to guide AI, has its place. But let’s be honest: it’s a workaround. It favors those with technical skillsets, not those with critical thinking or creative insight.

Where Rigid Prompting Falls Short:

- Exclusionary by design: Not everyone can master this “language.”

- Kills efficiency: Time spent crafting perfect prompts is time not spent moving work forward.

- Strips AI of potential: These prompts constrain rather than expand AI’s utility.

Demand for prompt engineers has declined sharply. According to LinkedIn News, the rapid proliferation of distinct AI models has made it impossible to create one universal prompt engineering playbook. That skill, once hot, is already cooling.

What Forward-Thinking Companies Are Doing

Some enterprises have already embraced the shift. They’re not training people to communicate like machines; they’re designing AI to respond to how people already communicate.

- JPMorgan Chase: Their large language model-powered assistant supports 200,000+ employees preparing financial documents — no prompt gymnastics required.

- Microsoft & Schaeffler: Natural-language factory assistants reduce diagnostic delays by 50%, proving that context-aware interaction can streamline industrial workflows.

The Shift We’re Living Through

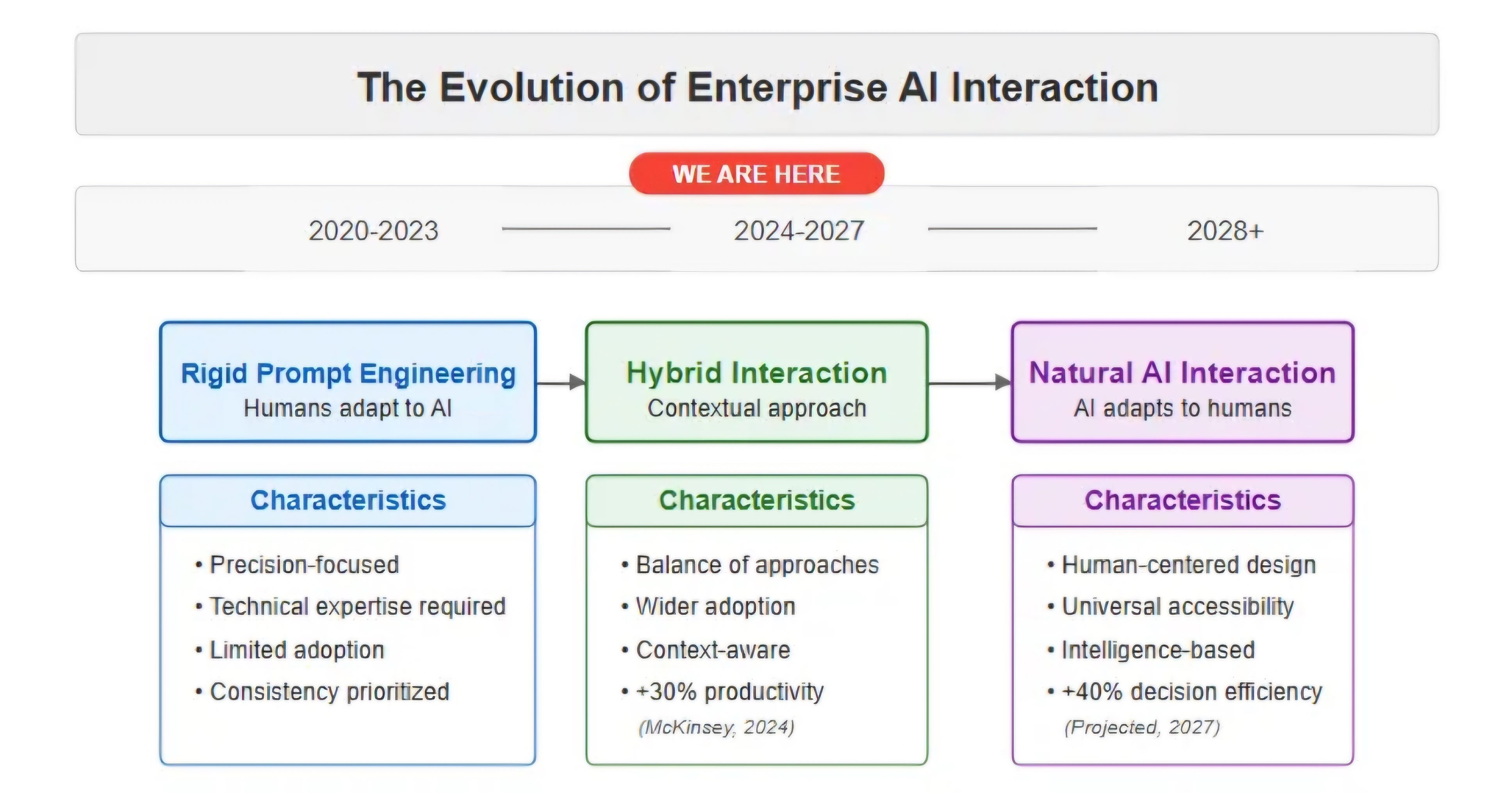

The graphic above shows our three-phase journey:

- Phase 1 (2020-2023): "Rigid prompt engineering" forced humans to speak machine language.

- Phase 2 (2024-2027): This is where we are now, a hybrid approach that balances structure and natural communication.

- Phase 3 (2028+): Where we're headed is "Natural AI interaction": systems designed to work with how we naturally think and communicate. That shift may not be comfortable, but it is projected to drive a 40% improvement in decision efficiency. The projection is backed by Gartner's forecast on AI agents and by McKinsey's data showing that human-centered AI adoption delivers measurable productivity gains.

Beyond HITL: The Human Scaffolding Approach

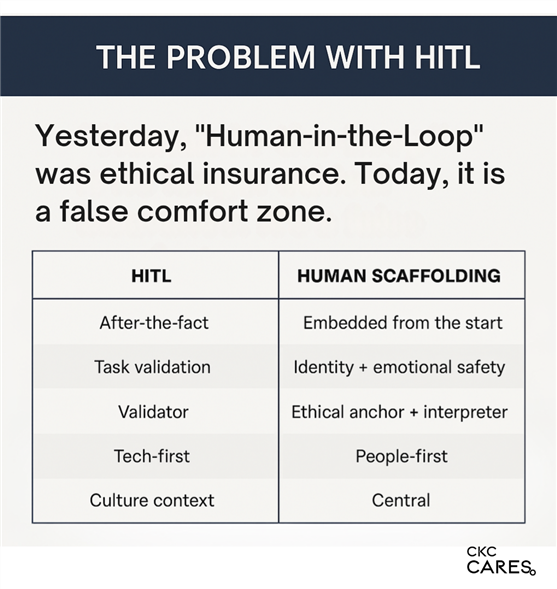

Traditional Human-in-the-Loop (HITL) approaches have become a false comfort zone for enterprises. They include humans in the process, but those humans typically act as after-the-fact validators rather than being embedded from the start. CKC Cares’ Human Scaffolding HITL approach builds in human involvement at every stage, by design and by default.

Human Scaffolding transforms the relationship between humans and AI from a validation-based approach to one that preserves human identity, emotional safety and ethical grounding. This approach positions humans as central to the technology's purpose and function, not as a "tool" for quality control.

Why Human Scaffolding Matters to the Business

Companies that enable more natural AI interaction are already gaining ground.

Tangible Benefits

- Better decision-making: When tools understand unstructured input, you get real intelligence, not just search results.

- Higher internal adoption: Teams use AI more when they can speak normally.

- Strategic lift: AI becomes an asset that empowers, not a tool that frustrates.

Common Concerns

"Natural interaction is inconsistent."

Yes, it can be, but so can most structured prompts. The solution isn’t rigidity; it’s smarter, context-aware design.

"It’s hard to measure."

It’s not. Companies like Microsoft and OpenAI are already measuring success through usability, adoption and reduced time-to-value.

"It’s risky."

All AI use can be risky. Rigid prompts do not erase that. But interaction models that understand context help reduce misunderstanding and increase trust.

Getting the Balance Right

There’s still a place for structured prompting, especially where risk is high and precision matters:

- Financial reporting

- Legal documentation

- Medical protocols

But most of the day-to-day? Collaboration, brainstorming, drafting — those thrive with natural interaction.

Future-Proofing Leadership

This is a leadership challenge.

- Design systems that amplify human intelligence

- Lead teams that expect technology to adapt, not the other way around

- Build cultures that prioritize communication, not conformity

AI is redefining leadership, not replacing it.

Engineering Trade-Offs (And How to Handle Them)

Precision vs. Accessibility

- Technical Challenge: Language models produce more variable outputs when their inputs are less structured.

- Solution Architecture: Set sampling temperature according to how critical the task is. Deploy context-aware guardrails that tighten in high-risk scenarios and stay flexible elsewhere.

- Implementation Example: Multi-modal validation systems that cross-reference natural language requests against structured data schemas before execution.
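To make the precision-versus-accessibility idea concrete, here is a minimal sketch of criticality-based temperature settings plus a schema check on fields pulled from a natural-language request. The risk tiers, temperature values, schema and function names are all illustrative assumptions, not settings from any specific vendor or product.

```python
# Hypothetical risk tiers and settings; tune these for your own workloads.
SAMPLING_BY_RISK = {
    "high": {"temperature": 0.0, "require_schema": True},    # e.g. financial reporting
    "medium": {"temperature": 0.3, "require_schema": True},
    "low": {"temperature": 0.8, "require_schema": False},    # e.g. brainstorming
}

# Hypothetical schema: field name -> expected Python type.
EXPENSE_REPORT_SCHEMA = {"employee_id": str, "amount": float, "currency": str}


def sampling_config(risk: str) -> dict:
    """Return sampling settings for a risk tier, defaulting to the strictest."""
    return SAMPLING_BY_RISK.get(risk, SAMPLING_BY_RISK["high"])


def validate_request(fields: dict, schema: dict) -> list[str]:
    """Check fields extracted from a natural-language request against a schema.

    Returns a list of human-readable problems; an empty list means it passed.
    """
    problems = []
    for name, expected_type in schema.items():
        if name not in fields:
            problems.append(f"missing field: {name}")
        elif not isinstance(fields[name], expected_type):
            problems.append(f"wrong type for {name}: expected {expected_type.__name__}")
    return problems


if __name__ == "__main__":
    print(sampling_config("low"))
    extracted = {"employee_id": "E123", "amount": "forty"}
    print(validate_request(extracted, EXPENSE_REPORT_SCHEMA))
```

Defaulting unknown tiers to the strictest settings is a deliberate choice here: a task that nobody has classified gets treated as high risk until someone says otherwise.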

Build vs. Buy

- Technical Assessment: Custom LLM training requires significant compute resources (typically 300+ GPU days for fine-tuning at enterprise scale) and specialized ML engineering talent.

- Architecture Decision Framework: Evaluate base models against domain-specific requirements, considering parameter efficiency, inference costs and latency requirements.

- Hybrid Approach: Use vendor-provided foundation models with enterprise-specific retrieval-augmented generation (RAG) systems to balance out-of-the-box capabilities with proprietary knowledge.
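The hybrid approach can be sketched in a few lines. This is a toy illustration only: it swaps in bag-of-words cosine similarity where production RAG systems typically use embeddings and a vector store, the documents are made up, and the call to a vendor model is left out entirely. What it shows is the shape of the idea: retrieve proprietary context first, then hand it to the model alongside the user's own wording.

```python
import math
import re
from collections import Counter

# Hypothetical in-house knowledge base; in practice this would be a document store.
DOCUMENTS = [
    "Travel expenses above 500 USD need director approval.",
    "Laptops are refreshed every three years.",
    "Quarterly forecasts are due on the fifth business day.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Combine retrieved context with the user's question, left in their own words."""
    context = "\n".join(retrieve(question, documents))
    return f"Use only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("Who approves travel expenses?", DOCUMENTS))
```

Note that the user never has to phrase the question in a special format; the retrieval layer does the work of grounding it in company knowledge.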

Resource Load

- Computational Considerations: Natural prompting tends to increase token usage, often by around 30-40%, due to richer language, added context and follow-up interaction.

- Optimization Techniques: Implement sliding context windows, strategic token pruning and caching frequently used contexts to reduce computational overhead.

- Resource Allocation Strategy: Distribute compute resources dynamically based on interaction complexity, with simpler requests using smaller, faster models.
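Here is a rough sketch of three of the techniques named above: a sliding context window, a cache for frequently used context, and routing simpler requests to a smaller model. The word-count token estimate, the routing threshold and the model names are placeholder assumptions; a real system would use the model's own tokenizer and its own complexity signals.

```python
from functools import lru_cache


def estimate_tokens(text: str) -> int:
    """Rough token count: whitespace-separated words (an approximation, not a real tokenizer)."""
    return len(text.split())


def sliding_window(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose combined estimate fits the budget."""
    kept, used = [], 0
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))


@lru_cache(maxsize=128)
def shared_context(team: str) -> str:
    """Stand-in for an expensive lookup of context that many requests reuse."""
    return f"Glossary and style rules for the {team} team."


def pick_model(request: str, threshold: int = 50) -> str:
    """Route short requests to a smaller model. Model names are placeholders."""
    return "small-fast-model" if estimate_tokens(request) <= threshold else "large-model"


if __name__ == "__main__":
    history = ["one two three", "four five", "six"]
    print(sliding_window(history, budget=3))
    print(pick_model("Summarize this email for me"))
```

To put the overhead in perspective: at the 30-40% increase cited above, an exchange that used 1,000 tokens with structured prompts would use roughly 1,300 to 1,400 with natural ones, which is the kind of gap these techniques aim to claw back.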

Regulatory Reality

- Make It Traceable: Create layers that translate natural conversations into auditable decision trails.

- Two Streams, One Process: Run the user-facing and compliance pipelines in parallel rather than choosing one or the other.

- Know When It's Drifting: Build in real-time detection for when natural-language requests start crossing compliance lines.
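One way to picture a traceable trail is a log in which every natural-language request becomes a structured record that links to the one before it, so later edits are detectable. The sketch below assumes a simple hash chain and a keyword watch list; the field names, the terms and the function names are hypothetical, and real compliance detection would rely on policy-specific rules or a classifier rather than substring matching.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical watch list; a real deployment would use policy-specific rules.
RESTRICTED_TERMS = {"insider", "backdate", "off the books"}


def flag_terms(request: str) -> list[str]:
    """Return restricted terms found in a request, sorted for stable output."""
    lowered = request.lower()
    return sorted(term for term in RESTRICTED_TERMS if term in lowered)


def append_audit_record(trail: list[dict], user: str, request: str, action: str) -> dict:
    """Append a tamper-evident record that links to the previous record's hash."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "request": request,
        "action": action,
        "flags": flag_terms(request),
        "prev_hash": trail[-1]["hash"] if trail else "",
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    trail.append(record)
    return record


def verify_trail(trail: list[dict]) -> bool:
    """Recompute each hash and check that the chain links are intact."""
    prev = ""
    for record in trail:
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if record["prev_hash"] != prev or hashlib.sha256(payload).hexdigest() != record["hash"]:
            return False
        prev = record["hash"]
    return True
```

Because the record is written in the same step that handles the request, the user-facing flow and the compliance flow run side by side instead of one waiting on the other.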

How to Start (Without Waiting)

Run an Interaction Audit

- Identify where rigid systems slow people down

- Look for low-risk areas where natural interaction can shine

Rethink Skills

- Train people to collaborate with AI, not command it

- Build literacy, not dependency

Pilot with Purpose

- Start small and measure everything: time saved, satisfaction, output quality

Choose Tools That Learn

- Use vendors who prioritize human-centered adaptation

Measure What Actually Matters

- Track usage and adoption

- Analyze performance trends

- Listen to your team: are they excited, empowered or exhausted?
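If you want a starting point for the measurement step, a pilot summary can be as simple as the sketch below. The session fields, the 1-to-5 satisfaction scale and the function name are assumptions for illustration; swap in whatever your own pilot actually logs.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PilotSession:
    """One logged AI-assisted task. All fields are hypothetical examples."""
    user: str
    baseline_minutes: float   # time the task took before the pilot
    actual_minutes: float     # time the task took with the assistant
    satisfaction: int         # survey score, 1 (low) to 5 (high)


def summarize_pilot(sessions: list[PilotSession], team_size: int) -> dict:
    """Summarize adoption, time saved and satisfaction for a pilot."""
    if not sessions or team_size <= 0:
        raise ValueError("need at least one session and a positive team size")
    active_users = {s.user for s in sessions}
    return {
        "adoption_rate": len(active_users) / team_size,
        "avg_minutes_saved": mean(s.baseline_minutes - s.actual_minutes for s in sessions),
        "avg_satisfaction": mean(s.satisfaction for s in sessions),
    }


if __name__ == "__main__":
    sessions = [
        PilotSession("ana", 30, 20, 4),
        PilotSession("ben", 60, 45, 5),
        PilotSession("ana", 20, 15, 3),
    ]
    print(summarize_pilot(sessions, team_size=4))
```

Numbers like these only cover the first two bullets. The third, how your team actually feels, still takes a conversation.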

Enterprises stuck in prompt precision are solving yesterday’s problems. The organizations that thrive tomorrow will be the ones designing today for how humans naturally think, work and lead.
