Imagine asking your go-to AI assistant to summarize a complex legal document. It generates a response that sounds correct, but when a legal expert reviews it, critical nuances are missing — subtle distinctions in legal terminology have been overlooked, altering the meaning entirely. Despite its fluency, the AI lacks true comprehension, failing to connect concepts beyond statistical patterns.

This scenario is not uncommon. A Texas attorney was recently sanctioned $15,000 for submitting court filings with fabricated legal citations generated by AI. The attorney, relying on an AI-powered research tool, assumed the citations were legitimate, only to discover that the AI had "hallucinated" legal precedents that did not exist — leading to a serious professional violation and reputational damage.

The Hallucination Problem

The Texas case highlights a fundamental flaw in AI-driven natural language processing (NLP). AI models can generate convincing yet inaccurate information, particularly in fields requiring precision, such as law and academia. AI chatbots frequently hallucinate citations, sometimes offering real but irrelevant sources or citing materials they have not actually processed — especially if the content is paywalled or unavailable in their training data. Even when summarizing text, hallucination remains a risk.

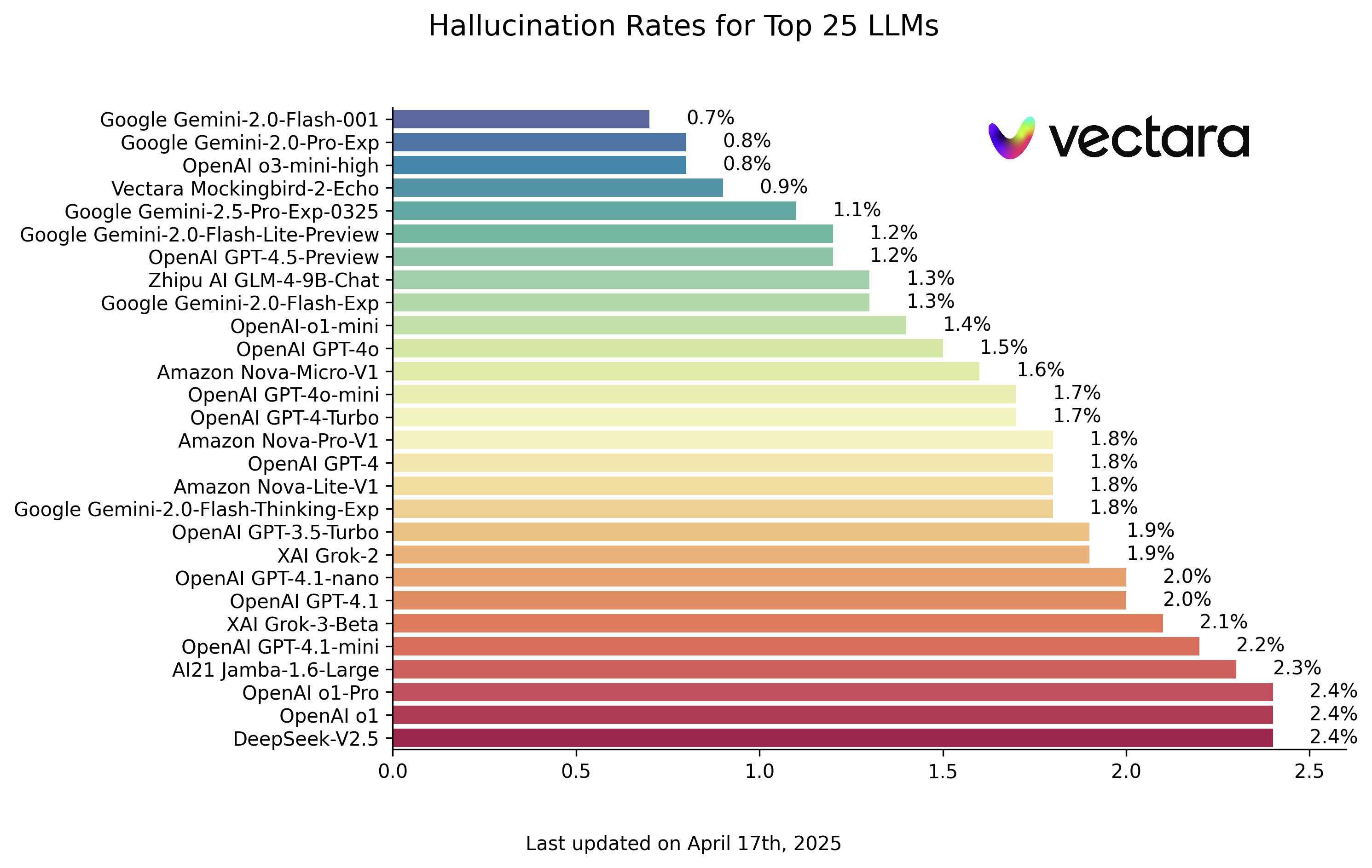

The Hallucination Leaderboard, which tracks AI accuracy, shows OpenAI-o1 with a 2.4% hallucination rate, while Google Gemini-Pro performs worse at 7.7%. These figures show that NLP models still struggle with factual reliability, contextual accuracy and knowledge verification, reinforcing the need for more structured reasoning in NLP applications.

In my recent study on natural language processing and neurosymbolic AI, co-authored with Dr. James Hutson, we explore how the integration of neural networks and symbolic AI (NeSy AI) is reshaping NLP. This hybrid approach merges the pattern recognition capabilities of deep learning with the structured reasoning of symbolic AI, producing more nuanced and contextually aware language models. Our findings suggest that incorporating rule-based inferences and memory activations can significantly enhance accuracy.

As the field continues to evolve, the question is no longer whether AI can process language effectively, but whether it can truly comprehend meaning, reason contextually and apply knowledge beyond statistical patterns.

AI Still Fails at True Language Understanding

AI models still exhibit serious limitations when it comes to semantic ambiguity, contextual awareness and reasoning. Traditional NLP approaches, like those used in GPT and BERT models, rely on massive datasets and statistical correlations, which, while effective for pattern recognition, fall short when deeper understanding and reasoning are required.

For instance, consider Named Entity Recognition (NER) — a critical NLP task that identifies names of people, organizations and locations in text. Conventional deep learning models excel in extracting named entities but often misinterpret ambiguous references or struggle with domain-specific contexts. A standard neural network model may recognize "Washington" as a location but fail to distinguish whether it refers to Washington State, Washington D.C. or President George Washington without additional reasoning capabilities.

Similarly, deep learning-based neural machine translation models frequently generate fluent translations but fail to preserve meaning in complex sentences or idiomatic expressions. These shortcomings highlight why purely neural approaches are insufficient for comprehensive language understanding — they lack the ability to apply structured reasoning, logical inference and contextual disambiguation.

How Neurosymbolic AI Blends Learning and Logic

To overcome these challenges, researchers have turned to Neurosymbolic AI (NeSy AI) — an approach that integrates deep learning with symbolic reasoning. Unlike purely neural methods, NeSy AI not only learns from data but also applies structured logic and rule-based inference, allowing it to better handle abstract reasoning and complex language tasks.
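To make the idea concrete, here is a minimal sketch of how a symbolic layer might re-rank a neural model's guesses for the ambiguous "Washington" example discussed earlier. Everything in it is an illustrative assumption rather than the method from our study: the `Candidate` class, the `CUES` table, the `bonus` weight and the neural scores are all made up for demonstration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    entity: str          # one possible reading of the mention
    neural_score: float  # hypothetical confidence from a neural tagger


# Hypothetical hand-written cue words; a real system would draw on a knowledge base.
CUES = {
    "George Washington": {"president", "general", "he", "his"},
    "Washington State": {"seattle", "olympia", "state", "governor"},
    "Washington, D.C.": {"congress", "capital", "capitol", "federal"},
}


def disambiguate(sentence, candidates, bonus=0.3):
    """Pick the reading whose neural score plus rule-based cue bonus is highest."""
    tokens = {t.strip(".,;:!?\"'").lower() for t in sentence.split()}

    def score(candidate):
        hits = len(tokens & CUES.get(candidate.entity, set()))
        return candidate.neural_score + bonus * hits

    return max(candidates, key=score)


# Assumed neural output: the tagger alone slightly prefers the state reading.
candidates = [
    Candidate("Washington State", 0.40),
    Candidate("Washington, D.C.", 0.35),
    Candidate("George Washington", 0.25),
]

person = disambiguate(
    "Washington crossed the Delaware before he became president.", candidates
)
city = disambiguate(
    "Congress returned to Washington to vote on the federal budget.", candidates
)
print(person.entity)
print(city.entity)
```

The neural scores alone would pick the same reading for both sentences. The rule layer is what lets surrounding context change the outcome, and because the cues are explicit, the decision can be inspected.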

Our research explores how NeSy AI enhances NLP by incorporating memory-activated reasoning mechanisms that improve NER accuracy, semantic disambiguation and contextual adaptation. In particular, we found that hybrid architectures like BiLSTM + CRF (Bidirectional Long Short-Term Memory with Conditional Random Fields) significantly outperform traditional deep learning models when applied to NER tasks.

This hybrid approach provides several advantages:

- Improved Contextual Understanding: NeSy AI analyzes entire sentence structures, reducing errors caused by isolated word predictions.

- Enhanced Semantic Disambiguation: By incorporating symbolic reasoning, NeSy AI can infer missing contextual details and resolve ambiguity more effectively.

- Better Adaptability: Hybrid models can adapt to specialized domains, such as legal and medical NLP applications, where precise terminology and reasoning are crucial.
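The contrast between isolated word predictions and whole-sentence analysis can be illustrated with the decoding step a CRF layer typically performs on top of a BiLSTM. The sketch below is a simplified Viterbi decoder, not the implementation from our study: the emission scores stand in for BiLSTM outputs and are invented, and the transition function is hard-coded here, whereas a trained CRF would learn its transition scores from data.

```python
import math

TAGS = ["O", "B-LOC", "I-LOC"]
NEG_INF = -math.inf


def transition(prev, curr):
    """Hard-coded structural constraint: I-LOC cannot directly follow O."""
    if curr == "I-LOC" and prev == "O":
        return NEG_INF
    return 0.0


def greedy(emissions):
    """Baseline: choose the best tag for each token in isolation."""
    return [max(TAGS, key=lambda t: scores[t]) for scores in emissions]


def viterbi(emissions):
    """Find the highest-scoring tag sequence under the transition constraint."""
    # A sequence may not start with I-LOC.
    best = {t: (emissions[0][t] if t != "I-LOC" else NEG_INF) for t in TAGS}
    backpointers = []
    for scores in emissions[1:]:
        new_best, pointers = {}, {}
        for curr in TAGS:
            prev = max(TAGS, key=lambda p: best[p] + transition(p, curr))
            new_best[curr] = best[prev] + transition(prev, curr) + scores[curr]
            pointers[curr] = prev
        best = new_best
        backpointers.append(pointers)
    # Trace the best path backwards from the best final tag.
    path = [max(TAGS, key=lambda t: best[t])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return path[::-1]


# Invented emission scores for the tokens "in", "New", "York".
emissions = [
    {"O": 2.0, "B-LOC": 0.1, "I-LOC": 0.1},
    {"O": 1.0, "B-LOC": 0.9, "I-LOC": 0.2},
    {"O": 0.5, "B-LOC": 0.3, "I-LOC": 2.0},
]

print(greedy(emissions))   # ['O', 'O', 'I-LOC']
print(viterbi(emissions))  # ['O', 'B-LOC', 'I-LOC']
```

Token-by-token prediction produces an invalid sequence in which a location continues without ever beginning. Decoding over the whole sentence recovers "New York" as a single entity, even though "New" on its own looked slightly more like a non-entity.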

Key Applications of Neurosymbolic AI in NLP

The integration of neural and symbolic AI is already demonstrating real-world impact in several key areas:

- Named Entity Recognition (NER): Traditional deep learning models often struggle with contextual ambiguity in entity recognition. NeSy AI models incorporate structured knowledge bases to differentiate entities based on context, improving accuracy in legal, medical and scientific texts.

- Question Answering (QA) Systems: Standard AI models rely on statistical associations rather than true understanding. NeSy AI enables fact-based reasoning, allowing AI assistants to provide more accurate and explainable answers to complex queries.

- Semantic Search and Information Retrieval: Search engines powered by NeSy AI can go beyond keyword matching, using reasoning-based inference to return more relevant, context-aware results.

- Conversational AI and Chatbots: Symbolic reasoning allows AI chatbots to understand intent, context and implied meanings, leading to more natural, human-like interactions.
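As a rough illustration of the fact-based, explainable reasoning described for question answering, the following sketch applies two hand-written rules to a tiny knowledge base and returns a justification with each answer. The facts, the rules and the function names `forward_chain` and `answer` are hypothetical; a production system would pair a neural component that parses the question with a far larger knowledge base.

```python
# Tiny hypothetical knowledge base of (subject, predicate, object) triples.
FACTS = {
    ("Olympia", "capital_of", "Washington State"),
    ("Washington State", "part_of", "United States"),
}


def forward_chain(facts):
    """Apply two simple rules until no new facts appear.

    Rule 1: X capital_of Y              =>  X located_in Y
    Rule 2: X located_in Y, Y part_of Z =>  X located_in Z
    Returns a dict mapping each known fact to a short explanation.
    """
    derived = dict.fromkeys(facts, "given")
    changed = True
    while changed:
        changed = False
        current = list(derived)
        for s, p, o in current:
            new = []
            if p == "capital_of":
                new.append(((s, "located_in", o), f"{s} is the capital of {o}"))
            if p == "located_in":
                for s2, p2, o2 in current:
                    if p2 == "part_of" and s2 == o:
                        why = f"{s} is in {o}, which is part of {o2}"
                        new.append(((s, "located_in", o2), why))
            for fact, why in new:
                if fact not in derived:
                    derived[fact] = why
                    changed = True
    return derived


def answer(question, facts=FACTS):
    """Return (supported, explanation) for a question posed as a triple."""
    derived = forward_chain(facts)
    if question in derived:
        return True, derived[question]
    return False, "no supporting fact or rule"


print(answer(("Olympia", "located_in", "United States")))
print(answer(("Olympia", "capital_of", "United States")))
```

The first question is answered through a two-step inference with its reasoning attached. The second is declined because nothing in the knowledge base supports it, which is the behavior one would want in place of a fluent but fabricated answer.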

Neurosymbolic AI's Future: Innovation With Caution

While NeSy AI presents a promising step forward, its development comes with technical and ethical challenges. Integrating neural and symbolic methods requires significant computational resources, and maintaining interpretability and transparency remains a major concern. Additionally, as AI systems become more autonomous, the need for ethical safeguards and fairness audits grows more pressing.

To ensure responsible AI development, institutions must:

- Expand diverse and representative training datasets to prevent model biases.

- Implement explainability tools to ensure transparent decision-making in NLP applications.

- Establish governance policies that define ethical AI usage in education, healthcare and legal systems.

Neurosymbolic AI marks a significant advancement in natural language processing, addressing the fundamental limitations of traditional deep learning by merging data-driven learning with structured reasoning. Our research suggests that integrating neural networks with symbolic AI enhances NLP applications, providing greater accuracy, adaptability and contextual awareness.

The question we must now ask is not whether AI can understand language, but rather how we can ensure it does so fairly, accurately and ethically. For those interested in going deeper, our full research article details the methodologies and implications of neurosymbolic AI in NLP.
