OpenAI just released Study Mode, their latest educational AI tool. I watched some slick promotional videos showcasing "personalized learning" and "higher-order thinking." The marketing is impressive. The underlying pedagogy is educational malpractice.

I analyzed three separate Study Mode interactions from OpenAI's own promotional materials. What I found should concern every educator who cares about genuine learning. I’m not cherry-picking these as failures. These are the actual examples OpenAI chose to showcase their educational vision.

When Students Ask "Why Does This Matter?"

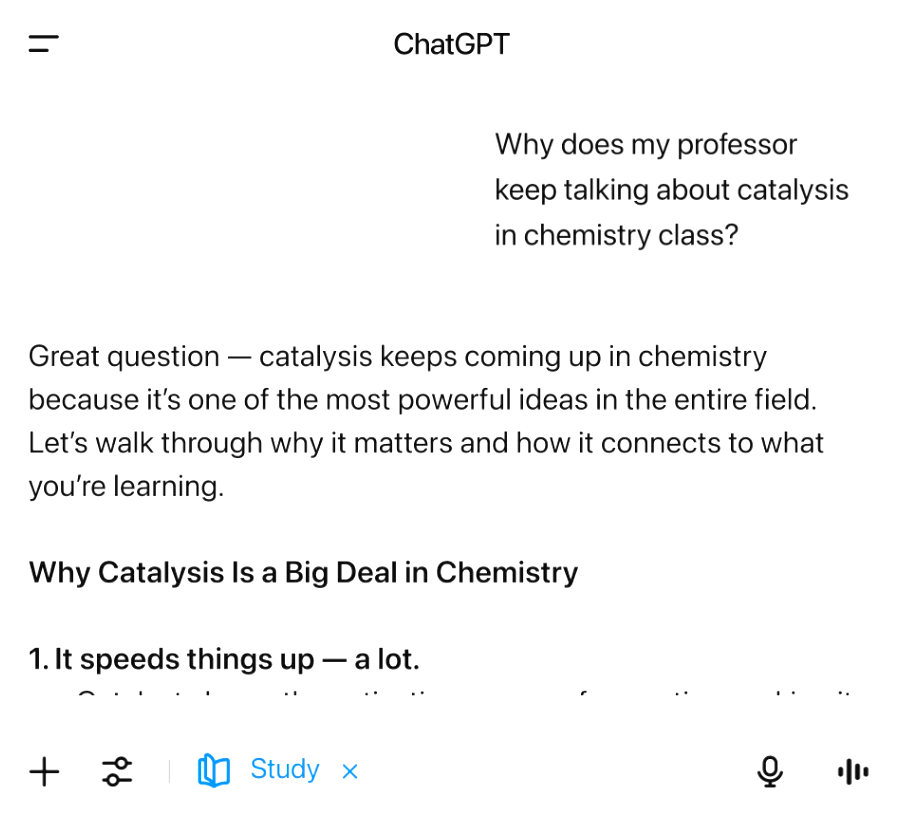

In one Study Mode interaction, a student asks ChatGPT: "Why does my professor keep talking about catalysis in chemistry class?"

This is a motivation question. The student isn't confused about catalysis mechanics. They're questioning its relevance. They need to understand why this concept matters before diving deeper into technical details.

ChatGPT's response? A technical deep-dive into equilibrium reactions and activation energy: "Catalysts affect the rate of reaction, not the position of equilibrium. Let's dig into that and build from what you already know. Quick Check: What do you remember about equilibrium?"

This response demonstrates complete blindness to student motivation. Research in Self-Determination Theory shows that students need to understand relevance and purpose before engaging with content. When a student expresses confusion about why they're learning something, piling on more technical content increases cognitive load and deepens disconnection.

A skilled teacher would have addressed the relevance question first, connecting catalysis to everyday experiences and explaining why it appears throughout chemistry. That approach validates the student's question before adding complexity.


The Quiz Trap

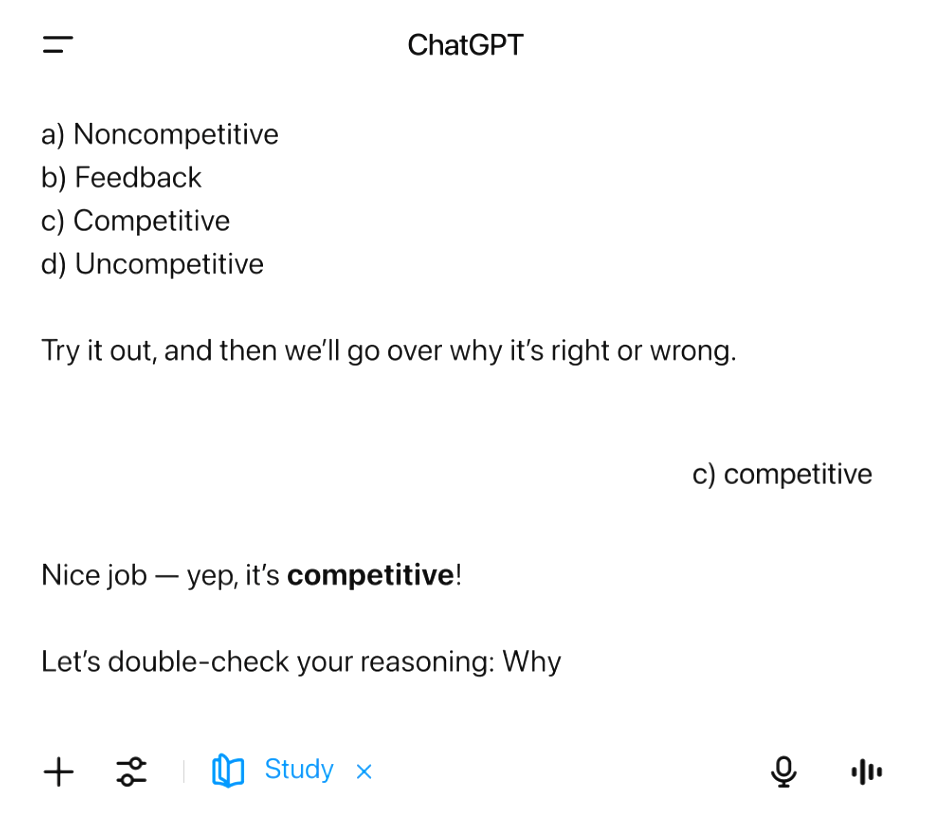

The second interaction reveals another fundamental misunderstanding. When a student says "quiz me," ChatGPT immediately generates a multiple-choice question about enzyme inhibitors:

"Which type of inhibitor binds to the active site and can be overcome by adding more substrate? a) Noncompetitive b) Feedback c) Competitive d) Uncompetitive"

The question appears rigorous but lacks any connection to the student's current learning context or conceptual understanding.

OpenAI might push back and argue this demonstrates good pedagogy because it does follow up with checking for understanding. But look closer at what's actually being assessed. The follow-up question asks the student to explain why their answer is right according to established biochemical principles. The student provides the expected reasoning ("because it increases the chance of the substrate to bind"), receives validation and moves on.

Genuine comprehension checking would ask questions like these: When is competitive inhibition useful in drug design? How does this principle explain why some medications become less effective over time? Can you think of a situation where you'd want to encourage rather than prevent competitive inhibition?

These questions require students to apply concepts, make connections across domains and grapple with real-world complexity. Instead, Study Mode rewards students for demonstrating they can reproduce textbook explanations. This is the kind of shallow learning that makes humans replaceable by algorithmic systems.

Procedures Without Purpose

The third interaction shows ChatGPT helping with finance homework, walking a student through Net Present Value calculations:

"Present Value of Year 1 = 15,000 / (1 + 0.08)¹
Step 1: What's the present value of the first year's $15,000?
Let's start with: 15,000 / (1.08)¹
Can you calculate that for me and tell me what you get?"

This looks like effective tutoring until you realize what's missing: conceptual understanding. ChatGPT jumps straight into procedural steps without establishing why this matters in business decision-making or what these calculations actually represent. Students learn to execute operations without understanding their purpose. If this is what students are trained on, they become vulnerable to being replaced by AI systems.
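The arithmetic ChatGPT walks through is trivial; what the interaction skips is what the resulting number means for a decision. The minimal Python sketch below makes that explicit. Only the 8% rate and the $15,000 Year 1 cash flow come from the quoted interaction. The $40,000 upfront cost, the three-year horizon, and the function names are hypothetical, chosen purely for illustration.

```python
# A minimal sketch of the Net Present Value (NPV) arithmetic from the example above.
# The 8% rate and $15,000 Year 1 cash flow come from the quoted interaction;
# the $40,000 upfront cost and three-year horizon are hypothetical.


def present_value(cash_flow: float, rate: float, year: int) -> float:
    """Discount a cash flow received `year` years from now into today's dollars."""
    return cash_flow / (1 + rate) ** year


def net_present_value(initial_cost: float, cash_flows: list[float], rate: float) -> float:
    """Sum the discounted future cash flows and subtract the upfront investment.

    A positive result means the project earns more than the required rate of
    return; a negative result means the money would do better elsewhere.
    """
    discounted = sum(
        present_value(cf, rate, year)
        for year, cf in enumerate(cash_flows, start=1)
    )
    return discounted - initial_cost


if __name__ == "__main__":
    # The step the student was asked to compute: 15,000 / (1.08)^1
    print(round(present_value(15_000, 0.08, 1), 2))  # 13888.89

    # Hypothetical project: $40,000 upfront, $15,000 per year for three years at 8%
    npv = net_present_value(40_000, [15_000, 15_000, 15_000], 0.08)
    print(f"NPV: {npv:,.2f}")
    print("Accept" if npv > 0 else "Reject")
```

Under these assumed numbers the NPV comes out slightly negative (about -$1,344), so the project would not clear the 8% hurdle. That accept-or-reject judgment is the business meaning a purely procedural walkthrough never reaches.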

The Deeper Problem

These interactions reveal critical blind spots. OpenAI's systems cannot distinguish between technical questions and relevance questions. When students express confusion about purpose, Study Mode responds with more content complexity rather than motivational scaffolding.

The system also prioritizes correct answers and step-by-step execution over conceptual understanding and meaningful connections. Students learn to perform understanding rather than develop it.

These are more than technical glitches. They reflect a fundamental misunderstanding of how learning works. Education isn't content delivery optimized for engagement metrics. It's the messy, human process of building understanding through sustained inquiry, intellectual struggle and meaningful connection.

OpenAI is positioning itself as an education expert while demonstrating profound ignorance of basic learning principles. Its $23 million partnership with the American Federation of Teachers gives it unprecedented influence over educational policy and practice.


Educator Agency

This matters because students are natural pattern recognizers. If AI systems reward the performance of learning over actual learning, students will optimize for AI approval rather than genuine understanding. They'll learn to game educational algorithms instead of developing the adaptive intelligence needed for complex problem-solving. We're training a generation to defer to algorithmic judgment precisely when human thinking matters most.

Educators, not tech companies, should define what good educational technology looks like. OpenAI should invite experienced teachers and learning scientists into its development process, not just to test product launches but to shape design decisions. The company needs to understand that engagement isn't learning, that motivation precedes complexity and that genuine understanding requires struggle. A chatbot optimized for smooth answers can't supply that struggle on its own.

We, as educators or business leaders, can’t rely on OpenAI to make these choices. We cannot allow companies with no educational expertise to shape learning policy through marketing campaigns and corporate partnerships. Students need to be able to think independently, ask meaningful questions and combine human judgment with technological tools.

Study Mode's promotional materials reveal a company that mistakes educational theater for actual learning. That's not just a bad product. It's a threat to human intellectual development. Clear away the marketing noise and give students something worth thinking about. Everything else follows naturally.
