We’ve all heard the AI horror stories.

The airline chatbot that told a customer about a bereavement fare policy that didn’t exist, which the airline then had to honor. The lawyer who used ChatGPT to draft a brief citing legal precedents the system had invented, which the lawyer didn’t check before submitting it to the judge. And Google’s Bard chatbot claiming during a demo that the James Webb Space Telescope took the first photo of a planet outside our solar system, a claim off by almost two decades and a blunder that wiped about $100 billion off the tech giant’s market value.

Stories like these give enterprises the fidgets about AI.

“LLM hallucinations or fabrications are still a top concern among all types of organizations,” said Tim Law, research director for artificial intelligence and automation for IDC. “In discussions with enterprises, it is always mentioned as a top concern in mission-critical business functions, and especially in domains like healthcare, financial services and other highly regulated industries. Hallucinations are still an inhibitor for adoption in many enterprise production use cases.”

How AI Hallucinations Happen

Like parrots, AI systems don’t know what they’re saying or drawing. Generative AI works with a sophisticated prediction system that basically guesses what sounds right — a more complex version of the Google autocomplete parlor game. So like college students faced with a blue book for an exam they didn’t study for, the AI system does the best it can by producing something that sounds plausible.

That’s by design: AI’s creativity and versatility are what make it useful, within reason. “I think these systems, if you train them to never hallucinate, they will become very, very worried about making mistakes and they will say, ‘I don’t know the context’ to everything,” Jared Kaplan, co-founder and chief science officer of Anthropic, told the Wall Street Journal. “A rock doesn’t hallucinate, but it isn’t very useful.”

But while Kaplan has a point, enterprises want to trust what AI systems produce. “The creativity and versatility of generative AI (GenAI) are a double-edged sword,” according to one Gartner report. “Though they serve as powerful features to generate attractive outputs in certain scenarios, their excessive pursuit in GenAI design and implementation introduces a higher likelihood of GenAI behavioral risks and undesired outputs such as hallucinations.”
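The autocomplete comparison earlier in this section can be made concrete with a deliberately tiny sketch: a bigram model that only counts which word tends to follow which and always picks the most frequent one. Real LLMs are large neural networks, not word counters, so treat this strictly as an analogy; the corpus and function names are invented for illustration. Notice that it produces a fluent sentence that appears nowhere in its training text and contradicts it.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training sentences."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def autocomplete(follows: dict[str, Counter], prompt: str, max_words: int = 10) -> str:
    """Greedily append the most frequent next word. Nothing here checks truth."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no known continuation, so stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Toy training data: the telescope only ever photographed a galaxy.
corpus = [
    "the telescope took the first photo of a galaxy",
    "the rover took the first photo of a planet",
    "the probe took the first photo of a planet",
]
model = train_bigrams(corpus)
print(autocomplete(model, "the telescope"))
# the telescope took the first photo of a planet
```

Because "planet" follows "a" more often than "galaxy" does, the model confidently pairs the telescope with a planet: plausible-sounding, statistically favored, and wrong according to its own data.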


How Can Companies Prevent Hallucinations?

Preventing AI hallucinations requires a multi-pronged approach — from vendor-level safeguards to enterprise prompting strategies.

What Vendors Are Doing

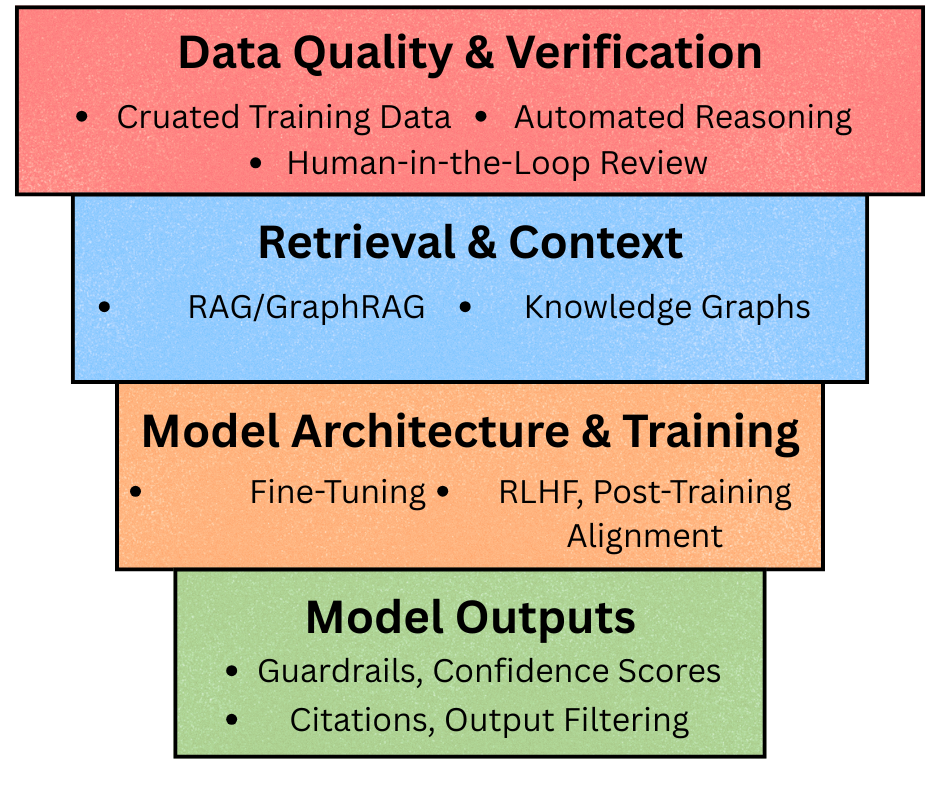

Companies like Anthropic, Google and Microsoft are working to keep their AI systems from being too creative. “Vendors are investing heavily at all levels of the technology stack to address the issue,” Law said. “New tools and techniques for identifying, filtering, remediating and ultimately preventing hallucination are being introduced at a rapid pace. They are attacking it from many perspectives including data, retrieval, model architecture, model training and post-training techniques, advanced reasoning and orchestration.”

Moreover, AI platforms are increasingly grounding AI in an enterprise context, said Darin Stewart, research vice president in Gartner's software engineering and applications practice. “Knowledge graphs, both explicitly defined and LLM-generated, provide additional explicit and structured information to the LLM and provide a means to validate the generated output,” he explained. “In addition, confidence scores and citation links are increasingly common.”
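As a rough illustration of using a knowledge graph to validate output, the sketch below stores facts as (subject, predicate, object) triples and scores an answer by the share of its claims the graph supports. The graph contents and function names are hypothetical. It also assumes the claims have already been extracted from the model’s prose as triples, a step often handled by another model in practice. It shows the shape of the check, not any vendor’s product.

```python
Triple = tuple[str, str, str]

# Hypothetical enterprise knowledge graph: a set of (subject, predicate, object) facts.
KNOWLEDGE_GRAPH: set[Triple] = {
    ("travel_policy", "covers", "contractors"),
    ("travel_policy", "effective_year", "2023"),
    ("expense_policy", "covers", "employees"),
}


def validate_claims(claims: list[Triple], graph: set[Triple]) -> tuple[list[Triple], list[Triple]]:
    """Split claims into those the graph confirms and those it does not."""
    supported = [c for c in claims if c in graph]
    unsupported = [c for c in claims if c not in graph]
    return supported, unsupported


def support_score(claims: list[Triple], graph: set[Triple]) -> float:
    """Fraction of claims backed by the graph: a crude confidence signal."""
    if not claims:
        return 0.0
    supported, _ = validate_claims(claims, graph)
    return len(supported) / len(claims)


# Claims assumed to have been extracted from a model's answer.
claims = [
    ("travel_policy", "covers", "contractors"),
    ("travel_policy", "effective_year", "2021"),  # not in the graph
]
print(support_score(claims, KNOWLEDGE_GRAPH))  # 0.5
print(validate_claims(claims, KNOWLEDGE_GRAPH)[1])
```

An unsupported triple means "unverified," not necessarily "false": the graph may simply be incomplete. That is why a score like this works better as a trigger for review or a citation request than as an automatic verdict.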

Amazon, for example, is turning to automated reasoning, which uses logical proofs to establish how a computer system will behave. Automated reasoning checks, which the company added to Amazon Bedrock Guardrails in December 2024, apply mathematical, logic-based verification to the information a model generates.
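To give a feel for logic-based verification, here is a toy sketch: a hypothetical HR rule is written as code instead of prose, and a model’s paraphrase of that rule is checked against every case in a bounded input space. Exhaustive checking like this is only a very limited cousin of production automated reasoning, which typically relies on solvers and proofs rather than enumeration. None of these names reflect the Bedrock API.

```python
from itertools import product
from typing import Optional


def policy_vacation_days(tenure_years: int, full_time: bool) -> int:
    """Hypothetical HR rule: 20 days for full-time staff with 2+ years, otherwise 10."""
    return 20 if tenure_years >= 2 and full_time else 10


def claim_holds(tenure_years: int, full_time: bool) -> bool:
    """The model's generated claim: 'anyone with at least 2 years of tenure gets 20 days'."""
    if tenure_years >= 2:
        return policy_vacation_days(tenure_years, full_time) == 20
    return True  # the claim makes no statement about shorter tenure


def find_counterexample(max_tenure: int = 50) -> Optional[dict]:
    """Check every (tenure, full_time) case up to max_tenure; return the first that breaks the claim."""
    for tenure, full_time in product(range(max_tenure + 1), (True, False)):
        if not claim_holds(tenure, full_time):
            return {"tenure_years": tenure, "full_time": full_time}
    return None  # claim is consistent with the rule on the whole bounded domain


print(find_counterexample())
# {'tenure_years': 2, 'full_time': False}
```

The counterexample is the useful output. Instead of a vague "this might be wrong," the check pinpoints the exact case (a part-time employee with two years of tenure) where the generated statement contradicts the rule.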

Google, meanwhile, offers models fine-tuned for specific industries, such as MedLM for healthcare. Fine-tuning involves further training a pre-existing AI model on domain-specific data, such as a company’s own data, to make its responses more accurate and relevant, anchoring the model’s outputs in trusted, domain-specific information.
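Much of the practical work in fine-tuning is assembling vetted domain examples. The sketch below turns hypothetical question-and-answer pairs into JSON Lines, with one training example per line. It uses a chat-style "messages" layout similar to what some hosted fine-tuning services accept (OpenAI’s, for example). Schemas differ between vendors, including Google’s, so treat the record shape as an assumption to check against your provider’s documentation.

```python
import json

# Hypothetical system instruction and expert-vetted Q&A pairs.
SYSTEM_PROMPT = (
    "You answer questions about Acme's travel policy. "
    "If the policy doesn't say, reply that you don't know."
)

PAIRS = [
    ("Does the travel policy cover contractors?", "Yes. The travel policy covers contractors."),
    ("Can I book first class?", "The travel policy doesn't say, so I don't know."),
]


def to_chat_record(question: str, answer: str) -> dict:
    """One training example: system, user and assistant turns."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize one JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(to_chat_record(q, a)) for q, a in pairs) + "\n"


# In practice you would write this string to a .jsonl file for upload.
print(to_jsonl(PAIRS), end="")
```

Including examples where the right answer is "I don’t know" is a deliberate choice. It teaches the tuned model that declining is an acceptable response when the domain data is silent.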

What Enterprises Should Do

According to Gartner, enterprises first need to decide how creative they want their AI to be. Sometimes, hallucinations are acceptable and even desired. For example, Nobel Prize winner David Baker used AI hallucinations to help create new kinds of proteins.

Gartner categorizes AI tasks into four types:

| AI category | Example use cases |
|---|---|
| Creative and versatile | General purpose chatbots, autonomous agents |
| Creative and specialized | Creative writing, art |
| Versatile but less creative | Helpdesk chatbots, enterprise knowledge insights |
| Specialized and less creative | Grammar checkers, translators |

Enterprises can also consider techniques such as retrieval-augmented generation (RAG), which, like fine-tuning, gives AI systems access to more reliable information. Rather than retraining the model, however, RAG retrieves relevant documents at query time and supplies them to the model along with the prompt. Microsoft, for one, is enhancing RAG with knowledge graphs, an approach it calls GraphRAG.
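Here is a minimal sketch of the RAG flow, assuming a small in-memory document store. It ranks documents by word overlap with the question, a stand-in for the embedding-based vector search production systems usually use. It then builds a prompt that tells the model to answer only from the retrieved passages and cite them. The documents, IDs and function names are hypothetical, and the actual model call is left out.

```python
import re

# Hypothetical policy documents, keyed by ID.
DOCUMENTS = {
    "policy-12": "Bereavement fares must be requested before travel, not refunded afterward.",
    "policy-3": "Checked bags cost $35 each on domestic flights.",
    "policy-8": "Refund requests must be filed within 90 days of travel.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase word set; crude, but enough to rank by overlap."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(query_words & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:k]


def build_grounded_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Put retrieved passages, tagged with their IDs, in front of the question."""
    passages = retrieve(query, documents, k)
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their IDs in brackets. "
        "If the sources don't contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


print(build_grounded_prompt("Are bereavement fares refunded after travel?", DOCUMENTS))
```

The explicit "say you don’t know" instruction matters as much as the retrieval. Without it, a model given irrelevant passages may still produce a confident answer.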

And, in the same way that companies add guardrails to AI system inputs to prevent, for example, racist and violent queries, both IDC and Gartner recommend that companies add guardrails to AI system outputs as well.

“Risk and compliance teams, working with AI developers and data scientists, need to ensure that these risks are well understood and are managed in an enterprise governance framework,” Law said, adding that technical and policy guardrails help prevent hallucinations from exposing enterprises to potential liabilities. “They also need to work with vendors who are committed to trustworthy AI and are investing in techniques to reduce these risks.”

Third-party vendors such as Aporia, Arize, Amazon Bedrock Guardrails, Databricks, Guardrails AI, IBM, Microsoft Guidance, Nvidia NeMo and WhyLabs offer guardrail products, Gartner noted. However, it warned, “Guardrails are not a silver bullet to ensure your GenAI solution’s behavior and accuracy. Guardrails may not be able to detect all possible and unforeseen inputs or outputs.” In fact, some guardrail products use AI themselves, which means they can make mistakes of their own.
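Output guardrails can be as simple as rule-based checks run on every response before it reaches a user. The hypothetical sketch below assumes answers are expected to cite source IDs in brackets, such as [policy-3]. It flags answers that cite no source, cite a source that wasn’t supplied, or contain numbers that don’t appear in the sources. Failing answers are swapped for a safe fallback. As Gartner cautions, this is no silver bullet: it can’t tell whether a correctly cited sentence actually says what the source says.

```python
import re

CITATION = re.compile(r"\[([\w-]+)\]")  # e.g. [policy-3]
NUMBER = re.compile(r"\d+(?:\.\d+)?")  # integers and decimals

FALLBACK = "I can't confirm that from our policies. Let me connect you with an agent."


def check_output(answer: str, sources: dict[str, str]) -> list[str]:
    """Return guardrail violations; an empty list means the answer passes."""
    problems = []
    cited = set(CITATION.findall(answer))
    if not cited:
        problems.append("no sources cited")
    for doc_id in sorted(cited - set(sources)):
        problems.append(f"unknown source [{doc_id}]")
    # Drop citation tags first so digits inside IDs aren't treated as claims.
    body = CITATION.sub("", answer)
    source_numbers = set(NUMBER.findall(" ".join(sources.values())))
    for number in NUMBER.findall(body):
        if number not in source_numbers:
            problems.append(f"number {number} not found in sources")
    return problems


def guarded_reply(answer: str, sources: dict[str, str]) -> str:
    """Pass the answer through only if every check succeeds."""
    return answer if not check_output(answer, sources) else FALLBACK


sources = {"policy-3": "Checked bags cost $35 each on domestic flights."}
print(guarded_reply("A checked bag costs $35 [policy-3].", sources))  # passes unchanged
print(check_output("A checked bag costs $25 [policy-9].", sources))
# ['unknown source [policy-9]', 'number 25 not found in sources']
```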

Finally, enterprises should examine their prompting, Stewart said, adding that every prompt must exhibit three basic qualities:

- Clarity

- Context

- Constraints

“The request must be clearly and concisely expressed with no extraneous wording,” he explained. Contextual information, such as that retrieved through RAG, must be supplied to inform and ground the request. “And any necessary constraints or limitations must also be made explicit.” Other techniques, such as personas, seed terms and reasoning style, also help, he added.
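Stewart’s three qualities map naturally onto a prompt template. The sketch below is one hypothetical way to assemble a prompt: an optional persona, explicit context and explicit constraints, followed by a single clearly stated request. The section labels and wording are illustrative choices, not a standard.

```python
from typing import Optional


def build_prompt(
    request: str,
    context: list[str],
    constraints: list[str],
    persona: Optional[str] = None,
) -> str:
    """Assemble a prompt with clarity (one explicit request), context and constraints."""
    if not request.strip():
        raise ValueError("the request must be stated explicitly")
    sections = []
    if persona:
        sections.append(f"You are {persona}.")
    if context:
        sections.append("Context:\n" + "\n".join(f"- {item}" for item in context))
    if constraints:
        sections.append("Constraints:\n" + "\n".join(f"- {rule}" for rule in constraints))
    sections.append(f"Request: {request.strip()}")
    return "\n\n".join(sections)


prompt = build_prompt(
    request="Summarize the refund policy in two sentences.",
    context=["Refund requests must be filed within 90 days of travel."],
    constraints=[
        "Use only the context above.",
        "If the context is insufficient, say so instead of guessing.",
    ],
    persona="a customer-support assistant for an airline",
)
print(prompt)
```

Putting the request last, after the context and constraints, keeps it from being buried. It also makes missing pieces easy to spot when reviewing prompts.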

“Interacting with a LLM is an iterative process,” said Stewart. “Don’t hesitate to provide additional information, instructions or guidance to the LLM and ask it to revise or refine its response. Also, challenge the LLM’s reasoning or assertions if they do not seem right. Ask it to describe the reasoning and resources that went into producing its response.” This last technique is related to chain-of-thought prompting, in which the model is asked to lay out its reasoning step by step.
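The iterative back-and-forth Stewart describes amounts to keeping the whole conversation history and appending follow-ups that ask for reasoning or challenge a claim. In this sketch, `chat` is a hypothetical stand-in for whatever client your model provider offers: a function that takes the message history and returns the reply. Only the history bookkeeping is shown.

```python
from typing import Callable

Message = dict[str, str]
ChatFn = Callable[[list[Message]], str]


def converse(chat: ChatFn, opening: str, follow_ups: list[str]) -> list[Message]:
    """Send the opening request, then each follow-up, keeping full history so the model can revise."""
    history: list[Message] = []
    for turn in [opening, *follow_ups]:
        history.append({"role": "user", "content": turn})
        reply = chat(history)
        history.append({"role": "assistant", "content": reply})
    return history


FOLLOW_UPS = [
    "Walk me through the reasoning and the sources behind that answer.",
    "That date doesn't match what I've seen elsewhere. Re-check it and revise if needed.",
]


# A dummy model for demonstration: it just numbers its replies.
def dummy_chat(history: list[Message]) -> str:
    return f"reply {len(history) // 2 + 1}"


for message in converse(dummy_chat, "When was the first exoplanet photographed?", FOLLOW_UPS):
    print(f"{message['role']}: {message['content']}")
```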

Other Anti-Hallucination Techniques

- Chain of verification, in which the model drafts a response, plans verification questions to fact-check it, answers those questions independently so the answers are not biased by other responses, and then generates its final, verified response

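- According-to prompting, directing the AI system to base its answer on, and attribute it to, a specified trusted source (for example, by phrasing the question as “According to Wikipedia, …”)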

- Iterative prompting, or asking a series of small, direct questions instead of one open-ended one

Researchers have found that these techniques reduce hallucinations. And it should go without saying that humans need to check references and verify the accuracy of whatever the AI system comes up with.
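The chain-of-verification steps in the list above can be wired together as a handful of model calls. In this sketch, `llm` is a hypothetical function that sends a single prompt to a model and returns its text, and the prompt wording is illustrative. The key design point is step 3: each verification question goes out in a fresh prompt that doesn’t include the draft, so the answers aren’t biased by it. A scripted stand-in model lets the control flow run without a real LLM.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: one prompt in, model text out


def chain_of_verification(llm: LLM, question: str) -> str:
    # 1. Draft a baseline response.
    draft = llm(f"Answer the question: {question}")

    # 2. Plan verification questions that fact-check the draft, one per line.
    plan = llm(f"List questions, one per line, that would fact-check this answer:\n{draft}")
    checks = [line.strip() for line in plan.splitlines() if line.strip()]

    # 3. Answer each check independently, without showing the draft.
    evidence = [(check, llm(check)) for check in checks]

    # 4. Generate the final response, revised in light of the evidence.
    findings = "\n".join(f"Q: {q}\nA: {a}" for q, a in evidence)
    return llm(
        f"Question: {question}\n"
        f"Draft answer: {draft}\n"
        f"Verification results:\n{findings}\n"
        "Rewrite the draft, correcting anything the verification results contradict."
    )


def scripted_llm(prompt: str) -> str:
    """Canned replies so the control flow can be run without a real model."""
    if prompt.startswith("Answer the question"):
        return "The James Webb telescope took the first exoplanet photo."
    if prompt.startswith("List questions"):
        return "Which telescope took the first image of an exoplanet?\nIn what year was it taken?"
    if prompt.startswith("Question:"):
        return "The first exoplanet image came from the Very Large Telescope in 2004."
    return "Very Large Telescope, 2004."  # answers to the individual checks


print(chain_of_verification(scripted_llm, "Which telescope took the first exoplanet photo?"))
```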

If You Lead AI Astray, It’ll Follow

It boils down to this: don’t ask questions in a way that encourages AI to hallucinate.

Parents, for example, are advised not to ask guilty toddlers “Who ate the cookies?” because that encourages the toddler to make up a story about it rather than telling the truth. Similarly, if you ask an AI system about a world where humans and dinosaurs coexist, the AI system will confidently — and inaccurately — tell you all about it, because it calculates that’s what you want to hear.

“Hallucinations are all but unavoidable with the current state of the technology, but they can be minimized with both technical and manual interventions,” Stewart said. “While hallucination mitigation methods are improving, they will never fully eliminate the possibility of confabulation.”