As generative AI systems become integral to so many facets of work and home life, one prompting technique can make AI models more context-aware, adaptable and human-like in tone: persona prompting.

Table of Contents

- What Is Persona Prompting?

- Why Persona Prompting Matters

- Key Use Cases for Persona Prompting

- 3 Best Practices: How to Craft an Effective Persona Prompt

- Risks and Ethical Considerations

- Persona Prompting as a Brand and Governance Tool

What Is Persona Prompting?

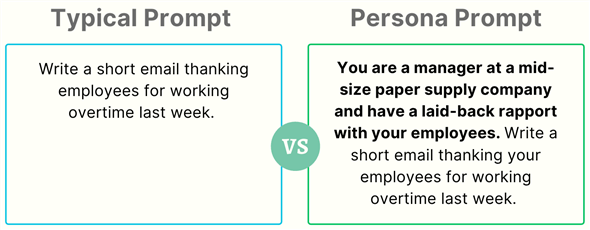

Persona prompting is a specific type of prompt engineering that involves telling the AI model "who" it is.

For instance, a typical prompt may ask for a short email thanking employees for working overtime. A persona prompt would add more detail, telling the model to "act as a manager" when writing that email.
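To make the difference concrete, here is a minimal Python sketch that builds both versions as chat messages. It assumes the role/content message format that many chat-model APIs accept and places the persona in a system message; `build_messages` is a hypothetical helper, and no model is actually called.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]

def build_messages(task: str, persona: Optional[str] = None) -> List[Message]:
    """Build chat messages in the common role/content format.

    Hypothetical helper for illustration: it only assembles messages.
    """
    messages: List[Message] = []
    if persona:
        # The persona tells the model "who" it is before it sees the task.
        messages.append({"role": "system", "content": f"Act as {persona}."})
    messages.append({"role": "user", "content": task})
    return messages

task = "Write a short email thanking employees for working overtime."
plain_prompt = build_messages(task)
persona_prompt = build_messages(task, persona="a manager")

print(plain_prompt)    # one user message
print(persona_prompt)  # a system persona, then the same user message
```

The task text is identical in both cases; only the added persona changes what the model is told about its own role.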

Persona prompting can work with any type of identity or perspective, such as:

- A cybersecurity analyst

- A die-hard vegan

- A math tutor

- A storyteller

- A growth marketer

- A philosopher

And the list goes on and on.


Why Persona Prompting Matters

When you use persona prompts, that persona or identity acts as a cognitive filter that guides not only what the AI model says, but also how it reasons and communicates.

“Persona prompting isn’t just about giving the model instructions; it’s about giving it a role to play,” said IEEE senior member Shrinath Thube. “It brings tone and perspective into the response, not just task accuracy.”

This prompting style also injects a layer of personality, tone and situational reasoning into AI-generated text. Where instruction-based prompts might produce technically correct but sterile results, persona prompting lets users control the voice and intent behind those results. That difference becomes crucial in scenarios where tone or professional framing matters as much as factual accuracy.

“[Persona prompting] shines wherever tone matters,” Thube said. “You want the AI to sound empathetic, calm or motivating — not just get the answer right but say it the right way for the audience.”

Key Use Cases for Persona Prompting

Customer Service and Support

Assigning the model a role like “calm, empathetic service representative” helps keep the tone consistent across diverse customer interactions. The persona helps the model stay professional even when handling frustration or emotional topics.

Education and Training

A persona such as “patient math tutor” or “Socratic mentor” changes how the model teaches. It leans on probing questions or scaffolding techniques suited to that teaching philosophy, which can boost engagement and comprehension.

Professional and Technical Communication

Setting a persona as “Fortune 500 executive,” “legal advisor” or “cybersecurity analyst” helps the model produce structured, jargon-appropriate and audience-aligned writing.

Cross-Functional Simulation

Organizations can model interactions between personas — say a CISO and a CFO — to simulate decision-making discussions or policy reviews from multiple expert viewpoints.
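A simulation like this can be sketched as a loop in which each persona takes a turn and sees the discussion so far. In the Python sketch below, `simulate_discussion`, the persona wording and the `generate` callback are all hypothetical; `fake_generate` stands in for a real model call so the example runs offline.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical: a function that sends messages to whatever chat model you use
# and returns its reply as text.
Generate = Callable[[List[Message]], str]

def simulate_discussion(personas: Dict[str, str], topic: str,
                        rounds: int, generate: Generate) -> List[str]:
    """Let each persona respond in turn, seeing the transcript so far."""
    transcript: List[str] = []
    for _ in range(rounds):
        for name, persona in personas.items():
            so_far = "\n".join(transcript) or "(none yet)"
            messages = [
                {"role": "system", "content": persona},
                {"role": "user", "content":
                    f"Topic: {topic}\nDiscussion so far:\n{so_far}\n"
                    f"Give the {name}'s view."},
            ]
            transcript.append(f"{name}: {generate(messages)}")
    return transcript

def fake_generate(messages: List[Message]) -> str:
    # Offline stand-in for a real model call; it just echoes the persona.
    return f"[reply as: {messages[0]['content']}]"

personas = {
    "CISO": "You are a CISO focused on security risk.",
    "CFO": "You are a CFO focused on cost and return on investment.",
}
for line in simulate_discussion(personas, "Adopting a new vendor", 1, fake_generate):
    print(line)
```

Because each persona sees the running transcript, later turns can respond to earlier viewpoints rather than answering in isolation.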


3 Best Practices: How to Craft an Effective Persona Prompt

What steps go into crafting an effective persona prompt?

1. Be Specific

“A strong prompt doesn’t just say, ‘You are a marketing expert,’” Thube noted. “It says, ‘You are a senior marketing strategist known for concise, data-driven recommendations that balance creativity with ROI.’”

That specificity helps the model align its reasoning and vocabulary to the expected professional behavior.

2. Find a Good Balance

Thube advised avoiding both extremes: the sweet spot lies between over-specifying the persona and oversimplifying it. He noted that a good persona prompt will:

- Set the tone: e.g., warm but direct

- Give context: e.g., speaking to a stressed customer

- Focus on the goal: e.g., help them understand with ease

“Too much detail, and the model can get stuck. Too little, it becomes generic,” Thube said.
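One way to apply these guidelines is to fill in a small template with a specific role plus the tone, context and goal described above. This Python sketch is illustrative only: `PersonaSpec` and its field names are assumptions, not a standard, and the sample values are taken from examples in this article.

```python
from dataclasses import dataclass

@dataclass
class PersonaSpec:
    """Hypothetical template for a persona prompt."""
    role: str     # who the model is; be specific (best practice 1)
    tone: str     # how it should sound
    context: str  # who it is talking to, and in what situation
    goal: str     # what the response should achieve

    def to_prompt(self) -> str:
        # One short sentence per element keeps the prompt specific
        # without burying the model in detail.
        return (
            f"You are {self.role}. "
            f"Tone: {self.tone}. "
            f"Context: you are {self.context}. "
            f"Goal: {self.goal}."
        )

spec = PersonaSpec(
    role="a calm, empathetic service representative",
    tone="warm but direct",
    context="speaking to a stressed customer",
    goal="help them understand with ease",
)
print(spec.to_prompt())
```

Keeping each element to a single field makes it easy to spot when one part has grown too long or been left vague.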

3. Stay Consistent

To keep the model's behavior coherent across long interactions, reinforce the persona, either by restating the role periodically or by embedding it in the system instructions.
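The sketch below combines both reinforcement approaches in Python under stated assumptions: the persona goes in an opening system message, and a reminder is re-inserted before every `restate_every`-th user message. The helper name and the reminder interval are hypothetical, and not every chat API accepts system messages in the middle of a conversation.

```python
from typing import Dict, List

Message = Dict[str, str]

def with_persona(history: List[Message], persona: str,
                 restate_every: int = 4) -> List[Message]:
    """Prepend the persona as a system message and restate it periodically."""
    if restate_every < 1:
        raise ValueError("restate_every must be at least 1")
    out: List[Message] = [{"role": "system", "content": persona}]
    user_turns = 0
    for msg in history:
        if msg["role"] == "user":
            user_turns += 1
            # Re-insert a reminder before every restate_every-th user message.
            if user_turns % restate_every == 0:
                out.append({"role": "system", "content": f"Reminder: {persona}"})
        out.append(msg)
    return out

history: List[Message] = []
for i in range(1, 5):
    history.append({"role": "user", "content": f"Question {i}"})
    history.append({"role": "assistant", "content": f"Answer {i}"})

messages = with_persona(history, "You are a patient math tutor.", restate_every=2)
print(sum(m["role"] == "system" for m in messages))  # the original plus two reminders
```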

Risks and Ethical Considerations

Persona-driven AI can be powerful — but it also comes with risks.

The main ethical risk, according to Ashmore, is misrepresentation. “Users may over-trust outputs because a persona sounds authoritative, not because it’s accurate.” For example, an AI adopting a “medical expert” or “financial advisor” persona might project confidence while offering flawed advice.

Thube agreed that transparency is critical, advising developers to clearly signal to users that they are interacting with an AI and to avoid having the AI assume roles that require human accountability.

Other risks include cultural mismatches — where tone or phrasing suited to one region feels inappropriate elsewhere — and emotional overfamiliarity, as users grow attached to empathetic AI personas.

To mitigate these issues, both experts recommend:

- Strong disclosure policies

- Bias audits

- Limits on emotionally charged or authority-based personas
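As an illustration of the last two recommendations, a team might run every persona prompt through a simple check before deployment. In this hypothetical Python sketch, the restricted-role list and the disclosure sentence are placeholders that a real policy would define; plain substring matching is a deliberately crude stand-in for proper review.

```python
# Hypothetical policy values; a real policy would come from legal and
# compliance review, not a hard-coded tuple.
RESTRICTED_ROLES = ("medical expert", "financial advisor")
DISCLOSURE = "Always make clear that you are an AI assistant, not a human professional."

def apply_persona_policy(persona: str) -> str:
    """Reject restricted authority personas and append a disclosure line."""
    lowered = persona.lower()
    for role in RESTRICTED_ROLES:
        if role in lowered:
            raise ValueError(f"Persona uses a restricted authority role: {role!r}")
    return f"{persona} {DISCLOSURE}"

print(apply_persona_policy("You are a patient math tutor."))
try:
    apply_persona_policy("Act as a financial advisor.")
except ValueError as err:
    print(err)
```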


Persona Prompting as a Brand and Governance Tool

Beyond individual tasks, persona prompting can serve as a lightweight form of brand governance.

Organizations can encode tone, vocabulary and behavioral norms into persona prompts. For example, a persona might describe a customer success representative for a premium financial services firm who speaks with warmth, precision and discretion. Used consistently, that identity helps keep AI-generated messages in the brand voice, reducing the need for heavy-handed editing or templating. Thube likens it to a “brand voice guide” for AI.

“If your company tone is bold and witty or calm and formal, the persona prompt helps the model stay on-brand in emails, chats or scripts, even across multiple use cases,” he said.
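In practice, that can mean writing the brand persona once and reusing it for every channel. This Python sketch assumes the role/content chat-message format; `BRAND_PERSONA` and `brand_messages` are hypothetical names, and the persona text paraphrases the financial services example above.

```python
from typing import Dict, List

# Hypothetical brand persona, written once and shared by every channel.
BRAND_PERSONA = (
    "You are a customer success representative for a premium financial "
    "services firm. You speak with warmth, precision and discretion."
)

def brand_messages(channel: str, request: str) -> List[Dict[str, str]]:
    # Only the channel note varies; the brand voice text stays identical.
    return [
        {"role": "system", "content": f"{BRAND_PERSONA} Channel: {channel}."},
        {"role": "user", "content": request},
    ]

email = brand_messages("email", "Confirm that the client's transfer is complete.")
chat = brand_messages("chat", "Explain when the monthly statement arrives.")
print(email[0]["content"])
```

Keeping the persona in one place means a change to the brand voice propagates to every channel at once.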

Still, both experts stressed that persona prompting doesn't replace ongoing governance. Organizations must regularly test for tone drift, bias and compliance to ensure that generated content remains appropriate.
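Part of that testing can be automated. The Python sketch below runs a fixed set of sample outputs through simple rule-based checks and reports which ones drift from the intended tone. The banned phrases and the exclamation-mark limit are hypothetical rules chosen for illustration; keyword checks catch only crude drift and complement, rather than replace, human review and bias audits.

```python
import re
from typing import Dict, List

# Hypothetical house rules for a calm, formal brand voice.
BANNED_PHRASES = ["guaranteed returns", "trust me", "lol"]
MAX_EXCLAMATIONS = 1

def tone_issues(text: str) -> List[str]:
    """Return the rule violations found in one generated message."""
    issues: List[str] = []
    lowered = text.lower()
    for phrase in BANNED_PHRASES:
        # Word boundaries avoid matching inside longer words.
        if re.search(r"\b" + re.escape(phrase) + r"\b", lowered):
            issues.append(f"banned phrase: {phrase!r}")
    if text.count("!") > MAX_EXCLAMATIONS:
        issues.append("too many exclamation marks")
    return issues

def drift_report(samples: Dict[str, str]) -> Dict[str, List[str]]:
    """Map each sample ID to its issues, omitting samples that pass."""
    report: Dict[str, List[str]] = {}
    for sample_id, text in samples.items():
        issues = tone_issues(text)
        if issues:
            report[sample_id] = issues
    return report

samples = {
    "email_1": "Thank you for your patience. Your transfer is complete.",
    "chat_7": "Trust me, these are guaranteed returns!!",
}
print(drift_report(samples))
```

Re-running the same samples after every persona or model change turns tone drift into something a team can track over time.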