The Gist

- AI momentum. Rapid advancements in AI have been led by tech giants and OpenAI's ChatGPT.

- Public trust. Despite the adoption of AI across industries, public trust in the technology has declined.

- Potential AGI. Artificial general intelligence, which in theory could perform any task a human can, may be revealed within the next year, according to some predictions.

In the last six months, artificial intelligence has taken center stage, driven by advancements from tech giants like Google and Microsoft in AI-enhanced search and chat functionalities. These developments built upon the momentum initiated by OpenAI's ChatGPT, which made waves in the AI community following its release on Nov. 30, 2022.

Since the introduction of OpenAI's large language model, numerous generative AI applications have been developed, many of which are based on or inspired by the work that OpenAI pioneered. Even as businesses across multiple industries have rapidly adopted AI, public trust in the technology has declined. As a recent CMSWire article pointed out, rather than being excited about this technology, customers are apprehensive. This article will look at the reasons why customers may view AI with apprehension, and how brands can help to alleviate that distrust.

Who Is Worried About AI? And Why?

Tim Colbert, strategy and content director for the marketing agency Adduco Communications, told CMSWire that the perception problem with AI is three-fold. "First, is popular culture, where our technological creations end up turning on human beings. Second, ChatGPT seemed to come out of nowhere for many and suddenly it looked like a threat to how we teach our kids, do our jobs, and live our lives. Third, it underscores the fears of Big Tech as witnessed by the growing mistrust of the social media giants," explained Colbert.

Consumers are concerned that AI is coming for their jobs, creating the content they read, watch and see, and potentially becoming sentient, with a primary goal of taking over humanity.

It’s not just consumers that are worried. In March, Elon Musk and thousands of AI leaders signed an open letter urging AI organizations to pause the training of AI systems more powerful than GPT-4 for at least six months, citing “risks to society.” In May, Sam Altman, CEO and co-founder of OpenAI, spoke with members of Congress and urged them to regulate AI because of the risks inherent in the technology. It’s more than just movies such as "The Terminator" that have fueled fears of sentient or harmful AI. In August 2022, a survey found that roughly half of the AI researchers who responded believe that there is at least a 10% chance that AI could cause the literal extinction of humanity.

More recently, AI industry leaders and researchers signed a statement warning of the "extinction" risk posed by AI. The statement said that “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” and was signed by Altman, the Godfather of AI Geoffrey Hinton, executives and researchers from Google DeepMind and Anthropic, Microsoft’s Chief Technology Officer Kevin Scott, security expert Bruce Schneier, and the musician Grimes, among others.

There has also been a lot of press about whether generative AI and large language models are capable of sentience, much of it based on conversations in which users push the AI until it “hallucinates,” or makes things up, behavior that is largely due to limitations in the AI's understanding of a particular topic. There have also been some unsettling instances where the Bing AI chat seemed to go off the rails. In one, it compared an AP journalist (who had asked it to explain previous mistakes) to Hitler, referred to the journalist as “short, ugly, with bad teeth” and told them, "You are one of the most evil and worst people in history."

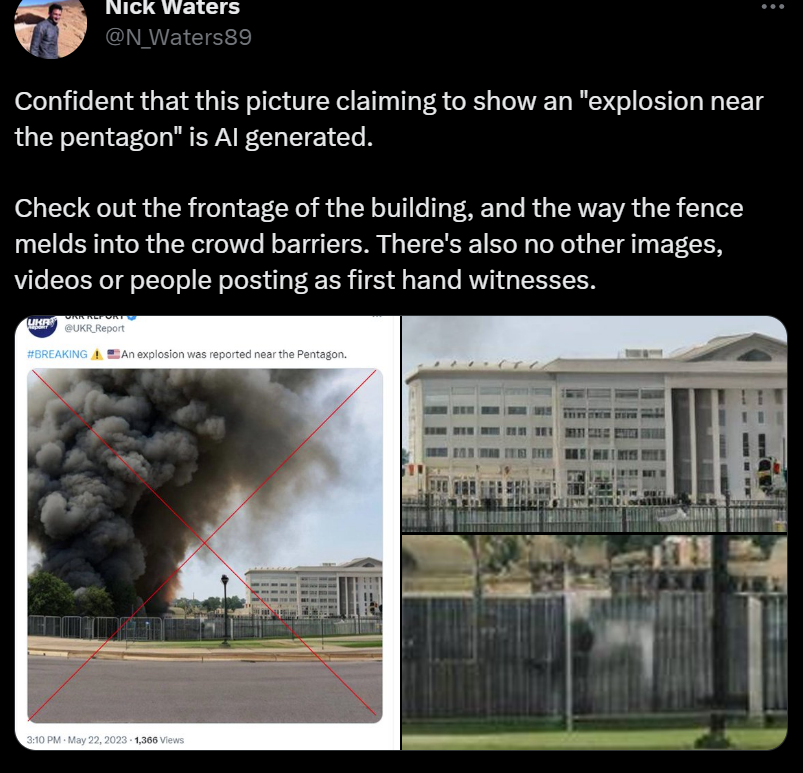

AI-generated images, voice recordings and videos of famous people, many of which are presented as authentic, are known as deepfakes. It is possible to create a video of a famous person or politician saying reprehensible things, in their own voice, and such a video would be difficult to tell from the real thing. Additionally, bad actors can use AI to write fictitious news stories that they then post on social media, often with calamitous results. In one recent instance, an AI-generated image of an explosion near the Pentagon was posted on Facebook and Twitter, where it spread misinformation and triggered a brief dip in the stock market.

The Concept of Artificial General Intelligence

There are predictions that within the next year, AI organizations such as OpenAI may reveal that they have created Artificial General Intelligence (AGI), which is defined as “the representation of generalized human cognitive abilities in software so that, faced with an unfamiliar task, the AGI system could find a solution.” AGI could essentially perform any task that a human is capable of, and it would likely do it better. It has been speculated that GPT-5, which is predicted to be released in the latter part of 2023, could potentially be AGI. Once AGI exists, the next step is likely to be “superintelligence,” which is defined as a “hypothetical agent that possesses intelligence far surpassing that of the brightest and most gifted human minds.”

Although all this conjecture sounds like something out of a science-fiction movie (and it is), AI has the potential to either transform society or destroy it, so consumers are not entirely wrong to be apprehensive about such a disruptive technology.

“It is vital that we do not fear AI but instead, continue to focus on human oversight of these systems, AI as an amplifier of humanity and not a replacement of it, and to work toward building systems that are reliable, responsible and trustworthy for the humans who use them,” said Vishal Sikka, CEO and founder of Vianai Systems. Sikka is in a position to understand the complexities of AI, given that Vianai Systems is all about the development of human-centered AI for the enterprise.

Although much of this is speculative, AGI holds the potential to transform the world by tackling some of humanity's most complex challenges. It would likely be able to devise innovative strategies to combat climate change, create solutions to end global poverty, and determine novel treatments for persistent diseases such as cancer, heart disease and AIDS. By outpacing human capabilities in these fields, AGI could also contribute to conflict resolution, potentially reducing the incidence of war.

Beyond problem-solving, AGI could act as an enhancer of human creativity. By providing us with new tools and insights, it could be the catalyst for breakthroughs in fields ranging from art to engineering, fueling an unprecedented surge in innovation.

AGI also has the potential to revolutionize our entertainment and educational experiences. It could create hyper-realistic simulations and interactive games that push the boundaries of our imagination. Additionally, it could create immersive learning experiences that transform the way we learn, making education more engaging and personalized. AGI could also drive exploration in ways we've yet to conceive. With AGI designing and controlling advanced simulations, we could virtually venture into unexplored frontiers, from the deepest oceans to far-flung galaxies.

Although change is inevitable, many people are sensitive to change in their lives and will push back against anything they feel may affect their job, their purpose in life, and society in general. Given that sensitivity and the speed with which AI has evolved in the past year, consumers can hardly be blamed for being concerned about the future of AI.

The Use of AI Actually Alleviates Uncertainty

Although AI has been used by businesses for decades, until recently consumers have not had the opportunity to interact with the technology themselves. Generative and conversational AI are now available to the masses, and many people who do not consider themselves technically adept are dipping their toes into the waters of AI, seeing what it is capable of, how it can be used in their lives, and what its impact may be for them personally. This is a very good thing, as it helps people to understand that AI is not going to overthrow humanity, and that it can in fact have a positive impact on our lives.

AI is currently at the stage that the internet was at when AOL became mainstream. Initially, people saw AOL as a site for news, chatting, exchanging images and software, and staying in touch with one’s friends and family. Eventually, AOL became a portal through which one could begin to explore other websites, games and other unique applications. Finally, other “portals,” such as GEnie and CompuServe, became available and the initial phase of the internet came to be. AI is likely to evolve in a similar fashion, starting with conversational AI, moving to generative AI, and continuing to improve and become more useful in our daily lives.

Generative AI tools such as Bing, Bard and ChatGPT can effectively be used to:

- Create images, text and blog content.

- Do research.

- Generate legal documents.

- Write stories, poetry and even lyrics.

- Create business plans.

- Act as a travel agent.

- Create an education curriculum.

- And many more tasks.

A recent Pew Research report indicated that although 58% of Americans have heard of ChatGPT, only 14% have used it for entertainment, to learn something new, or for their work. The more the public uses generative AI, the more comfortable people will feel with the use of AI by the brands they do business with.

To help alleviate the negative connotations of AI, businesses need to reiterate the positive aspects of the technology. "First is to highlight all the good things that AI can do and is doing. Countless companies use AI to combat human trafficking, ensure communication systems, promote medical innovations, and the list goes on," said Colbert. "These stories need to be told. Second, draw analogies. Technological disruption has been going on since the birth of the steam engine, and this is the next exciting chapter of progress. Third, transparency. Every new innovation comes with upsides and downsides. It is more than OK to talk about potential downsides of AI."

Transparency in AI Is Vital

There has already been much discussion about the importance of transparency in AI. Explainable AI (XAI) is a type of AI that can more easily be understood by humans because the mechanisms that are involved can be explained. Additionally, Ethical AI can be seen as beneficial and positive because it was designed with ethics and morality in mind.

Unfortunately, the majority of generative AI applications are complicated to the point that it is extremely challenging to explain their inner workings to someone who is not in the field of AI. In a recent CMSWire article, the methodology of how AI generates images is explained in terms that can be mostly understood, especially when paired with this comprehensive AI glossary. That said, the inner workings of AI models are likely to become more complicated and difficult to explain, so rather than explaining how they work, brands should focus on being transparent about how they use AI, what it will do to improve the customer experience, and what it will not do.

Deepika Adhikari, technical expert at The Nature Hero, the go-to destination for tech enthusiasts, told CMSWire that businesses that use AI should prioritize open communication with their stakeholders and customers. "They should clarify how AI is applied, why it exists, and what advantages it might have. Available communication aids in demystifying the technology and fostering trust," said Adhikari. Additionally, AI systems should be programmed to justify their choices and deeds. “Businesses can address concerns about biases or a lack of transparency by clarifying the underlying workings of AI systems. This increases consumer trust and gives them a better knowledge of how AI is employed.”

Final Thoughts on AI's PR Problem

The apprehension surrounding AI is not unfounded, given the rapid advancements and the potential impact it could have on jobs, privacy and even existential risks. AI indeed has a PR problem, but it's one that can be solved through transparency, ethical development, public engagement and a focus on the positive potential of AI.