Google this week announced its latest AI update — Gemini 2.5 — which marks a strategic leap for enterprise-grade AI, offering substantial improvements in reasoning, multimodal comprehension and efficiency.

Gemini 2.5 introduces core enhancements that make it better suited for complex business workflows, data synthesis and real-time decision support. With deeper integration into Google Workspace and AI development tools, Gemini 2.5 positions itself as a more formidable workhorse for enterprise productivity, automation and generative performance at scale.

What’s New in Gemini 2.5

Gemini 2.5 introduces a set of technical upgrades that signal a more mature and enterprise-ready phase for Google’s large language models (LLMs). While not branded as a full-number release, this iteration improves reasoning and multimodal understanding and reduces latency, making it more capable of handling real-world, business-critical tasks.

The standout improvement in this release is Gemini 2.5’s enhanced reasoning ability. According to Google, the model now performs better on complex, multi-step prompts — including code generation, math and logic-intensive queries. This positions it as a stronger tool for tasks such as data analysis, enterprise search, software development and legal or financial modeling.

In terms of multimodal functionality, Gemini 2.5 continues to support text and image inputs but delivers improved synthesis when handling longer, mixed-format content. It processes greater token lengths more efficiently and demonstrates stronger coherence across varied inputs — a major step forward for teams working with unstructured data, reports or knowledge bases.

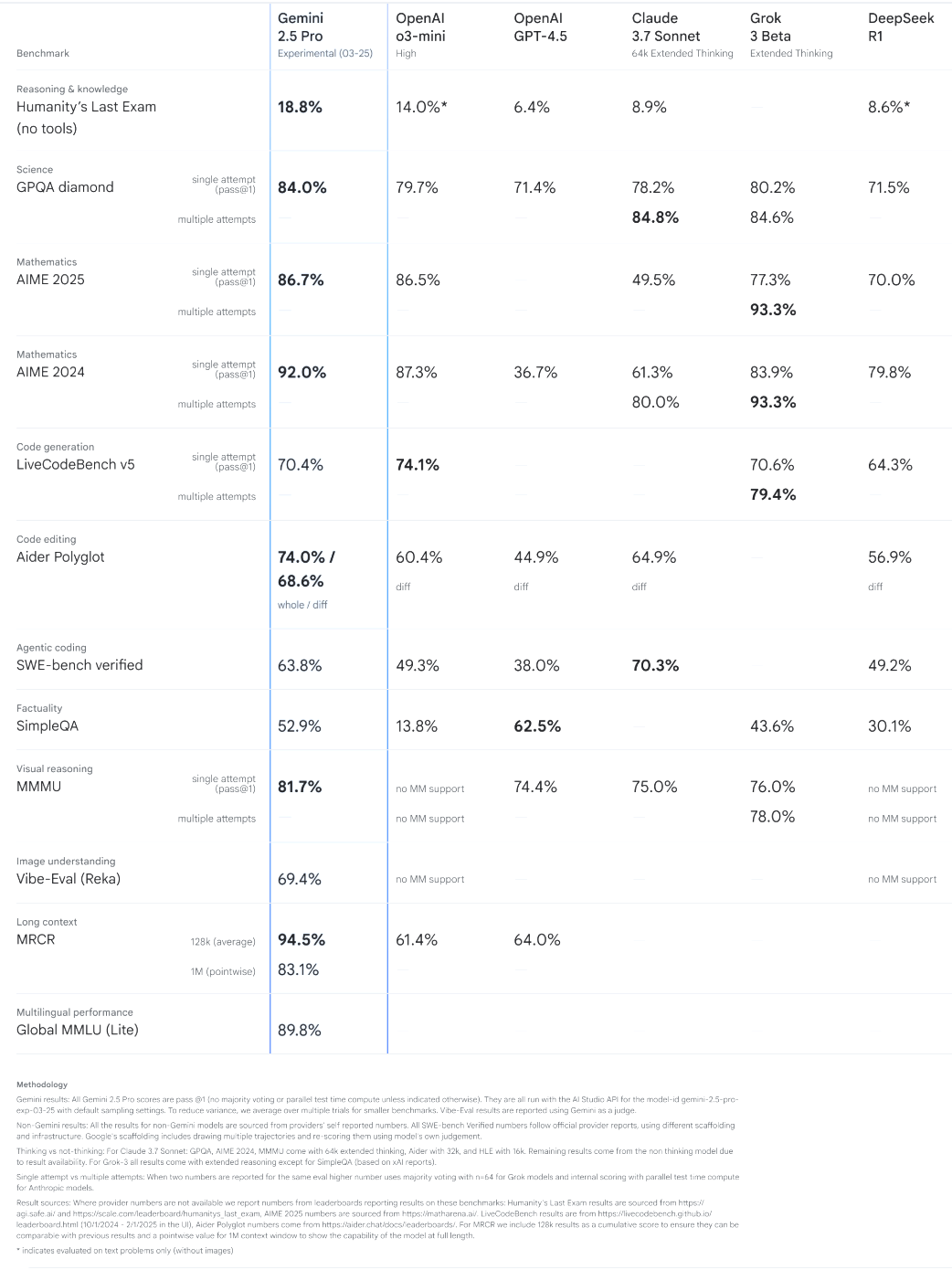

Performance has also been a focus. Google reports reduced latency and improved stability across longer sessions, while maintaining cost-efficiency when scaled in cloud environments. That means enterprises can expect more responsive AI with lower compute strain, which is especially important for high-volume applications or embedded use cases across support, marketing and product teams. Google also published new benchmark results with this release, including MRCR (multi-round coreference resolution) evaluations. MRCR measures how well an AI model tracks and correctly interprets references to people, objects or ideas across multiple exchanges in a conversation.
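
To make the idea of tracking references across turns concrete, here is a toy scoring harness in Python. It is only an illustrative sketch and not Google's MRCR benchmark: the `CoreferenceCase` structure, the sample conversations, the entity list and the `most_recent_entity` baseline are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CoreferenceCase:
    """One test item: a conversation, a reference in it, and what it refers to."""
    turns: list[str]
    reference: str  # the ambiguous word in the final turn, e.g. "it"
    expected: str   # the entity the reference should resolve to


def score(resolver: Callable[[CoreferenceCase], str],
          cases: list[CoreferenceCase]) -> float:
    """Return the fraction of cases where the resolver names the expected entity."""
    if not cases:
        return 0.0
    correct = sum(1 for case in cases if resolver(case) == case.expected)
    return correct / len(cases)


def most_recent_entity(case: CoreferenceCase) -> str:
    """Naive baseline: pick the known entity mentioned latest before the final turn."""
    entities = ["invoice", "contract"]  # hypothetical entity list
    history = " ".join(case.turns[:-1]).lower()
    # str.rfind returns the last position of each entity, or -1 if absent.
    return max(entities, key=history.rfind)


# Hypothetical conversations. In the second one, "it" refers back to an
# entity that is NOT the most recently mentioned.
CASES = [
    CoreferenceCase(
        turns=["Please review the contract.",
               "Also, the invoice arrived today.",
               "Can you forward it to finance?"],
        reference="it",
        expected="invoice",
    ),
    CoreferenceCase(
        turns=["The invoice has an error.",
               "Legal is still reading the contract.",
               "Fix it before sending the payment."],
        reference="it",
        expected="invoice",
    ),
]

accuracy = score(most_recent_entity, CASES)
```

The naive baseline gets the first conversation right and the second wrong, which is the kind of gap a multi-round evaluation is meant to expose. A real evaluation would swap `most_recent_entity` for calls to the model under test.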

While Gemini 2.5 isn’t yet widely available for direct public use, Google is testing it with select developers in Vertex AI (Google Cloud’s machine learning platform) and plans broader rollout soon. For enterprises that previously found Gemini promising but not production-ready, this release may prove to be a turning point.

Gemini 2.5 Feature Breakdown

| Category | Functionality & Benefits |
| --- | --- |
| Reasoning Power | Improved handling of multi-step prompts, logic-heavy tasks and code generation |
| Multimodal Output | Enhanced synthesis of text and image outputs; better coherence across mixed-format content |
| Token Efficiency | Greater capacity for long-form content and document analysis with faster processing |
| Latency & Performance | Reduced lag and increased stability for high-volume enterprise use |
| Workspace Integration | Tighter functionality in Gmail, Docs and Sheets, with context-aware drafting and summarizing |
| Vertex AI Integration | Easier deployment, model tuning and governance for enterprise-scale applications |
| AI Studio Access | Supports Duet AI and connects with third-party apps via Google ecosystem |

Gemini’s Enterprise Focus Comes Into View

Gemini 2.5 takes a definitive step toward enterprise-grade AI by aligning its capabilities with the demands of high-volume, mission-critical business workflows. Its enhanced reasoning and improved consistency make it better suited for tasks that require precision, such as interpreting layered documents, generating accurate summaries or providing recommendations based on dynamic inputs.

One of the standout improvements lies in Gemini 2.5’s ability to process real-time data for on-the-fly decision support. Whether used in customer service environments to assist live agents or in operations dashboards to identify anomalies and trends, the model’s responsiveness and contextual awareness now better reflect enterprise expectations for speed and accuracy.

This iteration also shows growing maturity in vertical-specific roles — powering intelligent support agents that understand internal knowledge bases, sales copilots that surface personalized insights and marketing tools that adapt messaging based on user behavior. Gemini 2.5 isn’t just answering questions — it’s interpreting, anticipating and recommending, making it a more reliable AI assistant across departments.

Tighter Ties Across Google Workspace and Cloud

Gemini 2.5’s rollout is accompanied by deeper integration across Google’s most widely used platforms, making it more accessible and impactful for both developers and enterprise users. Now embedded more tightly within Google Workspace, Gemini 2.5 enhances applications like Gmail, Docs and Sheets with stronger reasoning and multimodal understanding. This enables users to draft, summarize and analyze content with greater accuracy and contextual relevance. For enterprise teams, this means smoother workflows and AI that can assist in real-time, within the tools they already use.

At the infrastructure level, Gemini is now fully deployable through Vertex AI. This enables businesses to integrate Gemini 2.5 into custom applications with less friction, using enterprise-grade tools for data governance, model tuning and scalable deployment. With support for grounding and retrieval-augmented generation (RAG), Gemini in Vertex AI is positioned to power everything from internal knowledge agents to customer-facing chat experiences.
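
For readers unfamiliar with RAG, the pattern can be sketched in a few lines of Python: retrieve the passages most relevant to a question, then place them in the prompt so the model answers from that text. This is a minimal illustration under stated assumptions, not Vertex AI's implementation. It calls no Google API, the knowledge base entries are made up, and simple word overlap stands in for the embedding search a production system would likely use.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query and keep the best top_k.

    Word overlap is a stand-in for a real similarity search.
    """
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Highest score first; sorted() is stable, so ties keep their input order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query.
    return [doc for overlap, doc in ranked[:top_k] if overlap > 0]


def build_grounded_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved text."""
    passages = retrieve(query, documents, top_k)
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered sources below.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical internal knowledge base entries.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days of approval.",
    "The support desk is open Monday through Friday.",
    "Enterprise plans include a dedicated account manager.",
]

prompt = build_grounded_prompt("How long do refunds take?", KNOWLEDGE_BASE, top_k=1)
```

The resulting `prompt` string is what would be sent to a model. Keeping retrieval separate from generation is what lets an enterprise update its knowledge base without retraining anything.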

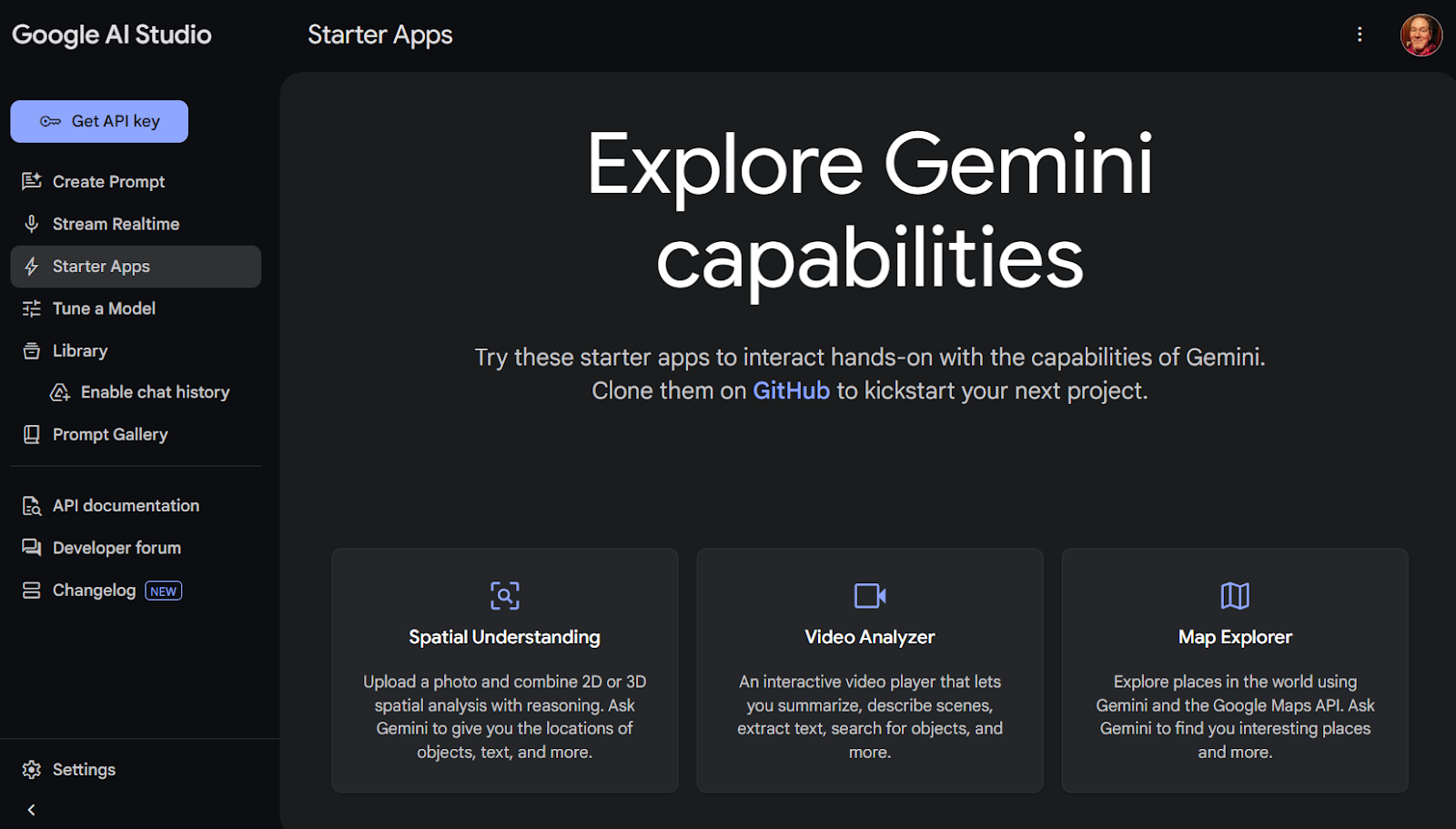

Developers can also access Gemini 2.5 in Google’s AI Studio, where it can be fine-tuned and prototyped for specific business needs. This opens the door for faster testing and rollout of use-case-specific AI assistants, from legal and compliance tools to automated support agents.

Finally, Gemini 2.5 brings new potential for collaborative AI experiences when paired with Duet AI and Google’s broader app ecosystem. Whether integrating into customer support platforms, CRM systems or third-party SaaS apps, Gemini 2.5 provides the flexibility and performance necessary to drive enterprise AI adoption at scale.

How Gemini 2.5 Measures up to Rivals

Gemini 2.5 positions Google as a more formidable player in the highly competitive LLM space, going head-to-head with OpenAI’s GPT-4o, Anthropic’s Claude 3.5 and Grok from xAI. While all of these models offer strong generative capabilities, Gemini 2.5 makes its mark through a blend of enhanced reasoning, multimodal fluency and tight ecosystem integration — especially within Google’s cloud and productivity stack.

Where GPT-4o continues to dominate in raw general-purpose performance and custom GPT functionality, Gemini 2.5 closes the gap with enterprise-aligned features including grounded outputs, extended context windows and easier deployment via Vertex AI. Claude’s strength in contextual memory and safety-focused alignment is well-documented, but Gemini counters with unmatched integration into real-time data sources and a robust framework for document-based reasoning, particularly within Docs, Sheets and Gmail.

Low latency and cost-efficiency at scale are among Gemini 2.5’s standout features. Thanks to infrastructure improvements and model optimization, Gemini 2.5 can deliver fast responses while maintaining quality, an important consideration for businesses integrating AI into high-volume workflows. For developers and enterprises already using Google Cloud, deploying Gemini within existing environments offers a smoother path to ROI than switching to standalone LLM providers.

In terms of momentum, Google is aggressively aligning Gemini 2.5 with enterprise adoption. From native Workspace integrations to developer-friendly tools like AI Studio and broader support across Android and ChromeOS, Google is taking advantage of its platform ubiquity to make Gemini the most accessible and versatile AI model in its class.

As the AI race continues, Gemini’s strength may lie not just in model performance but in Google’s ability to embed it into the daily tools and infrastructure enterprises already rely on.

Where Google’s AI Strategy Goes From Here

Gemini 2.5 marks a notable step forward in Google’s push toward enterprise AI adoption. With improvements in reasoning, multimodal capabilities and tighter integration across its productivity and cloud platforms, the model is clearly designed with business use in mind. While competition from OpenAI and Anthropic remains strong, Google’s strategy of embedding AI into tools many organizations already use could give Gemini an edge as adoption accelerates.