The Gist

- Vision reasoning unlocks new possibilities. Meta’s Llama 3.2 vision models can now reason over images and text, giving marketers new ways to enhance customer experiences.

- Small models, big impact. Meta’s 1B and 3B models are optimized for edge and mobile devices, bringing capable AI directly to compact hardware.

- Llama Stack streamlines AI development. Meta’s Llama Stack introduces APIs that simplify customizing and deploying AI models across multiple platforms, making development easier for marketing teams.

Meta has introduced yet another advancement for its Llama AI models. The latest release, Llama 3.2, adds vision reasoning, a capability that rivals the reasoning features OpenAI announced just a few weeks earlier.

The release follows Meta’s announcement of Llama 3 in April. More than another reminder of the intense competition for market dominance, Llama 3.2 reflects the rapid pace of innovation in model processing among today’s AI leaders. Marketers looking to build AI assistance for customer experience should take note.

The Latest Big Llama Development: Going Small

Ever since it introduced Llama 2, Meta has been building interest among professionals who develop AI-based services with open source large language models.

Meta’s previous update, Llama 3.1, introduced a very large model, the 405B, along with other performance improvements. This time, Llama 3.2 introduces two small models that deliver performance at the opposite end of the scale.

The two small models, 1B and 3B, are lightweight, text-only models designed to fit on edge and mobile devices. They give developers an advanced LLM capability they can build into applications for a tablet or smartphone. Both support a context length of 128K tokens, an important spec as models pack more capability into smaller packages.


A New Vision: Vision Reasoning

The mid-sized models, 11B and 90B, receive the most significant feature advancement. They are the first Llama models to support vision tasks through vision reasoning. Vision reasoning is the ability to “reflect” on the images that appear within a prompt. This means the models can interpret a prompt that combines image and text and reason over both, with a slight bias toward the image, to manage response accuracy.

For marketers, this means a model that can help identify items in an image and compare them with the prompt text, so an AI-powered chatbot can give better service responses.

What sets Llama’s reasoning apart is Meta’s heavy development focus on the image encoder’s role in the prompt response process. Meta trained a set of adapter weights that integrate the pre-trained image encoder into the pre-trained language model. This lets the agentic capabilities of the Llama 3.2 models quickly reason about how documents containing images or other information bear on a given prompt response.
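To make the adapter idea more concrete, here is a toy sketch in plain Python. Meta has described its adapter as a series of cross-attention layers that feed image encoder representations into the language model; the sketch assumes that general pattern, with a text token’s hidden state acting as the query over image-encoder features. The tiny two-dimensional vectors, the weight matrices, the scalar `gate` and all function names are hypothetical illustrations, not Meta’s implementation.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) weight matrix by a length-cols vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_state: Vector, image_feats: Matrix,
                    w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Vector:
    """A text token (query) attends over image-encoder features (keys/values)."""
    q = matvec(w_q, text_state)
    keys = [matvec(w_k, f) for f in image_feats]
    values = [matvec(w_v, f) for f in image_feats]
    scale = math.sqrt(len(q))
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
    weights = softmax(scores)
    # Weighted sum of value vectors, one output dimension at a time.
    return [sum(a * v[i] for a, v in zip(weights, values))
            for i in range(len(values[0]))]


def adapter_layer(text_state: Vector, image_feats: Matrix,
                  w_q: Matrix, w_k: Matrix, w_v: Matrix,
                  gate: float) -> Vector:
    """Residual update: the language model's hidden state is kept as-is and
    a (hypothetical) gated image contribution is added on top."""
    attended = cross_attention(text_state, image_feats, w_q, w_k, w_v)
    return [t + gate * a for t, a in zip(text_state, attended)]


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    text_token = [1.0, 0.0]                   # toy hidden state for one word
    image_patches = [[0.9, 0.1], [0.0, 1.0]]  # toy image-encoder outputs
    print(adapter_layer(text_token, image_patches,
                        identity, identity, identity, gate=0.5))
```

With `gate=0.0` the layer returns the text state unchanged, which illustrates why bolting an adapter onto a pre-trained language model can add image understanding without overwriting what the model already knows about text.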

This reasoning resembles the capability OpenAI touted for its o1 models. OpenAI, however, trained the o1 models with an emphasis on reinforcement learning to perform complex reasoning. As a simple analogy, think of the reasoning differences between Llama 3.2 and OpenAI o1 as two vats of gumbo, each cooked with slightly different seasonings and amounts of the “holy trinity” to create the final dish.

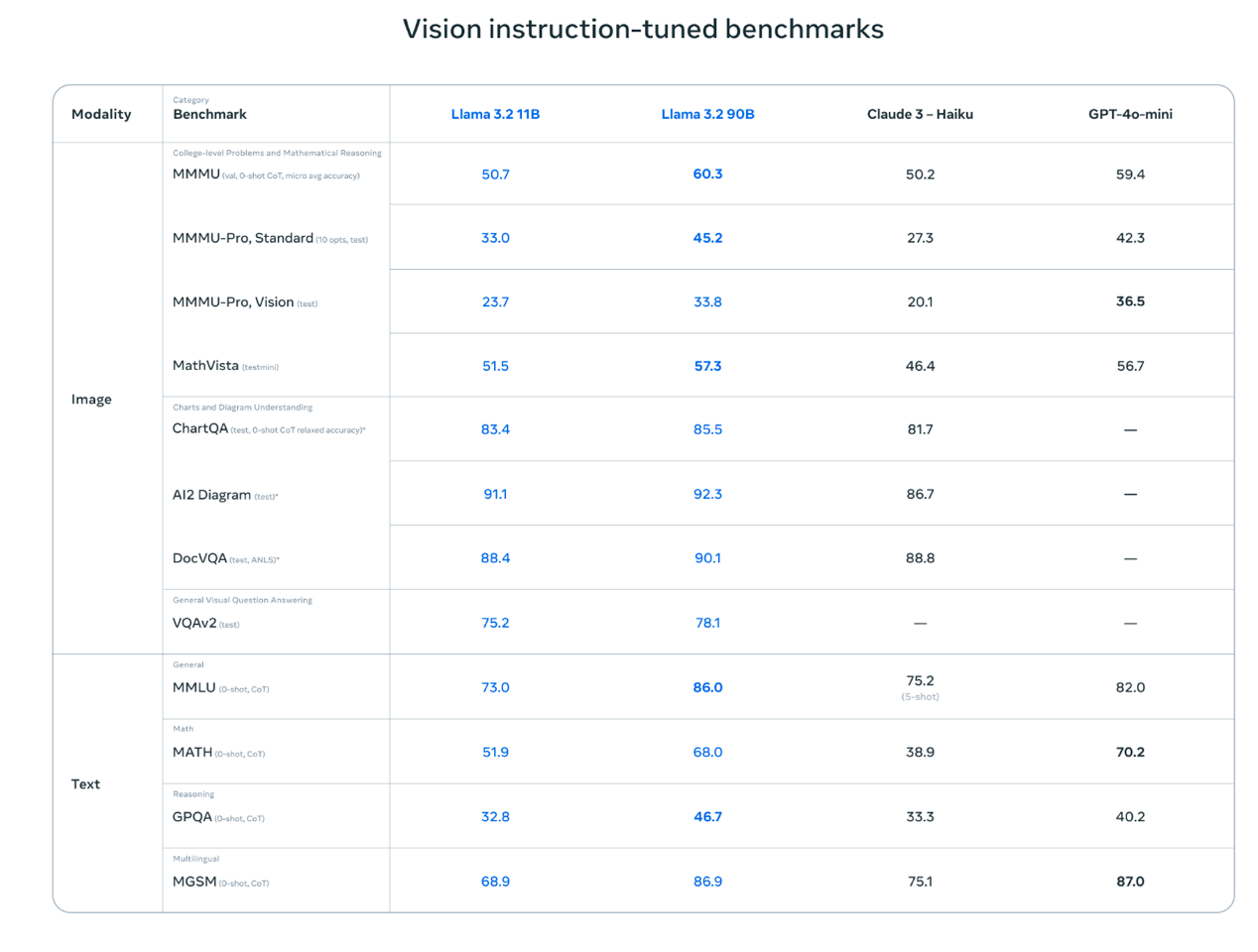

To deliver vision reasoning, Meta had to create a new architecture for the 11B and 90B models that supports image reasoning. According to Meta, the models perform well against comparably sized models from Anthropic’s Claude family and OpenAI’s GPT-4o mini.

Building the Right Stack for Your AI Development

Meta has long pursued a strategy of building an AI application ecosystem. To that end, Meta introduced Llama Stack, a distribution of APIs that simplifies how developers work with Llama 3.2 models in different environments. The purpose of Llama Stack is to establish a standardized interface for customizing Llama models and building agentic applications on top of them.

The APIs are designed to enable a number of services of interest to marketing teams building agentic applications with Llama. The services are available across a variety of environments, including on-premises, cloud and on-device. They can also handle specialized services such as turnkey deployment of retrieval-augmented generation (RAG).
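Llama Stack’s own RAG APIs are not shown here. As a hedged illustration of the general retrieval-augmented generation pattern, the sketch below scores a few hypothetical product-FAQ snippets by word overlap with a customer question and assembles the best matches into a prompt that would then be sent to a Llama model. The FAQ text, the word-overlap scoring (a stand-in for the vector embeddings production RAG systems typically use) and the function names are all assumptions for illustration.

```python
import re
from typing import List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase word set; a crude stand-in for embedding a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing the most words with the question."""
    q_words = tokenize(question)
    # (overlap score, -index) so ties keep the original document order.
    scored: List[Tuple[int, int, str]] = [
        (len(q_words & tokenize(d)), -i, d) for i, d in enumerate(docs)
    ]
    scored.sort(reverse=True)
    return [d for score, _, d in scored[:k] if score > 0]


def build_prompt(question: str, context: List[str]) -> str:
    """Ground the model's answer in the retrieved passages."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the customer's question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    faq = [  # hypothetical knowledge base
        "Orders ship within two business days.",
        "Returns are accepted within 30 days of delivery.",
        "Gift cards never expire.",
    ]
    question = "How many days do I have for returns?"
    print(build_prompt(question, retrieve(question, faq)))
```

In a real deployment, the retrieval step would query an embedding index, and the assembled prompt would go to a Llama 3.2 model through whatever inference API your chosen environment provides.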

Llama 3.2 is now available on a number of platforms, such as HuggingChat, as well as in Meta’s own assistant, Meta AI. Marketing teams working with developers can choose an environment to explore model development according to their needs.