Key Takeaways

- If AI isn't a core operational expense, you're already dangerously out of touch.

- Smart enterprises are using 5+ AI models, playing the field for best-in-class performance on each task. Are you?

- Forget model training; long context windows and new reasoning capabilities are solving complex, agentic use cases.

- AI-native speed and cutting-edge quality are now trumping established trust. Your old advantages are eroding fast.

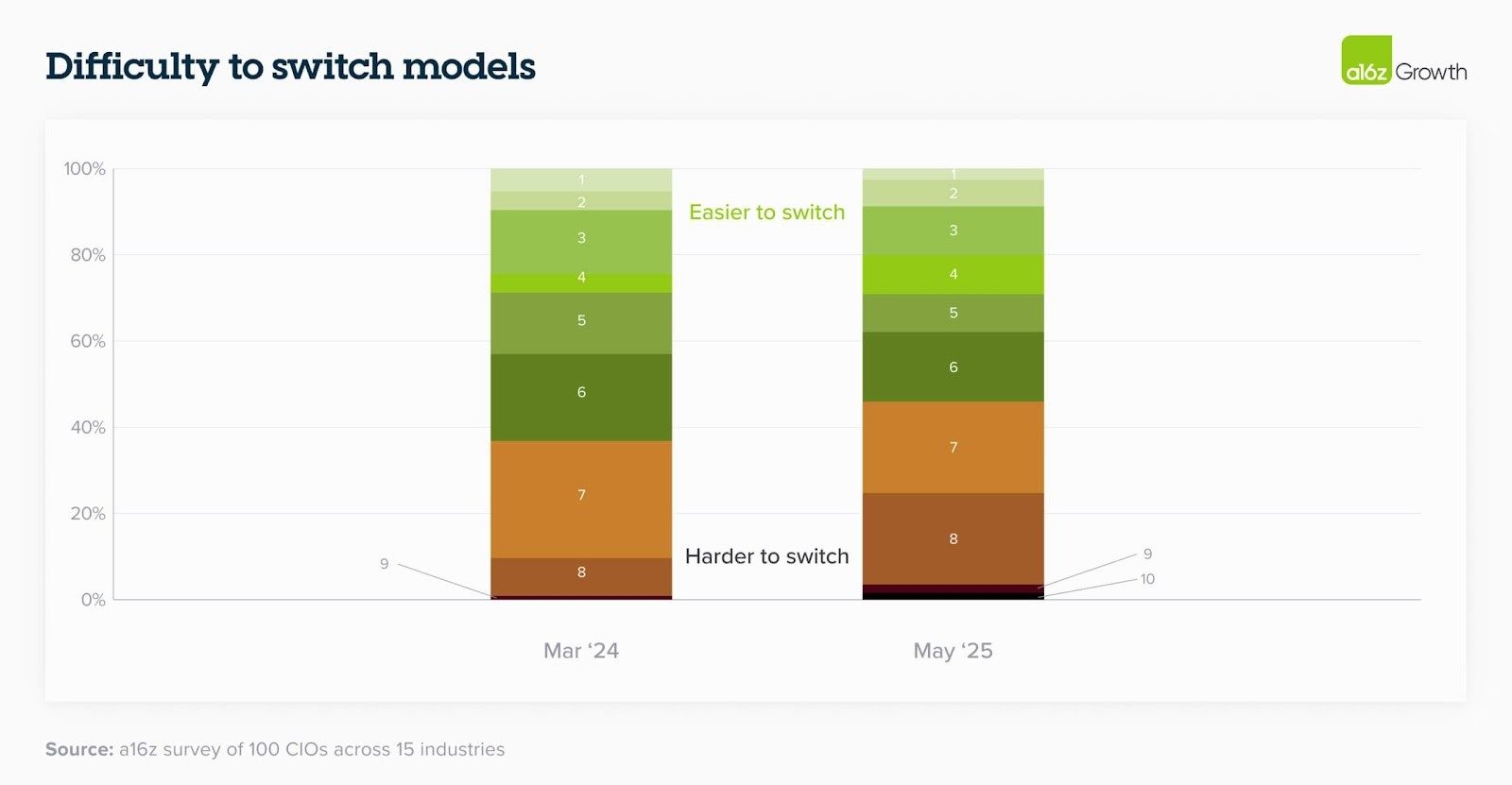

- As AI gets complex, switching costs rise. Choose your agent ecosystem wisely — your future depends on it.

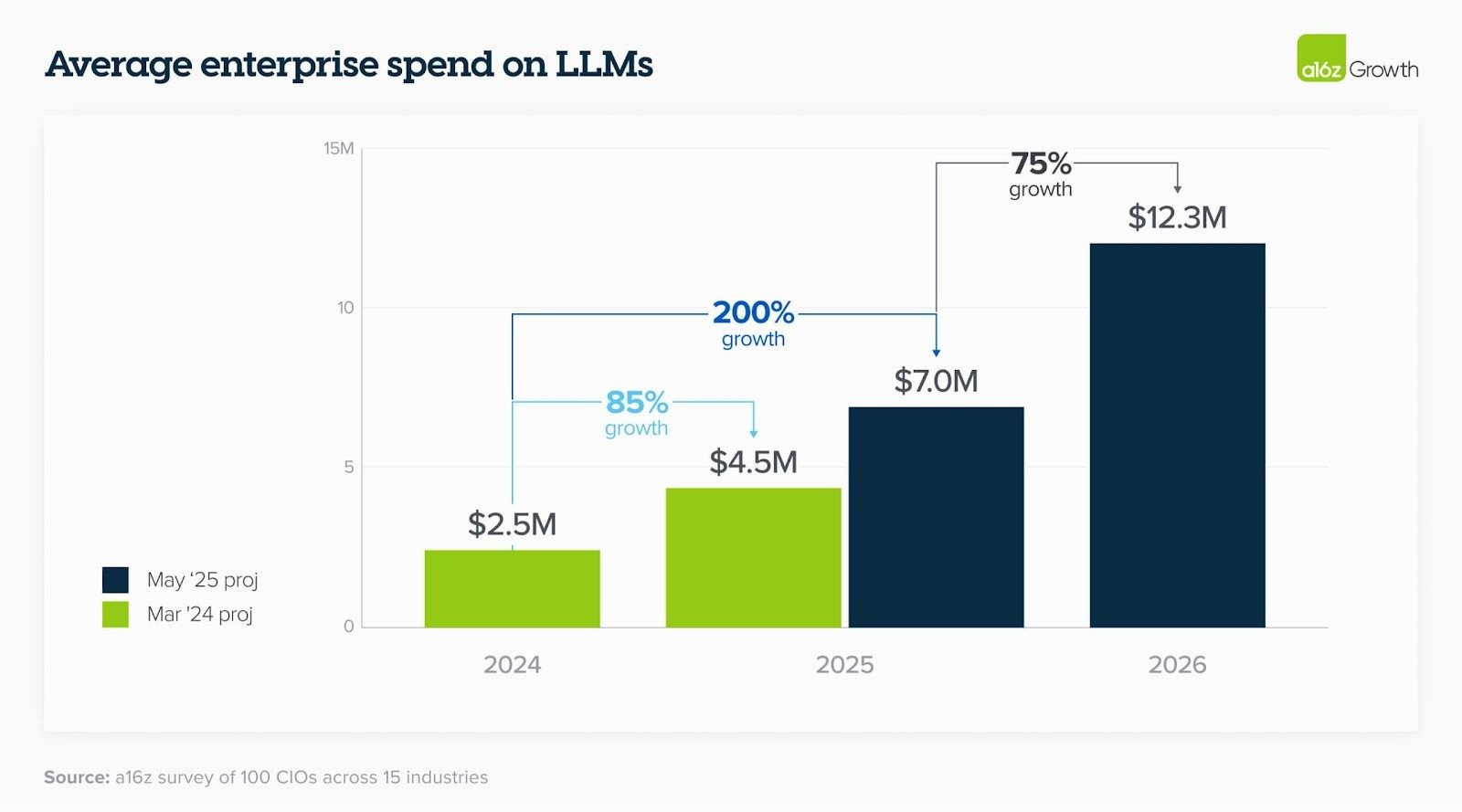

Remember when AI was a line item in your "innovation budget," a curious experiment for the R&D folks? Those days are gone forever. Andreessen Horowitz's latest report, "16 Changes to AI in the Enterprise," a survey of 100 CIOs across 15 industries, is a blaring siren: AI spending isn't just skyrocketing; it's shifting from speculative play to core, permanent budget lines.

If your enterprise still treats AI as a side project, you're not just behind; you're becoming irrelevant. The landscape has been remade in just a year. It's time for a brutal reality check.

I. The AI Gold Rush: Budgets Explode, Driven by Real Use Cases (and Agents)

The numbers from A16Z are staggering. Enterprise leaders expect an average 75% growth in AI spend over the next year, and this isn't wishful thinking; it's driven by the discovery and implementation of new, valuable use cases. Internal applications were just the start; customer-facing GenAI is where the larger spending is now headed. This echoes what PwC found: a whopping 88% of organizations are increasing AI budgets due to agents, with many seeing 10%+ bumps.

This spending is graduating from innovation slush funds to permanent budget lines. Last year, innovation budgets covered ~25% of GenAI spending; now, it's a mere 7%. Reallocated central IT budgets (up to 39%) and business unit budgets (up to 27%) are now footing the bill. This isn't experimentation anymore; it's operationalization. It reflects a profound shift: organizations are no longer asking "if" AI, or even "if" agents, but are now deep in the "how," building infrastructure for an AI-driven future they see as inevitable.


II. The Sophisticated Enterprise: Multi-Model Strategies and a Nuanced Approach

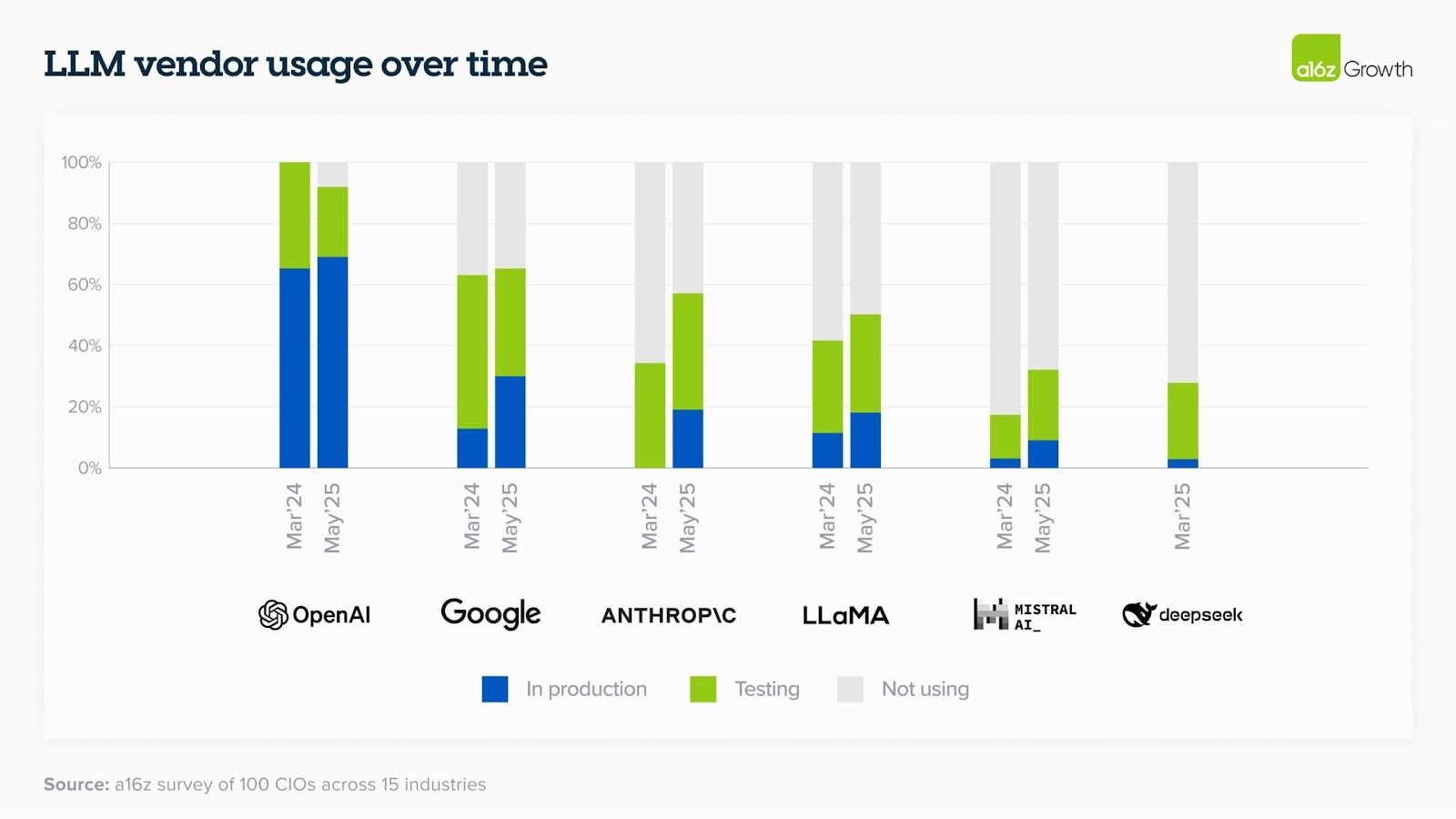

Forget the idea of enterprises locking into a single AI provider. A16Z reveals a surprising level of sophistication:

- Consolidation Around Leaders, But With Nuance: While OpenAI leads in market share, Google and Anthropic have made strides. Interestingly, OpenAI users deploy a wider range of their models (including non-frontier ones) in production (67%) compared to Google (41%) or Anthropic (27%), who see more concentration at their highest-end offerings.

- Multi-Model Is the Norm: Businesses are behaving like savvy consumers, using different models for different tasks. You might use Anthropic's Claude for coding, OpenAI's GPT-4.5 for writing and Google's Gemini for strategy. A stunning 37% of enterprises are already using five or more models. Model differentiation is key.

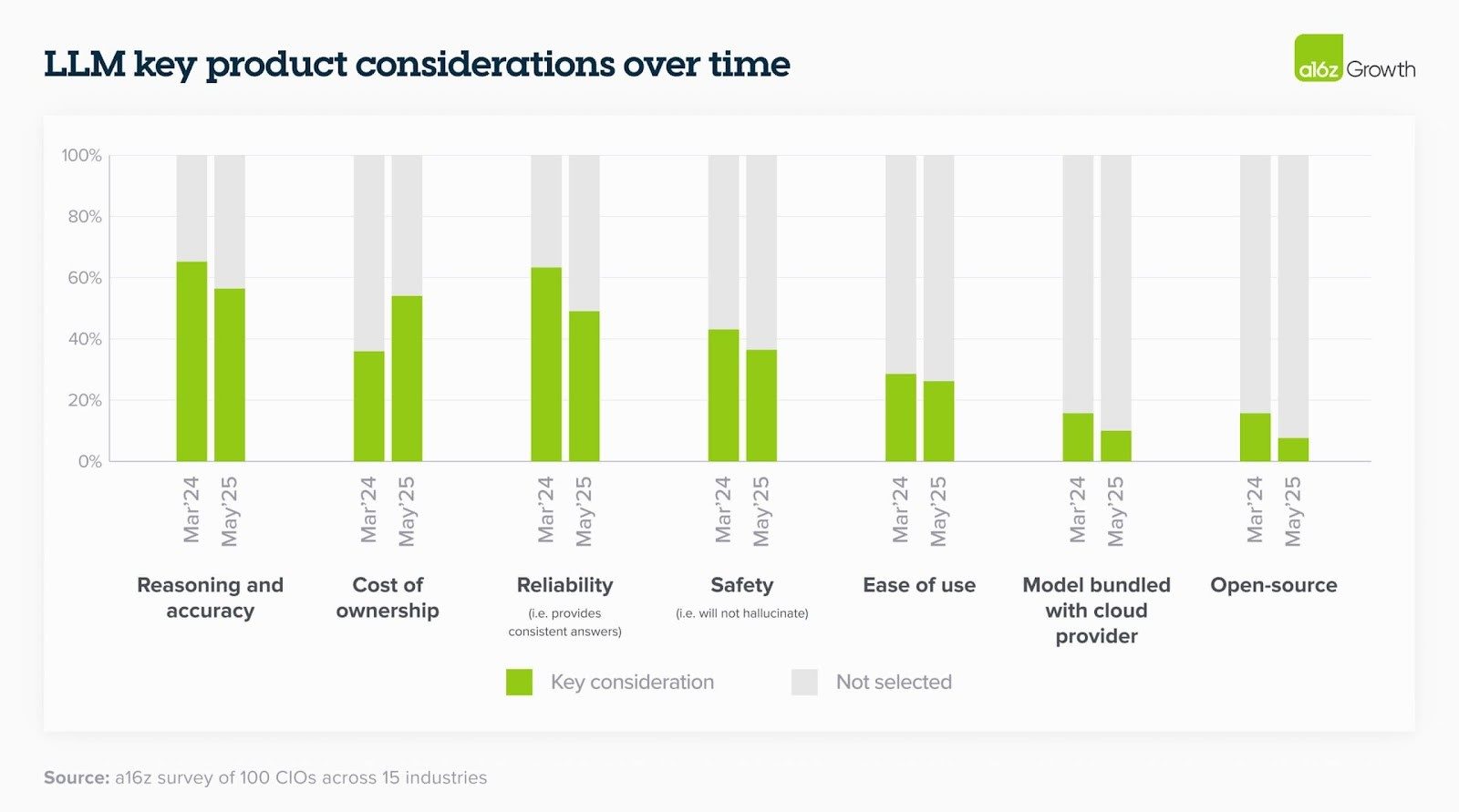

- Google's Enterprise Traction & The Cost Factor: Google is gaining share in larger enterprises, leveraging not just trust but a strong performance-to-cost ratio. As AI use becomes ubiquitous, cost of ownership is re-emerging as a major buying consideration, moving beyond the "innovation stage" where performance was paramount. Closed-source models, with falling prices, are often preferred over open-source for their ecosystem benefits, though large enterprises do adopt open-source (Llama, Mistral) for control and customization.
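The multi-model pattern described above can be sketched as a simple routing table. This is a minimal illustration under stated assumptions, not any vendor's API: the task labels, the `ROUTES` mapping, the `DEFAULT_MODEL` fallback and the `pick_model` helper are all hypothetical, and the model labels simply echo the examples mentioned above.

```python
# Hypothetical task-to-model routing table. The values are illustrative
# labels, not real API model identifiers.
ROUTES = {
    "coding": "claude",
    "writing": "gpt",
    "strategy": "gemini",
}
DEFAULT_MODEL = "gpt"  # assumed fallback for tasks with no dedicated route


def pick_model(task: str) -> str:
    """Return the model label configured for a task, or the fallback."""
    return ROUTES.get(task.strip().lower(), DEFAULT_MODEL)


if __name__ == "__main__":
    for task in ("coding", "writing", "strategy", "translation"):
        print(f"{task} -> {pick_model(task)}")
```

Keeping the mapping in one place means a team can re-point a task at a different model as prices or performance change, which is the cost-of-ownership lever the bullets above describe.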

III. The Shifting Sands of AI Development: Fine-Tuning Fades, Reasoning Rises

The way enterprises interact with and build upon AI models is also evolving rapidly:

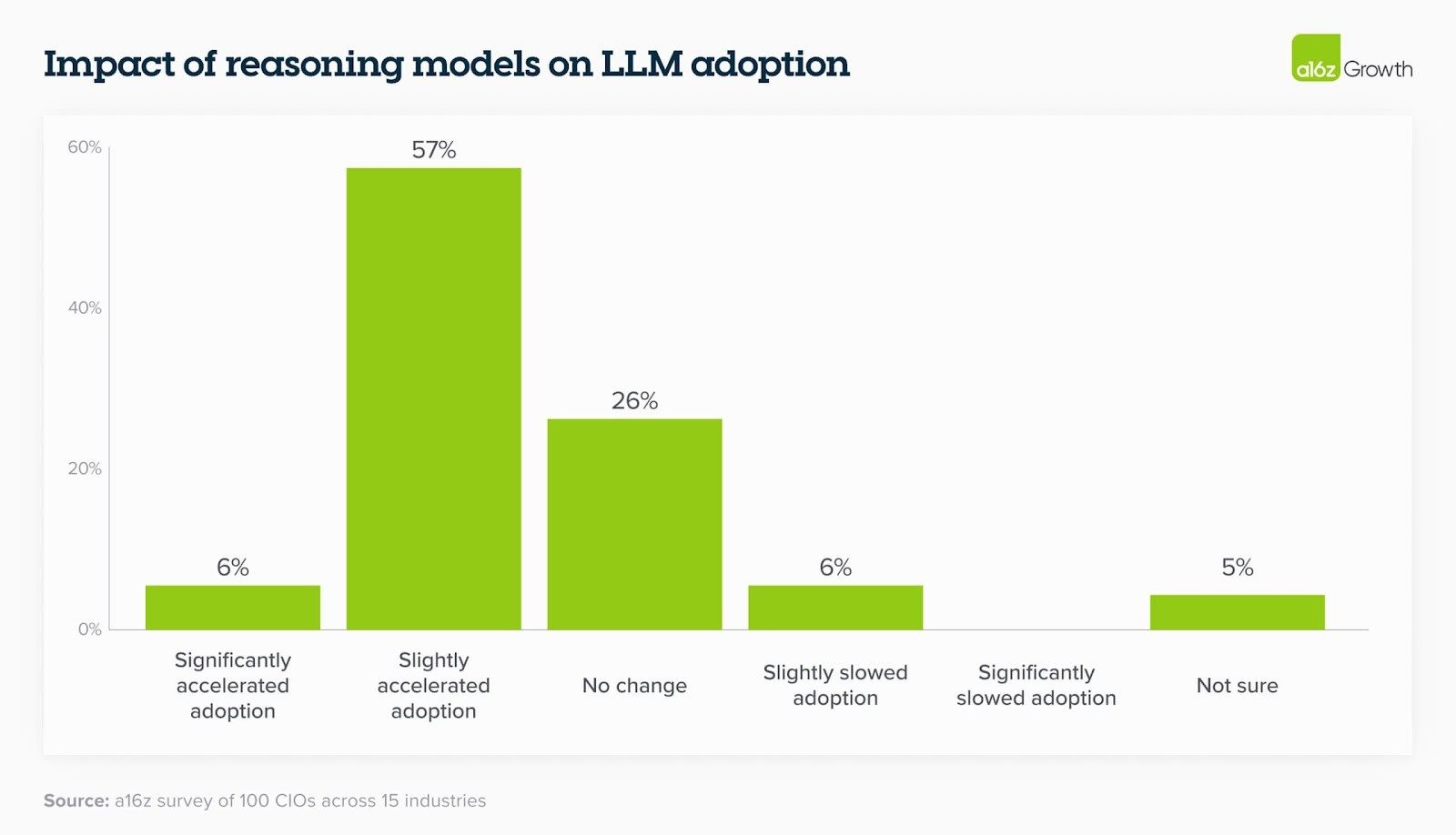

- Excitement for Reasoning Models (and Agents): While still early in testing, enterprises are highly enthusiastic about reasoning models (like OpenAI's o3) and the agentic capabilities they unlock. These aren't just about doing old tasks better; they "allow us to solve newer, more complex use cases," opening entirely new avenues for value creation. Each model improvement is more than incremental; it makes previously impossible applications feasible.

- Fine-Tuning's Decline: Last year, fine-tuning models with proprietary data was a hot topic. Now, as model capabilities (especially context windows) improve, many find it less necessary. Simply "dumping data into a long context" often yields almost equivalent results with off-the-shelf models, a significant shift with financial implications for the fine-tuning startup ecosystem.

- Hosting Preferences Evolve: Trust is building. Last year, enterprises preferred accessing models via established cloud providers (AWS, Azure, GCP). Now, they're increasingly comfortable hosting directly with model providers like OpenAI and Anthropic to get "direct access to the latest model with the best performance as soon as it's available." The demand for state-of-the-art capabilities is overriding previous caution. This is even leading to internal revolts, like Amazon employees reportedly demanding access to best-in-class tools like Cursor over internal offerings.
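The "dumping data into a long context" approach from the fine-tuning bullet above can be sketched roughly as follows. This is an illustration only: `estimate_tokens` and `build_prompt` are hypothetical helpers, the whitespace word count is a crude stand-in for a real tokenizer, and the budget value would depend on the model actually used.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words (an assumption)."""
    return len(text.split())


def build_prompt(question: str, documents: list[str], budget: int) -> str:
    """Pack whole documents into the prompt until the token budget is used up."""
    used = estimate_tokens(question)
    included = []
    for doc in documents:
        cost = estimate_tokens(doc)
        if used + cost > budget:
            break  # stop at the first document that does not fit
        included.append(doc)
        used += cost
    context = "\n\n".join(included)
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    docs = ["Policy A covers refunds.", "Policy B covers shipping."]
    print(build_prompt("What covers refunds?", docs, budget=50))
```

The larger the context window, the more proprietary material fits in the prompt without any training step, which is why this route can compete with fine-tuning for some use cases.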

IV. The Moat Paradox: Switching Costs Rise as Agentic Workflows Deepen

As AI moves from simple, one-shot tasks to complex, multi-step agentic workflows, a new form of lock-in emerges: switching costs are getting higher.

While enterprises initially designed for model interchangeability, the intricate dependencies in agentic systems make swapping out a core model a far more disruptive proposition. Every agent platform is now competing to capture a larger share of these interconnected use cases, knowing that interoperability, while desired, will be hard-won.
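One way to picture the tension between interchangeability and switching costs is a thin adapter interface. The sketch below is hypothetical throughout: `TextModel`, `StubModelA`, `StubModelB` and `run_workflow` are invented for illustration and stand in for real provider clients, which are not called here.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface a workflow depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class StubModelA:
    def complete(self, prompt: str) -> str:
        return f"[A] {prompt}"


class StubModelB:
    def complete(self, prompt: str) -> str:
        return f"[B] {prompt}"


def run_workflow(model: TextModel, task: str) -> list[str]:
    """Two-step workflow: produce a plan, then act on that plan."""
    plan = model.complete(f"plan: {task}")
    result = model.complete(f"execute: {plan}")  # depends on the plan's wording
    return [plan, result]


if __name__ == "__main__":
    print(run_workflow(StubModelA(), "reconcile invoices"))
    print(run_workflow(StubModelB(), "reconcile invoices"))
```

Swapping the stub is a one-line change, but every later step consumes the earlier step's output. In a real multi-step agent, that chaining is where prompts, guardrails and evaluations become tuned to one model's behavior, so the interface alone does not remove the switching cost.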

V. Build vs. Buy: A Bifurcating Landscape

Last year, many enterprises were building their own AI solutions due to a lack of mature vertical applications. That's shifting. A16Z sees a "market shift towards buying third-party applications" as the ecosystem of AI apps matures. Vertical and functional agents are the hottest startup trend.

However, this isn't a simple swing. A fork is likely:

- "Off-the-Shelf-ish" for Common Use Cases: Many everyday enterprise needs (e.g., CPG customer service) will be well-served by high-quality third-party agents requiring some customization and data integration.

- "Roll Your Own" for High-Value, Regulated Industries: Heavily regulated or high-value sectors (finance, healthcare) will likely continue to build adapted, proprietary agentic solutions, especially with the rise of "agents that build other agents" (e.g., from companies like Emergence). These firms will focus on building the internal infrastructure to provision agents dynamically.


VI. Pricing Puzzles and Ubiquitous Use Cases

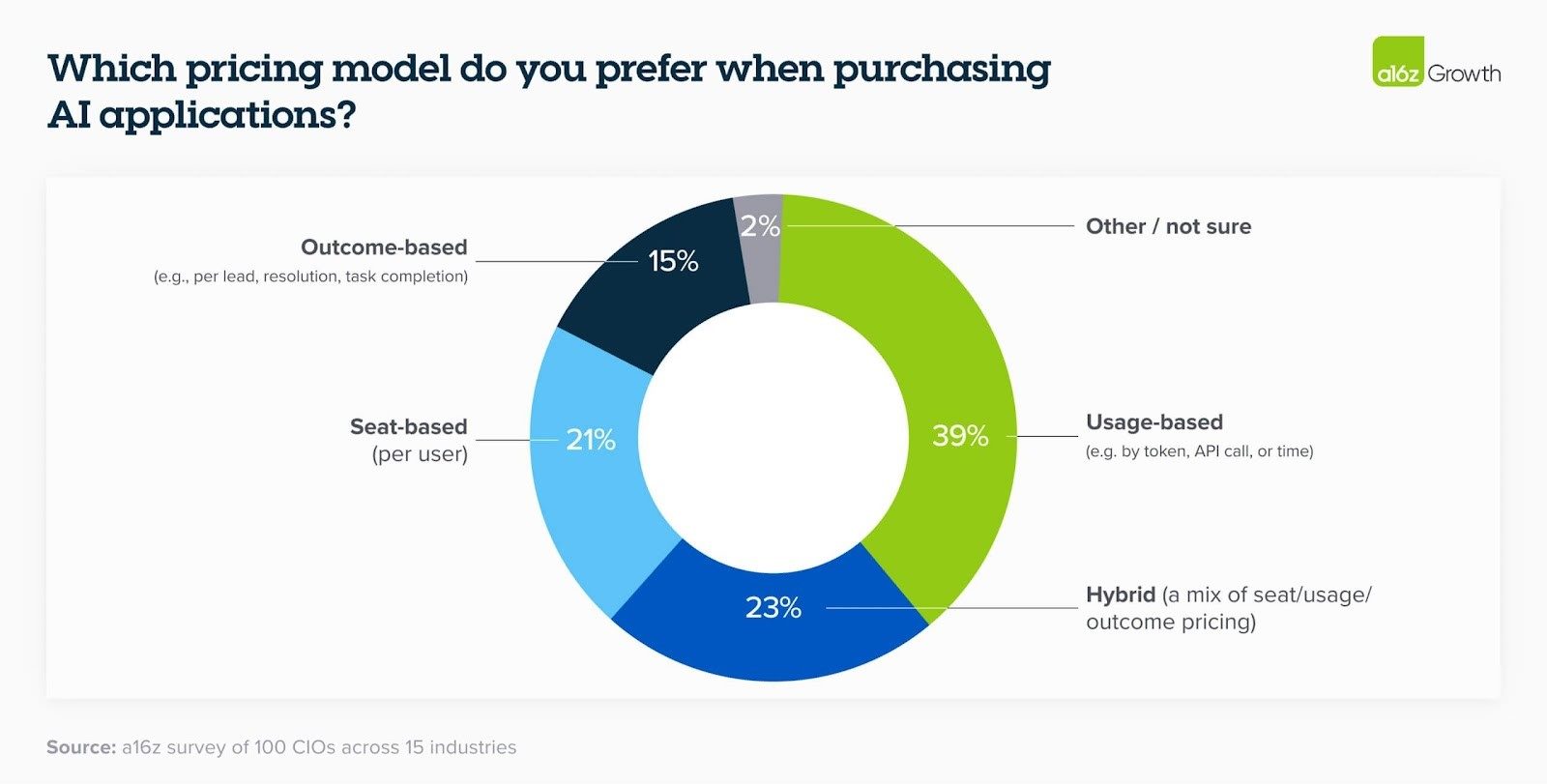

The old per-seat software model is struggling in the AI world. Outcome-based pricing is intriguing but fraught with challenges like unclear outcomes and unpredictable costs (only 15% of CIOs prefer it). Usage-based models (39% preference) seem to be a more comfortable interim solution.

Meanwhile, some AI use cases are becoming table stakes (the baseline capabilities that are now required to compete effectively). Software development has seen a "step change," jumping from <40% to >70% of enterprises having it as an in-production use case in one year, driven by high-quality apps, increased model capabilities and no-brainer ROI. Enterprise search, data analysis and data labeling also saw big gains.

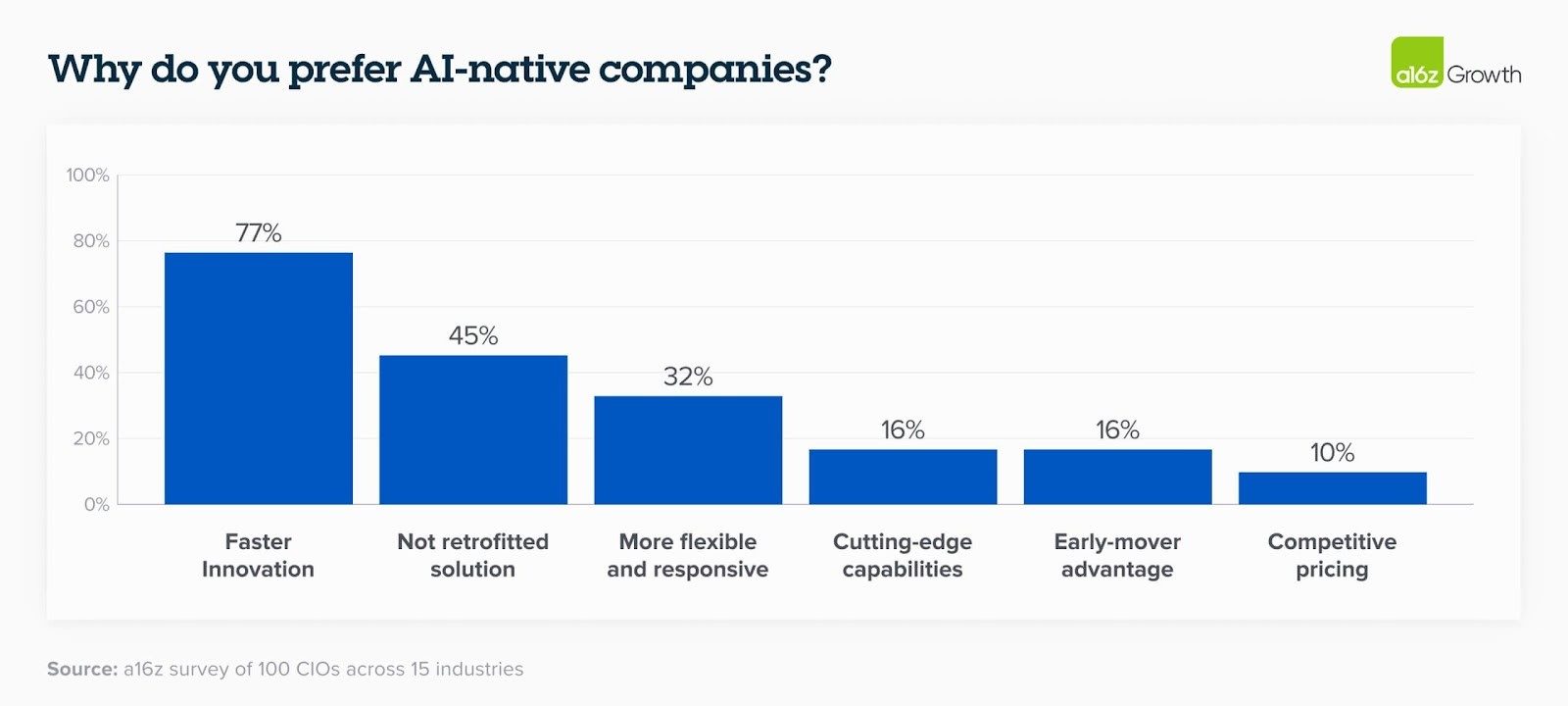

VII. The Incumbent's Dilemma: AI-Native Speed Challenges Established Trust

A year ago, incumbents (large tech providers, established enterprises) seemed to have a massive AI advantage: trust, distribution and capital. That advantage is now being challenged. A16Z's final trend: AI-native quality and speed are starting to outpace incumbents.

Enterprises increasingly prioritize access to the best, state-of-the-art models as fast as possible. In areas like software development, the difference between first-gen AI coding assistants and new agentic coding platforms (Cursor vs. GitHub Copilot) is stark. Enterprises favoring AI-native companies cite their "faster pace of innovation" as the overwhelming reason. The old moats are eroding.

Final Thought: Acceleration Is Not a Strategy, It's a Prerequisite

The overarching story from A16Z's 2025 enterprise AI report is one of relentless acceleration. Enterprises are adapting with surprising speed, mirroring consumer behavior in their multi-model adoption and demand for cutting-edge capabilities. This isn't just about adopting AI; it's about rewiring your organization to operate at AI speed. The shift to agents is not a distant future; it's ongoing and demands your immediate, strategic focus. If you're not already deeply engaged in understanding and implementing these changes, you're not just falling behind — you're risking being lapped by a future that has already arrived.

Frequently Asked Questions

That data remains incredibly valuable for Retrieval-Augmented Generation (RAG) by providing specific context to off-the-shelf models through long context windows, and for internal benchmarking and validation of AI outputs.

Beyond direct licensing/usage fees, consider compute costs, data preparation/integration efforts, internal talent/training required, security/compliance overhead and the potential cost of errors or inaccurate outputs from less mature models.

Engage directly with internal tech leads/AI teams for tailored briefings, participate in executive AI workshops, follow curated industry analyses (like A16Z's) and encourage pilot projects that demonstrate these nuanced differences in real business problems.

Empower small, autonomous, cross-functional teams with direct access to state-of-the-art AI tools and clear mandates to rapidly prototype and iterate on high-impact AI use cases, shielding them from traditional bureaucratic hurdles.
