The Gist

- Metadata evolution. New metadata fields like "Generated By," "Prompt," and "Series #" are crucial for managing AI-generated content.

- Guideline importance. Brands must establish robust guidelines to determine appropriate usage and disclosure of AI-generated content.

- Legal implications. AI-generated content introduces legal complexities, requiring increased legal team involvement in content approval.

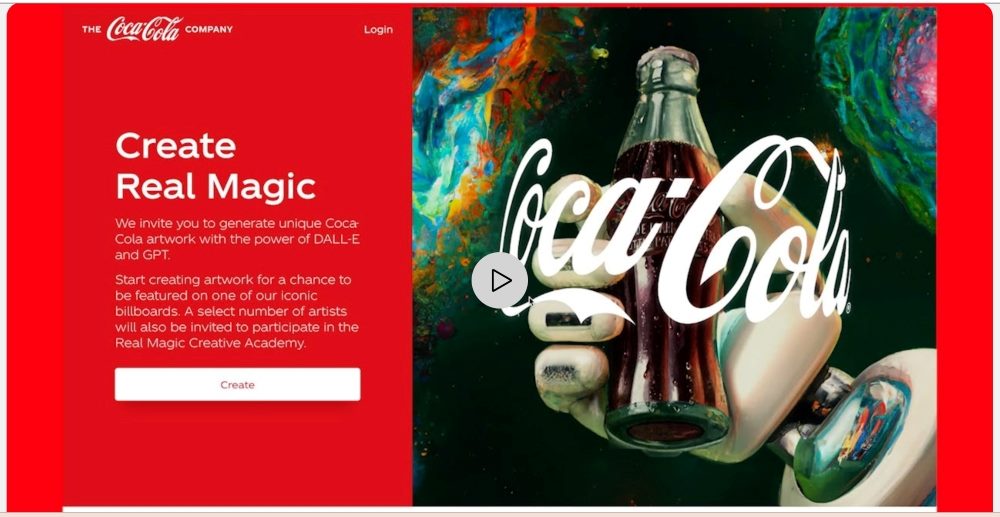

In March 2023, The Coca-Cola Company shook up a can of generative AI and let it explode. In its new marketing campaign, “Create Real Magic,” the company invited people to make art using OpenAI’s GPT-4 and DALL-E technologies along with Coca-Cola’s branded assets, like logos and the famous polar bear. The winning entries will appear on billboards in New York’s Times Square and London’s Piccadilly Circus. Topping it off, Coca-Cola debuted its own AI-aided “Masterpiece,” an ad in which animated museum pieces deliver a Coca-Cola to a boy who needs a sugar rush of inspiration to make art.

The campaign raises interesting questions. Is OpenAI’s technology the equivalent of a paintbrush, a mere tool, or does it deserve credit alongside the artists? When should or shouldn’t generative AI be used for marketing content? How closely can AI approximate real people without triggering legal repercussions?

Brands are using generative AI, if not for the novelty, then to reduce marketing costs and accelerate campaigns. Meanwhile, the decisions we make in digital asset management (DAM) — the practices we use to organize, protect, deploy and analyze content — will quietly shape the norms of AI in media and culture. I want to highlight three such decisions that brand leaders ought to address now.

New Media, New Metadata

When media and creative processes change, so does the metadata we use to describe them. Based on the Coca-Cola example (and more mundane cases), several new metadata fields will be crucial for AI-generated content.

1. Generated By. While ChatGPT and DALL-E are in the spotlight, there are dozens more generative AIs. Marketers will want to know which AI works best for a given use case, like capturing home goods in an AI-generated living room. They’ll also want to know which AIs are used most often and for what. “Generated By” helps answer those questions.

2. Prompt. Marketers will want to know what text prompt generated each AI asset. The prompt provides context about what the creator intended to make. Marketers will be keen to review the prompts that led to the best-performing assets as measured by views, downloads, social shares, conversions, etc.

3. Series #. If someone enters the same prompt more than once, an AI will produce unique assets each time. Thus, marketers need an identifier, the “Series #,” to distinguish between images that come from the exact same prompt. Series # may help teams determine how many times on average they ought to repeat a prompt to get optimal results.

4. Fact-Checked (Y/N). Fact-checking should be a standard process for many asset types, but it’s especially important when using generative AI. These models can “hallucinate,” to use the industry lingo, leading to assets that are misleading or false. You may remember from my recent article on ChatGPT that it invented product features on its own. AI can also plagiarize copyrighted content, unbeknownst to the creator, posing legal risks.

5. Contributors. In addition to documenting who wrote what prompt, we’ll need a “Contributors” field to identify people who modeled or manually created text, designs, photos, etc., later used by the AI. This information will be relevant to content credits, image rights and licensing.
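
As a rough illustration, the five fields above could be modeled as a single record in a DAM system. The Python sketch below is only one possible shape, not a reference to any particular DAM product: the class name `AIAssetMetadata`, the `asset_id` field, and both helper functions are hypothetical, and the sample assets are invented for the example.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class AIAssetMetadata:
    """Hypothetical DAM record carrying the five fields proposed above."""
    asset_id: str                 # assumed identifier, not one of the five fields
    generated_by: str             # "Generated By": which AI produced the asset
    prompt: str                   # "Prompt": the text that produced the asset
    series_number: int            # "Series #": nth output of the same prompt
    fact_checked: bool = False    # "Fact-Checked (Y/N)"
    contributors: List[str] = field(default_factory=list)  # "Contributors"


def attempts_per_prompt(assets: List[AIAssetMetadata]) -> Dict[Tuple[str, str], int]:
    """Highest Series # seen for each (generator, prompt) pair."""
    attempts: Dict[Tuple[str, str], int] = defaultdict(int)
    for asset in assets:
        key = (asset.generated_by, asset.prompt)
        attempts[key] = max(attempts[key], asset.series_number)
    return dict(attempts)


def needs_fact_check(assets: List[AIAssetMetadata]) -> List[str]:
    """IDs of assets that have not yet been marked as fact-checked."""
    return [asset.asset_id for asset in assets if not asset.fact_checked]


# Invented sample data: two outputs of the same prompt from the same generator.
library = [
    AIAssetMetadata("a1", "DALL-E", "polar bear in a living room", 1),
    AIAssetMetadata(
        "a2", "DALL-E", "polar bear in a living room", 2,
        fact_checked=True, contributors=["Staff photographer"],
    ),
]

print(attempts_per_prompt(library))
print(needs_fact_check(library))
```

Keeping Series # as a simple counter per prompt makes it easy to see how many attempts a prompt needed, while the Fact-Checked flag gives reviewers a quick way to list assets that still need a human look.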

While marketers will surely discover more fields are needed, these are a good start. They can help teams manage the next issue for generative AI and DAM: brand guidelines.

AI Brand Guidelines

When it emerged in January 2023 that CNET had used generative AI to create evergreen articles — with numerous errors and without disclosing its use — people were enraged. CNET violated the audience’s expectation that journalism is rigorously fact-checked and authored by an identifiable individual or team. If only they had stronger brand guidelines that accounted for the audience’s expectations.

To begin, brands need to decide if and when AI-generated content is appropriate for use. This will vary by industry. In real estate, for instance, agents already use AI to draft property listings. If the text is compelling and accurate, there’s no downside. Conversely, organizations that publish authored content need to be careful. As CNET learned, the only thing worse than using AI in journalism might be not disclosing it.

If a brand does use generative AI, it then must decide if and how to identify AI-generated content as such to the audience. On a website where authors aren’t credited anyway, no one will care whether AI generated the copy or images. In cases where human authors get a nod, AI should too. A director who uses ChatGPT to write a script and Runway Research’s Gen-2 to generate a video from that script should acknowledge the AIs.

In cases where an AI acts like a customer or fan of a brand, disclosure is more important — after all, TV advertisers disclose when they use real customers versus actors. If a brand advocate is really AI, the audience deserves to know.

New Role for Legal in Messy AI Issues

The messiness of AI brand guidelines means that legal teams will need to be more involved in content approval processes. Several hypothetical examples illustrate why.

Imagine a celebrity who licenses their likeness and voice to AI systems or specific brands. Depending on how those contracts are written, there may be limits on how that AI model can be used. There’s a difference between putting AI William Shatner in a generic hotel booking ad and having Shatner gush about a specific hotel that he’s never visited. Likewise, licensors may stipulate that their likeness can’t be used by political advertisers or hate groups that would have them advocate views contrary to their own beliefs.

Another area for legal counsel may be advertisements in regulated industries, like healthcare. Today, medical brands must get signed releases from patients to use them in marketing. What if they created a strikingly similar AI version of that patient and claimed their testimony is based on real results? Or what if a brand generates an AI child to avoid asking the parents for permission?

AI-generated content will test laws and precedents set in the era before deepfakes. As boundaries get tested, lawyers will help determine which AI images can be used.

In Case We Forget: AI Can't Experience What It Creates

Enthusiasm about generative AI focuses overwhelmingly on ease, efficiency and cost rather than quality. Yet people now accuse creators of using AI, as an insult, when their words and ideas seem too dry, tone-deaf and simplistic to be human-made.

Heed that warning. Sure, DAM can help brands bring order, thoughtfulness and care to how and when they use AI. But DAM cannot guarantee that AIs produce relatable, emotionally meaningful content. If Coca-Cola captured “Real Magic,” it is because the creators and audience members shared in something emotionally meaningful and memorable. What AI makes it cannot experience.
